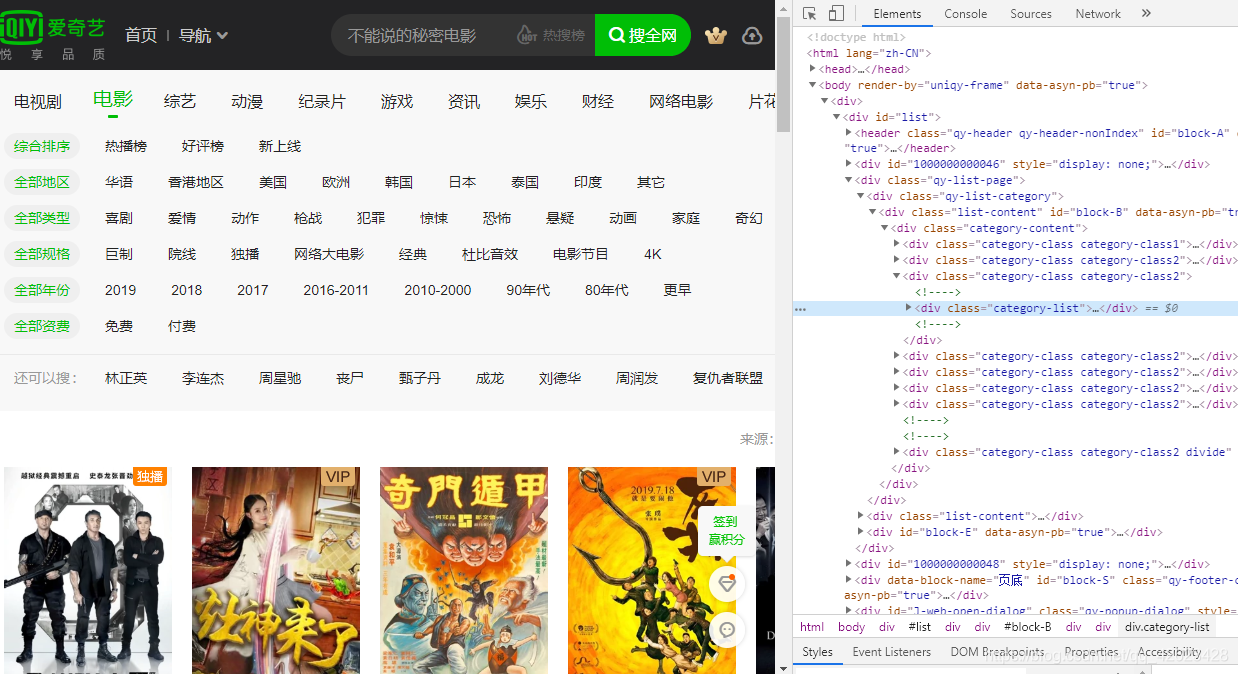

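Taking Chrome as an example:

First, open the developer tools.

Scroll the page down a little and some new requests will load. In the Network tab it's easy to spot the XHR request we're looking for.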

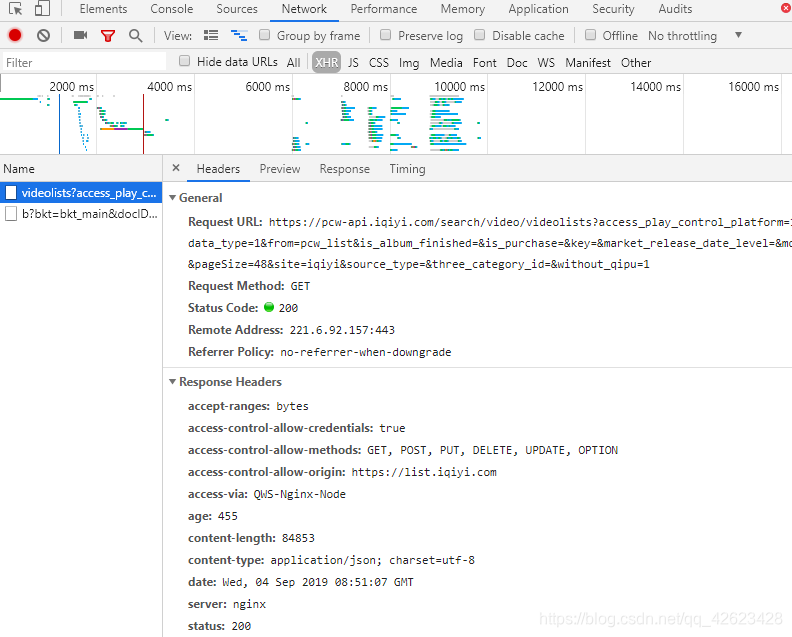

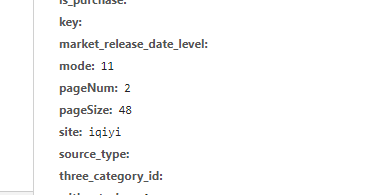

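This Request URL is the one we need. Below it there is also a pageNum parameter, which says which page of data is returned, so our requests have to include it as well.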
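The long query string is easier to read and modify when it is built from a dict. Below is a minimal sketch using the standard library's `urllib.parse.urlencode`; the parameter values are copied from the Request URL above, while the helper name `build_list_url` is hypothetical, my own naming for illustration.

```python
from urllib.parse import urlencode

# Endpoint and query parameters copied from the Request URL captured in DevTools
BASE = 'https://pcw-api.iqiyi.com/search/video/videolists'
PARAMS = {
    'access_play_control_platform': 14,
    'channel_id': 1,
    'data_type': 1,
    'from': 'pcw_list',
    'is_album_finished': '',
    'is_purchase': '',
    'key': '',
    'market_release_date_level': '',
    'mode': 11,
    'pageSize': 48,
    'site': 'iqiyi',
    'source_type': '',
    'three_category_id': '',
    'without_qipu': 1,
}


def build_list_url(page):
    """Hypothetical helper: return the list URL for one page (pageNum starts at 1)."""
    params = dict(PARAMS, pageNum=page)  # copy the fixed params and add the page number
    return BASE + '?' + urlencode(params)


print(build_list_url(1))
```

Only `pageNum` changes between requests, so the loop over pages just calls the helper with a different number each time.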

The iQiyi movie library has 19 pages in total, so we can request the data page by page:

```python
import requests

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36',
    'Origin': 'https://list.iqiyi.com'
}

url = 'https://pcw-api.iqiyi.com/search/video/videolists?access_play_control_platform=14&channel_id=1&data_type=1&from=pcw_list&is_album_finished=&is_purchase=&key=&market_release_date_level=&mode=11&pageNum={}&pageSize=48&site=iqiyi&source_type=&three_category_id=&without_qipu=1'

# pageNum goes from 1 to 19
for page in range(1, 20):
    response = requests.get(url.format(page), headers=headers, timeout=10).content.decode('utf-8')
```

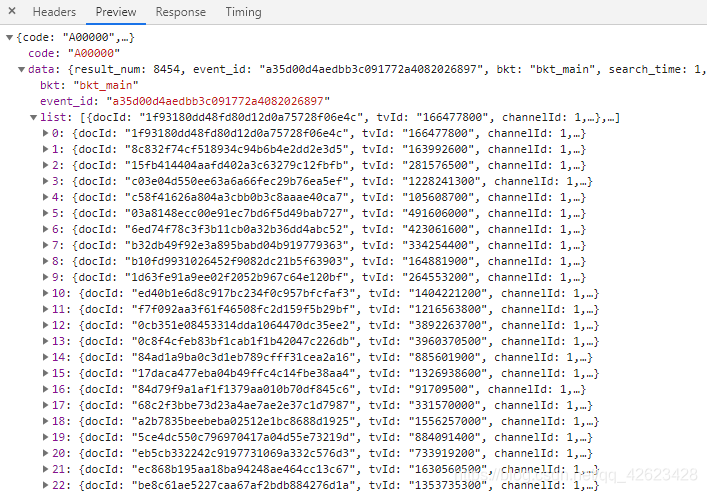

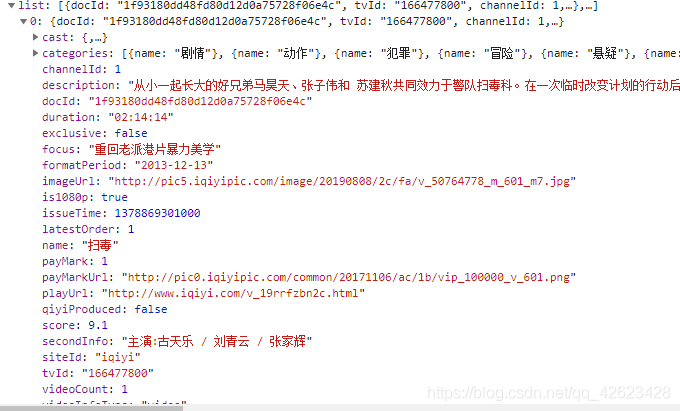

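In the Preview tab we can see that the response is a JSON payload:

The list inside it holds the details of every movie on that page:

So it's fairly easy to pull out the information we want: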

```python
import json

json_dict = json.loads(response)
# data -> list holds the movies on the current page
lists = json_dict['data']['list']

for film in lists:
    name = film['name']
    print(name)
    imgUrl = film['imageUrl']
    url = film['playUrl']
    score = str(film['score'])
    player = film['secondInfo']
    # Some movies have no description, so fall back to an empty string
    text = film.get('description', '')
```
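Not every record is guaranteed to carry every field (that is why `description` gets a fallback). Here is a minimal sketch of the same extraction as a reusable function built on `dict.get` with defaults. The function name `parse_film` and the sample record are made up for illustration; only the key names come from the code above.

```python
def parse_film(film):
    """Hypothetical helper: pull the fields used above out of one entry of data['list']."""
    return {
        'name': film.get('name', ''),
        'imgUrl': film.get('imageUrl', ''),
        'url': film.get('playUrl', ''),
        # score may be a number in the JSON; store it as a string like the original code
        'score': str(film.get('score', '')),
        'player': film.get('secondInfo', ''),
        # description is optional, so default to an empty string
        'text': film.get('description', ''),
    }


# Made-up sample record, not real API output
sample = {'name': 'Example Film', 'imageUrl': 'https://example.com/a.jpg',
          'playUrl': 'https://example.com/play', 'score': 8.5, 'secondInfo': 'Starring: A, B'}
print(parse_film(sample))
```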

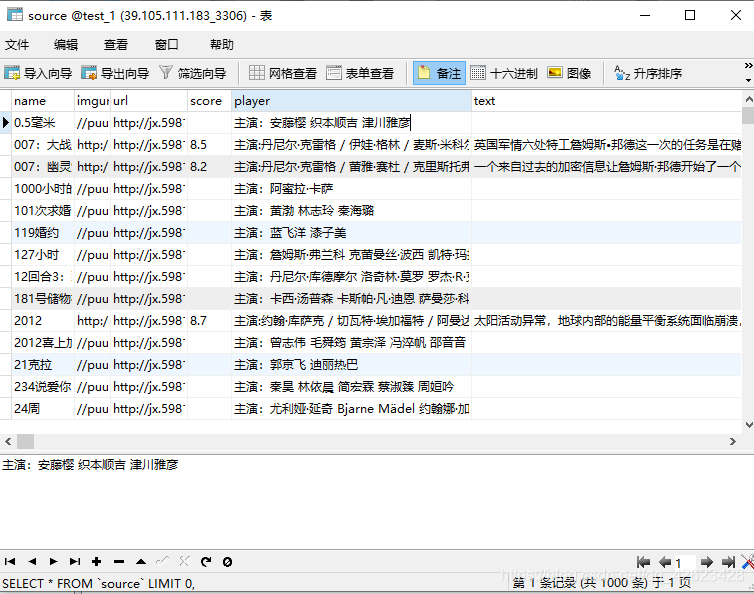

Once the fields we need are extracted, we might as well save them to a database, which makes them easier to work with:

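```python
import pymysql

connect = pymysql.connect(host='39.105.111.183', port=3306, user='test_1', password='***', database='test_1', charset='utf8')
# I saved the data to a database on my server. For a local database use host='localhost';
# the default port is 3306, so change it if yours differs. For other details, look it up yourself.
cursor = connect.cursor()
# %s placeholders let pymysql escape the values, so titles containing quotes don't break the SQL
sql = "insert into source(name,imgUrl,url,score,player,text) values(%s,%s,%s,%s,%s,%s)"


def check(name, cursor):  # check whether this movie has already been added
    cursor.execute("select count(1) from source where name = %s", (name,))
    return cursor.fetchone()[0]


# Inside the `for film in lists:` loop, after extracting the fields:
for film in lists:
    ...
    if check(name, cursor) > 0:
        continue
    cursor.execute(sql, (name, imgUrl, url, score, player, text))
    connect.commit()

# Remember to close everything at the end
cursor.close()
connect.close()
```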
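Formatting values straight into the SQL string with `'{}'.format(...)` breaks as soon as a title contains a single quote, and it is open to SQL injection. Below is a minimal sketch of the same insert-if-not-exists logic using parameter placeholders. To keep it self-contained it swaps in Python's built-in `sqlite3` with an in-memory database instead of MySQL; with pymysql the logic is the same, only the placeholder is `%s` instead of `?`. The function `save_film` and the sample row are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(':memory:')  # stand-in for the MySQL connection
cur = conn.cursor()
cur.execute('create table source(name text, imgUrl text, url text, score text, player text, text text)')


def check(name, cursor):
    # The driver quotes the value, so names containing ' are safe
    cursor.execute('select count(1) from source where name = ?', (name,))
    return cursor.fetchone()[0]


def save_film(film, cursor, connection):
    """Hypothetical helper: insert one row unless the name already exists; True if inserted."""
    if check(film[0], cursor) > 0:
        return False
    cursor.execute('insert into source(name,imgUrl,url,score,player,text) values(?,?,?,?,?,?)', film)
    connection.commit()
    return True


# Made-up row whose title contains a single quote
row = ("Bob's Movie", 'img', 'url', '7.0', 'Someone', '')
print(save_film(row, cur, conn))  # True: first insert
print(save_film(row, cur, conn))  # False: duplicate name is skipped
```

An alternative design is a UNIQUE index on `name` so the database itself rejects duplicates (MySQL's `INSERT IGNORE` then skips them silently); like `check`, that treats different films sharing a title as duplicates.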

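I also found a video-parsing site that offers free playback of parsed videos. The format is:

`url = 'http://jx.598110.com/?url='+film['playUrl']`

The picture isn't especially sharp, and on mobile it shows lots of junk ads (the desktop version is fairly clean). It's only here for reference, though: please support official releases and don't spread pirated content.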

Full code:

```python
import json

import pymysql
import requests

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36',
    'Origin': 'https://list.iqiyi.com'
}


def check(name, cursor):
    # Number of rows already using this movie name (0 means it's new)
    cursor.execute("select count(1) from source where name = %s", (name,))
    return cursor.fetchone()[0]


def main():
    connect = pymysql.connect(host='39.105.111.183', port=3306, user='test_1', password='***', database='test_1', charset='utf8')
    cursor = connect.cursor()
    # %s placeholders let pymysql escape the values
    sql = "insert into source(name,imgUrl,url,score,player,text) values(%s,%s,%s,%s,%s,%s)"
    api_url = 'https://pcw-api.iqiyi.com/search/video/videolists?access_play_control_platform=14&channel_id=1&data_type=1&from=pcw_list&is_album_finished=&is_purchase=&key=&market_release_date_level=&mode=11&pageNum={}&pageSize=48&site=iqiyi&source_type=&three_category_id=&without_qipu=1'
    for page in range(1, 20):
        response = requests.get(api_url.format(page), headers=headers, timeout=10).content.decode('utf-8')
        json_dict = json.loads(response)
        print(api_url.format(page))
        lists = json_dict['data']['list']
        for film in lists:
            name = film['name']
            print(name)
            imgUrl = film['imageUrl']
            # Store the link via the parsing site mentioned above
            url = 'http://jx.598110.com/?url=' + film['playUrl']
            score = str(film['score'])
            player = film['secondInfo']
            text = film.get('description', '')
            if check(name, cursor) > 0:
                continue
            cursor.execute(sql, (name, imgUrl, url, score, player, text))
            connect.commit()
    cursor.close()
    connect.close()


if __name__ == "__main__":
    main()
```

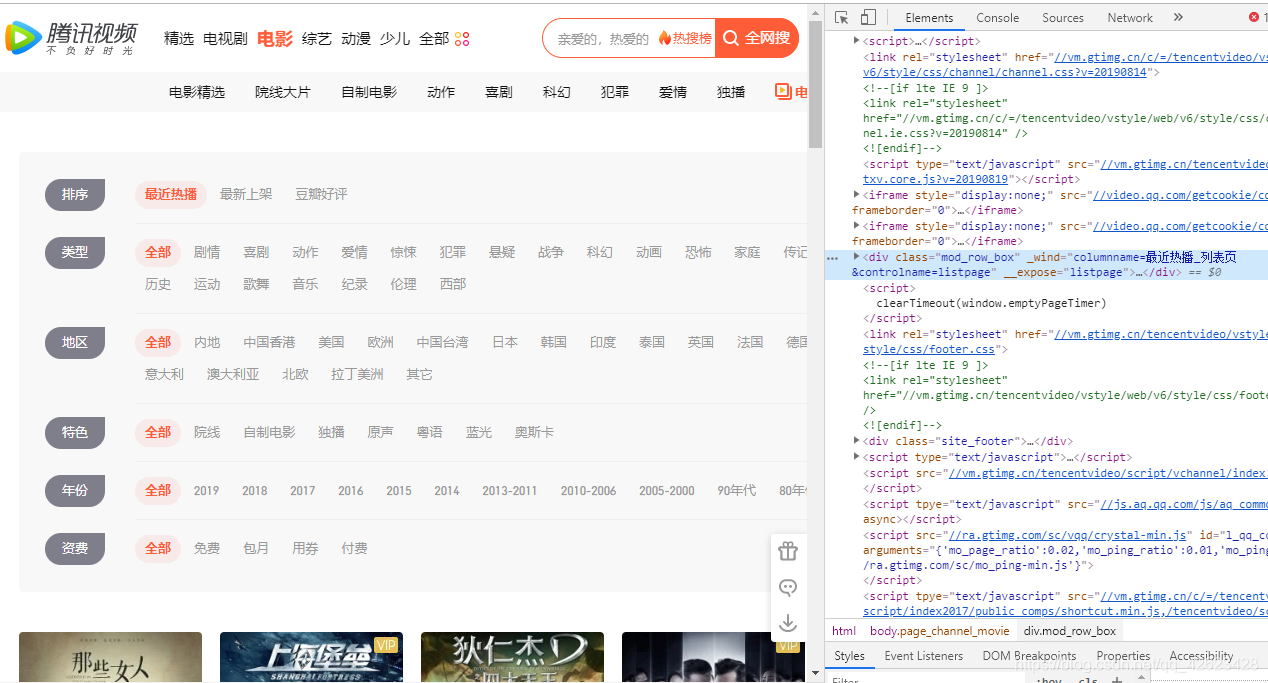

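With iQiyi done, next up is Tencent Video.

The overall steps are the same as before. The only slightly annoying part is that the response isn't JSON, so I extracted the content directly from the page HTML: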

```python
import requests
from lxml import etree

url = 'https://v.qq.com/x/bu/pagesheet/list?_all=1&append=1&channel=movie&listpage=2&offset={}&pagesize=30&sort=18'
# Each page holds 30 movies, so the offset is page * 30
response = requests.get(url.format(page * 30), headers=headers, timeout=10).content.decode('utf-8')
res = etree.HTML(response)
# Each movie on the page sits in a <div class="list_item">
lists = res.xpath('//div[@class="list_item"]')

for film in lists:
    name = film.xpath('div/a[@class="figure_title figure_title_two_row bold"]')[0].text
    url = 'http://jx.598110.com/?url=' + film.xpath('div/a[@class="figure_title figure_title_two_row bold"]/@href')[0]
    player = film.xpath('div/div[@class="figure_desc"]')[0].text
    imgUrl = film.xpath('a/img/@src')[0]
    # Score and description aren't extracted from this page
    score = ''
    text = ''
```
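The Tencent list uses offset-based paging. Assuming `offset` is the index of the first movie returned and `pagesize=30`, page n (counting from 0) starts at n * 30. The small sketch below, with a hypothetical helper `page_offsets`, shows why the page counter should start at 0: a loop that starts at 1 begins at offset 30 and skips the first 30 movies.

```python
def page_offsets(pages, pagesize=30):
    """Hypothetical helper: offsets for `pages` consecutive pages, starting from the first item."""
    return [page * pagesize for page in range(pages)]


print(page_offsets(3))  # [0, 30, 60]
# A loop over range(1, 4) would instead start at 30, skipping items 0-29
print([page * 30 for page in range(1, 4)])  # [30, 60, 90]
```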

The rest is pretty much the same as the iQiyi version.

Full code:

```python
import pymysql
import requests
from lxml import etree

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.100 Safari/537.36',
    'cookie': 'pgv_pvi=5126339584; RK=kbJtlWx86Y; ptcz=dbf5ee71b810795fbdad167358b0615fb3eec7407c0b49fa3403b8b6d57dc562; pgv_pvid=2197259500; eas_sid=w1h596W3267237U6a2R1s7f3l9; uin_cookie=o2654177652; ied_qq=o2654177652; o_cookie=2654177652; pac_uid=1_2654177652; tvfe_boss_uuid=9a632281936182c2; ts_uid=8253800134; bucket_id=9231004; video_guid=81e2ae16f9492d94; video_platform=2; pgv_info=ssid=s8407541593; ts_refer=www.baidu.com/link; ptag=www_baidu_com; ts_last=v.qq.com/channel/movie; ad_play_index=56; qv_als=itk7ONQYBoSErLnMA11567319761AJe5Bw=='
}


def check(name, cursor):
    # Number of rows already using this movie name (0 means it's new)
    cursor.execute("select count(1) from source where name = %s", (name,))
    return cursor.fetchone()[0]


def main():
    connect = pymysql.connect(host='39.105.111.183', port=3306, user='test_1', password='***', database='test_1', charset='utf8')
    cursor = connect.cursor()
    sql = "insert into source(name,imgUrl,url,score,player,text) values(%s,%s,%s,%s,%s,%s)"
    list_url = 'https://v.qq.com/x/bu/pagesheet/list?_all=1&append=1&channel=movie&listpage=2&offset={}&pagesize=30&sort=18'
    # By the way, Tencent Video's film library is much bigger than iQiyi's. Deep pockets, I guess
    # Start at page 0 so that offset 0 (the first page) is included
    for page in range(0, 167):
        response = requests.get(list_url.format(page * 30), headers=headers, timeout=10).content.decode('utf-8')
        res = etree.HTML(response)
        lists = res.xpath('//div[@class="list_item"]')
        for film in lists:
            try:
                name = film.xpath('div/a[@class="figure_title figure_title_two_row bold"]')[0].text
                url = 'http://jx.598110.com/?url=' + film.xpath('div/a[@class="figure_title figure_title_two_row bold"]/@href')[0]
                player = film.xpath('div/div[@class="figure_desc"]')[0].text
                imgUrl = film.xpath('a/img/@src')[0]
            except IndexError:
                # Items with a different layout lack these elements; skip them
                continue
            score = ''
            text = ''
            print(name)
            if check(name, cursor) > 0:
                continue
            cursor.execute(sql, (name, imgUrl, url, score, player, text))
            connect.commit()
    cursor.close()
    connect.close()


if __name__ == "__main__":
    main()
```

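This collects most of the movie information. Some older movies weren't captured because their page layout differs from the rest; everything that was scraped is saved in the database.

Saving these URLs is enough. Downloading wouldn't be hard either, but I'll skip it: my server couldn't hold that many movies, and there's no point downloading so many I'm never going to watch.

Next, I wrote a JS file and left it running in the background on the server, so it works as an API the front end can query. It's thrown together, of course, so it isn't very pretty...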

```javascript
var mysql = require('mysql');
var express = require('express');
const bodyParser = require('body-parser');

var app = express();
app.use(bodyParser.json()); // parse JSON request bodies
app.use(bodyParser.urlencoded({ extended: false })); // parse form-encoded POST data

var connection = mysql.createConnection({
    host: '39.105.111.183',
    user: 'test_1',
    password: '***',
    database: 'test_1',
    port: 3306
});
connection.connect();

// Escape text before inserting it into HTML so stored values can't inject markup
function escapeHtml(s) {
    return String(s == null ? '' : s)
        .replace(/&/g, '&amp;')
        .replace(/</g, '&lt;')
        .replace(/>/g, '&gt;')
        .replace(/"/g, '&quot;');
}

app.post('/login', function (req, res) {
    var name = req.body.name || '';
    // The ? placeholder lets the driver escape the search term
    connection.query('SELECT * from source where name like ?', ['%' + name + '%'], function (error, rows) {
        if (error) {
            // Report the failure instead of throwing, which would crash the server
            console.error(error);
            res.status(500).send('Query failed');
            return;
        }
        if (rows.length === 0) {
            res.send('No such movie');
            return;
        }
        var a = '<html lang="en" class="no-js">\n' +
            '<head>\n' +
            '<meta charset="UTF-8" />\n' +
            '<meta http-equiv="X-UA-Compatible" content="IE=edge"> \n' +
            '<meta name="viewport" content="width=device-width, initial-scale=1"> \n' +
            '<title>Film</title>\n' +
            '<link rel="stylesheet" type="text/css" href="css/normalize.css" />\n' +
            '<link rel="stylesheet" type="text/css" href="css/demo.css" />\n' +
            '<!--required styles-->\n' +
            '<link rel="stylesheet" type="text/css" href="css/component.css" />\n' +
            '<!--[if IE]>\n' +
            '<script src="js/html5.js"></script>\n' +
            '<![endif]-->\n' +
            '</head>\n' +
            '<body>\n' +
            '\t\t<div class="">\n' +
            '\t\t\t<div class="">\n' +
            '\t\t\t\t<div id="large-header">\n' +
            '\t\t\t\t\t\n' +
            '\t\t\t\t\t<div class="logo_box">\n';
        var b = '';
        for (var i = 0; i < rows.length; i++) {
            var row = rows[i];
            // Poster linking to the movie, then title + score, cast, and description
            b += '\t\t\t\t\t\t<a href="' + escapeHtml(row.url) + '"><img src="' + escapeHtml(row.imgurl) + '"></a>\n' +
                '\t\t\t\t\t\t<br>\n' +
                '\t\t\t\t\t\t<a href="' + escapeHtml(row.url) + '">' + escapeHtml(row.name) + ' ' + escapeHtml(row.score) + '</a>\n' +
                '\t\t\t\t\t\t<br>\n' +
                '\t\t\t\t\t\t' + escapeHtml(row.player) + '\n' +
                '\t\t\t\t\t\t<br>\n' +
                '\t\t\t\t\t\t' + escapeHtml(row.text) + '\n';
        }
        var c = '\t\t\t\t\t\t<br>\n' +
            '\t\t\t\t\t</div>\n' +
            '\t\t\t\t</div>\n' +
            '\t\t\t</div>\n' +
            '\t\t</div><!-- /container -->\n' +
            '\t\t<script src="js/TweenLite.min.js"></script>\n' +
            '\t\t<script src="js/EasePack.min.js"></script>\n' +
            '\t\t<script src="js/rAF.js"></script>\n' +
            '\t\t<script src="js/demo-1.js"></script>\n' +
            '\t\t<div style="text-align:center;">\n' +
            '</div>\n' +
            '\t</body>\n' +
            '</html>';
        res.send(a + b + c);
    });
});

app.listen(3000);
```
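Besides an HTML form, the endpoint can also be queried from a script. Here is a minimal sketch using only the standard library. It assumes the server above is reachable at `http://localhost:3000` (the port comes from `app.listen(3000)`; the host is an assumption) and that it reads a form-encoded `name` field, which is what `req.body.name` with `bodyParser.urlencoded` handles. The helper names `make_search_request` and `search` are hypothetical.

```python
from urllib.parse import urlencode
from urllib.request import Request, urlopen

API = 'http://localhost:3000/login'  # assumption: the Node server runs locally on port 3000


def make_search_request(name):
    """Hypothetical helper: build a POST request carrying a form-encoded `name` field."""
    data = urlencode({'name': name}).encode('utf-8')
    return Request(API, data=data, method='POST',
                   headers={'Content-Type': 'application/x-www-form-urlencoded'})


def search(name):
    # Send the request and return the server's response body as text
    with urlopen(make_search_request(name), timeout=10) as resp:
        return resp.read().decode('utf-8')


# Usage (requires the server to be running):
# print(search('Example'))
```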

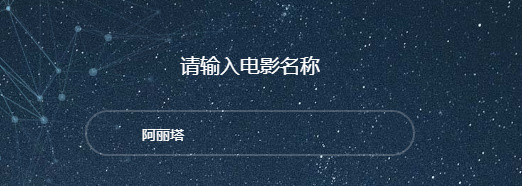

And that's it: you can search from an HTML page and keep it for personal use.

Result:

Pretty ugly indeed, tsk tsk...

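To sum up, just scraping movie listings is quite simple: find the right URLs, then extract the content you need. In the end, though, do support official releases; they have HD Blu-ray after all, haha.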

Source: https://blog.csdn.net/qq_42623428/article/details/100542476