1. URL crawling

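Crawling all the URLs of a site roughly involves the following steps:

1. Decide on the entry URL to start crawling from.

2. Build a regular expression that extracts the links you need.

3. Pose as a browser and fetch the corresponding page.

4. Use the regular expression from step 2 to extract the links contained in that page.

5. Filter out duplicate links.

6. Post-process the results, for example print the links or save them to a file.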
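The steps above fetch and parse a single page. Reaching every URL on a site means repeating steps 3 to 5 for each newly found link. A minimal breadth-first sketch, assuming a caller-supplied `fetch(url)` function that returns a page's HTML (hypothetical; in practice a thin wrapper around `requests.get`), that only follows links on the starting host and stops after `max_pages` downloads:

```python
import re
from collections import deque
from urllib.parse import urlparse

# Link pattern that stops at whitespace, quotes and angle brackets.
LINK_RE = re.compile(r'https?://[^\s"\'<>]+')


def crawl_site(start_url, fetch, max_pages=100):
    """Breadth-first crawl that stays on start_url's host.

    fetch is a hypothetical callable: url -> HTML text (or None on failure).
    Returns every URL discovered, in the order it was first seen.
    """
    host = urlparse(start_url).netloc
    seen = {start_url}          # step 5: links already found
    order = [start_url]
    queue = deque([start_url])  # pages still to download
    fetched = 0
    while queue and fetched < max_pages:
        url = queue.popleft()
        html_text = fetch(url)  # step 3
        fetched += 1
        if not html_text:
            continue
        for link in LINK_RE.findall(html_text):  # step 4
            if link in seen:
                continue
            seen.add(link)
            order.append(link)
            # External links are recorded but never downloaded.
            if urlparse(link).netloc == host:
                queue.append(link)
    return order
```

Passing `fetch` in keeps the traversal logic separate from the networking code, so it can be tried against a few fake pages before pointing it at a real site.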

Here we take the links on the page https://blog.csdn.net/ as an example. The code is as follows:

1 import re

2 import requests

3

4 def get_url(master_url):

5 header = {

6 'Accept':'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8',

7 'Accept-Encoding':'gzip, deflate, br',

8 'Accept-Language':'zh-CN,zh;q=0.9',

9 'Cache-Control':'max-age=0',

10 'Connection':'keep-alive',

11 'Cookie':'uuid_tt_dd=10_20323105120-1520037625308-307643; __yadk_uid=mUVMU1b33VoUXoijSenERzS8A3dUIPpA; Hm_lvt_6bcd52f51e9b3dce32bec4a3997715ac=1521360621,1521381435,1521382138,1521382832; dc_session_id=10_1521941284960.535471; TY_SESSION_ID=7f1313b8-2155-4c40-8161-04981fa07661; ADHOC_MEMBERSHIP_CLIENT_ID1.0=51691551-e0e9-3a5e-7c5b-56b7c3f55f24; dc_tos=p64hf6',

12 'Host':'blog.csdn.net',

13 'Upgrade-Insecure-Requests':'1',

14 'User-Agent':'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36'

15 }

16 patten = r'https?://[^\s]*'

17 requests.Encoding = 'utf-8'

18 response = requests.get(url=master_url,headers=header)

19 text = response.text

20 result = re.findall(patten,text)

21 result = list(set(result))

22 url_list = []

23 for i in result:

24 url_list.append(i.strip('"'))#过滤掉url中的"

25 return url_list

26

27 url = get_url("https://blog.csdn.net/")

28 print(url,len(url))

29 for u in url:

30 with open('csdn_url.txt','a+') as f:

31 f.write(u)

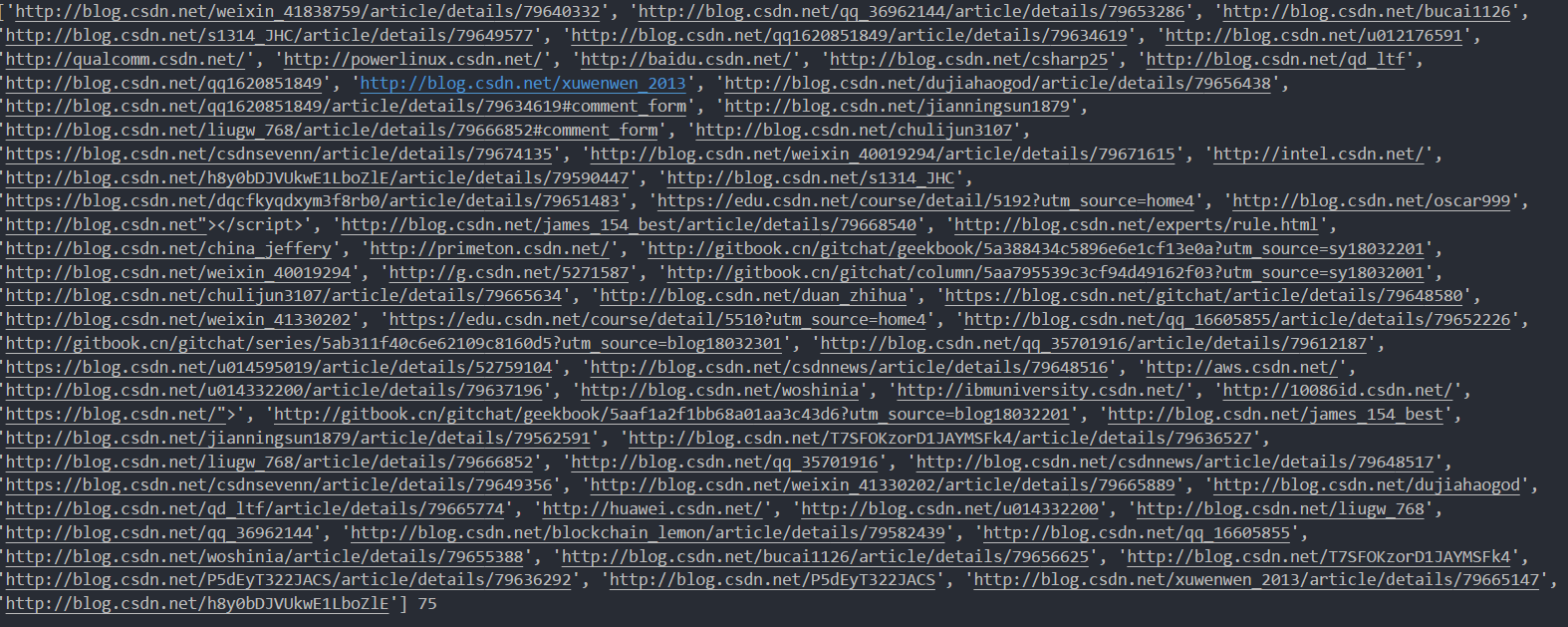

32 f.write('\n')打印结果:
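Why the link pattern should stop at quotes and angle brackets: the sketch below compares the naive pattern `https?://[^\s]*` with a tighter one on a small, made-up HTML fragment. The naive pattern runs on to the next whitespace, so it captures `">A</a>` as part of the URL; stripping `"` from the ends does not remove that, and deduplication cannot merge the two garbled variants.

```python
import re

# Made-up fragment: the same link appears twice with different anchor text.
FRAGMENT = '<a href="https://blog.csdn.net/a">A</a>\n<a href="https://blog.csdn.net/a">again</a>'

GREEDY = r'https?://[^\s]*'
TIGHT = r'https?://[^\s"\'<>]+'


def extract(pattern, text):
    """Return the distinct matches of pattern in text, sorted for stable output."""
    return sorted(set(re.findall(pattern, text)))


print(extract(GREEDY, FRAGMENT))
# ['https://blog.csdn.net/a">A</a>', 'https://blog.csdn.net/a">again</a>']
print(extract(TIGHT, FRAGMENT))
# ['https://blog.csdn.net/a']
```

The tighter character class is still a heuristic: it will not catch relative links such as `href="/article/1"`, which would need to be resolved against the page URL (for example with `urllib.parse.urljoin`).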

2. Qiushibaike

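The approach is as follows:

1. Analyze how the URL changes from page to page, turn the changing part into a variable, and use a for loop to crawl several pages.

2. Write a function that extracts each user and that user's post.

3. Build the URLs, call the function, and collect the jokes.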
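Step 1 boils down to substituting the page number into the URL pattern observed in the browser. A minimal sketch, assuming pages are numbered from 1 as in the `/8hr/page/<n>/` URLs used in this section; `page_urls` is a hypothetical helper name:

```python
BASE_URL = 'https://www.qiushibaike.com/8hr/page/%s/'


def page_urls(first, last):
    """Return the listing URL for every page from first to last, inclusive."""
    return [BASE_URL % page for page in range(first, last + 1)]


for url in page_urls(1, 4):
    print(url)
```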

1 import requests

2 from lxml import html

3

4 def get_content(page):

5 header = {

6 'Accept':'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8',

7 'Accept-Encoding':'gzip, deflate, br',

8 'Accept-Language':'zh-CN,zh;q=0.9',

9 'Cache-Control':'max-age=0',

10 'Connection':'keep-alive',

11 'Cookie':'_xsrf=2|bc420b81|bc50e4d023b121bfcd6b2f748ee010e1|1521947174; Hm_lvt_2670efbdd59c7e3ed3749b458cafaa37=1521947176; Hm_lpvt_2670efbdd59c7e3ed3749b458cafaa37=1521947176',

12 'Host':'www.qiushibaike.com',

13 'If-None-Match':"91ded28d6f949ba8ab7ac47e3e3ce35bfa04d280",

14 'Upgrade-Insecure-Requests':'1',

15 'User-Agent':'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36',

16 }

17 requests.Encoding='utf-8'

18 url_='https://www.qiushibaike.com/8hr/page/%s/'%page

19 response = requests.get(url=url_,headers=header)

20 selector = html.fromstring(response.content)

21 users = selector.xpath('//*[@id="content-left"]/div/div[1]/a[2]/h2/text()')

22 contents = []

23 #获取每条段子的全部内容

24 for x in range(1,25):

25 content = ''

26 try:

27 content_list = selector.xpath('//*[@id="content-left"]/div[%s]/a/div/span/text()'%x)

28 if isinstance(content_list,list):

29 for c in content_list:

30 content += c.strip('\n')

31 else:

32 content = content_list

33 contents.append(content)

34 except:

35 raise '该页没有25条段子'

36 result = {}

37 i = 0

38 for user in users:

39 result[user.strip('\n')] = contents[i].strip('\n')

40 i += 1

41 return result

42 if __name__ == '__main__':

43 for i in range(1,5):

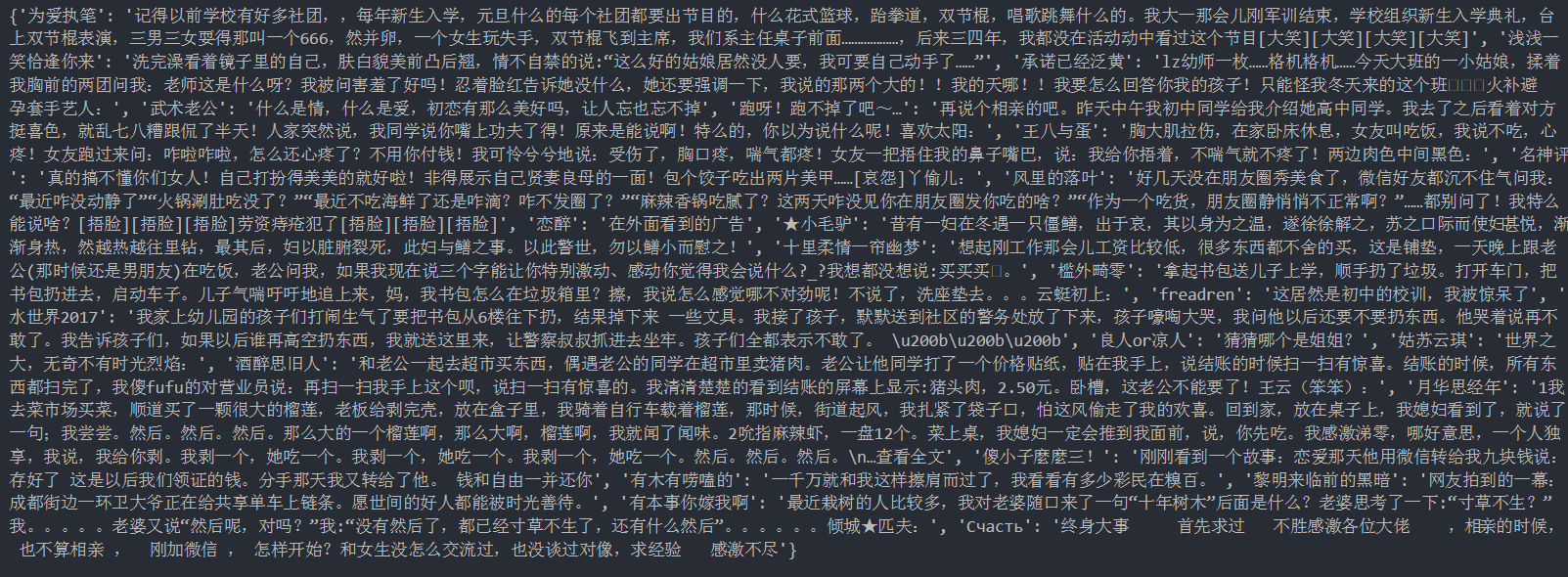

44 get_content(i)打印结果:
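Extracting users and post texts with two separate queries and pairing them by position only works if every post has both. If any post lacks the username element (an anonymous post, say), every pairing after it shifts by one. A self-contained sketch of the difference, using the standard library's `xml.etree.ElementTree` on a hand-written, well-formed sample instead of lxml on the live page; the markup is an assumption that mimics the XPaths used in `get_content`, not the real site:

```python
import xml.etree.ElementTree as ET

# Hypothetical sample: the second post has no <a><h2> user link.
PAGE = """<div id="content-left">
<div><div><a>avatar</a><a><h2>alice</h2></a></div><a><div><span>first joke</span></div></a></div>
<div><div><span>anonymous</span></div><a><div><span>second joke</span></div></a></div>
<div><div><a>avatar</a><a><h2>bob</h2></a></div><a><div><span>third joke</span></div></a></div>
</div>"""


def pair_by_index(root):
    """Two separate queries, matched up by position."""
    users = [h2.text for h2 in root.findall('./div/div[1]/a[2]/h2')]
    contents = [span.text for span in root.findall('./div/a/div/span')]
    return list(zip(users, contents))


def pair_per_post(root):
    """Query inside each post, so user and content always belong together."""
    pairs = []
    for post in root.findall('./div'):
        h2 = post.find('./div[1]/a[2]/h2')
        user = h2.text if h2 is not None else '(anonymous)'
        content = ''.join(span.text or '' for span in post.findall('./a/div/span'))
        pairs.append((user, content))
    return pairs


root = ET.fromstring(PAGE)
print(pair_by_index(root))  # bob ends up paired with the second joke
print(pair_per_post(root))
```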

3. Crawling WeChat Official Account articles

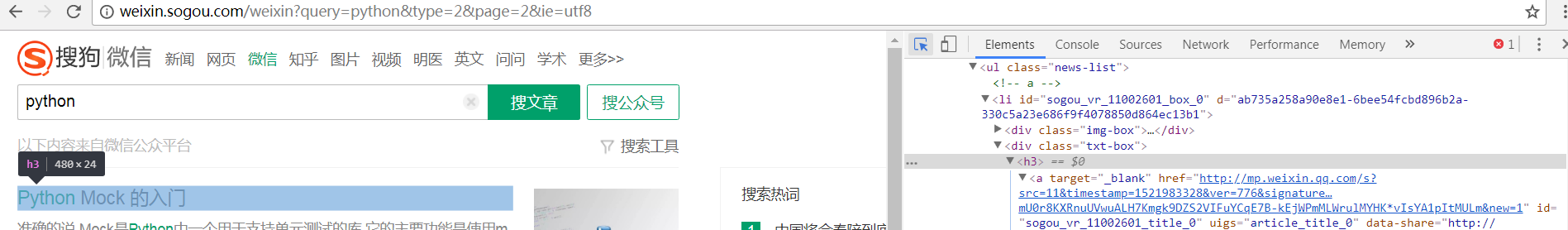

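Go to Sogou's WeChat search platform, http://weixin.sogou.com/, and search for Python. The resulting URL, http://weixin.sogou.com/weixin?query=python&type=2&page=2&ie=utf8, shows that the search keyword is passed in the query parameter and the page number in the page parameter, which is all we need to build the main URL for this crawler.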
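Once `query` and `page` are identified, the URL can be built with the standard library instead of string formatting, which also percent-encodes non-ASCII keywords such as Chinese search terms. `search_url` is a hypothetical helper; the fixed `type=2` and `ie=utf8` values are simply copied from the URL above:

```python
from urllib.parse import urlencode

SEARCH_URL = 'http://weixin.sogou.com/weixin'


def search_url(keyword, page):
    """Build the Sogou WeChat search URL for one keyword and one result page."""
    params = {'query': keyword, 'type': 2, 'page': page, 'ie': 'utf8'}
    return SEARCH_URL + '?' + urlencode(params)


print(search_url('python', 2))
# http://weixin.sogou.com/weixin?query=python&type=2&page=2&ie=utf8
```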

Next, analyze the article URLs:

The XPath that selects the article URLs is: //*[@class="news-box"]/ul/li/div[2]/h3/a/@href
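How that XPath walks the result list can be checked offline. The sketch below uses the standard library's `xml.etree.ElementTree`, which supports only a subset of XPath: a query must start with `.//` rather than `//`, and attributes are read with `.get()` instead of a trailing `/@href`. The markup is a hand-written, well-formed stand-in for a search result page, not the real one:

```python
import xml.etree.ElementTree as ET

# Hypothetical sample: each <li> has a thumbnail div and a title div.
SAMPLE = """<html><body>
<div class="news-box">
  <ul>
    <li>
      <div><a href="#thumb">thumbnail</a></div>
      <div><h3><a href="https://example.com/article-1">Article 1</a></h3></div>
    </li>
    <li>
      <div><a href="#thumb">thumbnail</a></div>
      <div><h3><a href="https://example.com/article-2">Article 2</a></h3></div>
    </li>
  </ul>
</div>
</body></html>"""


def article_links(markup):
    """Return the title hrefs, mirroring //*[@class="news-box"]/ul/li/div[2]/h3/a/@href."""
    root = ET.fromstring(markup)
    anchors = root.findall(".//*[@class='news-box']/ul/li/div[2]/h3/a")
    return [a.get('href') for a in anchors]


print(article_links(SAMPLE))
# ['https://example.com/article-1', 'https://example.com/article-2']
```

`div[2]` picks the second `div` child of each `li`, so the thumbnail links in the first `div` are skipped.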

Sending many requests from one IP quickly gets that IP banned, so we pass the proxies parameter to requests.
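The `proxies` argument of `requests.get` is a dictionary that maps a URL scheme to a proxy URL, not a bare IP string. A minimal sketch of building that mapping and rotating through a pool with a retry limit; `to_proxies` and `fetch_with_proxies` are hypothetical helpers, and the fetch function is passed in so the retry logic can be exercised without a network:

```python
import random


def to_proxies(ip, port):
    """Turn one IP and port into the mapping that requests' proxies= expects."""
    address = 'http://%s:%s' % (ip, port)
    return {'http': address, 'https': address}


def fetch_with_proxies(fetch, url, proxy_pool, max_tries=10):
    """Call fetch(url, proxies) with randomly chosen proxies until one succeeds.

    fetch is a hypothetical callable that raises on failure, e.g.
    lambda url, proxies: requests.get(url, proxies=proxies, timeout=5).content
    Returns None after max_tries failures instead of looping forever.
    """
    if not proxy_pool:
        return None
    for _ in range(max_tries):
        proxies = random.choice(proxy_pool)
        try:
            return fetch(url, proxies)
        except Exception:  # dead or blocked proxy: try another one
            continue
    return None
```

With real requests, catching `requests.RequestException` instead of `Exception` keeps genuine bugs from being silently retried.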

```python
import random

import requests
from lxml import etree, html

USER_AGENT = ('Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 '
              '(KHTML, like Gecko) Chrome/64.0.3282.186 Safari/537.36')


def get_weixin(page, max_tries=20):
    url = "http://weixin.sogou.com/weixin?query=python&type=2&page=%s&ie=utf8" % page
    # The stale session Cookie from the original capture is dropped.
    header = {
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8',
        'Accept-Encoding': 'gzip, deflate',
        'Accept-Language': 'zh-CN,zh;q=0.9',
        'Cache-Control': 'max-age=0',
        'Connection': 'keep-alive',
        'Referer': url,
        'Upgrade-Insecure-Requests': '1',
        'User-Agent': USER_AGENT,
    }
    proxy_list = get_proxy()
    if not proxy_list:
        return []
    # Retry through random proxies, but give up after max_tries attempts
    # instead of looping forever when every proxy is dead.
    for _ in range(max_tries):
        proxy = random.choice(proxy_list)
        try:
            response = requests.get(url=url, headers=header, proxies=proxy, timeout=10).content
            selector = html.fromstring(response)
        except (requests.RequestException, etree.ParserError):
            continue
        content_url = selector.xpath('//*[@class="news-box"]/ul/li/div[2]/h3/a/@href')
        # An empty result usually means a block or captcha page: try another proxy.
        if content_url:
            return content_url
    return []


def get_proxy(url='http://api.xicidaili.com'):
    # Host is left to requests (the original hard-coded www.xicidaili.com while
    # requesting api.xicidaili.com); the stale Cookie and If-None-Match are dropped.
    header = {
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,image/apng,*/*;q=0.8',
        'Accept-Encoding': 'gzip, deflate',
        'Accept-Language': 'zh-CN,zh;q=0.9',
        'Referer': 'http://www.xicidaili.com/api',
        'User-Agent': USER_AGENT,
    }
    response = requests.get(url=url, headers=header, timeout=10).content
    selector = html.fromstring(response)
    # requests expects proxies as a dict such as {'http': 'http://ip:port'},
    # not a bare IP, so read the IP (column 2) and the port (assumed to be
    # column 3) of each table row.
    proxy_list = []
    for row in selector.xpath('//*[@id="ip_list"]/tr'):
        ip = row.xpath('./td[2]/text()')
        port = row.xpath('./td[3]/text()')
        if ip and port:  # the header row has <th> cells and is skipped
            address = 'http://%s:%s' % (ip[0].strip(), port[0].strip())
            proxy_list.append({'http': address, 'https': address})
    return proxy_list


if __name__ == '__main__':
    for i in range(1, 10):
        print(get_weixin(i))
```

With this crawler, I learned how to send requests through proxy IPs.

Source: oschina

Link: https://my.oschina.net/u/4325884/blog/4034574