Download page: https://atlas.apache.org/#/Downloads

Upload the source tarball to the server and extract it:

tar -zxvf apache-atlas-2.0.0-sources.tar.gz

Build and install

- Set up the Maven environment

# Download the Maven binary package

$ wget http://mirror.bit.edu.cn/apache/maven/maven-3/3.6.3/binaries/apache-maven-3.6.3-bin.tar.gz

$ tar -zxvf apache-maven-3.6.3-bin.tar.gz

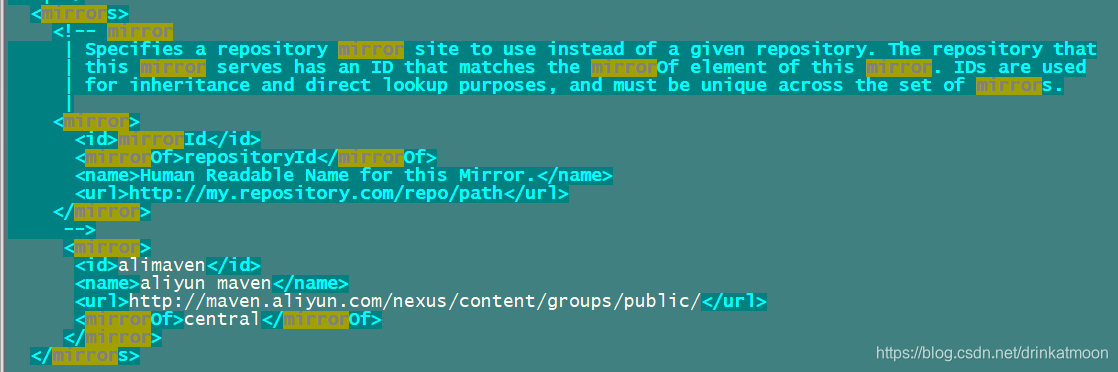

# Edit settings.xml and add the Aliyun mirror inside its <mirrors> element:

$ cd apache-maven-3.6.3

$ vi conf/settings.xml

<mirror>
    <id>alimaven</id>
    <name>aliyun maven</name>
    <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
    <mirrorOf>central</mirrorOf>
</mirror>
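If build hosts are provisioned by script rather than by hand in `vi`, the same mirror entry can be added programmatically. The following is a minimal Python sketch, assuming the stock `conf/settings.xml` with its default Maven namespace (a file without a namespace is handled too); `add_mirror` is a hypothetical helper name. Be aware that `xml.etree.ElementTree` drops XML comments when it re-serializes, and the stock settings.xml is mostly comments, so keep a backup.

```python
import xml.etree.ElementTree as ET


def add_mirror(settings_xml: str, mirror_id: str, name: str, url: str,
               mirror_of: str = "central") -> str:
    """Return settings_xml with a <mirror> under <mirrors>; no-op if the id exists."""
    root = ET.fromstring(settings_xml)
    # settings.xml normally declares a default namespace; reuse whatever the root uses
    ns = root.tag[1:root.tag.index("}")] if root.tag.startswith("{") else ""
    if ns:
        ET.register_namespace("", ns)  # serialize without an "ns0:" prefix

    def q(tag: str) -> str:
        return f"{{{ns}}}{tag}" if ns else tag

    mirrors = root.find(q("mirrors"))
    if mirrors is None:
        mirrors = ET.SubElement(root, q("mirrors"))
    # Leave the document unchanged if a mirror with this id is already configured
    if any(m.findtext(q("id")) == mirror_id for m in mirrors.findall(q("mirror"))):
        return ET.tostring(root, encoding="unicode")
    mirror = ET.SubElement(mirrors, q("mirror"))
    for tag, text in (("id", mirror_id), ("name", name),
                      ("url", url), ("mirrorOf", mirror_of)):
        ET.SubElement(mirror, q(tag)).text = text
    return ET.tostring(root, encoding="unicode")
```

Usage: read `conf/settings.xml`, pass its text to `add_mirror(text, "alimaven", "aliyun maven", "http://maven.aliyun.com/nexus/content/groups/public/")`, and write the result back. Because the helper checks the id first, running it twice does not create a duplicate mirror.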

# Configure the Maven environment variables

$ vi /etc/profile

export MAVEN_HOME=/root/apache-maven-3.6.3

export PATH=$MAVEN_HOME/bin:$PATH

$ source /etc/profile

# Check the Maven version

$ mvn -v

# Build Atlas, compiling the embedded HBase and Solr along with it

$ cd ~/apache-atlas-sources-2.0.0

# Atlas 2.0 already sets MAVEN_OPTS internally, so this step can be skipped

# export MAVEN_OPTS="-Xms2g -Xmx2g"

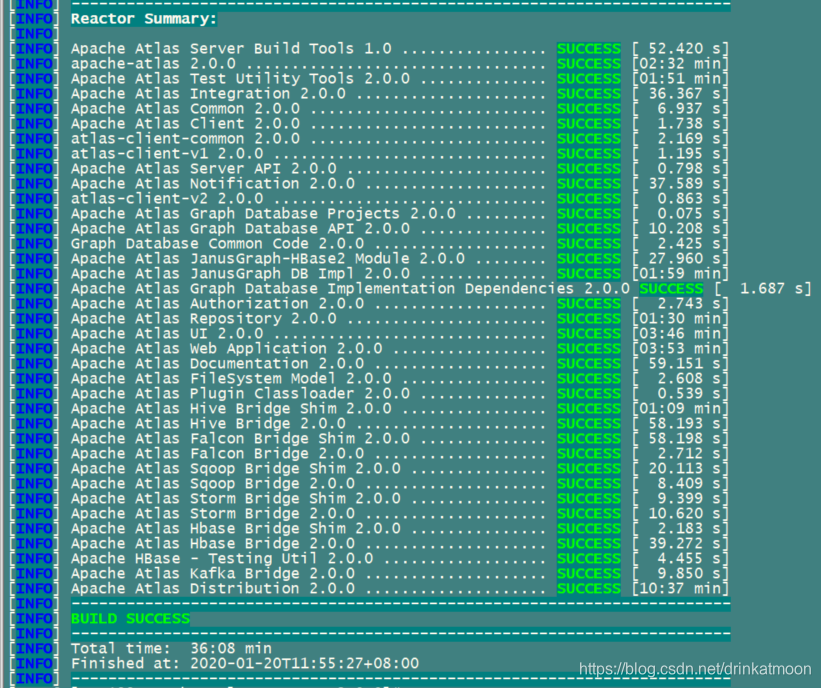

$ mvn clean -DskipTests package -Pdist,embedded-hbase-solr

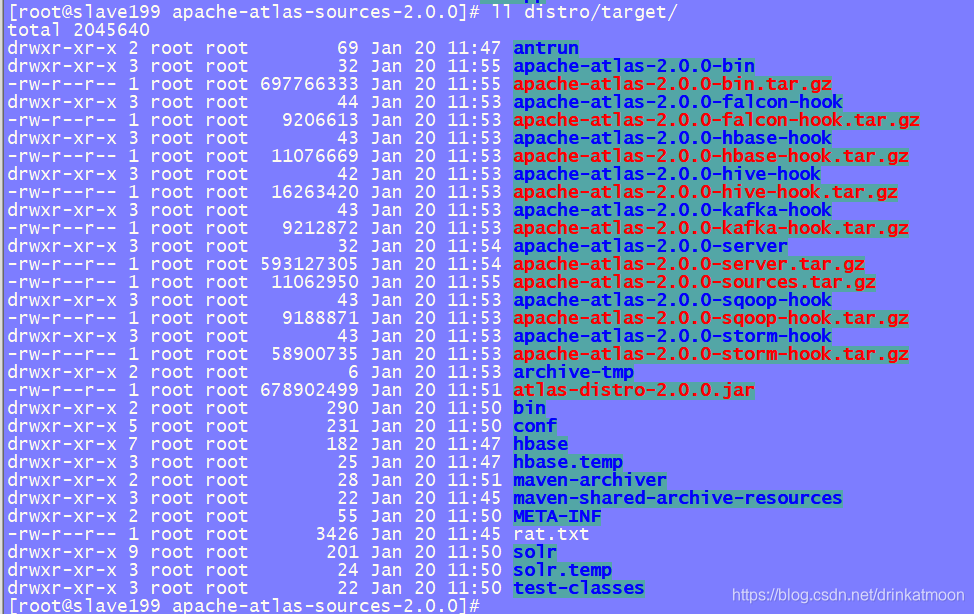

# When the build finishes (see the screenshot), the installation packages are in the distro/target directory

# Extract apache-atlas-2.0.0-bin.tar.gz from the target directory into /opt

$ tar -zxvf distro/target/apache-atlas-2.0.0-bin.tar.gz -C /opt

$ chown big-data:big-data -R /opt/apache-atlas-2.0.0/

$ cd /opt/apache-atlas-2.0.0

$ vim conf/atlas-env.sh

export JAVA_HOME=/usr/java/jdk1.8.0_181-cloudera/

# If you are not using the embedded HBase, adjust the corresponding settings in conf/atlas-application.properties

# Start Atlas (the impalad process deployed on this node already occupies port 21000, so the port assignment has to be adjusted)

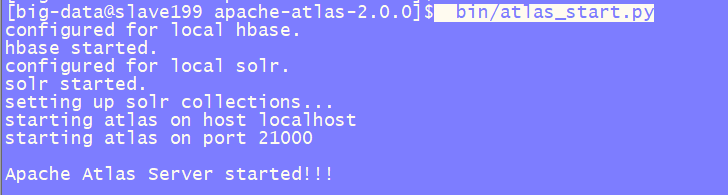
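Before starting, it helps to confirm whether port 21000 is really taken. If you choose to move Atlas rather than Impala, the Atlas web server port is set by `atlas.server.http.port` in conf/atlas-application.properties, and client-side tools read the server URL from `atlas.rest.address`; the browser URL used below would then change as well. The sketch below is a minimal Python helper under those assumptions; `port_in_use`, `set_property` and the replacement port 21001 are hypothetical.

```python
import re
import socket


def port_in_use(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if a TCP listener already accepts connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.settimeout(1.0)
        return s.connect_ex((host, port)) == 0


def set_property(text: str, key: str, value: str) -> str:
    """Set key=value in .properties text, appending the line if the key is missing.

    Commented-out lines (starting with #) are left untouched.
    """
    line = f"{key}={value}"
    # [ \t] instead of \s so the match never spans a preceding blank line
    pattern = re.compile(rf"^[ \t]*{re.escape(key)}[ \t]*=.*$", re.MULTILINE)
    if pattern.search(text):
        # A function replacement keeps characters in `value` from acting as escapes
        return pattern.sub(lambda _m: line, text)
    return text.rstrip("\n") + "\n" + line + "\n"
```

Usage: `port_in_use(21000)` tells you whether something (here impalad) is already listening; if you move Atlas, apply `set_property` to both `atlas.server.http.port` and `atlas.rest.address` before running bin/atlas_start.py.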

$ bin/atlas_start.py

# Stop the Atlas service

$ bin/atlas_stop.py

# The logs show that Atlas also started the embedded HBase and Solr

Access Atlas

Once Atlas has started, open http://slave199:21000 in a browser. The default username and password are admin/admin.

Solr UI: http://slave199:9838/solr
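Besides the browser, you can confirm that the server is up through the REST API. This is a minimal Python sketch using only the standard library, assuming HTTP Basic authentication with the default admin/admin account and the `/api/atlas/admin/version` endpoint; the helper names `atlas_request` and `atlas_version` are hypothetical.

```python
import base64
import json
import urllib.request


def atlas_request(base_url: str, path: str, user: str = "admin",
                  password: str = "admin") -> urllib.request.Request:
    """Build a GET request with an HTTP Basic auth header for the Atlas REST API."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req = urllib.request.Request(base_url.rstrip("/") + path)
    req.add_header("Authorization", f"Basic {token}")
    req.add_header("Accept", "application/json")
    return req


def atlas_version(base_url: str) -> dict:
    """Fetch and parse /api/atlas/admin/version; needs a running Atlas server."""
    req = atlas_request(base_url, "/api/atlas/admin/version")
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)
```

Usage: `print(atlas_version("http://slave199:21000"))` prints the server's version information as a dict; an authentication error or a refused connection means the credentials or the port need checking.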

Load the sample data: bin/quick_start.py

Enter username for atlas :- admin

Enter password for atlas :- admin

# The output looks like this:

log4j:WARN No such property [maxFileSize] in org.apache.log4j.PatternLayout.

log4j:WARN No such property [maxBackupIndex] in org.apache.log4j.PatternLayout.

log4j:WARN No such property [maxFileSize] in org.apache.log4j.PatternLayout.

log4j:WARN No such property [maxBackupIndex] in org.apache.log4j.PatternLayout.

log4j:WARN No such property [maxFileSize] in org.apache.log4j.PatternLayout.

log4j:WARN No such property [maxFileSize] in org.apache.log4j.PatternLayout.

log4j:WARN No such property [maxBackupIndex] in org.apache.log4j.PatternLayout.

Enter username for atlas :- admin

Enter password for atlas :-

Creating sample types:

Created type [DB]

Created type [Table]

Created type [StorageDesc]

Created type [Column]

Created type [LoadProcess]

Created type [View]

Created type [JdbcAccess]

Created type [ETL]

Created type [Metric]

Created type [PII]

Created type [Fact]

Created type [Dimension]

Created type [Log Data]

Created type [Table_DB]

Created type [View_DB]

Created type [View_Tables]

Created type [Table_Columns]

Created type [Table_StorageDesc]

Creating sample entities:

Created entity of type [DB], guid: 72bf5b6f-7eb7-4f80-8c7f-7ae7e9b0dbd1

Created entity of type [DB], guid: a4392aec-9b36-4d2f-b2d7-2ef2f4a83c10

Created entity of type [DB], guid: 0c18f24a-d157-4f85-bd56-c93965371338

Created entity of type [Table], guid: d1a30c78-2939-4624-8e17-abf16855e5e0

Created entity of type [Table], guid: 47527a26-f176-4bb5-8eb4-57b72c7928b4

Created entity of type [Table], guid: 8829432f-932f-43ec-9bab-38603b1bc5df

Created entity of type [Table], guid: 4faafd4d-2f6d-4046-8356-333837966e1f

Created entity of type [Table], guid: f9562fc1-8eab-4a99-be99-ffc22f632cfd

Created entity of type [Table], guid: 8e4fe39a-48ca-4cd4-8a51-6e359645859e

Created entity of type [Table], guid: 37a931d6-a4d2-439d-9401-668260fb7727

Created entity of type [Table], guid: 9e8a05dd-6ec8-4bd4-b42d-3d32f7c28f77

Created entity of type [View], guid: dfcf25d4-1081-41ca-9e88-15ec2ad5cce7

Created entity of type [View], guid: 3cd7452a-e2ab-495e-abe1-c826975a2757

Created entity of type [LoadProcess], guid: fd60947e-4235-4a45-9dd1-31fe702ca360

Created entity of type [LoadProcess], guid: 7e45dd12-da78-48c2-911f-88d09c1f3797

Created entity of type [LoadProcess], guid: a16c8a80-4c36-40d3-9702-57dbc427ee1d

Sample DSL Queries:

query [from DB] returned [3] rows.

query [DB] returned [3] rows.

query [DB where name=%22Reporting%22] returned [1] rows.

query [DB where name=%22encode_db_name%22] returned [ 0 ] rows.

query [Table where name=%2522sales_fact%2522] returned [1] rows.

query [DB where name="Reporting"] returned [1] rows.

query [DB where DB.name="Reporting"] returned [1] rows.

query [DB name = "Reporting"] returned [1] rows.

query [DB DB.name = "Reporting"] returned [1] rows.

query [DB where name="Reporting" select name, owner] returned [1] rows.

query [DB where DB.name="Reporting" select name, owner] returned [1] rows.

query [DB has name] returned [3] rows.

query [DB where DB has name] returned [3] rows.

query [DB is JdbcAccess] returned [ 0 ] rows.

query [from Table] returned [8] rows.

query [Table] returned [8] rows.

query [Table is Dimension] returned [5] rows.

query [Column where Column isa PII] returned [3] rows.

query [View is Dimension] returned [2] rows.

query [Column select Column.name] returned [10] rows.

query [Column select name] returned [9] rows.

query [Column where Column.name="customer_id"] returned [1] rows.

query [from Table select Table.name] returned [8] rows.

query [DB where (name = "Reporting")] returned [1] rows.

query [DB where DB is JdbcAccess] returned [ 0 ] rows.

query [DB where DB has name] returned [3] rows.

query [DB as db1 Table where (db1.name = "Reporting")] returned [ 0 ] rows.

query [Dimension] returned [9] rows.

query [JdbcAccess] returned [2] rows.

query [ETL] returned [6] rows.

query [Metric] returned [4] rows.

query [PII] returned [3] rows.

query [`Log Data`] returned [4] rows.

query [Table where name="sales_fact", columns] returned [4] rows.

query [Table where name="sales_fact", columns as column select column.name, column.dataType, column.comment] returned [4] rows.

query [from DataSet] returned [10] rows.

query [from Process] returned [3] rows.

Sample Lineage Info:

loadSalesDaily(LoadProcess) -> sales_fact_daily_mv(Table)

loadSalesMonthly(LoadProcess) -> sales_fact_monthly_mv(Table)

sales_fact(Table) -> loadSalesDaily(LoadProcess)

sales_fact_daily_mv(Table) -> loadSalesMonthly(LoadProcess)

time_dim(Table) -> loadSalesDaily(LoadProcess)

Sample data added to Apache Atlas Server.
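The DSL queries that quick_start.py runs can also be sent directly to the REST API, which is handy for checking your own metadata later. The log shows some of them with `%22`, i.e. URL-encoded double quotes (and `%2522`, which is `%22` encoded a second time); when you build the URL yourself, encode the raw query exactly once. A minimal Python sketch, assuming the v2 DSL endpoint `/api/atlas/v2/search/dsl` with `query`, `limit` and `offset` parameters and Basic auth with admin/admin; `dsl_search_url` and `dsl_search` are hypothetical names.

```python
import base64
import json
import urllib.request
from urllib.parse import quote, urlencode


def dsl_search_url(base_url: str, query: str, limit: int = 25, offset: int = 0) -> str:
    """Build the DSL search URL; the query is percent-encoded once (spaces as %20)."""
    params = urlencode({"query": query, "limit": limit, "offset": offset}, quote_via=quote)
    return f"{base_url.rstrip('/')}/api/atlas/v2/search/dsl?{params}"


def dsl_search(base_url: str, query: str, user: str = "admin",
               password: str = "admin") -> dict:
    """Run a DSL query against a live Atlas server and return the parsed JSON."""
    req = urllib.request.Request(dsl_search_url(base_url, query))
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req.add_header("Authorization", f"Basic {token}")
    req.add_header("Accept", "application/json")
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)
```

Usage: `dsl_search("http://slave199:21000", 'DB where name="Reporting"')` issues the same query as the log line above; matching entities, if any, are listed in the `entities` field of the returned JSON.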

Integrating the Hive hook

- 1. Edit the configuration file atlas-application.properties and add the Hive hook settings:

######### Hive Hook Configs #########
atlas.hook.hive.synchronous=false
atlas.hook.hive.numRetries=3
atlas.hook.hive.queueSize=10000
atlas.cluster.name=primary

- 2. Package the configuration file into atlas-plugin-classloader-2.0.0.jar

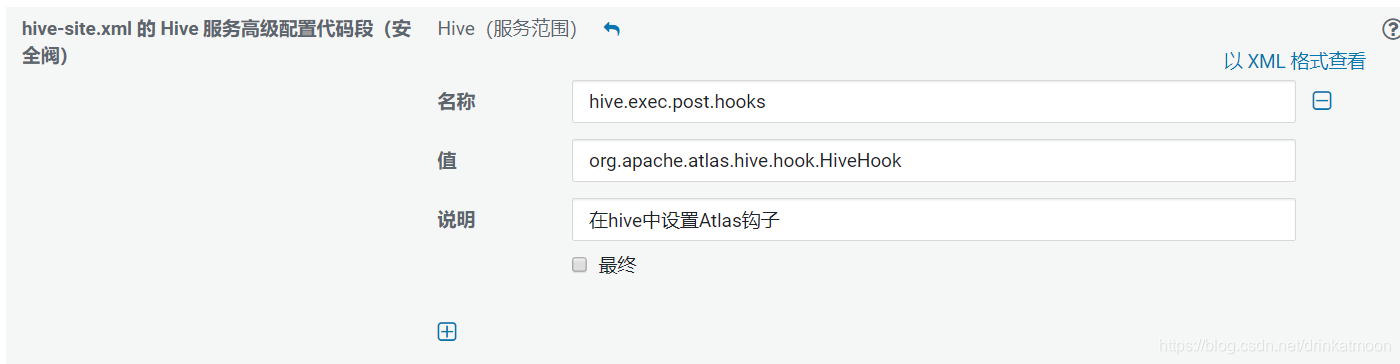

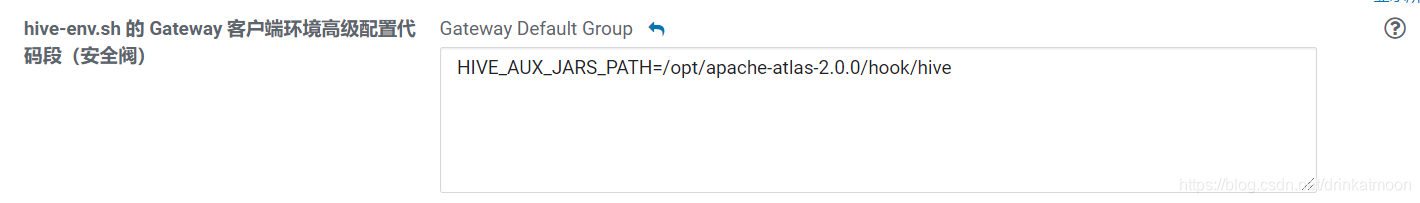

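zip -u -j /opt/apache-atlas-2.0.0/hook/hive/atlas-plugin-classloader-2.0.0.jar /opt/apache-atlas-2.0.0/conf/atlas-application.properties

# -j stores the file at the root of the jar; without it, zip keeps the directory path and the entry ends up under opt/apache-atlas-2.0.0/conf/

- 3. Configure hive-site.xml and hive-env.sh (on a Cloudera Manager cluster this can be done in the CM UI); restart Hive afterwards.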

hive-site.xml:

<property>
    <name>hive.exec.post.hooks</name>
    <value>org.apache.atlas.hive.hook.HiveHook</value>
</property>

hive-env.sh:

HIVE_AUX_JARS_PATH=/opt/apache-atlas-2.0.0/hook/hive
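On a cluster that is not managed by Cloudera Manager, the hive-site.xml change can be scripted. This is a minimal Python sketch, assuming a plain hive-site.xml made of `<configuration>/<property>` elements without a namespace; it treats `hive.exec.post.hooks` as a comma-separated list, so any hooks that are already configured are kept. `add_post_hook` is a hypothetical name. ElementTree drops XML comments on rewrite, and on CM-managed clusters the UI remains the right place, since CM typically regenerates the client configuration files.

```python
import xml.etree.ElementTree as ET

HOOK_CLASS = "org.apache.atlas.hive.hook.HiveHook"


def add_post_hook(hive_site_xml: str, hook: str = HOOK_CLASS) -> str:
    """Ensure hive.exec.post.hooks contains `hook`, keeping existing hooks."""
    root = ET.fromstring(hive_site_xml)
    for prop in root.findall("property"):
        if prop.findtext("name") == "hive.exec.post.hooks":
            value = prop.find("value")
            if value is None:
                value = ET.SubElement(prop, "value")
            # The property value is a comma-separated list of hook classes
            hooks = [h.strip() for h in (value.text or "").split(",") if h.strip()]
            if hook not in hooks:
                hooks.append(hook)
            value.text = ",".join(hooks)
            break
    else:
        # Property not present yet: add a new <property> element
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = "hive.exec.post.hooks"
        ET.SubElement(prop, "value").text = hook
    return ET.tostring(root, encoding="unicode")
```

Appending instead of overwriting matters when another tool has already registered a post-execution hook; running the function again leaves the value unchanged.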

- 4. Copy the configuration file atlas-application.properties to /etc/hive/conf on every Hive node in the cluster:

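sudo cp /opt/apache-atlas-2.0.0/conf/atlas-application.properties /etc/hive/conf/

- 5. Run import-hive.sh to import the metadata of Apache Hive databases and tables into Apache Atlas. The script can import a specific table, the tables of a specific database, or all databases and tables: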

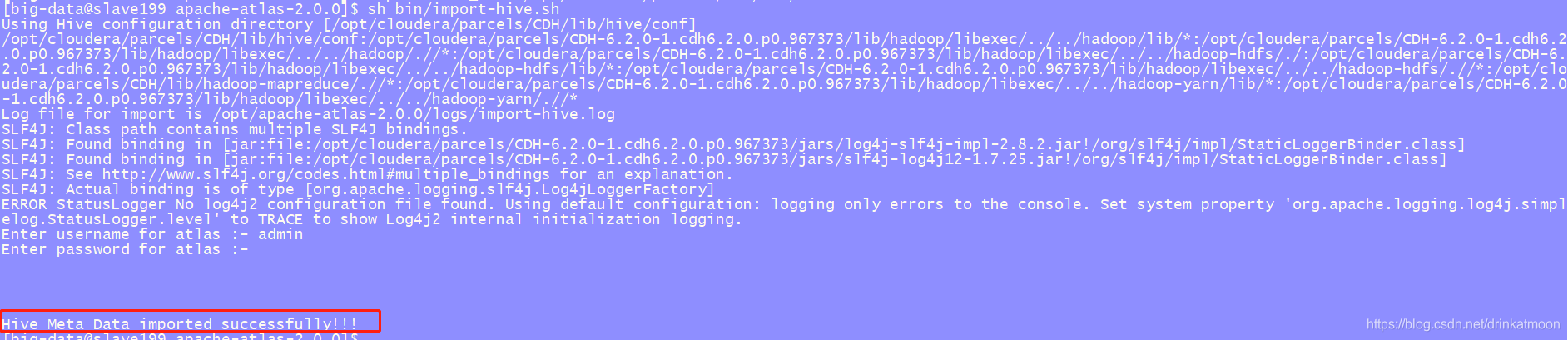

import-hive.sh [-d <database regex> OR --database <database regex>] [-t <table regex> OR --table <table regex>]

$ export HIVE_HOME=/opt/cloudera/parcels/CDH/lib/hive

$ sh bin/import-hive.sh

# If Hive contains many tables, the import can take a long time; progress is logged to /opt/apache-atlas-2.0.0/logs/application.log. When it finishes, the console prints: Hive Meta Data imported successfully!!!
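When the import is wrapped in a scheduler or another script, the `-d`/`-t` filters map directly onto the command line. A minimal Python sketch; `import_hive_cmd`, `import_succeeded` and `run_import` are hypothetical helpers, the script path follows the `bin/` location used above (adjust it if your build places import-hive.sh elsewhere), and success is detected only by the console message quoted above. The script may prompt for Atlas credentials, so `run_import` leaves the terminal attached.

```python
import os
import subprocess
from typing import List, Optional

SUCCESS_MARKER = "Hive Meta Data imported successfully!!!"


def import_hive_cmd(atlas_home: str, database: Optional[str] = None,
                    table: Optional[str] = None) -> List[str]:
    """argv for import-hive.sh; database/table are regexes passed via -d/-t."""
    cmd = ["sh", f"{atlas_home.rstrip('/')}/bin/import-hive.sh"]
    if database:
        cmd += ["-d", database]
    if table:
        cmd += ["-t", table]
    return cmd


def import_succeeded(console_output: str) -> bool:
    """True if captured console output contains the final success line."""
    return SUCCESS_MARKER in console_output


def run_import(atlas_home: str, hive_home: str, **filters: str) -> int:
    """Run the import interactively (stdin/stdout inherited) with HIVE_HOME set."""
    env = dict(os.environ, HIVE_HOME=hive_home)
    return subprocess.run(import_hive_cmd(atlas_home, **filters), env=env).returncode
```

Usage: `run_import("/opt/apache-atlas-2.0.0", "/opt/cloudera/parcels/CDH/lib/hive", table="test_atlas")` corresponds to the single-table import in step 7 below.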


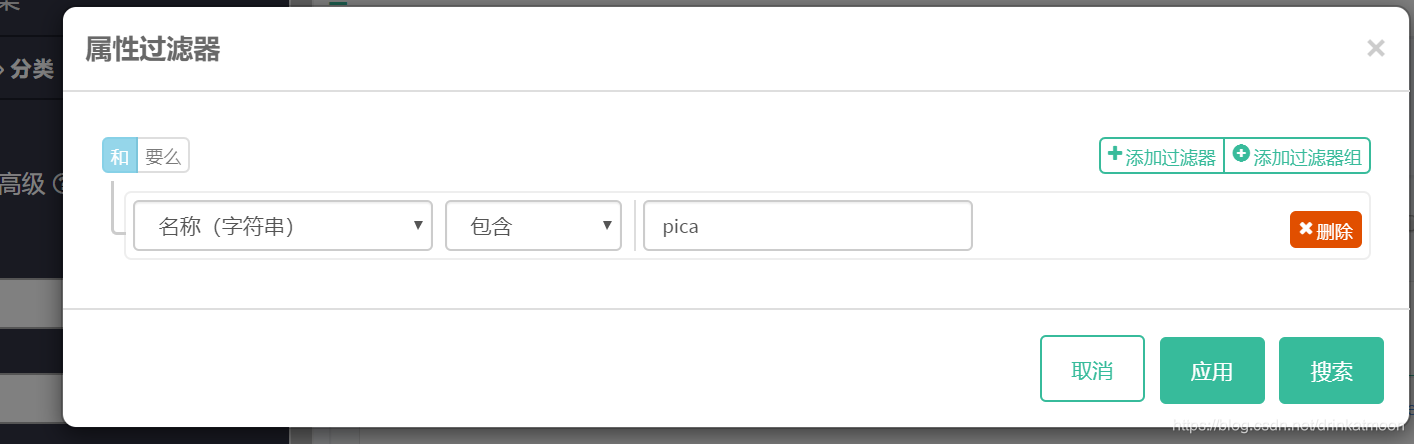

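- 6. Check the imported Hive metadata: click the Search button, choose hive_table in the search-by-type drop-down, click the funnel icon to open the attribute filter, set Name / contains / pica, and click Search. The results list the tables whose metadata was imported.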
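The same filtered search can be run without the UI. A minimal Python sketch, assuming the v2 basic-search endpoint `POST /api/atlas/v2/search/basic`, whose JSON body takes `typeName` and an `entityFilters` criterion (`attributeName`, `operator`, `attributeValue`); the UI's Name / contains filter is assumed to map to attribute `name` with operator `contains`. The helper names and the admin/admin credentials are assumptions.

```python
import base64
import json
import urllib.request
from typing import List


def basic_search_request(base_url: str, type_name: str, attr: str, operator: str,
                         value: str, user: str = "admin", password: str = "admin",
                         limit: int = 25) -> urllib.request.Request:
    """Build a POST request for Atlas basic search with one attribute filter."""
    body = {
        "typeName": type_name,
        "excludeDeletedEntities": True,
        "limit": limit,
        "offset": 0,
        "entityFilters": {"attributeName": attr, "operator": operator,
                          "attributeValue": value},
    }
    req = urllib.request.Request(
        base_url.rstrip("/") + "/api/atlas/v2/search/basic",
        data=json.dumps(body).encode("utf-8"),
        method="POST",
    )
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    req.add_header("Authorization", f"Basic {token}")
    req.add_header("Content-Type", "application/json")
    return req


def matching_names(req: urllib.request.Request) -> List[str]:
    """Send the request to a live server and return the displayText of each hit."""
    with urllib.request.urlopen(req, timeout=30) as resp:
        result = json.load(resp)
    # A missing "entities" key is treated as "no matches"
    return [e.get("displayText", "") for e in result.get("entities", [])]
```

Usage: `matching_names(basic_search_request("http://slave199:21000", "hive_table", "name", "contains", "pica"))` should list the same tables the UI search shows.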

- 7. Import the metadata of a specific table:

$ hive -e "create table test_atlas(id int, name string)"

$ sh bin/import-hive.sh -t test_atlas

# Then search for test_atlas in the web UI

Source: CSDN

Author: drinkatmoon

Link: https://blog.csdn.net/drinkatmoon/article/details/104070032