1 场景描述

在分布式应用, 往往存在多个进程提供同一服务. 这些进程有可能在相同的机器上, 也有可能分布在不同的机器上. 如果这些进程共享了一些资源, 可能就需要分布式锁来锁定对这些资源的访问。

2 思路

进程需要访问共享数据时, 就在"/locks"节点下创建一个sequence类型的子节点, 称为thisPath. 当thisPath在所有子节点中最小时, 说明该进程获得了锁. 进程获得锁之后, 就可以访问共享资源了. 访问完成后, 需要将thisPath删除. 锁由新的最小的子节点获得.

有了清晰的思路之后, 还需要补充一些细节. 进程如何知道thisPath是所有子节点中最小的呢? 可以在创建的时候, 通过getChildren方法获取子节点列表, 然后在列表中找到排名比thisPath前1位的节点, 称为waitPath, 然后在waitPath上注册监听, 当waitPath被删除后, 进程获得通知, 此时说明该进程获得了锁.

3 算法

-

lock操作过程:

首先为一个lock场景,在zookeeper中指定对应的一个根节点,用于记录资源竞争的内容;

每个lock创建后,会lazy在zookeeper中创建一个node节点,表明对应的资源竞争标识。 (小技巧:

node节点为EPHEMERAL_SEQUENTIAL,自增长的临时节点);进行lock操作时,获取对应lock根节点下的所有子节点,也即处于竞争中的资源标识;

按照

Fair(公平)竞争的原则,按照对应的自增内容做排序,取出编号最小的一个节点做为lock的owner,判断自己的节点id是否就为owner id,如果是则返回,lock成功。如果自己非owner id,按照排序的结果

找到序号比自己前一位的id,关注它锁释放的操作(也就是exist watcher),形成一个链式的触发过程; -

unlock操作过程:

将自己id对应的节点删除即可,对应的下一个排队的节点就可以收到Watcher事件,从而被唤醒得到锁后退出; -

其中的几个关键点:

node节点选择为EPHEMERAL_SEQUENTIAL很重要。

自增长的特性,可以方便构建一个基于Fair特性的锁,前一个节点唤醒后一个节点,形成一个链式的触发过程。可以有效的避免"惊群效应"(一个锁释放,所有等待的线程都被唤醒),有针对性的唤醒,提升性能。选择一个EPHEMERAL临时节点的特性。因为和zookeeper交互是一个网络操作,不可控因素过多,比如网络断了,上一个节点释放锁的操作会失败。临时节点是和对应的session挂接的,session一旦超时或者异常退出其节点就会消失,类似于ReentrantLock中等待队列Thread的被中断处理。获取lock操作是一个阻塞的操作,而对应的Watcher是一个异步事件,所以需要使用互斥信号共享锁BooleanMutex进行通知,可以比较方便的解决锁重入的问题。(锁重入可以理解为多次读操作,锁释放为写抢占操作) -

注意:

使用EPHEMERAL会引出一个风险:在非正常情况下,

网络延迟比较大会出现session timeout,zookeeper就会认为该client已关闭,从而销毁其id标示,竞争资源的下一个id就可以获取锁。这时可能会有两个process同时拿到锁在跑任务,所以设置好session timeout很重要。同样

使用PERSISTENT同样会存在一个死锁的风险,进程异常退出后,对应的竞争资源id一直没有删除,下一个id一直无法获取到锁对象。

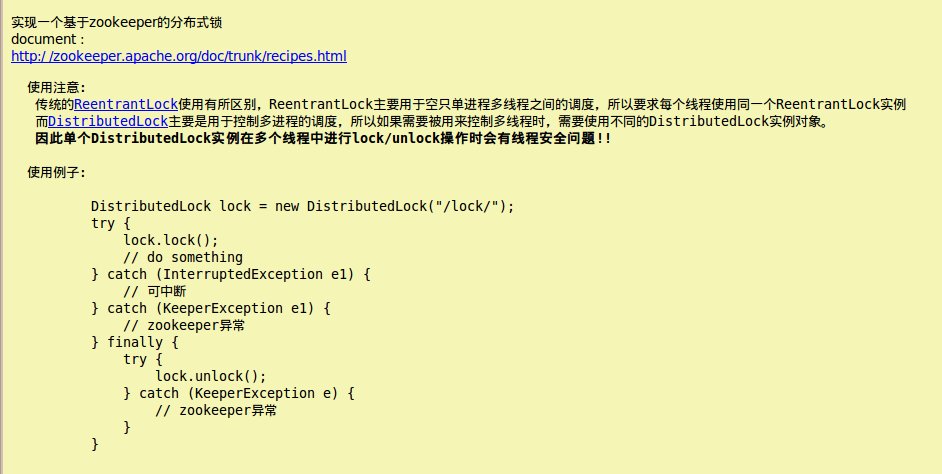

4 实现

1. DistributedLock.java源码:分布式锁

1 package com.king.lock;

2

3 import java.io.IOException;

4 import java.util.List;

5 import java.util.SortedSet;

6 import java.util.TreeSet;

7

8 import org.apache.commons.lang3.StringUtils;

9 import org.apache.zookeeper.*;

10 import org.apache.zookeeper.data.Stat;

11

12 /**

13 * Zookeeper 分布式锁

14 */

15 public class DistributedLock {

16

17 private static final int SESSION_TIMEOUT = 10000;

18

19 private static final int DEFAULT_TIMEOUT_PERIOD = 10000;

20

21 private static final String CONNECTION_STRING = "127.0.0.1:2180,127.0.0.1:2181,127.0.0.1:2182,127.0.0.1:2183";

22

23 private static final byte[] data = {0x12, 0x34};

24

25 private ZooKeeper zookeeper;

26

27 private String root;

28

29 private String id;

30

31 private LockNode idName;

32

33 private String ownerId;

34

35 private String lastChildId;

36

37 private Throwable other = null;

38

39 private KeeperException exception = null;

40

41 private InterruptedException interrupt = null;

42

43 public DistributedLock(String root) {

44 try {

45 this.zookeeper = new ZooKeeper(CONNECTION_STRING, SESSION_TIMEOUT, null);

46 this.root = root;

47 ensureExists(root);

48 } catch (IOException e) {

49 e.printStackTrace();

50 other = e;

51 }

52 }

53

54 /**

55 * 尝试获取锁操作,阻塞式可被中断

56 */

57 public void lock() throws Exception {

58 // 可能初始化的时候就失败了

59 if (exception != null) {

60 throw exception;

61 }

62

63 if (interrupt != null) {

64 throw interrupt;

65 }

66

67 if (other != null) {

68 throw new Exception("", other);

69 }

70

71 if (isOwner()) {// 锁重入

72 return;

73 }

74

75 BooleanMutex mutex = new BooleanMutex();

76 acquireLock(mutex);

77 // 避免zookeeper重启后导致watcher丢失,会出现死锁使用了超时进行重试

78 try {

79 // mutex.lockTimeOut(DEFAULT_TIMEOUT_PERIOD, TimeUnit.MICROSECONDS);// 阻塞等待值为true

80 mutex.lock();

81 } catch (Exception e) {

82 e.printStackTrace();

83 if (!mutex.state()) {

84 lock();

85 }

86 }

87

88 if (exception != null) {

89 throw exception;

90 }

91

92 if (interrupt != null) {

93 throw interrupt;

94 }

95

96 if (other != null) {

97 throw new Exception("", other);

98 }

99 }

100

101 /**

102 * 尝试获取锁对象, 不会阻塞

103 *

104 * @throws InterruptedException

105 * @throws KeeperException

106 */

107 public boolean tryLock() throws Exception {

108 // 可能初始化的时候就失败了

109 if (exception != null) {

110 throw exception;

111 }

112

113 if (isOwner()) { // 锁重入

114 return true;

115 }

116

117 acquireLock(null);

118

119 if (exception != null) {

120 throw exception;

121 }

122

123 if (interrupt != null) {

124 Thread.currentThread().interrupt();

125 }

126

127 if (other != null) {

128 throw new Exception("", other);

129 }

130

131 return isOwner();

132 }

133

134 /**

135 * 释放锁对象

136 */

137 public void unlock() throws KeeperException {

138 if (id != null) {

139 try {

140 zookeeper.delete(root + "/" + id, -1);

141 } catch (InterruptedException e) {

142 Thread.currentThread().interrupt();

143 } catch (KeeperException.NoNodeException e) {

144 // do nothing

145 } finally {

146 id = null;

147 }

148 } else {

149 //do nothing

150 }

151 }

152

153 /**

154 * 判断某path节点是否存在,不存在就创建

155 * @param path

156 */

157 private void ensureExists(final String path) {

158 try {

159 Stat stat = zookeeper.exists(path, false);

160 if (stat != null) {

161 return;

162 }

163 zookeeper.create(path, data, ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT);

164 } catch (KeeperException e) {

165 exception = e;

166 } catch (InterruptedException e) {

167 Thread.currentThread().interrupt();

168 interrupt = e;

169 }

170 }

171

172 /**

173 * 返回锁对象对应的path

174 */

175 public String getRoot() {

176 return root;

177 }

178

179 /**

180 * 判断当前是不是锁的owner

181 */

182 public boolean isOwner() {

183 return id != null && ownerId != null && id.equals(ownerId);

184 }

185

186 /**

187 * 返回当前的节点id

188 */

189 public String getId() {

190 return this.id;

191 }

192

193 // ===================== helper method =============================

194

195 /**

196 * 执行lock操作,允许传递watch变量控制是否需要阻塞lock操作

197 */

198 private Boolean acquireLock(final BooleanMutex mutex) {

199 try {

200 do {

201 if (id == null) { // 构建当前lock的唯一标识

202 long sessionId = zookeeper.getSessionId();

203 String prefix = "x-" + sessionId + "-";

204 // 如果第一次,则创建一个节点

205 String path = zookeeper.create(root + "/" + prefix, data, ZooDefs.Ids.OPEN_ACL_UNSAFE, CreateMode.EPHEMERAL_SEQUENTIAL);

206 int index = path.lastIndexOf("/");

207 id = StringUtils.substring(path, index + 1);

208 idName = new LockNode(id);

209 }

210

211 if (id != null) {

212 List<String> names = zookeeper.getChildren(root, false);

213 if (names.isEmpty()) {

214 id = null; // 异常情况,重新创建一个

215 } else {

216 // 对节点进行排序

217 SortedSet<LockNode> sortedNames = new TreeSet<>();

218 for (String name : names) {

219 sortedNames.add(new LockNode(name));

220 }

221

222 if (!sortedNames.contains(idName)) {

223 id = null;// 清空为null,重新创建一个

224 continue;

225 }

226

227 // 将第一个节点做为ownerId

228 ownerId = sortedNames.first().getName();

229 if (mutex != null && isOwner()) {

230 mutex.unlock();// 直接更新状态,返回

231 return true;

232 } else if (mutex == null) {

233 return isOwner();

234 }

235

236 SortedSet<LockNode> lessThanMe = sortedNames.headSet(idName);

237 if (!lessThanMe.isEmpty()) {

238 // 关注一下排队在自己之前的最近的一个节点

239 LockNode lastChildName = lessThanMe.last();

240 lastChildId = lastChildName.getName();

241 // 异步watcher处理

242 Stat stat = zookeeper.exists(root + "/" + lastChildId, new Watcher() {

243 publicvoidprocess(WatchedEvent event) {

244 acquireLock(mutex);

245 }

246 });

247

248 if (stat == null) {

249 acquireLock(mutex);// 如果节点不存在,需要自己重新触发一下,watcher不会被挂上去

250 }

251 } else {

252 if (isOwner()) {

253 mutex.unlock();

254 } else {

255 id = null;// 可能自己的节点已超时挂了,所以id和ownerId不相同

256 }

257 }

258 }

259 }

260 } while (id == null);

261 } catch (KeeperException e) {

262 exception = e;

263 if (mutex != null) {

264 mutex.unlock();

265 }

266 } catch (InterruptedException e) {

267 interrupt = e;

268 if (mutex != null) {

269 mutex.unlock();

270 }

271 } catch (Throwable e) {

272 other = e;

273 if (mutex != null) {

274 mutex.unlock();

275 }

276 }

277

278 if (isOwner() && mutex != null) {

279 mutex.unlock();

280 }

281 return Boolean.FALSE;

282 }

283 }

2. BooleanMutex.java源码:互斥信号共享锁

1 package com.king.lock;

2

3 import java.util.concurrent.TimeUnit;

4 import java.util.concurrent.TimeoutException;

5 import java.util.concurrent.locks.AbstractQueuedSynchronizer;

6

7 /**

8 * 互斥信号共享锁

9 */

10 public class BooleanMutex {

11

12 private Sync sync;

13

14 public BooleanMutex() {

15 sync = new Sync();

16 set(false);

17 }

18

19 /**

20 * 阻塞等待Boolean为true

21 * @throws InterruptedException

22 */

23 public void lock() throws InterruptedException {

24 sync.innerLock();

25 }

26

27 /**

28 * 阻塞等待Boolean为true,允许设置超时时间

29 * @param timeout

30 * @param unit

31 * @throws InterruptedException

32 * @throws TimeoutException

33 */

34 public void lockTimeOut(long timeout, TimeUnit unit) throws InterruptedException, TimeoutException {

35 sync.innerLock(unit.toNanos(timeout));

36 }

37

38 public void unlock(){

39 set(true);

40 }

41

42 /**

43 * 重新设置对应的Boolean mutex

44 * @param mutex

45 */

46 public void set(Boolean mutex) {

47 if (mutex) {

48 sync.innerSetTrue();

49 } else {

50 sync.innerSetFalse();

51 }

52 }

53

54 public boolean state() {

55 return sync.innerState();

56 }

57

58 /**

59 * 互斥信号共享锁

60 */

61 private final class Sync extends AbstractQueuedSynchronizer {

62 private static final long serialVersionUID = -7828117401763700385L;

63

64 /**

65 * 状态为1,则唤醒被阻塞在状态为FALSE的所有线程

66 */

67 private static final int TRUE = 1;

68 /**

69 * 状态为0,则当前线程阻塞,等待被唤醒

70 */

71 private static final int FALSE = 0;

72

73 /**

74 * 返回值大于0,则执行;返回值小于0,则阻塞

75 */

76 protected int tryAcquireShared(int arg) {

77 return getState() == 1 ? 1 : -1;

78 }

79

80 /**

81 * 实现AQS的接口,释放共享锁的判断

82 */

83 protected boolean tryReleaseShared(int ignore) {

84 // 始终返回true,代表可以release

85 return true;

86 }

87

88 privatebooleaninnerState() {

89 return getState() == 1;

90 }

91

92 privatevoidinnerLock()throws InterruptedException {

93 acquireSharedInterruptibly(0);

94 }

95

96 privatevoidinnerLock(long nanosTimeout)throws InterruptedException, TimeoutException {

97 if (!tryAcquireSharedNanos(0, nanosTimeout))

98 throw new TimeoutException();

99 }

100

101 privatevoidinnerSetTrue() {

102 for (;;) {

103 int s = getState();

104 if (s == TRUE) {

105 return; // 直接退出

106 }

107 if (compareAndSetState(s, TRUE)) {// cas更新状态,避免并发更新true操作

108 releaseShared(0);// 释放一下锁对象,唤醒一下阻塞的Thread

109 }

110 }

111 }

112

113 privatevoidinnerSetFalse() {

114 for (;;) {

115 int s = getState();

116 if (s == FALSE) {

117 return; //直接退出

118 }

119 if (compareAndSetState(s, FALSE)) {//cas更新状态,避免并发更新false操作

120 setState(FALSE);

121 }

122 }

123 }

124 }

125 }

3. 相关说明:

4. 测试类:

1 package com.king.lock;

2

3 import java.util.concurrent.CountDownLatch;

4 import java.util.concurrent.ExecutorService;

5 import java.util.concurrent.Executors;

6

7 import org.apache.zookeeper.KeeperException;

8

9 /**

10 * 分布式锁测试

11 * @author taomk

12 * @version 1.0

13 * @since 15-11-19 上午11:48

14 */

15 public class DistributedLockTest {

16

17 public static void main(String [] args) {

18 ExecutorService executor = Executors.newCachedThreadPool();

19 final int count = 50;

20 final CountDownLatch latch = new CountDownLatch(count);

21 for (int i = 0; i < count; i++) {

22 final DistributedLock node = new DistributedLock("/locks");

23 executor.submit(new Runnable() {

24 public void run() {

25 try {

26 Thread.sleep(1000);

27 // node.tryLock(); // 无阻塞获取锁

28 node.lock(); // 阻塞获取锁

29 Thread.sleep(100);

30

31 System.out.println("id: " + node.getId() + " is leader: " + node.isOwner());

32 } catch (InterruptedException e) {

33 e.printStackTrace();

34 } catch (KeeperException e) {

35 e.printStackTrace();

36 } catch (Exception e) {

37 e.printStackTrace();

38 } finally {

39 latch.countDown();

40 try {

41 node.unlock();

42 } catch (KeeperException e) {

43 e.printStackTrace();

44 }

45 }

46

47 }

48 });

49 }

50

51 try {

52 latch.await();

53 } catch (InterruptedException e) {

54 e.printStackTrace();

55 }

56

57 executor.shutdown();

58 }

59 }

60 控制台输出:

61

62 id: x-239027745716109354-0000000248 is leader: true

63 id: x-22854963329433645-0000000249 is leader: true

64 id: x-22854963329433646-0000000250 is leader: true

65 id: x-166970151413415997-0000000251 is leader: true

66 id: x-166970151413415998-0000000252 is leader: true

67 id: x-166970151413415999-0000000253 is leader: true

68 id: x-166970151413416000-0000000254 is leader: true

69 id: x-166970151413416001-0000000255 is leader: true

70 id: x-166970151413416002-0000000256 is leader: true

71 id: x-22854963329433647-0000000257 is leader: true

72 id: x-239027745716109355-0000000258 is leader: true

73 id: x-166970151413416003-0000000259 is leader: true

74 id: x-94912557367427124-0000000260 is leader: true

75 id: x-22854963329433648-0000000261 is leader: true

76 id: x-239027745716109356-0000000262 is leader: true

77 id: x-239027745716109357-0000000263 is leader: true

78 id: x-166970151413416004-0000000264 is leader: true

79 id: x-239027745716109358-0000000265 is leader: true

80 id: x-239027745716109359-0000000266 is leader: true

81 id: x-22854963329433649-0000000267 is leader: true

82 id: x-22854963329433650-0000000268 is leader: true

83 id: x-94912557367427125-0000000269 is leader: true

84 id: x-22854963329433651-0000000270 is leader: true

85 id: x-94912557367427126-0000000271 is leader: true

86 id: x-239027745716109360-0000000272 is leader: true

87 id: x-94912557367427127-0000000273 is leader: true

88 id: x-94912557367427128-0000000274 is leader: true

89 id: x-166970151413416005-0000000275 is leader: true

90 id: x-94912557367427129-0000000276 is leader: true

91 id: x-166970151413416006-0000000277 is leader: true

92 id: x-94912557367427130-0000000278 is leader: true

93 id: x-94912557367427131-0000000279 is leader: true

94 id: x-239027745716109361-0000000280 is leader: true

95 id: x-239027745716109362-0000000281 is leader: true

96 id: x-166970151413416007-0000000282 is leader: true

97 id: x-94912557367427132-0000000283 is leader: true

98 id: x-22854963329433652-0000000284 is leader: true

99 id: x-166970151413416008-0000000285 is leader: true

100 id: x-239027745716109363-0000000286 is leader: true

101 id: x-239027745716109364-0000000287 is leader: true

102 id: x-166970151413416009-0000000288 is leader: true

103 id: x-166970151413416010-0000000289 is leader: true

104 id: x-239027745716109365-0000000290 is leader: true

105 id: x-94912557367427133-0000000291 is leader: true

106 id: x-239027745716109366-0000000292 is leader: true

107 id: x-94912557367427134-0000000293 is leader: true

108 id: x-22854963329433653-0000000294 is leader: true

109 id: x-94912557367427135-0000000295 is leader: true

110 id: x-239027745716109367-0000000296 is leader: true

111 id: x-239027745716109368-0000000297 is leader: true

5 升级版

实现了一个分布式lock后,可以解决多进程之间的同步问题,但设计多线程+多进程的lock控制需求,单jvm中每个线程都和zookeeper进行网络交互成本就有点高了,所以基于DistributedLock,实现了一个分布式二层锁。

大致原理就是ReentrantLock 和 DistributedLock的一个结合:

单jvm的多线程竞争时,首先需要先拿到第一层的ReentrantLock的锁;拿到锁之后这个线程再去和其他JVM的线程竞争锁,最后拿到之后锁之后就开始处理任务;

锁的释放过程是一个反方向的操作,先释放DistributedLock,再释放ReentrantLock。 可以思考一下,如果先释放ReentrantLock,假如这个JVM ReentrantLock竞争度比较高,一直其他JVM的锁竞争容易被饿死。

1. DistributedReentrantLock.java源码:多进程+多线程分布式锁

1 package com.king.lock;

2

3 import java.text.MessageFormat;

4 import java.util.concurrent.locks.ReentrantLock;

5

6 import org.apache.zookeeper.KeeperException;

7

8 /**

9 * 多进程+多线程分布式锁

10 */

11 public class DistributedReentrantLock extends DistributedLock {

12

13 private static final String ID_FORMAT = "Thread[{0}] Distributed[{1}]";

14 private ReentrantLock reentrantLock = new ReentrantLock();

15

16 public DistributedReentrantLock(String root) {

17 super(root);

18 }

19

20 public void lock() throws Exception {

21 reentrantLock.lock();//多线程竞争时,先拿到第一层锁

22 super.lock();

23 }

24

25 public boolean tryLock() throws Exception {

26 //多线程竞争时,先拿到第一层锁

27 return reentrantLock.tryLock() && super.tryLock();

28 }

29

30 public void unlock() throws KeeperException {

31 super.unlock();

32 reentrantLock.unlock();//多线程竞争时,释放最外层锁

33 }

34

35 @Override

36 public String getId() {

37 return MessageFormat.format(ID_FORMAT, Thread.currentThread().getId(), super.getId());

38 }

39

40 @Override

41 public boolean isOwner() {

42 return reentrantLock.isHeldByCurrentThread() && super.isOwner();

43 }

44 }

2. 测试代码:

1 package com.king.lock;

2

3 import java.util.concurrent.CountDownLatch;

4 import java.util.concurrent.ExecutorService;

5 import java.util.concurrent.Executors;

6

7 import org.apache.zookeeper.KeeperException;

8

9 /**

10 * @author taomk

11 * @version 1.0

12 * @since 15-11-23 下午12:15

13 */

14 public class DistributedReentrantLockTest {

15

16 public static void main(String [] args) {

17 ExecutorService executor = Executors.newCachedThreadPool();

18 final int count = 50;

19 final CountDownLatch latch = new CountDownLatch(count);

20

21 final DistributedReentrantLock lock = new DistributedReentrantLock("/locks"); //单个锁

22 for (int i = 0; i < count; i++) {

23 executor.submit(new Runnable() {

24 public void run() {

25 try {

26 Thread.sleep(1000);

27 lock.lock();

28 Thread.sleep(100);

29

30 System.out.println("id: " + lock.getId() + " is leader: " + lock.isOwner());

31 } catch (Exception e) {

32 e.printStackTrace();

33 } finally {

34 latch.countDown();

35 try {

36 lock.unlock();

37 } catch (KeeperException e) {

38 e.printStackTrace();

39 }

40 }

41 }

42 });

43 }

44

45 try {

46 latch.await();

47 } catch (InterruptedException e) {

48 e.printStackTrace();

49 }

50

51 executor.shutdown();

52 }

53 }

6 最后

其实再可以发散一下,实现一个分布式的read/write lock,也差不多就是这个理了。大致思路:

- 竞争资源标示:

read_自增id , write_自增id; - 首先按照自增id进行排序,

如果队列的前边都是read标识,对应的所有read都获得锁。如果队列的前边是write标识,第一个write节点获取锁; - watcher监听:

read监听距离自己最近的一个write节点的exist,write监听距离自己最近的一个节点(read或者write节点);

来源:https://www.cnblogs.com/wxd0108/p/6264748.html