Introduction to Sqoop:

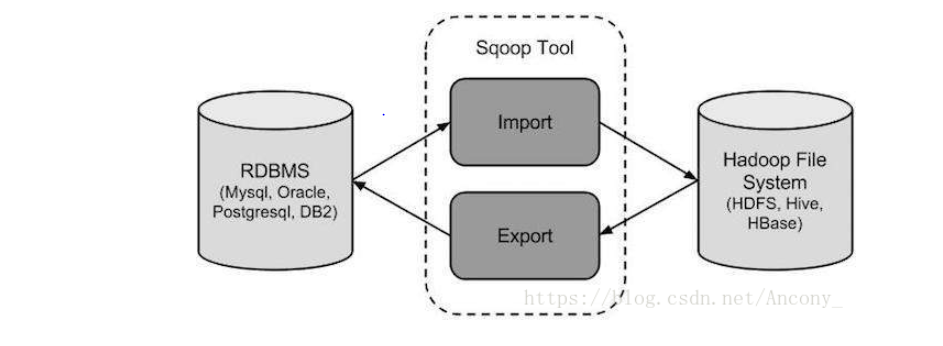

Sqoop is a tool designed to transfer data between Hadoop and relational databases or mainframes. You can use Sqoop to import data from a relational database management system (RDBMS) such as MySQL or Oracle or a mainframe into the Hadoop Distributed File System (HDFS), transform the data in Hadoop MapReduce, and then export the data back into an RDBMS.

Sqoop automates most of this process, relying on the database to describe the schema for the data to be imported. Sqoop uses MapReduce to import and export the data, which provides parallel operation as well as fault tolerance.

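In short, Sqoop was designed from the outset as a data transfer tool. You can use it to move data between Hadoop and a relational database (RDBMS): for example, importing MySQL or Oracle data into Hadoop, and exporting data from Hadoop back into the RDBMS.

Sqoop simplifies the import and export workflow. Because it moves the data with MapReduce jobs, the transfers are fault tolerant.

Traditional application systems, in which applications interact with a relational database through an RDBMS, are one source of big data. That data lives on relational database servers, stored in relational structures. When the storage and analysis tools of the Hadoop ecosystem appeared (MapReduce, Hive, HBase, Cassandra, Pig, and others), they needed a tool to interact with relational database servers in order to import and export the data residing there. Sqoop fills that role in the Hadoop ecosystem, providing a practical bridge between relational database servers and Hadoop's HDFS.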
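The import-then-export round trip described above can be sketched as two Sqoop commands. This is only an illustration under assumptions: the host `hd1` with port 3306, the database `testdb`, the user `sqoop_user`, and the tables `emp` and `emp_result` are hypothetical and do not come from this post. The `run` helper is also introduced here for illustration; by default it prints each command instead of executing it, so the sketch can be read without a cluster.

```shell
#!/bin/sh
# Hypothetical connection details (not from this post); replace with your own.
DB_URL="jdbc:mysql://hd1:3306/testdb"
DB_USER="sqoop_user"

# DRY_RUN=1 (the default) prints each command instead of executing it.
DRY_RUN="${DRY_RUN:-1}"
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Import: copy the RDBMS table "emp" into an HDFS directory with 4 map tasks.
# -P makes Sqoop prompt for the password on the console.
run sqoop import --connect "$DB_URL" --username "$DB_USER" -P \
  --table emp --target-dir /user/hadoop/emp --num-mappers 4

# Export: push an HDFS directory back into an existing RDBMS table.
run sqoop export --connect "$DB_URL" --username "$DB_USER" -P \
  --table emp_result --export-dir /user/hadoop/emp_result
```

Setting `DRY_RUN=0` before running the script would execute the commands for real, which requires the tables and directories to exist.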

Environment:

[hadoop@hd1 conf]$ more /etc/redhat-release

Red Hat Enterprise Linux Server release 6.6 (Santiago)

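As the introduction shows, Sqoop is a data transfer tool, so a Sqoop deployment needs data on both sides. Here Hadoop stores the structured data and MySQL stores the relational data; Hive is used to make the data in Hadoop easier to work with.

Hadoop: Hadoop 2.6.0-cdh5.7.0

Start all Hadoop components with start-all.sh: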

[hadoop@hd1 ~]$ start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

18/10/30 19:36:44 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Starting namenodes on [hd1]

hd1: starting namenode, logging to /home/hadoop/hadoop-2.6.0-cdh5.7.0/logs/hadoop-hadoop-namenode-hd1.out

hd4: starting datanode, logging to /home/hadoop/hadoop-2.6.0-cdh5.7.0/logs/hadoop-hadoop-datanode-hd4.out

hd3: starting datanode, logging to /home/hadoop/hadoop-2.6.0-cdh5.7.0/logs/hadoop-hadoop-datanode-hd3.out

hd2: starting datanode, logging to /home/hadoop/hadoop-2.6.0-cdh5.7.0/logs/hadoop-hadoop-datanode-hd2.out

Starting secondary namenodes [hd2]

hd2: starting secondarynamenode, logging to /home/hadoop/hadoop-2.6.0-cdh5.7.0/logs/hadoop-hadoop-secondarynamenode-hd2.out

18/10/30 19:37:04 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

starting yarn daemons

starting resourcemanager, logging to /home/hadoop/hadoop-2.6.0-cdh5.7.0/logs/yarn-hadoop-resourcemanager-hd1.out

hd2: starting nodemanager, logging to /home/hadoop/hadoop-2.6.0-cdh5.7.0/logs/yarn-hadoop-nodemanager-hd2.out

hd3: starting nodemanager, logging to /home/hadoop/hadoop-2.6.0-cdh5.7.0/logs/yarn-hadoop-nodemanager-hd3.out

hd4: starting nodemanager, logging to /home/hadoop/hadoop-2.6.0-cdh5.7.0/logs/yarn-hadoop-nodemanager-hd4.out
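The log above notes that `start-all.sh` is deprecated in favor of `start-dfs.sh` and `start-yarn.sh`. A minimal sketch of that alternative follows. The helper `missing_cmds` is a hypothetical name introduced here, and `jps` is assumed to be available from the JDK.

```shell
#!/bin/sh
# missing_cmds: print each given command name that is not found on PATH.
missing_cmds() {
  for c in "$@"; do
    command -v "$c" >/dev/null 2>&1 || echo "$c"
  done
}

missing=$(missing_cmds start-dfs.sh start-yarn.sh jps)
if [ -n "$missing" ]; then
  # Left unquoted on purpose so multiple names print on one line.
  echo "not on PATH:" $missing
else
  start-dfs.sh    # NameNode, DataNodes, SecondaryNameNode
  start-yarn.sh   # ResourceManager, NodeManagers
  jps             # list the Java daemons running on this node
fi
```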

MySQL: 5.7.20 MySQL Community Server (GPL)

/etc/init.d/mysqld start

Hive: hive-1.1.0-cdh5.7.0

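How does Sqoop work?

The figure below describes the Sqoop workflow.

First, a Hadoop cluster must be deployed; the deployment guide is at https://my.oschina.net/u/3862440/blog/1862524

The Hive deployment guide is at https://my.oschina.net/u/3862440/blog/2251273

Sqoop deployment:

1. Extract the installation package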

tar -xvf sqoop-1.4.6-cdh5.7.0.tar.gz -C /home/hadoop/

(http://archive-primary.cloudera.com/cdh5/cdh/5/)

2. Edit sqoop-env.sh

#Set path to where bin/hadoop is available

export HADOOP_COMMON_HOME=/home/hadoop/hadoop-2.6.0-cdh5.7.0

#Set path to where hadoop-*-core.jar is available

export HADOOP_MAPRED_HOME=/home/hadoop/hadoop-2.6.0-cdh5.7.0

#set the path to where bin/hbase is available

#export HBASE_HOME=

#Set the path to where bin/hive is available

export HIVE_HOME=/home/hadoop/hive-1.1.0-cdh5.7.0
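As a quick sanity check, it may help to confirm that the directories referenced in sqoop-env.sh actually exist. In Sqoop 1.4.x distributions the file is usually created by copying conf/sqoop-env-template.sh, although the post does not show that step. The helper `check_home` below is a hypothetical name introduced for illustration.

```shell
#!/bin/sh
# check_home NAME DIR: report whether DIR exists; returns non-zero if it does not.
check_home() {
  if [ -d "$2" ]; then
    echo "$1 ok: $2"
  else
    echo "$1 missing: $2"
    return 1
  fi
}

# Paths copied from the sqoop-env.sh settings above.
rc=0
check_home HADOOP_COMMON_HOME /home/hadoop/hadoop-2.6.0-cdh5.7.0 || rc=1
check_home HADOOP_MAPRED_HOME /home/hadoop/hadoop-2.6.0-cdh5.7.0 || rc=1
check_home HIVE_HOME /home/hadoop/hive-1.1.0-cdh5.7.0 || rc=1

if [ "$rc" -eq 0 ]; then
  echo "all paths present"
else
  echo "fix sqoop-env.sh before continuing"
fi
```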

3. Install the JDBC driver

cp mysql-connector-java.jar /home/hadoop/sqoop-1.4.6-cdh5.7.0/lib/

4. Add Sqoop to PATH

export SQOOP_HOME=/home/hadoop/sqoop-1.4.6-cdh5.7.0

export PATH=$PATH:$SQOOP_HOME/bin
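The two export lines only last for the current shell session unless they are written to a startup file. The post does not say which file was used, so the sketch below makes no choice for you: it defines a hypothetical helper, `add_sqoop_env`, that appends the lines to whichever file you pass and skips the append if they are already there.

```shell
#!/bin/sh
# add_sqoop_env FILE: append the Sqoop exports to FILE unless already present.
add_sqoop_env() {
  grep -q 'SQOOP_HOME=' "$1" 2>/dev/null && return 0
  cat >> "$1" <<'EOF'
export SQOOP_HOME=/home/hadoop/sqoop-1.4.6-cdh5.7.0
export PATH=$PATH:$SQOOP_HOME/bin
EOF
}
```

Assuming `~/.bash_profile` is the startup file in use, a typical call would be `add_sqoop_env ~/.bash_profile` followed by `. ~/.bash_profile` to load the settings into the current session.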

5. Verify the version

[hadoop@hd1 conf]$ sqoop-version

18/10/30 19:52:06 INFO sqoop.Sqoop: Running Sqoop version: 1.4.6-cdh5.7.0

Sqoop 1.4.6-cdh5.7.0

git commit id

Compiled by jenkins on Wed Mar 23 11:30:51 PDT 2016
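The version banner only shows that the Sqoop launcher works. To also confirm that the JDBC driver copied in step 3 can reach MySQL, `sqoop list-databases` is a common next check. The sketch below assumes MySQL listens on `hd1:3306` and that a `root` login is permitted from this host; neither detail is stated in the post.

```shell
#!/bin/sh
# Assumed connection details (not stated in this post).
MYSQL_URL="jdbc:mysql://hd1:3306/"
MYSQL_USER="root"

# -P makes Sqoop prompt for the password interactively.
LIST_CMD="sqoop list-databases --connect $MYSQL_URL --username $MYSQL_USER -P"

# Confirm the driver jar from step 3 is where Sqoop looks for it.
ls /home/hadoop/sqoop-1.4.6-cdh5.7.0/lib/mysql-connector-java*.jar 2>/dev/null \
  || echo "JDBC driver jar not found in Sqoop lib/"

if command -v sqoop >/dev/null 2>&1; then
  # Unquoted on purpose: the string is split into the command and its arguments.
  $LIST_CMD
else
  echo "sqoop is not on PATH; check step 4"
fi
```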

Source: oschina

Link: https://my.oschina.net/u/3862440/blog/2254670