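To understand URP, you first need to understand SRP, and to understand SRP you first need to understand what a render pipeline is. So let's start with what a RenderPipeline actually is.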

RenderPipeline

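A game scene contains many kinds of things to draw: opaque objects (Opaque Object), transparent objects (Transparencies), and sometimes a screen-space depth texture or post-processing effects. When each of these is drawn, and what gets drawn at which stage, is decided by the render pipeline.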

Diagram of the default render pipeline

The diagram above shows Unity's default render pipeline. Let's briefly walk through the Forward Rendering path.

In forward rendering, when a camera starts drawing a frame, if you have set the following on that camera in code:

    cam.depthTextureMode = DepthTextureMode.Depth;

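then that camera's render pipeline executes the Depth Texture step. For every shader in the scene with RenderType=Opaque, the pass tagged Tags{"LightMode" = "ShadowCaster"} is executed once and its result is written into the depth texture. So when a camera enables the depth texture, its draw call count goes up (the draw calls for opaque objects roughly double). If the depth texture is not enabled, the pipeline skips this step.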

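So shader tags such as Tags { "LightMode" "RenderType" "Queue" } are tightly coupled to the render pipeline. When drawing, Unity uses these tags to place each pass of a shader into the matching step of the diagram above. That is where drawing orders such as "opaque objects first, then transparent objects" come from.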

In short, a render pipeline defines a drawing order, and for every frame the camera draws the objects in the scene one by one in that order.

Scriptable Render Pipeline (SRP)

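Put simply, SRP means that the previously fixed render pipeline can now be organized by you. If you want, you can draw opaque objects after transparent ones, or insert as many extra render steps as you need among the steps above to get the effect you want.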
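To make the idea of a pipeline whose order you control more concrete, here is a minimal sketch in plain C# with no Unity dependency. The class PipelineOrderSketch, the step names, and the simplified default order are all assumptions made for illustration; they are not Unity APIs.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical model: a pipeline is just an ordered list of named steps.
public static class PipelineOrderSketch
{
    // A simplified stand-in for the fixed order of a built-in pipeline.
    public static List<string> DefaultOrder() =>
        new List<string> { "DepthTexture", "Opaque", "Skybox", "Transparent", "PostProcess" };

    // With a scriptable pipeline the order is data you control:
    // here a custom step is inserted right after the opaque step.
    public static List<string> WithCustomStep(string customStep)
    {
        var steps = DefaultOrder();
        int opaqueIndex = steps.IndexOf("Opaque");
        steps.Insert(opaqueIndex + 1, customStep);
        return steps;
    }
}
```

Calling PipelineOrderSketch.WithCustomStep("Outline") yields DepthTexture, Opaque, Outline, Skybox, Transparent, PostProcess.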

Besides the freedom to define the pipeline, SRP is also tied to a number of newer optimizations, such as the SRP Batcher.

URP/LWRP

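URP (formerly LWRP, the Lightweight Render Pipeline) is an SRP defined by Unity that suits most devices. Its implementation details can be read directly in the source code.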

File structure

After you add URP to the project, you will see the following structure:

The entry point of URP is the function Universal RP/Runtime/UniversalRenderPipeline.Render:

    protected override void Render(ScriptableRenderContext renderContext, Camera[] cameras)
    {
        BeginFrameRendering(renderContext, cameras);
        GraphicsSettings.lightsUseLinearIntensity = (QualitySettings.activeColorSpace == ColorSpace.Linear);
        GraphicsSettings.useScriptableRenderPipelineBatching = asset.useSRPBatcher;
        SetupPerFrameShaderConstants();
        SortCameras(cameras); // sort the cameras by camera depth
        foreach (Camera camera in cameras)
        {
            BeginCameraRendering(renderContext, camera);
    #if VISUAL_EFFECT_GRAPH_0_0_1_OR_NEWER
            // It should be called before culling to prepare material. When there isn't any VisualEffect component, this method has no effect.
            VFX.VFXManager.PrepareCamera(camera);
    #endif
            RenderSingleCamera(renderContext, camera); // render the cameras one by one
            EndCameraRendering(renderContext, camera);
        }
        EndFrameRendering(renderContext, cameras);
    }

The key steps are sorting the cameras by depth and then calling RenderSingleCamera to draw each camera in turn.
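The sort-then-render loop can be sketched without Unity as follows. CameraStub and CameraLoopSketch are hypothetical names, and the sketch assumes that a lower depth value means the camera is rendered earlier; it does not reproduce URP's actual SortCameras implementation.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for a camera: only the fields the loop needs.
public sealed class CameraStub
{
    public string Name { get; }
    public float Depth { get; }
    public CameraStub(string name, float depth) { Name = name; Depth = depth; }
}

public static class CameraLoopSketch
{
    // Sorts the cameras by depth, then "renders" them one by one.
    // Rendering is modelled as recording the camera name.
    public static List<string> RenderAll(IEnumerable<CameraStub> cameras)
    {
        var rendered = new List<string>();
        foreach (var cam in cameras.OrderBy(c => c.Depth)) // lowest depth first
            rendered.Add(cam.Name);
        return rendered;
    }
}
```

With cameras UI (depth 10), Main (depth 0) and Sky (depth -1), RenderAll returns Sky, Main, UI, so a UI camera with the highest depth is drawn last, on top of the others.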

RenderSingleCamera

    public static void RenderSingleCamera(ScriptableRenderContext context, Camera camera)
    {
        // Get the camera's culling parameters.
        if (!camera.TryGetCullingParameters(IsStereoEnabled(camera), out var cullingParameters))
            return;
        var settings = asset;
        UniversalAdditionalCameraData additionalCameraData = null;
        if (camera.cameraType == CameraType.Game || camera.cameraType == CameraType.VR)
            camera.gameObject.TryGetComponent(out additionalCameraData);
        // Fill in the camera data from the pipeline asset and UniversalAdditionalCameraData (the settings on the camera).
        InitializeCameraData(settings, camera, additionalCameraData, out var cameraData);
        // Write the camera parameters into shared shader variables, i.e. some of the built-in variables used in shaders.
        SetupPerCameraShaderConstants(cameraData);
        // Get the ScriptableRenderer used by this camera, i.e. the custom SRP pipeline.
        // If the camera defines its own renderer, use that one; otherwise use the one set on the asset.
        // URP uses ForwardRenderer by default; this is where the redefined render pipeline is plugged in.
        ScriptableRenderer renderer = (additionalCameraData != null) ? additionalCameraData.scriptableRenderer : settings.scriptableRenderer;
        if (renderer == null)
        {
            Debug.LogWarning(string.Format("Trying to render {0} with an invalid renderer. Camera rendering will be skipped.", camera.name));
            return;
        }
        // Set the label name shown in the Frame Debugger.
        string tag = (asset.debugLevel >= PipelineDebugLevel.Profiling) ? camera.name: k_RenderCameraTag;
        CommandBuffer cmd = CommandBufferPool.Get(tag);
        using (new ProfilingSample(cmd, tag))
        {
            renderer.Clear();
            // Set up cullingParameters from cameraData.
            renderer.SetupCullingParameters(ref cullingParameters, ref cameraData);
            context.ExecuteCommandBuffer(cmd);
            cmd.Clear();
    #if UNITY_EDITOR
            // Emit scene view UI
            if (cameraData.isSceneViewCamera)
                ScriptableRenderContext.EmitWorldGeometryForSceneView(camera);
    #endif
            // Compute the culling results from the culling parameters. Every render step in the
            // pipeline picks the elements it needs to render from these results.
            var cullResults = context.Cull(ref cullingParameters);
            // Put all the parameters gathered above into renderingData.
            InitializeRenderingData(settings, ref cameraData, ref cullResults, out var renderingData);
            // Set up everything in the pipeline.
            renderer.Setup(context, ref renderingData);
            // Execute the pipeline's steps one by one.
            renderer.Execute(context, ref renderingData);
        }
        context.ExecuteCommandBuffer(cmd);
        CommandBufferPool.Release(cmd);
        context.Submit();
    }

A few things in this function are worth explaining.

SetupPerCameraShaderConstants sets several built-in variables that shaders commonly use. The shader property IDs for these variables are looked up by name like this:

    PerCameraBuffer._InvCameraViewProj = Shader.PropertyToID("_InvCameraViewProj");
    PerCameraBuffer._ScreenParams = Shader.PropertyToID("_ScreenParams");
    PerCameraBuffer._ScaledScreenParams = Shader.PropertyToID("_ScaledScreenParams");
    PerCameraBuffer._WorldSpaceCameraPos = Shader.PropertyToID("_WorldSpaceCameraPos");

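Previously Unity did all of this behind the scenes; now it is all visible. For every built-in variable a shader uses, you can find where it comes from and which data it is set from.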

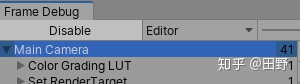

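The line string tag = (asset.debugLevel >= PipelineDebugLevel.Profiling) ? camera.name: k_RenderCameraTag; determines the top-level name shown in the Frame Debugger. If you set the DebugLevel (to Profiling or higher, as the condition shows), you can see the render steps of each camera under that camera's name in the Frame Debugger.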

The two most important steps in this function are:

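    // Set up everything in the pipeline.
    renderer.Setup(context, ref renderingData);
    // Execute the pipeline's steps one by one.
    renderer.Execute(context, ref renderingData);

This is where execution enters the ScriptableRenderer, which in URP is the custom render pipeline ForwardRenderer.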

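ForwardRenderer defines a set of ScriptableRenderPass objects. A ScriptableRenderPass corresponds to one step of the render pipeline. For example, m_DepthPrepass corresponds to the Depth Texture step in the diagram at the beginning, and m_RenderOpaqueForwardPass corresponds to the Opaque Object step of the default pipeline.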

The Setup function organizes these passes and decides in which order they make up the render pipeline. Here are some excerpts.

    public override void Setup(ScriptableRenderContext context, ref RenderingData renderingData)
    {
        ...
        // These rendererFeatures are configured in the renderer data asset. If we want to add
        // something to the pipeline ourselves, we write our own render pass and add it to one of
        // these features. This is where the Forward renderer pulls our own passes in.
        for (int i = 0; i < rendererFeatures.Count; ++i)
        {
            rendererFeatures[i].AddRenderPasses(this, ref renderingData);
        }
        ...
        // Here the individual steps are added to the pipeline; each of them shows up in the Frame Debugger.
        if (mainLightShadows)
            EnqueuePass(m_MainLightShadowCasterPass);
        if (additionalLightShadows)
            EnqueuePass(m_AdditionalLightsShadowCasterPass);
        if (requiresDepthPrepass)
        {
            m_DepthPrepass.Setup(cameraTargetDescriptor, m_DepthTexture);
            EnqueuePass(m_DepthPrepass);
        }
        if (resolveShadowsInScreenSpace)
        {
            m_ScreenSpaceShadowResolvePass.Setup(cameraTargetDescriptor);
            EnqueuePass(m_ScreenSpaceShadowResolvePass);
        }
        if (postProcessEnabled)
        {
            m_ColorGradingLutPass.Setup(m_ColorGradingLut);
            EnqueuePass(m_ColorGradingLutPass);
        }
        EnqueuePass(m_RenderOpaqueForwardPass);
        if (camera.clearFlags == CameraClearFlags.Skybox && RenderSettings.skybox != null)
            EnqueuePass(m_DrawSkyboxPass);
        // If a depth texture was created we necessarily need to copy it, otherwise we could have render it to a renderbuffer
        if (createDepthTexture)
        {
            m_CopyDepthPass.Setup(m_ActiveCameraDepthAttachment, m_DepthTexture);
            EnqueuePass(m_CopyDepthPass);
        }
        if (renderingData.cameraData.requiresOpaqueTexture)
        {
            // TODO: Downsampling method should be store in the renderer instead of in the asset.
            // We need to migrate this data to renderer. For now, we query the method in the active asset.
            Downsampling downsamplingMethod = UniversalRenderPipeline.asset.opaqueDownsampling;
            m_CopyColorPass.Setup(m_ActiveCameraColorAttachment.Identifier(), m_OpaqueColor, downsamplingMethod);
            EnqueuePass(m_CopyColorPass);
        }
        EnqueuePass(m_RenderTransparentForwardPass);
        EnqueuePass(m_OnRenderObjectCallbackPass);
        bool afterRenderExists = renderingData.cameraData.captureActions != null ||
                                 hasAfterRendering;
        bool requiresFinalPostProcessPass = postProcessEnabled &&
                                 renderingData.cameraData.antialiasing == AntialiasingMode.FastApproximateAntialiasing;
        // if we have additional filters
        // we need to stay in a RT
        if (afterRenderExists)
        {
            bool willRenderFinalPass = (m_ActiveCameraColorAttachment != RenderTargetHandle.CameraTarget);
            // perform post with src / dest the same
            if (postProcessEnabled)
            {
                m_PostProcessPass.Setup(cameraTargetDescriptor, m_ActiveCameraColorAttachment, m_AfterPostProcessColor, m_ActiveCameraDepthAttachment, m_ColorGradingLut, requiresFinalPostProcessPass, !willRenderFinalPass);
                EnqueuePass(m_PostProcessPass);
            }
            //now blit into the final target
            if (m_ActiveCameraColorAttachment != RenderTargetHandle.CameraTarget)
            {
                if (renderingData.cameraData.captureActions != null)
                {
                    m_CapturePass.Setup(m_ActiveCameraColorAttachment);
                    EnqueuePass(m_CapturePass);
                }
                if (requiresFinalPostProcessPass)
                {
                    m_FinalPostProcessPass.SetupFinalPass(m_ActiveCameraColorAttachment);
                    EnqueuePass(m_FinalPostProcessPass);
                }
                else
                {
                    m_FinalBlitPass.Setup(cameraTargetDescriptor, m_ActiveCameraColorAttachment);
                    EnqueuePass(m_FinalBlitPass);
                }
            }
        }
        else
        {
            if (postProcessEnabled)
            {
                if (requiresFinalPostProcessPass)
                {
                    m_PostProcessPass.Setup(cameraTargetDescriptor, m_ActiveCameraColorAttachment, m_AfterPostProcessColor, m_ActiveCameraDepthAttachment, m_ColorGradingLut, true, false);
                    EnqueuePass(m_PostProcessPass);
                    m_FinalPostProcessPass.SetupFinalPass(m_AfterPostProcessColor);
                    EnqueuePass(m_FinalPostProcessPass);
                }
                else
                {
                    m_PostProcessPass.Setup(cameraTargetDescriptor, m_ActiveCameraColorAttachment, RenderTargetHandle.CameraTarget, m_ActiveCameraDepthAttachment, m_ColorGradingLut, false, true);
                    EnqueuePass(m_PostProcessPass);
                }
            }
            else if (m_ActiveCameraColorAttachment != RenderTargetHandle.CameraTarget)
            {
                m_FinalBlitPass.Setup(cameraTargetDescriptor, m_ActiveCameraColorAttachment);
                EnqueuePass(m_FinalBlitPass);
            }
        }
    #if UNITY_EDITOR
        if (renderingData.cameraData.isSceneViewCamera)
        {
            m_SceneViewDepthCopyPass.Setup(m_DepthTexture);
            EnqueuePass(m_SceneViewDepthCopyPass);
        }
    #endif
    }

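In summary, Setup() decides which steps the render pipeline contains and in which order they are rendered. How each step renders, and what it renders, is defined by that step's own pass.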

    public void EnqueuePass(ScriptableRenderPass pass)
    {
        m_ActiveRenderPassQueue.Add(pass);
    }

EnqueuePass simply adds these passes to m_ActiveRenderPassQueue. The Execute part that follows then runs every pass in the queue, drawing whatever each pass is responsible for.
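The interplay between renderer features, Setup and the pass queue can be modelled in a few lines of plain C#. This is only a sketch: RendererStub, IFeatureStub and OutlineFeature are hypothetical names, passes are reduced to strings, and the three built-in steps are a simplification of the excerpt above.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a renderer feature: it may add passes of its own.
public interface IFeatureStub
{
    void AddRenderPasses(RendererStub renderer);
}

// Hypothetical stand-in for a renderer; a pass is reduced to its name.
public sealed class RendererStub
{
    private readonly List<IFeatureStub> features = new List<IFeatureStub>();
    public List<string> ActiveQueue { get; } = new List<string>();

    public void AddFeature(IFeatureStub feature) => features.Add(feature);
    public void EnqueuePass(string pass) => ActiveQueue.Add(pass);

    // Mirrors the shape of Setup: features first, then the built-in steps,
    // some of which are only enqueued when a condition holds.
    public void Setup(bool requiresDepthPrepass)
    {
        ActiveQueue.Clear();
        foreach (var feature in features)
            feature.AddRenderPasses(this);
        if (requiresDepthPrepass)
            EnqueuePass("DepthPrepass");
        EnqueuePass("Opaque");
        EnqueuePass("Transparent");
    }
}

// A user-defined feature that contributes one extra pass.
public sealed class OutlineFeature : IFeatureStub
{
    public void AddRenderPasses(RendererStub renderer) => renderer.EnqueuePass("Outline");
}
```

After adding an OutlineFeature and calling Setup(true), the queue holds Outline, DepthPrepass, Opaque, Transparent. That is plain insertion order; in the real renderer the queue is sorted afterwards, as the Execute code that follows shows.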

    public void Execute(ScriptableRenderContext context, ref RenderingData renderingData)
    {
        ...
        // Sort all the passes in m_ActiveRenderPassQueue.
        SortStable(m_ActiveRenderPassQueue);
        // Cache the time for after the call to `SetupCameraProperties` and set the time variables in shader
        // For now we set the time variables per camera, as we plan to remove `SetupCameraProperties`.
        // Setting the time per frame would take API changes to pass the variable to each camera render.
        // Once `SetupCameraProperties` is gone, the variable should be set higher in the call-stack.
    #if UNITY_EDITOR
        float time = Application.isPlaying ? Time.time : Time.realtimeSinceStartup;
    #else
        float time = Time.time;
    #endif
        float deltaTime = Time.deltaTime;
        float smoothDeltaTime = Time.smoothDeltaTime;
        // The built-in time variables used in shaders are all set here.
        SetShaderTimeValues(time, deltaTime, smoothDeltaTime);
        // Upper limits for each block. Each block will contains render passes with events below the limit.
        NativeArray<RenderPassEvent> blockEventLimits = new NativeArray<RenderPassEvent>(k_RenderPassBlockCount, Allocator.Temp);
        blockEventLimits[RenderPassBlock.BeforeRendering] = RenderPassEvent.BeforeRenderingPrepasses;
        blockEventLimits[RenderPassBlock.MainRendering] = RenderPassEvent.AfterRenderingPostProcessing;
        blockEventLimits[RenderPassBlock.AfterRendering] = (RenderPassEvent)Int32.MaxValue;
        NativeArray<int> blockRanges = new NativeArray<int>(blockEventLimits.Length + 1, Allocator.Temp);
        // Split the passes in m_ActiveRenderPassQueue into blocks (BeforeRendering, MainRendering, AfterRendering) for execution.
        FillBlockRanges(blockEventLimits, blockRanges);
        blockEventLimits.Dispose();
        // Set up the built-in light data used in shaders.
        SetupLights(context, ref renderingData);
        // Before Render Block. This render blocks always execute in mono rendering.
        // Camera is not setup. Lights are not setup.
        // Used to render input textures like shadowmaps.
        ExecuteBlock(RenderPassBlock.BeforeRendering, blockRanges, context, ref renderingData);
        ...
        // Override time values from when `SetupCameraProperties` were called.
        // They might be a frame behind.
        // We can remove this after removing `SetupCameraProperties` as the values should be per frame, and not per camera.
        SetShaderTimeValues(time, deltaTime, smoothDeltaTime);
        ...
        // In this block main rendering executes.
        ExecuteBlock(RenderPassBlock.MainRendering, blockRanges, context, ref renderingData);
        ...
        // In this block after rendering drawing happens, e.g, post processing, video player capture.
        ExecuteBlock(RenderPassBlock.AfterRendering, blockRanges, context, ref renderingData);
        if (stereoEnabled)
            EndXRRendering(context, camera);
        DrawGizmos(context, camera, GizmoSubset.PostImageEffects);
        //if (renderingData.resolveFinalTarget)
        InternalFinishRendering(context);
        blockRanges.Dispose();
    }

Execute sorts the passes in m_ActiveRenderPassQueue, divides them into blocks, sets some shared built-in shader variables, and then runs each block of passes.
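The sort-and-split step can be sketched in plain C# as below. BlockSketch is a hypothetical class, passes are modelled as (Name, Event) tuples, and the numeric event values and limits are illustrative assumptions. The code follows the description above but is not URP's actual SortStable or FillBlockRanges.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class BlockSketch
{
    // Stable sort by event: passes with equal events keep their enqueue order.
    // LINQ's OrderBy is documented as a stable sort.
    public static List<(string Name, int Event)> SortStable(IEnumerable<(string Name, int Event)> queue) =>
        queue.OrderBy(p => p.Event).ToList();

    // Splits the sorted queue into consecutive blocks.
    // Block b covers the indices ranges[b] .. ranges[b + 1] - 1 and contains
    // the passes whose event is below limits[b] but not below limits[b - 1].
    public static int[] FillBlockRanges(IReadOnlyList<(string Name, int Event)> sorted, int[] limits)
    {
        var ranges = new int[limits.Length + 1];
        int next = 0; // index of the first pass not yet assigned to a block
        ranges[0] = 0;
        for (int b = 0; b < limits.Length; b++)
        {
            while (next < sorted.Count && sorted[next].Event < limits[b])
                next++;
            ranges[b + 1] = next;
        }
        return ranges;
    }
}
```

For the queue Opaque (250), MainLightShadow (50), Capture (1000), Transparent (450), Outline (250), sorting gives MainLightShadow, Opaque, Outline, Transparent, Capture. With the limits 150, 600 and int.MaxValue, the ranges are 0, 1, 4, 5: one pass in the before-rendering block, three in the main block, one in the after-rendering block. Because the sort is stable, Outline stays behind Opaque even though both share the same event.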

    void ExecuteBlock(int blockIndex, NativeArray<int> blockRanges,
        ScriptableRenderContext context, ref RenderingData renderingData, bool submit = false)
    {
        int endIndex = blockRanges[blockIndex + 1];
        for (int currIndex = blockRanges[blockIndex]; currIndex < endIndex; ++currIndex)
        {
            // Look up the corresponding pass and execute it.
            var renderPass = m_ActiveRenderPassQueue[currIndex];
            ExecuteRenderPass(context, renderPass, ref renderingData);
        }
        if (submit)
            context.Submit();
    }

    void ExecuteRenderPass(ScriptableRenderContext context, ScriptableRenderPass renderPass, ref RenderingData renderingData)
    {
        // Set the render target of this renderPass: either the camera's default buffer
        // or the corresponding render texture.
        ...
        // Run the rendering of this renderPass.
        renderPass.Execute(context, ref renderingData);
    }

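So now we know how an SRP chains the individual render steps together and executes them.

How each step executes, and what exactly it renders, is decided inside that step.

Let's take DepthOnlyPass as an example.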

    public override void Execute(ScriptableRenderContext context, ref RenderingData renderingData)
    {
        CommandBuffer cmd = CommandBufferPool.Get(m_ProfilerTag);
        // string m_ProfilerTag = "Depth Prepass"; this step can be found under that name in the Frame Debugger.
        using (new ProfilingSample(cmd, m_ProfilerTag))
        {
            context.ExecuteCommandBuffer(cmd);
            cmd.Clear();
            var sortFlags = renderingData.cameraData.defaultOpaqueSortFlags;
            // ShaderTagId m_ShaderTagId = new ShaderTagId("DepthOnly");
            // From the culling results, select the shader passes with LightMode="DepthOnly" to draw.
            var drawSettings = CreateDrawingSettings(m_ShaderTagId, ref renderingData, sortFlags);
            drawSettings.perObjectData = PerObjectData.None;
            ref CameraData cameraData = ref renderingData.cameraData;
            Camera camera = cameraData.camera;
            if (cameraData.isStereoEnabled)
                context.StartMultiEye(camera);
            // Draw the objects that match the filtering criteria set up above.
            context.DrawRenderers(renderingData.cullResults, ref drawSettings, ref m_FilteringSettings);
        }
        context.ExecuteCommandBuffer(cmd);
        CommandBufferPool.Release(cmd);
    }

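Every render step sets up its own drawing criteria. The DepthOnly step, for instance, looks through everything in cullResults and draws the passes tagged DepthOnly. That is why a shader file you open often contains many passes, each with its own LightMode: it lets the object be drawn appropriately at the corresponding step of the render pipeline.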
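The tag-based selection can be illustrated with a small Unity-free model. RenderableStub and TagFilterSketch are hypothetical names, and culling, sorting and layer filtering are left out; only the matching of a step's LightMode tag against the passes of each visible object is shown.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for a visible object: its name plus the LightMode
// tag of every pass in its shader.
public sealed class RenderableStub
{
    public string Name { get; }
    public List<string> LightModes { get; }
    public RenderableStub(string name, params string[] lightModes)
    {
        Name = name;
        LightModes = lightModes.ToList();
    }
}

public static class TagFilterSketch
{
    // Returns the names of the visible objects that a step looking for the
    // given LightMode tag would draw; objects without such a pass are skipped.
    public static List<string> DrawRenderers(IEnumerable<RenderableStub> visible, string lightMode) =>
        visible.Where(r => r.LightModes.Contains(lightMode))
               .Select(r => r.Name)
               .ToList();
}
```

Given a Rock with the passes UniversalForward, ShadowCaster and DepthOnly, and a Glass with only UniversalForward, the DepthOnly step draws just Rock, while a step looking for UniversalForward draws both.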

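To sum up, SRP exposes the rendering process that used to be hidden, so we can see clearly which steps a render goes through and what each step draws. The order of the steps and what they draw also map one-to-one to the entries in the Frame Debugger window, so the Frame Debugger shows you the detailed steps of the render pipeline.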

Source: oschina

Link: https://my.oschina.net/u/4349634/blog/4872972