- Configuring the master node:

1. Install the master, CLI, and CGI packages on server1

[root@server1 ~]# ls

3.0.103

[root@server1 ~]# cd 3.0.103/

[root@server1 3.0.103]# ls

moosefs-cgi-3.0.103-1.rhsystemd.x86_64.rpm

moosefs-cgiserv-3.0.103-1.rhsystemd.x86_64.rpm

moosefs-chunkserver-3.0.103-1.rhsystemd.x86_64.rpm

moosefs-cli-3.0.103-1.rhsystemd.x86_64.rpm

moosefs-client-3.0.103-1.rhsystemd.x86_64.rpm

moosefs-master-3.0.103-1.rhsystemd.x86_64.rpm

moosefs-metalogger-3.0.103-1.rhsystemd.x86_64.rpm

[root@server1 3.0.103]# yum install -y moosefs-master-3.0.103-1.rhsystemd.x86_64.rpm moosefs-cli-3.0.103-1.rhsystemd.x86_64.rpm moosefs-cgi-3.0.103-1.rhsystemd.x86_64.rpm moosefs-cgiserv-3.0.103-1.rhsystemd.x86_64.rpm

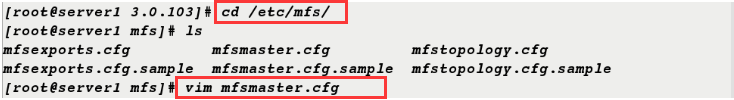

2. Look through the mfsmaster.cfg configuration file (view only; no changes are needed)

[root@server1 3.0.103]# cd /etc/mfs/

[root@server1 mfs]# ls

mfsexports.cfg mfsmaster.cfg mfstopology.cfg

mfsexports.cfg.sample mfsmaster.cfg.sample mfstopology.cfg.sample

[root@server1 mfs]# vim mfsmaster.cfg

3. Start the moosefs-master service and check that its ports are listening

[root@server1 ~]# cd 3.0.103/

[root@server1 3.0.103]# systemctl start moosefs-master

[root@server1 3.0.103]# netstat -antlp

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:9419 0.0.0.0:* LISTEN 10499/mfsmaster

tcp 0 0 0.0.0.0:9420 0.0.0.0:* LISTEN 10499/mfsmaster

tcp 0 0 0.0.0.0:9421 0.0.0.0:* LISTEN 10499/mfsmaster

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 644/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 849/master

tcp 0 0 172.25.21.1:22 172.25.21.250:33508 ESTABLISHED 1004/sshd: root@pts

tcp6 0 0 :::22 :::* LISTEN 644/sshd

tcp6 0 0 ::1:25 :::* LISTEN 849/master

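9419: port on which the master accepts connections from metaloggers (default 9419). Metaloggers keep a copy of the metadata change logs and periodically sync them from the master.

9420: port on which chunkservers connect to the master (default 9420). This is the master's communication channel with the storage nodes.

9421: port on which clients connect to the master (default 9421)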
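You can filter the netstat output instead of checking the three master ports by eye. Below is a minimal sketch. `check_mfs_ports` is a hypothetical helper name. It assumes the `netstat -antlp` column layout shown above, with Local Address in column 4 and State in column 6.

```bash
# Sketch: report which of the given ports appear in LISTEN state in
# `netstat -antlp` output read from stdin (hypothetical helper name).
check_mfs_ports() {
  local listening port missing=0
  # Column 4 is "Local Address", column 6 is "State"; keep the text after
  # the last ":" so that both 0.0.0.0:9419 and :::22 yield the port number
  listening=$(awk '$6 == "LISTEN" { n = split($4, a, ":"); print a[n] }')
  for port in "$@"; do
    if grep -qx -- "$port" <<<"$listening"; then
      echo "$port LISTEN"
    else
      echo "$port MISSING"
      missing=1
    fi
  done
  # Non-zero if any requested port is not listening
  return "$missing"
}
```

On server1 you would run `netstat -antlp | check_mfs_ports 9419 9420 9421`, and add 9425 once moosefs-cgiserv is running. The function returns non-zero when a port is missing, so it can also serve as a condition in a script.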

4. Start the moosefs-cgiserv service and check that port 9425 is listening

[root@server1 3.0.103]# systemctl start moosefs-cgiserv

[root@server1 3.0.103]# netstat -antlp

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:9419 0.0.0.0:* LISTEN 10499/mfsmaster

tcp 0 0 0.0.0.0:9420 0.0.0.0:* LISTEN 10499/mfsmaster

tcp 0 0 0.0.0.0:9421 0.0.0.0:* LISTEN 10499/mfsmaster

tcp 0 0 0.0.0.0:9425 0.0.0.0:* LISTEN 10509/python

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 644/sshd

tcp 0 0 127.0.0.1:25 0.0.0.0:* LISTEN 849/master

tcp 0 0 172.25.21.1:22 172.25.21.250:33508 ESTABLISHED 1004/sshd: root@pts

tcp6 0 0 :::22 :::* LISTEN 644/sshd

tcp6 0 0 ::1:25 :::* LISTEN 849/master

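5. Add a hosts entry that resolves the name mfsmaster to the master (chunkservers and clients look for the master under this name by default)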

[root@server1 3.0.103]# vim /etc/hosts

172.25.21.1 server1 mfsmaster
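The same entry has to be added on the master, on both chunkservers, and on the client, so it helps to make the edit idempotent. Below is a minimal sketch. `ensure_hosts_entry` is a hypothetical helper that appends the line only if an identical line is not already in the given file.

```bash
# Sketch: append "IP NAME..." to a hosts-style file unless that exact line is
# already present (hypothetical helper name).
ensure_hosts_entry() {
  local file=$1 ip=$2
  shift 2
  local line="$ip $*"
  # -x: match the whole line, -F: treat it as a fixed string, not a regex
  if ! grep -qxF -- "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}
```

For example, run `ensure_hosts_entry /etc/hosts 172.25.21.1 server1 mfsmaster`. Afterwards, `getent hosts mfsmaster` should print the master's address.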

6. Open http://172.25.21.1:9425 in a browser to view the MooseFS web monitoring interface

- Configuring the chunkserver server2

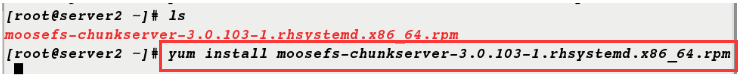

1. Install the moosefs-chunkserver package

[root@server2 ~]# ls

moosefs-chunkserver-3.0.103-1.rhsystemd.x86_64.rpm

[root@server2 ~]# yum install moosefs-chunkserver-3.0.103-1.rhsystemd.x86_64.rpm

2. Add the hosts entry for the master

[root@server2 ~]# vim /etc/hosts

172.25.21.1 server1 mfsmaster

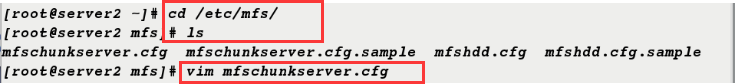

3. Go to /etc/mfs and look through mfschunkserver.cfg (view only; no changes are needed)

[root@server2 ~]# cd /etc/mfs/

[root@server2 mfs]# ls

mfschunkserver.cfg mfschunkserver.cfg.sample mfshdd.cfg mfshdd.cfg.sample

[root@server2 mfs]# vim mfschunkserver.cfg

4. Create the directory /mnt/chunk1

[root@server2 mfs]# mkdir /mnt/chunk1

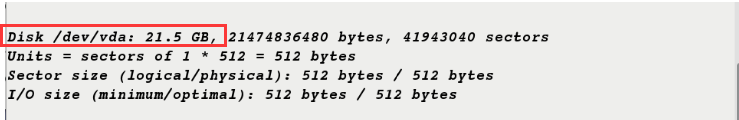

5. In the virtual machine manager, add a new 20 GB disk to server2, then run fdisk -l to confirm that it has been added

[root@server2 mfs]# fdisk -l

6. Create a partition on the new disk /dev/vda

[root@server2 mfs]# fdisk /dev/vda

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table

Building a new DOS disklabel with disk identifier 0xa59b98df.

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p):

Using default response p

Partition number (1-4, default 1):

First sector (2048-41943039, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-41943039, default 41943039):

Using default value 41943039

Partition 1 of type Linux and of size 20 GiB is set

Command (m for help): p

Disk /dev/vda: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0xa59b98df

Device Boot Start End Blocks Id System

/dev/vda1 2048 41943039 20970496 83 Linux

Command (m for help): wq

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

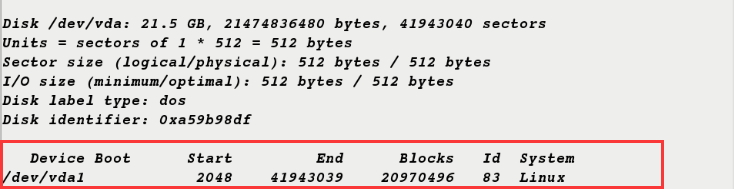

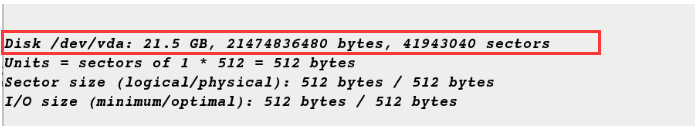

7. Run fdisk -l again; the new partition /dev/vda1 has been created

[root@server2 mfs]# fdisk -l

Disk /dev/vda: 21.5 GB, 21474836480 bytes, 41943040 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk label type: dos

Disk identifier: 0xa59b98df

Device Boot Start End Blocks Id System

/dev/vda1 2048 41943039 20970496 83 Linux

8. Format the new partition with XFS

[root@server2 mfs]# mkfs.xfs /dev/vda1

meta-data=/dev/vda1 isize=512 agcount=4, agsize=1310656 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=5242624, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

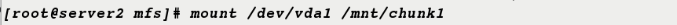

9. Mount /dev/vda1 on /mnt/chunk1

[root@server2 mfs]# mount /dev/vda1 /mnt/chunk1

- Simulating a damaged partition table and recovering from it

10. Change to /mnt/chunk1 and copy the files under /etc/ into it as test data

[root@server2 mfs]# cd /mnt/chunk1

[root@server2 chunk1]# cp /etc/* .

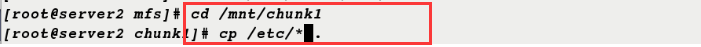

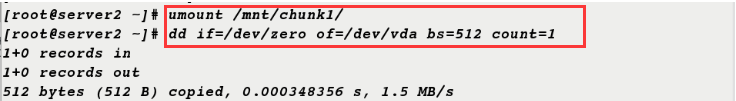

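11. Unmount /mnt/chunk1/, then overwrite the first 512-byte sector of /dev/vda with zeros. This sector holds the partition table (MBR).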

[root@server2 ~]# umount /mnt/chunk1/

[root@server2 ~]# dd if=/dev/zero of=/dev/vda bs=512 count=1

1+0 records in

1+0 records out

512 bytes (512 B) copied, 0.000348356 s, 1.5 MB/s

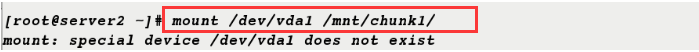

Now try to mount /dev/vda1 on /mnt/chunk1 again. The mount fails because the partition no longer exists:

[root@server2 ~]# mount /dev/vda1 /mnt/chunk1/

mount: special device /dev/vda1 does not exist

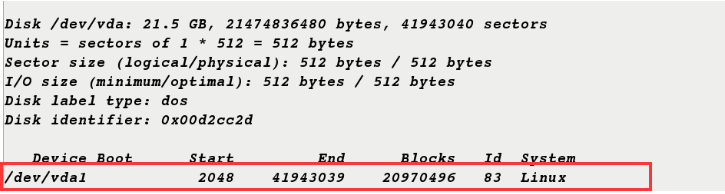

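12. Run fdisk -l. The partition table is gone, but the data on the disk is still intact, because only the first sector was overwritten.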

[root@server2 ~]# fdisk -l
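You can reproduce why the data survives on a scratch file instead of a real disk. This sketch assumes GNU dd (for `status=none`). It does four things:

- writes stand-in bytes at sector 0, where the partition table lives;
- writes stand-in bytes at sector 2048, where /dev/vda1 started in the fdisk output above;
- repeats the same `dd` wipe as step 11;
- reads both sectors back.

```bash
set -euo pipefail

sector=512
part_start=2048                    # first sector of /dev/vda1 in the fdisk output above
img=$(mktemp)                      # scratch file standing in for /dev/vda
trap 'rm -f "$img"' EXIT

# 4 MiB sparse image, large enough to reach sector 2048 (offset 1 MiB)
dd if=/dev/zero of="$img" bs=1M count=0 seek=4 status=none

# Stand-ins for the partition table (sector 0) and the filesystem data (sector 2048)
printf 'PARTITION-TABLE' | dd of="$img" bs=$sector seek=0 conv=notrunc status=none
printf 'FILESYSTEM-DATA' | dd of="$img" bs=$sector seek=$part_start conv=notrunc status=none

# The same wipe as in step 11, aimed at the image instead of /dev/vda
dd if=/dev/zero of="$img" bs=$sector count=1 conv=notrunc status=none

read_sector() {  # print the non-NUL bytes of sector $1 of the image
  dd if="$img" bs=$sector skip="$1" count=1 status=none | tr -d '\0'
}
mbr=$(read_sector 0)
data=$(read_sector "$part_start")
echo "sector 0: '${mbr}'  sector ${part_start}: '${data}'"
```

Sector 0 comes back empty, while sector 2048 still holds its data. That is why recreating the partition with the same start sector makes the old filesystem visible again.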

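13. Recreate the partition with fdisk using exactly the same values as before (primary partition 1, first sector 2048, last sector 41943039), then check it with fdisk -l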

[root@server2 ~]# fdisk /dev/vda

[root@server2 ~]# fdisk -l

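14. Mount /dev/vda1 on /mnt/chunk1; this time the mount succeeds. Note: do not format the recreated partition. Because it starts at the same sector as before, the existing XFS filesystem and its data are found intact and can be mounted directly.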

[root@server2 ~]# mount /dev/vda1 /mnt/chunk1/

[root@server2 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/rhel_foundation184-root 17811456 1094116 16717340 7% /

devtmpfs 497300 0 497300 0% /dev

tmpfs 508264 0 508264 0% /dev/shm

tmpfs 508264 13104 495160 3% /run

tmpfs 508264 0 508264 0% /sys/fs/cgroup

/dev/sda1 1038336 123364 914972 12% /boot

tmpfs 101656 0 101656 0% /run/user/0

/dev/vda1 20960256 34072 20926184 1% /mnt/chunk1

15. Edit the chunkserver's storage configuration file

[root@server2 ~]# vim /etc/mfs/mfshdd.cfg

Add /mnt/chunk1 as the last line.

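16. Check the permissions of /mnt/chunk1/ and change its owner and group to mfs. The chunkserver runs as the mfs user, so it needs this to read and write in the directory.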

[root@server2 ~]# ll -d /mnt/chunk1/

drwxr-xr-x 2 root root 4096 May 18 10:36 /mnt/chunk1/

[root@server2 ~]# chown mfs.mfs /mnt/chunk1/

[root@server2 ~]# df

17. Start the chunkserver service

[root@server2 ~]# systemctl start moosefs-chunkserver

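18. Refresh the browser. server2 now appears as a chunkserver with a capacity of 20 GB.

Note: mount the partition first, and only then change the directory's owner and group. If you change ownership before mounting, the change applies only to the mount-point directory underneath, which is hidden once the filesystem is mounted over it.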

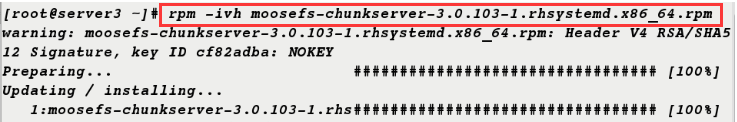
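Before starting a chunkserver, you may want to confirm that it can reach the master's chunkserver port (9420) under the name mfsmaster. Below is a minimal sketch using bash's built-in /dev/tcp redirection. `master_port_open` is a hypothetical helper name, and the 3-second timeout is an arbitrary choice.

```bash
# Sketch: succeed only if a TCP connection to HOST:PORT can be opened
# (hypothetical helper; defaults are this setup's master name and chunkserver port).
master_port_open() {
  local host=${1:-mfsmaster} port=${2:-9420}
  # bash's /dev/tcp/HOST/PORT redirection opens a TCP connection;
  # timeout stops the attempt if packets are silently dropped
  timeout 3 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}
```

For example, on server3: `master_port_open && systemctl start moosefs-chunkserver`. A failure usually means the /etc/hosts entry is missing or the master is not running.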

- Configuring the chunkserver server3

1. Install the moosefs-chunkserver package

[root@server3 ~]# rpm -ivh moosefs-chunkserver-3.0.103-1.rhsystemd.x86_64.rpm

warning: moosefs-chunkserver-3.0.103-1.rhsystemd.x86_64.rpm: Header V4 RSA/SHA512 Signature, key ID cf82adba: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:moosefs-chunkserver-3.0.103-1.rhs################################# [100%]

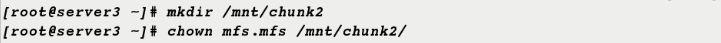

2. Create the directory /mnt/chunk2 and change its owner and group to mfs

[root@server3 ~]# mkdir /mnt/chunk2

[root@server3 ~]# chown mfs.mfs /mnt/chunk2/

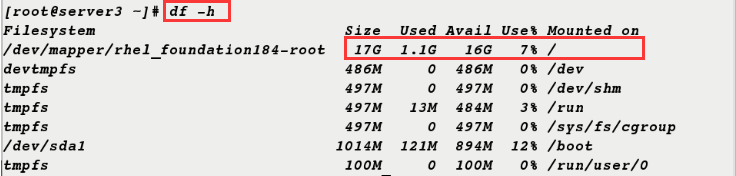

3. Check the mounts with df -h. server3 has no extra disk, so /mnt/chunk2 lives on the root filesystem (/).

[root@server3 ~]# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/rhel_foundation184-root 17G 1.1G 16G 7% /

devtmpfs 486M 0 486M 0% /dev

tmpfs 497M 0 497M 0% /dev/shm

tmpfs 497M 13M 484M 3% /run

tmpfs 497M 0 497M 0% /sys/fs/cgroup

/dev/sda1 1014M 121M 894M 12% /boot

tmpfs 100M 0 100M 0% /run/user/0

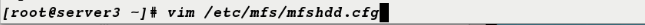

4. Edit the chunkserver's storage configuration file

[root@server3 ~]# vim /etc/mfs/mfshdd.cfg

Add /mnt/chunk2 as the last line.

5. Add the hosts entry for the master

[root@server3 ~]# vim /etc/hosts

172.25.21.1 server1 mfsmaster

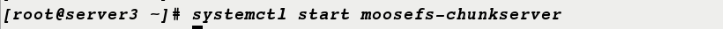

6. Start the chunkserver service

[root@server3 ~]# systemctl start moosefs-chunkserver

7. Refresh the browser. server3 now appears as a chunkserver with a capacity of 17 GB, the size of its root filesystem.

- Configuring the client (the physical host)

1. Install the moosefs-client package

[root@foundation21 3.0.103]# yum install moosefs-client-3.0.103-1.rhsystemd.x86_64.rpm

2. Edit the client configuration file and set the default mount point to /mnt/mfs

[root@foundation21 3.0.103]# vim /etc/mfs/mfsmount.cfg

/mnt/mfs

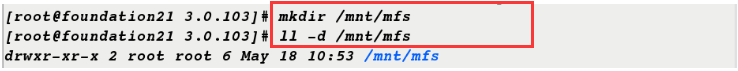

3. Create the directory /mnt/mfs to serve as the mount point

[root@foundation21 3.0.103]# mkdir /mnt/mfs

[root@foundation21 3.0.103]# ll -d /mnt/mfs

drwxr-xr-x 2 root root 6 May 18 10:53 /mnt/mfs

[root@foundation21 3.0.103]# ls /mnt/mfs

4. Add the hosts entry for the master

[root@foundation21 3.0.103]# vim /etc/hosts

172.25.21.1 mfsmaster

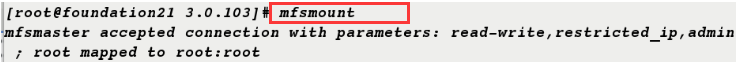

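5. Mount the file system on the client and check it with df. When run without arguments, mfsmount uses the mount point from mfsmount.cfg and connects to the master named mfsmaster.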

[root@foundation21 3.0.103]# mfsmount

mfsmaster accepted connection with parameters: read-write,restricted_ip,admin ; root mapped to root:root

[root@foundation21 3.0.103]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/rhel_foundation21-root 225268256 69067808 156200448 31% /

devtmpfs 3932872 0 3932872 0% /dev

tmpfs 3946212 488 3945724 1% /dev/shm

tmpfs 3946212 9100 3937112 1% /run

tmpfs 3946212 0 3946212 0% /sys/fs/cgroup

/dev/loop0 3704296 3704296 0 100% /var/www/html/rhel7.3

/dev/loop1 3762278 3762278 0 100% /var/www/html/rhel6.5

/dev/sda1 1038336 143396 894940 14% /boot

tmpfs 789244 28 789216 1% /run/user/1000

mfsmaster:9421 38771712 1629568 37142144 5% /mnt/mfs

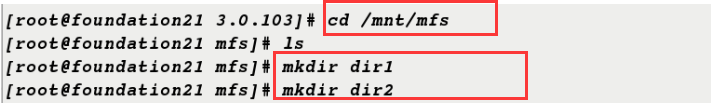
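The total size reported for mfsmaster:9421 should equal the sum of the filesystems holding the two chunkserver directories. Below is a quick check in shell arithmetic, using the 1K-block sizes from the df outputs above. It assumes server3's root filesystem is the same size as server2's, 17811456 KiB; both show up as rhel_foundation184-root, 17G.

```bash
# Sizes in 1K blocks from the df outputs above; chunk2_kb assumes server3's
# root filesystem is the same size as server2's (17811456).
chunk1_kb=20960256   # /dev/vda1 on server2, holding /mnt/chunk1
chunk2_kb=17811456   # root filesystem of server3, holding /mnt/chunk2
total_kb=$(( chunk1_kb + chunk2_kb ))
echo "$total_kb"     # prints 38771712
```

The result, 38771712, matches the 1K-blocks column for mfsmaster:9421 in the df output above.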

6. Create two directories under the mount point and check their goal, i.e. the number of copies MooseFS keeps of each file

[root@foundation21 3.0.103]# cd /mnt/mfs

[root@foundation21 mfs]# ls

[root@foundation21 mfs]# mkdir dir1

[root@foundation21 mfs]# mkdir dir2

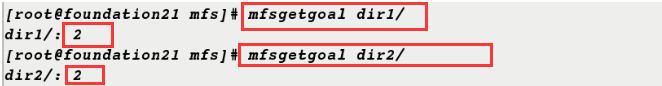

[root@foundation21 mfs]# mfsgetgoal dir1/

dir1/: 2

[root@foundation21 mfs]# mfsgetgoal dir2/

dir2/: 2

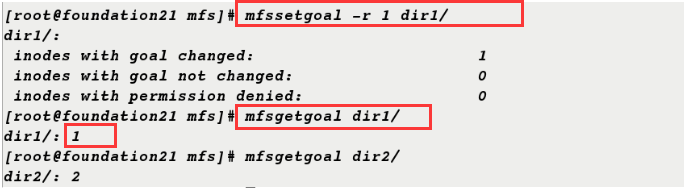

7. Set the goal of dir1 to 1 and check that the change took effect. Goal 1 is used only so that dir1 can be compared with dir2.

[root@foundation21 mfs]# mfssetgoal -r 1 dir1/

dir1/:

inodes with goal changed: 1

inodes with goal not changed: 0

inodes with permission denied: 0

[root@foundation21 mfs]# mfsgetgoal dir1/

dir1/: 1

[root@foundation21 mfs]# mfsgetgoal dir2/

dir2/: 2

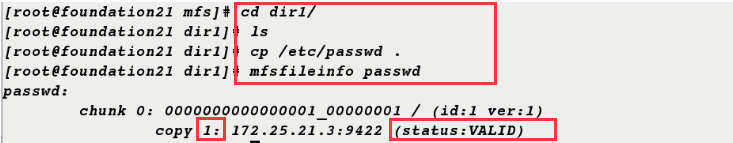

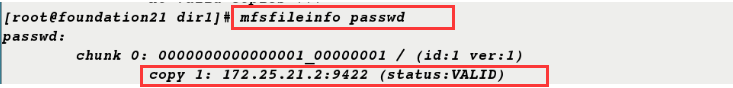

8. In dir1, copy /etc/passwd into the current directory and inspect it with mfsfileinfo

[root@foundation21 mfs]# cd dir1/

[root@foundation21 dir1]# ls

[root@foundation21 dir1]# cp /etc/passwd .

[root@foundation21 dir1]# mfsfileinfo passwd

passwd:

chunk 0: 0000000000000001_00000001 / (id:1 ver:1)

copy 1: 172.25.21.3:9422 (status:VALID) ## passwd is stored on chunkserver server3, with only 1 copy because the goal of dir1 is 1

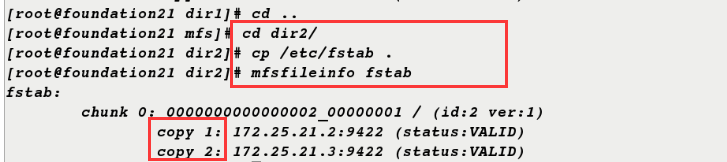

9. In dir2, copy /etc/fstab into the current directory and inspect it with mfsfileinfo

[root@foundation21 dir1]# cd ..

[root@foundation21 mfs]# cd dir2/

[root@foundation21 dir2]# cp /etc/fstab .

[root@foundation21 dir2]# mfsfileinfo fstab

fstab:

chunk 0: 0000000000000002_00000001 / (id:2 ver:1)

copy 1: 172.25.21.2:9422 (status:VALID) ## 2 copies, the default goal

copy 2: 172.25.21.3:9422 (status:VALID)

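10. In dir1, create a 200 MB file named bigfile with dd and inspect it. The file is split into four chunks, since MooseFS chunks are at most 64 MiB. Each chunk has only 1 copy, and the chunks are spread across server2 and server3.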

[root@foundation21 dir2]# cd ../dir1/

[root@foundation21 dir1]# ls

passwd

[root@foundation21 dir1]# dd if=/dev/zero of=bigfile bs=1M count=200

200+0 records in

200+0 records out

209715200 bytes (210 MB) copied, 0.344183 s, 609 MB/s

[root@foundation21 dir1]# mfsfileinfo bigfile

bigfile:

chunk 0: 0000000000000003_00000001 / (id:3 ver:1)

copy 1: 172.25.21.2:9422 (status:VALID)

chunk 1: 0000000000000004_00000001 / (id:4 ver:1)

copy 1: 172.25.21.3:9422 (status:VALID)

chunk 2: 0000000000000005_00000001 / (id:5 ver:1)

copy 1: 172.25.21.2:9422 (status:VALID)

chunk 3: 0000000000000006_00000001 / (id:6 ver:1)

copy 1: 172.25.21.3:9422 (status:VALID)

11. In dir2, create a 200 MB file named bigfile2 the same way and inspect it. Each chunk has 2 copies, one on each chunkserver.

[root@foundation21 dir1]# cd ../dir2/

[root@foundation21 dir2]# dd if=/dev/zero of=bigfile2 bs=1M count=200

200+0 records in

200+0 records out

209715200 bytes (210 MB) copied, 0.676968 s, 310 MB/s

[root@foundation21 dir2]# mfsfileinfo bigfile2

bigfile2:

chunk 0: 0000000000000007_00000001 / (id:7 ver:1)

copy 1: 172.25.21.2:9422 (status:VALID)

copy 2: 172.25.21.3:9422 (status:VALID)

chunk 1: 0000000000000008_00000001 / (id:8 ver:1)

copy 1: 172.25.21.2:9422 (status:VALID)

copy 2: 172.25.21.3:9422 (status:VALID)

chunk 2: 0000000000000009_00000001 / (id:9 ver:1)

copy 1: 172.25.21.2:9422 (status:VALID)

copy 2: 172.25.21.3:9422 (status:VALID)

chunk 3: 000000000000000A_00000001 / (id:10 ver:1)

copy 1: 172.25.21.2:9422 (status:VALID)

copy 2: 172.25.21.3:9422 (status:VALID)

12. Stop the moosefs-chunkserver service on server2

[root@server2 ~]# systemctl stop moosefs-chunkserver

13. Inspect bigfile2 again. Each chunk now has only 1 valid copy, the one on server3, so the file remains available.

[root@foundation21 dir2]# mfsfileinfo bigfile2

bigfile2:

chunk 0: 0000000000000007_00000001 / (id:7 ver:1)

copy 1: 172.25.21.3:9422 (status:VALID)

chunk 1: 0000000000000008_00000001 / (id:8 ver:1)

copy 1: 172.25.21.3:9422 (status:VALID)

chunk 2: 0000000000000009_00000001 / (id:9 ver:1)

copy 1: 172.25.21.3:9422 (status:VALID)

chunk 3: 000000000000000A_00000001 / (id:10 ver:1)

copy 1: 172.25.21.3:9422 (status:VALID)

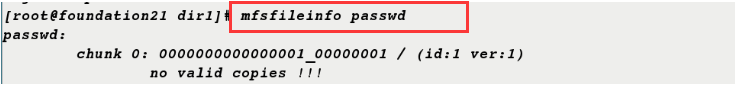

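14. In dir1, passwd now has no valid copy, because at this point its only copy was on server2. If you try to open passwd now, the process hangs, because no running chunkserver holds its data.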

[root@foundation21 dir1]# mfsfileinfo passwd

passwd:

chunk 0: 0000000000000001_00000001 / (id:1 ver:1)

no valid copies !!!

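Inspecting bigfile shows that the chunks stored on server2 (chunks 0 and 2) have no valid copy, while chunks 1 and 3 on server3 are still available: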

[root@foundation21 dir1]# mfsfileinfo bigfile

bigfile:

chunk 0: 0000000000000003_00000001 / (id:3 ver:1)

no valid copies !!!

chunk 1: 0000000000000004_00000001 / (id:4 ver:1)

copy 1: 172.25.21.3:9422 (status:VALID)

chunk 2: 0000000000000005_00000001 / (id:5 ver:1)

no valid copies !!!

chunk 3: 0000000000000006_00000001 / (id:6 ver:1)

copy 1: 172.25.21.3:9422 (status:VALID)
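For files with many chunks, scanning mfsfileinfo output for missing copies gets tedious. The sketch below condenses it into one line per chunk, showing the number of VALID copies. `summarize_fileinfo` is a hypothetical helper. It assumes the output format shown above: a `chunk N:` line followed by `copy` lines or `no valid copies !!!`. MooseFS also ships its own `mfscheckfile` tool, which gives a per-file summary of copy counts.

```bash
# Sketch: condense `mfsfileinfo` output (read on stdin) into one line per chunk
# with the number of copies whose status is VALID. It matches on fields rather
# than line starts, so indented chunk/copy lines are handled too.
summarize_fileinfo() {
  awk '
    $1 == "chunk" {
      if (chunk != "") printf "chunk %s valid=%d\n", chunk, valid
      chunk = $2; sub(/:$/, "", chunk); valid = 0
    }
    /status:VALID/ { valid++ }
    END { if (chunk != "") printf "chunk %s valid=%d\n", chunk, valid }
  '
}
```

In the situation above, `mfsfileinfo bigfile | summarize_fileinfo | grep 'valid=0'` would list chunks 0 and 2.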

15. Start moosefs-chunkserver on server2 again. mfsfileinfo shows that all the files are back to normal.

[root@server2 ~]# systemctl start moosefs-chunkserver

[root@foundation21 dir1]# mfsfileinfo passwd

passwd:

chunk 0: 0000000000000001_00000001 / (id:1 ver:1)

copy 1: 172.25.21.2:9422 (status:VALID)

[root@foundation21 dir1]# mfsfileinfo bigfile

bigfile:

chunk 0: 0000000000000003_00000001 / (id:3 ver:1)

copy 1: 172.25.21.2:9422 (status:VALID)

chunk 1: 0000000000000004_00000001 / (id:4 ver:1)

copy 1: 172.25.21.3:9422 (status:VALID)

chunk 2: 0000000000000005_00000001 / (id:5 ver:1)

copy 1: 172.25.21.2:9422 (status:VALID)

chunk 3: 0000000000000006_00000001 / (id:6 ver:1)

copy 1: 172.25.21.3:9422 (status:VALID)

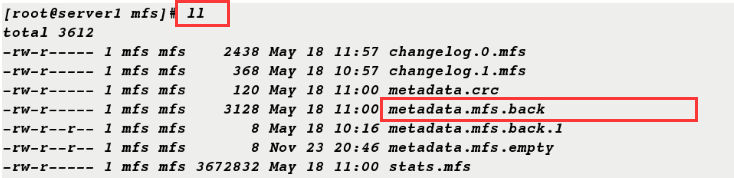

- Stopping and starting the MooseFS master

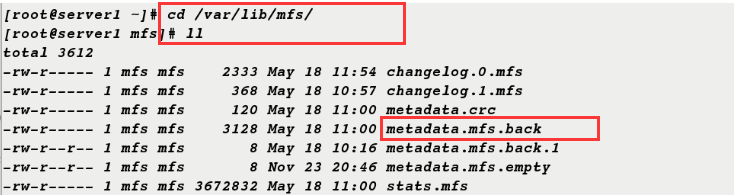

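1. On the master (server1), the metadata is kept in /var/lib/mfs/ by default. While the master is running, this directory contains metadata.mfs.back. When the master is stopped cleanly, metadata.mfs.back is replaced by metadata.mfs.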

[root@server1 ~]# cd /var/lib/mfs/

[root@server1 mfs]# ll

total 3612

-rw-r----- 1 mfs mfs 2333 May 18 11:54 changelog.0.mfs

-rw-r----- 1 mfs mfs 368 May 18 10:57 changelog.1.mfs

-rw-r----- 1 mfs mfs 120 May 18 11:00 metadata.crc

-rw-r----- 1 mfs mfs 3128 May 18 11:00 metadata.mfs.back

-rw-r--r-- 1 mfs mfs 8 May 18 10:16 metadata.mfs.back.1

-rw-r--r-- 1 mfs mfs 8 Nov 23 20:46 metadata.mfs.empty

-rw-r----- 1 mfs mfs 3672832 May 18 11:00 stats.mfs

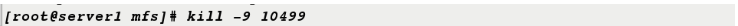

2. Look up the mfsmaster process ID and kill it forcibly

[root@server1 mfs]# ps ax

[root@server1 mfs]# kill -9 10499

3. Because the master was not shut down cleanly, metadata.mfs.back was not turned into metadata.mfs

[root@server1 mfs]# ll

total 3612

-rw-r----- 1 mfs mfs 2438 May 18 11:57 changelog.0.mfs

-rw-r----- 1 mfs mfs 368 May 18 10:57 changelog.1.mfs

-rw-r----- 1 mfs mfs 120 May 18 11:00 metadata.crc

-rw-r----- 1 mfs mfs 3128 May 18 11:00 metadata.mfs.back

-rw-r--r-- 1 mfs mfs 8 May 18 10:16 metadata.mfs.back.1

-rw-r--r-- 1 mfs mfs 8 Nov 23 20:46 metadata.mfs.empty

-rw-r----- 1 mfs mfs 3672832 May 18 11:00 stats.mfs

4. Starting mfsmaster now fails because metadata.mfs is missing

[root@server1 mfs]# mfsmaster start

open files limit has been set to: 16384

working directory: /var/lib/mfs

lockfile created and locked

initializing mfsmaster modules ...

exports file has been loaded

topology file has been loaded

loading metadata ...

can't find metadata.mfs - try using option '-a'

init: metadata manager failed !!!

error occurred during initialization - exiting

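5. Start it with mfsmaster -a instead, which automatically restores the metadata from the change logs. This time it starts successfully.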

[root@server1 mfs]# mfsmaster -a

open files limit has been set to: 16384

working directory: /var/lib/mfs

lockfile created and locked

initializing mfsmaster modules ...

exports file has been loaded

topology file has been loaded

loading metadata ...

loading sessions data ... ok (0.0000)

loading storage classes data ... ok (0.0000)

loading objects (files,directories,etc.) ... ok (0.0210)

loading names ... ok (0.0000)

loading deletion timestamps ... ok (0.0000)

loading quota definitions ... ok (0.0000)

loading xattr data ... ok (0.0000)

loading posix_acl data ... ok (0.0000)

loading open files data ... ok (0.0000)

loading flock_locks data ... ok (0.0000)

loading posix_locks data ... ok (0.0000)

loading chunkservers data ... ok (0.0000)

loading chunks data ... ok (0.0000)

checking filesystem consistency ... ok

connecting files and chunks ... ok

all inodes: 1

directory inodes: 1

file inodes: 0

chunks: 0

metadata file has been loaded

stats file has been loaded

master <-> metaloggers module: listen on *:9419

master <-> chunkservers module: listen on *:9420

main master server module: listen on *:9421

mfsmaster daemon initialized properly

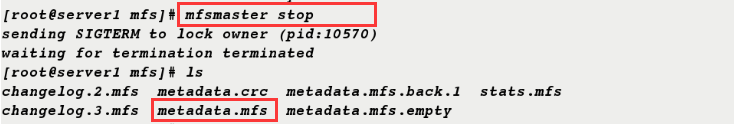
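Steps 1 to 5 show a simple rule: a clean stop leaves metadata.mfs, while a crash leaves only metadata.mfs.back. You can turn that rule into a small check for a stopped master. This is only a sketch. `suggest_master_start` is a hypothetical helper that prints the command to use rather than running it, and it assumes the default data directory /var/lib/mfs.

```bash
# Sketch: for a stopped master, print how it should be started based on the
# metadata files in its data directory (hypothetical helper name).
suggest_master_start() {
  local dir=${1:-/var/lib/mfs}
  if [ -f "$dir/metadata.mfs" ]; then
    # Clean stop: metadata.mfs was written, a normal start will load it
    echo "mfsmaster start"
  elif [ -f "$dir/metadata.mfs.back" ]; then
    # Unclean stop (e.g. kill -9): only the backup exists, so let the
    # master rebuild the metadata from the change logs
    echo "mfsmaster -a"
  else
    echo "no metadata file found in $dir" >&2
    return 1
  fi
}
```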

6. Stop the master cleanly and list /var/lib/mfs/. metadata.mfs is now present.

[root@server1 mfs]# mfsmaster stop

sending SIGTERM to lock owner (pid:10570)

waiting for termination terminated

[root@server1 mfs]# ls

changelog.2.mfs metadata.crc metadata.mfs.back.1 stats.mfs

changelog.3.mfs metadata.mfs metadata.mfs.empty

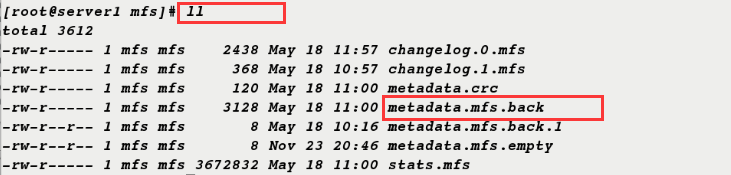

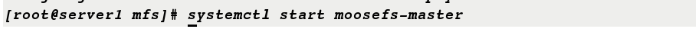

7. Start the master normally. metadata.mfs.back appears again.

[root@server1 mfs]# systemctl start moosefs-master

[root@server1 mfs]# ll

total 3612

-rw-r----- 1 mfs mfs 2438 May 18 11:57 changelog.0.mfs

-rw-r----- 1 mfs mfs 368 May 18 10:57 changelog.1.mfs

-rw-r----- 1 mfs mfs 120 May 18 11:00 metadata.crc

-rw-r----- 1 mfs mfs 3128 May 18 11:00 metadata.mfs.back

-rw-r--r-- 1 mfs mfs 8 May 18 10:16 metadata.mfs.back.1

-rw-r--r-- 1 mfs mfs 8 Nov 23 20:46 metadata.mfs.empty

-rw-r----- 1 mfs mfs 3672832 May 18 11:00 stats.mfs

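Note: you can start the master either with the mfsmaster command or through systemd (systemctl start moosefs-master).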

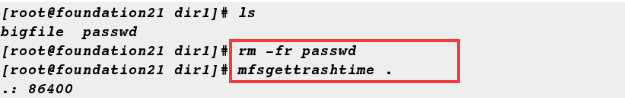

- Recovering deleted files

1. Delete passwd and check its trash time, i.e. how long deleted files are kept before being purged

[root@foundation21 dir1]# ls

bigfile passwd

[root@foundation21 dir1]# rm -fr passwd

[root@foundation21 dir1]# mfsgettrashtime . ## trash time in seconds (86400 s = 24 hours)

.: 86400

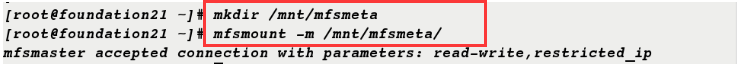

2. Create /mnt/mfsmeta and mount the MooseFS metadata file system on it with mfsmount -m

[root@foundation21 dir1]# cd

[root@foundation21 ~]# mkdir /mnt/mfsmeta

[root@foundation21 ~]# mfsmount -m /mnt/mfsmeta/

mfsmaster accepted connection with parameters: read-write,restricted_ip

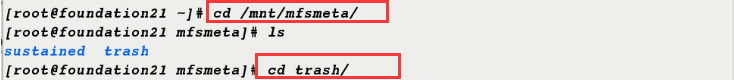

3. Go to /mnt/mfsmeta/trash, which contains a large number of entries

[root@foundation21 ~]# cd /mnt/mfsmeta/

[root@foundation21 mfsmeta]# ls

sustained trash

[root@foundation21 mfsmeta]# cd trash/

[root@foundation21 trash]# ls | wc -l

4097

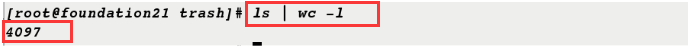

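4. Find the file to restore and move it into the undel/ directory next to it. MooseFS then restores the file to its original location.

[root@foundation21 trash]# find . -name '*passwd*'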

./004/00000004|dir1|passwd

[root@foundation21 trash]# cd 004/

[root@foundation21 004]# ls

00000004|dir1|passwd undel

[root@foundation21 004]# mv 00000004\|dir1\|passwd undel/

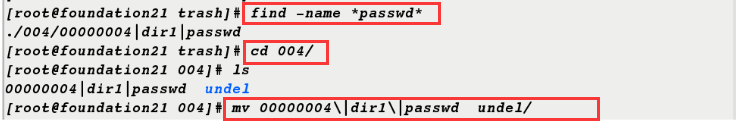

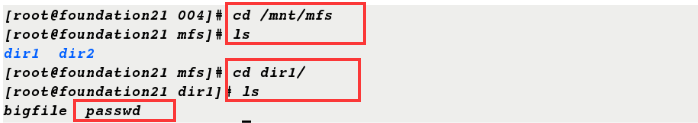
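When several files need to be recovered, you can script the find-and-move step. Below is a minimal sketch. It assumes the metadata file system is mounted at /mnt/mfsmeta, and that trash entries are named like `00000004|dir1|passwd` inside the numbered subdirectories, as shown above. `mfs_undelete` is a hypothetical helper name. It restores every trashed file with the given name, from any directory. The `|` characters in the entry names are why every path is kept quoted.

```bash
# Sketch: move every trash entry whose name ends in "|<name>" into the undel/
# directory beside it (hypothetical helper name).
# $1 = file name to restore, $2 = meta mount point (default /mnt/mfsmeta)
mfs_undelete() {
  local name=$1 meta=${2:-/mnt/mfsmeta}
  local entry found=0
  # -print0 / read -d '' keep names containing "|" or spaces intact
  while IFS= read -r -d '' entry; do
    mv -- "$entry" "$(dirname -- "$entry")/undel/"
    echo "restored: $entry"
    found=1
  done < <(find "$meta/trash" -mindepth 2 -maxdepth 2 -name "*|$name" -print0)
  # Non-zero if nothing matched
  [ "$found" -eq 1 ]
}
```

For the example above, `mfs_undelete passwd` performs the same `mv` as step 4.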

5. List /mnt/mfs/dir1/. The deleted passwd file has been restored.

[root@foundation21 004]# cd /mnt/mfs

[root@foundation21 mfs]# ls

dir1 dir2

[root@foundation21 mfs]# cd dir1/

[root@foundation21 dir1]# ls

bigfile passwd

Source: https://blog.csdn.net/qq_44236589/article/details/90379628