CNN

Convolutional neural networks, also called ConvNets or CNNs, can do some pretty cool things. If you feed them a bunch of pictures of faces, for instance, they'll learn some basic things like edges and dots, bright spots and dark spots. Then, because they are multi-layer neural networks, that's what gets learned in the first layer. The second layer learns things that are recognizable as eyes, noses, and mouths, and the third layer learns things that look like faces.

Similarly, if you feed one a bunch of images of cars, then down at the lowest layer you'll again get things that look like edges. Higher up you'll see things that look like tires, wheel wells, and hoods, and at the level above that, things that are clearly identifiable as cars.

CNNs can even learn to play video games by forming patterns of the pixels as they appear on the screen and learning the best action to take when they see a certain pattern.

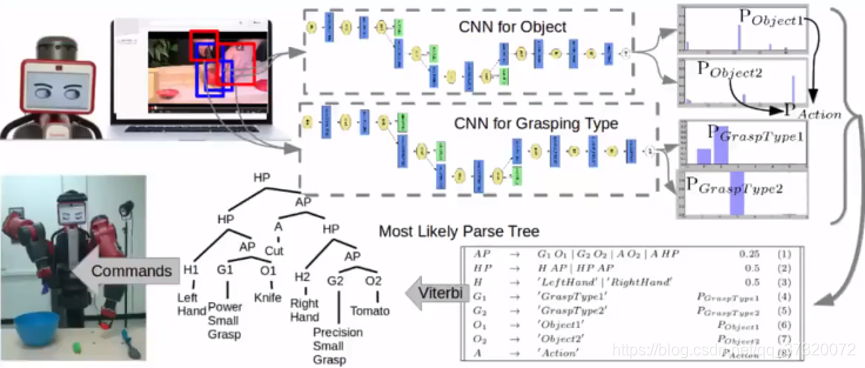

A CNN can learn to play video games, in some cases far better than a human ever could. And if a couple of CNNs are set to watch YouTube videos, one can learn objects by, again, picking out patterns, and the other can learn types of grasps. This can then be coupled with some other execution software.

A toy ConvNet: X's and O's (say whether a picture is of an X or an O)

So there's no doubt CNNs are powerful. Usually when we talk about them, we do so in the same way we might talk about magic. But they're not magic. What they do is based on some pretty basic ideas applied in a clever way. To illustrate these, we'll talk about a very simple toy convolutional neural network.

What this one does is take in an image, a two-dimensional array of pixels. You can think of it as a checkerboard where each square is either light or dark. By looking at that, the CNN decides whether it's a picture of an X or an O. For instance, the input might be an image with an X drawn in white pixels on a black background. A CNN does this in several steps. What makes it tricky is that the X is not exactly the same every time. The X or the O can be shifted, bigger or smaller, rotated a little, or thicker or thinner.

And in every case we would still like to identify whether it's an X or an O.

This is challenging because, for us, deciding whether these two things are similar is straightforward, but for a computer it's very hard. What the computer sees is this checkerboard, this two-dimensional array, as a bunch of numbers: 1s and -1s, where 1 is a bright pixel and -1 is a dark pixel. What it can do is go through pixel by pixel and compare whether they match or not. To a computer, it looks like there are a lot of pixels that match, but quite a few that don't. So it might look at this and say, "I'm really not sure whether these are the same." Because a computer is so literal, it would say, "Uncertain. I can't say that they're equal."

Now, one of the tricks that convolutional neural networks use is to match parts of the image rather than the whole thing. If you break it down into smaller parts, or features, it becomes much clearer whether these two things are similar. Examples of these features are little mini images, in this case just three pixels by three pixels (3 × 3). The one on the left is a diagonal line slanting downward from left to right, the one on the right is also a diagonal line, slanting in the other direction, and the one in the middle is a little X. These are little pieces of the bigger image. As we go through, you can see that if you choose the right feature and put it in the right place, it matches the image exactly. So, OK, we have the bits and pieces. Now, to take a step deeper.
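The difference between literal whole-image comparison and feature matching can be sketched in a few lines of Python. The 9 × 9 X below and its shifted copy are assumptions made up for illustration, as are the helper names `parse`, `count_matches`, and `patch`. Only the 1 / -1 encoding and the 3 × 3 diagonal feature come from the description above.

```python
def parse(rows):
    """Turn strings of 'X' (bright) and '.' (dark) into rows of 1 and -1."""
    return [[1 if ch == "X" else -1 for ch in row] for row in rows]

# Hypothetical 9x9 picture of an X (an assumption, not data from the text).
reference_x = parse([
    ".........",
    ".X.....X.",
    "..X...X..",
    "...X.X...",
    "....X....",
    "...X.X...",
    "..X...X..",
    ".X.....X.",
    ".........",
])

# The same X moved one pixel to the right.
shifted_x = [[-1] + row[:-1] for row in reference_x]

def count_matches(a, b):
    """Literal comparison: count positions where both images have the same pixel."""
    return sum(pa == pb for row_a, row_b in zip(a, b) for pa, pb in zip(row_a, row_b))

def patch(image, top, left, size=3):
    """Cut a size x size piece out of the image."""
    return [row[left:left + size] for row in image[top:top + size]]

# The downward left-to-right diagonal feature.
diagonal = parse(["X..", ".X.", "..X"])

whole_image_matches = count_matches(reference_x, shifted_x)
print(whole_image_matches, "of 81 pixels match")   # whole-image comparison
print(patch(reference_x, 1, 1) == diagonal)        # feature found in the original
print(patch(shifted_x, 1, 2) == diagonal)          # same feature, one pixel over
```

In this made-up case only 55 of the 81 pixels agree, and none of the bright pixels line up, yet the diagonal feature matches exactly in both images once it is placed in the right spot.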

**The math behind the match: Filtering**

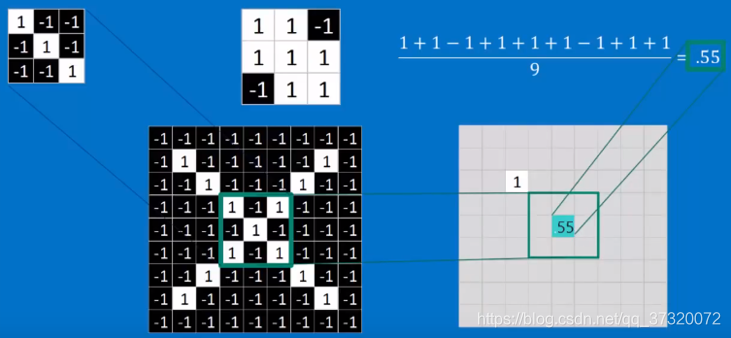

The math behind matching these is called filtering. The way this is done is that a feature is lined up with a little patch of the image, and then, one by one, the pixels are compared: they're multiplied by each other, added up, and divided by the total number of pixels.

1. Line up the feature and the image patch

2. Multiply each image pixel by the corresponding feature pixel

3. Add them up

4. Divide by the total number of pixels in the feature
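The four steps above can be sketched as a single small function. The name `match_feature` and the two example patches are hypothetical, chosen only to show one perfect match and one imperfect match.

```python
def match_feature(feature, patch):
    """Line up feature and patch, multiply pixel by pixel, add up, divide by pixel count."""
    products = [f * p
                for feature_row, patch_row in zip(feature, patch)
                for f, p in zip(feature_row, patch_row)]
    return sum(products) / len(products)

# The downward left-to-right diagonal feature (1 = bright, -1 = dark).
diagonal = [[ 1, -1, -1],
            [-1,  1, -1],
            [-1, -1,  1]]

# A patch identical to the feature: every product is 1, so the score is 9 / 9.
perfect_patch = [row[:] for row in diagonal]

# A patch where two pixels differ: seven products are 1 and two are -1.
imperfect_patch = [[ 1, -1,  1],
                   [-1,  1, -1],
                   [-1, -1, -1]]

print(match_feature(diagonal, perfect_patch))    # 1.0
print(match_feature(diagonal, imperfect_patch))  # 5 / 9, roughly 0.56
```

Dividing by the number of pixels keeps the score between -1 and 1 regardless of the feature size, so 1 always means a perfect match.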

To step through this and see why it makes sense, start with the upper left-hand pixel in both. Multiplying a 1 by a 1 gives you a 1 (1 × 1 = 1).

We can keep track of that by putting it in the position of the pixel that we're comparing.

We step to the next one: -1 × -1 = 1. We continue to step through pixel by pixel, multiplying each pair. Because they are always the same, the answer is always 1. When we're done, we take all these 1s, add them up, and divide by 9, and the answer is 1. Now we want to keep track of where that feature was in the image, so we put a 1 there to say that when we put the feature here, we get a match of 1. That is filtering.

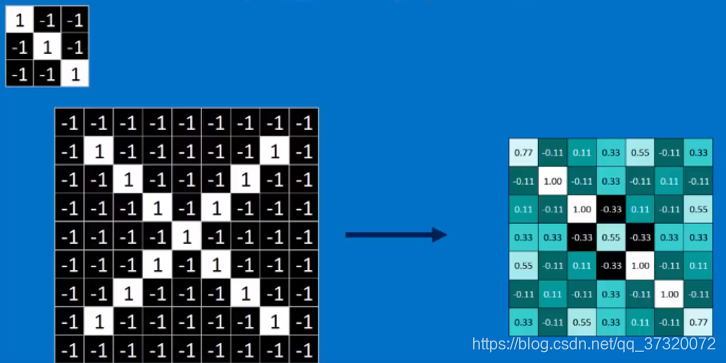

Now we can take that same feature, move it to another position, and perform the filtering again. The first pixel matches, the second pixel matches, but the third pixel does not match: -1 × 1 = -1, so we record that in our results. We go through the rest of the image patch the same way, and when we're done we notice we have two -1s this time. So we add up all the pixels, which comes to 5, divide by 9, and get 0.55. This is very different from our 1, and we record the 0.55 in the position where it occurred.

By moving our filter around to different places in the image, we find different values for how well that filter matches, or how well that feature is represented at that position. This becomes a map of where the feature occurs. By moving it around to every possible position, we do convolution, which is just the repeated application of this feature, this filter, over and over again. What we get is a nice map across the whole image of where this feature occurs. And if we look at it, it makes sense: this feature is a diagonal line slanting downward from left to right, which matches the downward left-to-right diagonal of the X. So if we look at our filtered image, we see that the high numbers, 1 and 0.77, are all right along that diagonal. That suggests the feature matches along that diagonal much better than it does elsewhere in the image.
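As a rough sketch, sliding the feature over every position looks like this in Python. The 9 × 9 X image is an assumption picked to be consistent with the values quoted above (1 and 0.77 along the diagonal), and `filter_at` and `convolve` are hypothetical helper names.

```python
def parse(rows):
    """Turn strings of 'X' (bright) and '.' (dark) into rows of 1 and -1."""
    return [[1 if ch == "X" else -1 for ch in row] for row in rows]

# Assumed 9x9 picture of an X.
image = parse([
    ".........",
    ".X.....X.",
    "..X...X..",
    "...X.X...",
    "....X....",
    "...X.X...",
    "..X...X..",
    ".X.....X.",
    ".........",
])

# Downward left-to-right diagonal feature.
feature = parse(["X..", ".X.", "..X"])

def filter_at(image, feature, top, left):
    """Filtering at one position: multiply, add up, divide by the pixel count."""
    size = len(feature)
    total = sum(feature[r][c] * image[top + r][left + c]
                for r in range(size) for c in range(size))
    return total / (size * size)

def convolve(image, feature):
    """Apply the feature at every position where it fits entirely inside the image."""
    size = len(feature)
    out_rows = len(image) - size + 1
    out_cols = len(image[0]) - size + 1
    return [[filter_at(image, feature, top, left) for left in range(out_cols)]
            for top in range(out_rows)]

filtered = convolve(image, feature)
for row in filtered:
    print(" ".join(f"{value:5.2f}" for value in row))
```

A 9 × 9 image and a 3 × 3 feature give a 7 × 7 map, because the feature fits in 7 positions along each axis. The printout rounds, so 7/9 appears as 0.78 where the text truncates it to 0.77.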

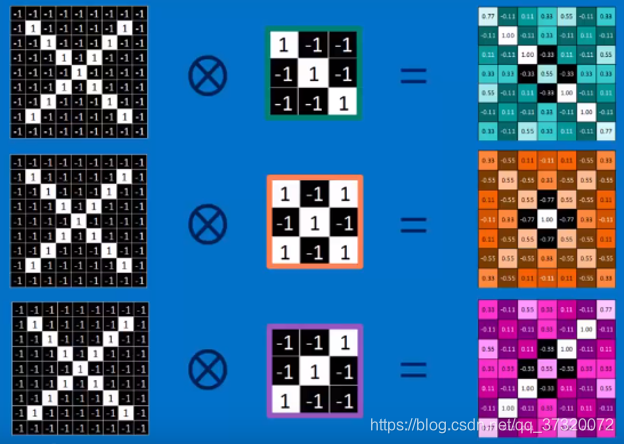

To use shorthand notation here, we'll write an x with a circle around it (⊗) to represent convolution, the act of trying every possible match. We can repeat that with other features: with our X filter in the middle and with our upward-slanting diagonal line at the bottom. In each case, the map that we get of where that feature occurs is consistent with what we would expect, given what we know about the X and about where our features match.

This act of convolving an image with a bunch of filters, a bunch of features, and creating a stack of filtered images is what we'll call a convolution layer. It's a layer because it's an operation that we can stack with others, as we'll show in a minute. In convolution, one image becomes a stack of filtered images. We get as many filtered images out as we have filters.
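A convolution layer in this toy sense can be sketched as "run the same convolution once per feature". The image and the exact pixel values of the three features are assumptions based on the description in the text, and `convolution_layer` is a hypothetical name.

```python
def parse(rows):
    """Turn strings of 'X' (bright) and '.' (dark) into rows of 1 and -1."""
    return [[1 if ch == "X" else -1 for ch in row] for row in rows]

# Assumed 9x9 picture of an X.
image = parse([
    ".........",
    ".X.....X.",
    "..X...X..",
    "...X.X...",
    "....X....",
    "...X.X...",
    "..X...X..",
    ".X.....X.",
    ".........",
])

features = [
    parse(["X..", ".X.", "..X"]),   # downward diagonal
    parse(["X.X", ".X.", "X.X"]),   # little X
    parse(["..X", ".X.", "X.."]),   # upward diagonal
]

def convolve(image, feature):
    """Match the feature at every position where it fits inside the image."""
    size = len(feature)
    span = range(size)
    return [[sum(feature[r][c] * image[top + r][left + c]
                 for r in span for c in span) / (size * size)
             for left in range(len(image[0]) - size + 1)]
            for top in range(len(image) - size + 1)]

def convolution_layer(image, features):
    """One image in, one filtered image out per feature."""
    return [convolve(image, feature) for feature in features]

stack = convolution_layer(image, features)
print(len(stack), "filtered images, each",
      len(stack[0]), "x", len(stack[0][0]))
```

Each map in the stack peaks where its own feature occurs: the little X scores 1.0 at the center of the image, and the upward diagonal scores 1.0 along the other arm of the X.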

Shrinking the image stack: Pooling

- Pick a window size (usually 2 or 3)

- Pick a stride (usually 2)

- Walk your window across your filtered images

- From each window, take the maximum value

So the convolution layer is one trick that we have. The next big trick is called pooling. This is how we shrink the image stack, and it's pretty straightforward. We start with a window size, usually 2 × 2 or 3 × 3 pixels, and a stride, usually 2 pixels. In practice those work best. Then we take that window and walk it in strides across each of the filtered images. From each window, we take the maximum value.

We start with our first filtered image. We have our 2 × 2 window, and within it the maximum value is 1, so we track that. Then we move by our stride of 2 pixels: we move 2 pixels to the right and repeat, taking the maximum out of that window.

At the edge we have to be creative. We don't have all the pixels represented, so we take the max of what's there, and we continue doing this across the whole image. When we're done, what we end up with is a similar pattern, but smaller. We can still see that our high values are all on the diagonal, but instead of the 7 × 7 pixels of our filtered image, we now have a 4 × 4 image, so it's about half as big as it was. This makes a lot of sense to do. If you imagine that instead of starting with a 9 × 9 image we had started with a 9000 × 9000 image, shrinking it makes it much more convenient to work with. The other thing pooling does is that it doesn't care where in the window the maximum value occurs. That makes it a little less sensitive to position, and the way this plays out is that if you're looking for a particular feature in an image, it can be a little to the left, a little to the right, maybe a little rotated, and it'll still get picked up. So we do max pooling with all of our stack of filtered images, and in every case we get a smaller set of filtered images.
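Max pooling as described can be sketched as follows. The input map is computed from an assumed 9 × 9 X image and the downward diagonal feature, and the helper names `convolve` and `max_pool` are hypothetical. Windows that hang off the right or bottom edge simply use whatever pixels are there.

```python
def parse(rows):
    """Turn strings of 'X' (bright) and '.' (dark) into rows of 1 and -1."""
    return [[1 if ch == "X" else -1 for ch in row] for row in rows]

# Assumed 9x9 picture of an X and the downward diagonal feature.
image = parse([
    ".........",
    ".X.....X.",
    "..X...X..",
    "...X.X...",
    "....X....",
    "...X.X...",
    "..X...X..",
    ".X.....X.",
    ".........",
])
feature = parse(["X..", ".X.", "..X"])

def convolve(image, feature):
    """Match the feature at every position where it fits inside the image."""
    size = len(feature)
    span = range(size)
    return [[sum(feature[r][c] * image[top + r][left + c]
                 for r in span for c in span) / (size * size)
             for left in range(len(image[0]) - size + 1)]
            for top in range(len(image) - size + 1)]

def max_pool(image, window=2, stride=2):
    """Walk a window across the image in strides and keep each window's maximum."""
    pooled = []
    for top in range(0, len(image), stride):
        pooled_row = []
        for left in range(0, len(image[0]), stride):
            # Slicing clips windows at the edges, so partial windows still work.
            values = [value
                      for row in image[top:top + window]
                      for value in row[left:left + window]]
            pooled_row.append(max(values))
        pooled.append(pooled_row)
    return pooled

filtered = convolve(image, feature)   # 7 x 7
pooled = max_pool(filtered)           # 4 x 4
for row in pooled:
    print(" ".join(f"{value:5.2f}" for value in row))
```

With these assumed inputs the 7 × 7 map shrinks to 4 × 4, and the high values still sit on the diagonal.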

# Normalization: Rectified Linear Units (ReLUs)

Keep the math from breaking by tweaking each of the values just a bit.

Change everything negative to zero.

If we look back at our filtered image, we apply what are called rectified linear units. That's the little computational unit that does this. All it does is step through the image, and everywhere there is a negative value, it changes it to zero. By the time you're done, you have a very similar-looking image, except there are no negative values. They're just zeros. We do this with all of our images, and this becomes another type of layer. So in a rectified linear unit layer, a stack of images becomes a stack of images with no negative values.
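The operation is small enough to sketch in one line of Python. The 3 × 3 block of filtered values below is a made-up example with rounded numbers, and `relu` is a hypothetical function name.

```python
def relu(image):
    """Replace every negative value with zero and leave the rest unchanged."""
    return [[max(0.0, value) for value in row] for row in image]

# Hypothetical corner of a filtered image (rounded values, for illustration only).
filtered = [[ 0.77, -0.11,  0.11],
            [-0.11,  1.00, -0.11],
            [ 0.11, -0.11,  1.00]]

rectified = relu(filtered)
for row in rectified:
    print(row)
```

The positive values pass through untouched, so the pattern of high values on the diagonal is preserved.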

Now, what's really fun, the magic, starts to happen when we stack these layers.

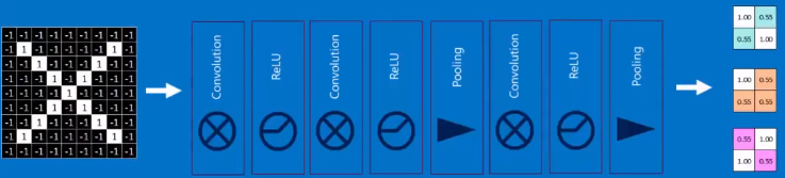

We take these layers, convolution layers, rectified linear unit layers, and pooling layers, and stack them up so that the output of one becomes the input of the next. You will notice that what goes into each of these and what comes out of each looks like an array of pixels, or an array of arrays of pixels. Because of that, we can stack them nicely: we can use the output of one as the input of the next, and by stacking them we get these operations building on top of each other. What's more, we can repeat the stacks. We can do deep stacking. You can imagine making a sandwich that is not just one patty, one slice of cheese, one lettuce, and one tomato, but a whole bunch of layers, double, triple, quadruple decker, as many times as you want. Each time, the image gets more filtered as it goes through convolution layers, and it gets smaller as it goes through pooling layers.
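Because every layer takes a stack of images and returns a stack of images, chaining them is just a loop. The sketch below makes a simplifying assumption that is not from the text: each convolution layer applies every feature to every image in the stack, so the stack grows from 1 to 3 to 9 images. The image, the features, and all function names are hypothetical.

```python
def parse(rows):
    """Turn strings of 'X' (bright) and '.' (dark) into rows of 1 and -1."""
    return [[1 if ch == "X" else -1 for ch in row] for row in rows]

# Assumed 9x9 picture of an X.
image = parse([
    ".........",
    ".X.....X.",
    "..X...X..",
    "...X.X...",
    "....X....",
    "...X.X...",
    "..X...X..",
    ".X.....X.",
    ".........",
])

FEATURES = [
    parse(["X..", ".X.", "..X"]),   # downward diagonal
    parse(["X.X", ".X.", "X.X"]),   # little X
    parse(["..X", ".X.", "X.."]),   # upward diagonal
]

def convolve(image, feature):
    size = len(feature)
    span = range(size)
    return [[sum(feature[r][c] * image[top + r][left + c]
                 for r in span for c in span) / (size * size)
             for left in range(len(image[0]) - size + 1)]
            for top in range(len(image) - size + 1)]

def conv_layer(stack):
    # Simplifying assumption: every feature is applied to every image.
    return [convolve(img, feature) for img in stack for feature in FEATURES]

def relu_layer(stack):
    return [[[max(0.0, v) for v in row] for row in img] for img in stack]

def pool_layer(stack, window=2, stride=2):
    return [[[max(v for row in img[top:top + window] for v in row[left:left + window])
              for left in range(0, len(img[0]), stride)]
             for top in range(0, len(img), stride)]
            for img in stack]

def run(stack, layers):
    """Feed the output of each layer into the next and report the stack shape."""
    for layer in layers:
        stack = layer(stack)
        print(f"{layer.__name__:>10}: {len(stack)} images of "
              f"{len(stack[0])} x {len(stack[0][0])}")
    return stack

output = run([image], [conv_layer, relu_layer, pool_layer,
                       conv_layer, relu_layer, pool_layer])
```

The printed shapes show the two effects described above: convolution layers add filtered images to the stack, and pooling layers make each image smaller.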

# Fully connected layer

Now the final layer in our toolbox is called the fully connected layer. Here, every value gets a vote on what the answer is going to be. We take our now much filtered and much reduced-in-size stack of images, break them out, and rearrange them into a single list, because it's easier to visualize that way. Then each of those connects to one of the answers that we're going to vote for. When we feed in an X, there will be certain values here that tend to be high. They tend to predict very strongly that this is going to be an X, so they get a lot of vote for the X outcome.

Similarly, when we feed a picture of an O into our convolutional neural network, there are certain values here at the end that tend to be very high and tend to predict strongly when we're going to have an O at the end, so they get a lot of weight, a strong vote, for the O category. Now, when we get a new input and we don't know what it is and want to decide, the way this works is that the input goes through all of our convolution, rectified linear unit, and pooling layers and comes out at the end here. We get a series of votes, and then, based on the weights that each value gets to vote with, we get a nice average vote at the end.

In this case, this particular set of inputs votes for an X with a strength of 0.92 and for an O with a strength of 0.51. So here X is definitely the winner, and the neural network would categorize this input as an X.
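The voting step can be sketched as a weighted average per category. Everything in this example is hypothetical: the tiny stack of values, the weights, and the function names `flatten` and `vote`. In a trained network the weights would be learned, not written by hand, and the numbers here are not the 0.92 and 0.51 from the example above.

```python
def flatten(stack):
    """Rearrange a stack of small images into a single list of values."""
    return [value for image in stack for row in image for value in row]

def vote(values, weights):
    """Weighted average: each value votes with the weight it has for a category."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)

# Hypothetical output of the earlier layers: two 1 x 2 images.
stack = [[[1.0, 0.5]],
         [[0.0, 1.0]]]

# Hypothetical weights: which values count toward which answer.
weights = {
    "X": [1.0, 0.0, 0.0, 1.0],
    "O": [0.0, 1.0, 1.0, 0.0],
}

values = flatten(stack)
scores = {label: vote(values, w) for label, w in weights.items()}
winner = max(scores, key=scores.get)
print(scores, "->", winner)
```

Here the values that carry weight for X are both high, so X wins the vote, mirroring how the network in the text settles on X.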

Video link: https://www.bilibili.com/video/av16175135?from=search&seid=9915077106712393932

Source: CSDN

Author: IQ智商不在线

Link: https://blog.csdn.net/qq_37320072/article/details/104552911