webmagic框架:http://webmagic.io/

WebMagic的结构分为Downloader、PageProcessor、Scheduler、Pipeline四大组件

PageProcessor主要分为三个部分,分别是爬虫的配置、页面元素的抽取和链接的发现。

Pipeline用于保存结果的组件,下面我们实现自定义Pipeline,可以实现保存结果到文件、数据库等一系列功能

很多功能自己进去慢慢研究哈,这里就不一一赘述了。

下面直接进入主题,爬我的博客首页的数据:https://www.cnblogs.com/loaderman/

查看首页的源码研究一下下:

第一步:maven配置webmagic 详见:http://webmagic.io/docs/zh/posts/ch2-install/with-maven.html

第二步:直接根据文档进行编码实战:

定义实体类

public class LoadermanModel {

private String title;

private String detailUrl;

private String content;

private String date;

public LoadermanModel() {

}

public LoadermanModel(String title, String detailUrl, String content, String date) {

this.title = title;

this.detailUrl = detailUrl;

this.content = content;

this.date = date;

}

public String getTitle() {

return title;

}

public void setTitle(String title) {

this.title = title;

}

public String getDetailUrl() {

return detailUrl;

}

public void setDetailUrl(String detailUrl) {

this.detailUrl = detailUrl;

}

public String getContent() {

return content;

}

public void setContent(String content) {

this.content = content;

}

public String getDate() {

return date;

}

public void setDate(String date) {

this.date = date;

}

}

自定义PageProcessor

import us.codecraft.webmagic.Page;

import us.codecraft.webmagic.Site;

import us.codecraft.webmagic.processor.PageProcessor;

import us.codecraft.webmagic.selector.Html;

import java.util.ArrayList;

import java.util.List;

public class LoadermanPageProcessor implements PageProcessor {

// 部分一:抓取网站的相关配置,包括编码、抓取间隔、重试次数等

private Site site = Site.me().setRetryTimes(5).setUserAgent("User-Agent: Mozilla/5.0 (Windows NT 6.1; Win64; x64; rv:70.0) Gecko/20100101 Firefox/70.0");

@Override

public void process(Page page) {

List<String> pageItemList = page.getHtml().xpath("//div[@class='post']").all();

ArrayList<LoadermanModel> list = new ArrayList<>();

for (int i = 0; i < pageItemList.size(); i++) {

Html html = Html.create(pageItemList.get(i));

LoadermanModel loadermanModel=new LoadermanModel();

loadermanModel.setTitle(html.xpath("//a[@class='postTitle2']/text()").toString() );

loadermanModel.setDetailUrl(html.xpath("//a[@class='postTitle2']").links().toString());

loadermanModel.setContent(html.xpath("//div[@class='c_b_p_desc']/text()").toString() );

loadermanModel.setDate(html.xpath("//p[@class='postfoot']/text()").toString() );

list.add(loadermanModel);

}

page.putField("data", list);

if (page.getResultItems().get("data") == null) {

//skip this page

page.setSkip(true);

}

}

@Override

public Site getSite() {

return site;

}

}

自定义Pipeline,,对爬取后的数据提取和处理

import com.alibaba.fastjson.JSON;

import us.codecraft.webmagic.ResultItems;

import us.codecraft.webmagic.Task;

import us.codecraft.webmagic.pipeline.Pipeline;

import us.codecraft.webmagic.utils.FilePersistentBase;

import java.io.FileWriter;

import java.io.IOException;

import java.io.PrintWriter;

public class LoadermanlPipeline extends FilePersistentBase implements Pipeline {

public LoadermanlPipeline(String path) {

this.setPath(path);

}

public void process(ResultItems resultItems, Task task) {

String path = "LoadermanlPipelineGetData";

try {

PrintWriter printWriter = new PrintWriter(new FileWriter(this.getFile(path+ ".json")));

printWriter.write(JSON.toJSONString(resultItems.get("data")));

printWriter.close();

} catch (IOException var5) {

}

}

}

开启爬虫:

Spider.create(new LoadermanPageProcessor())

.addUrl("https://www.cnblogs.com/loaderman/")

//自定义Pipeline,保存json文件到本地

.addPipeline(new LoadermanlPipeline("D:\\loaderman\\"))

//开启5个线程抓取

.thread(5)

//启动爬虫

.run();

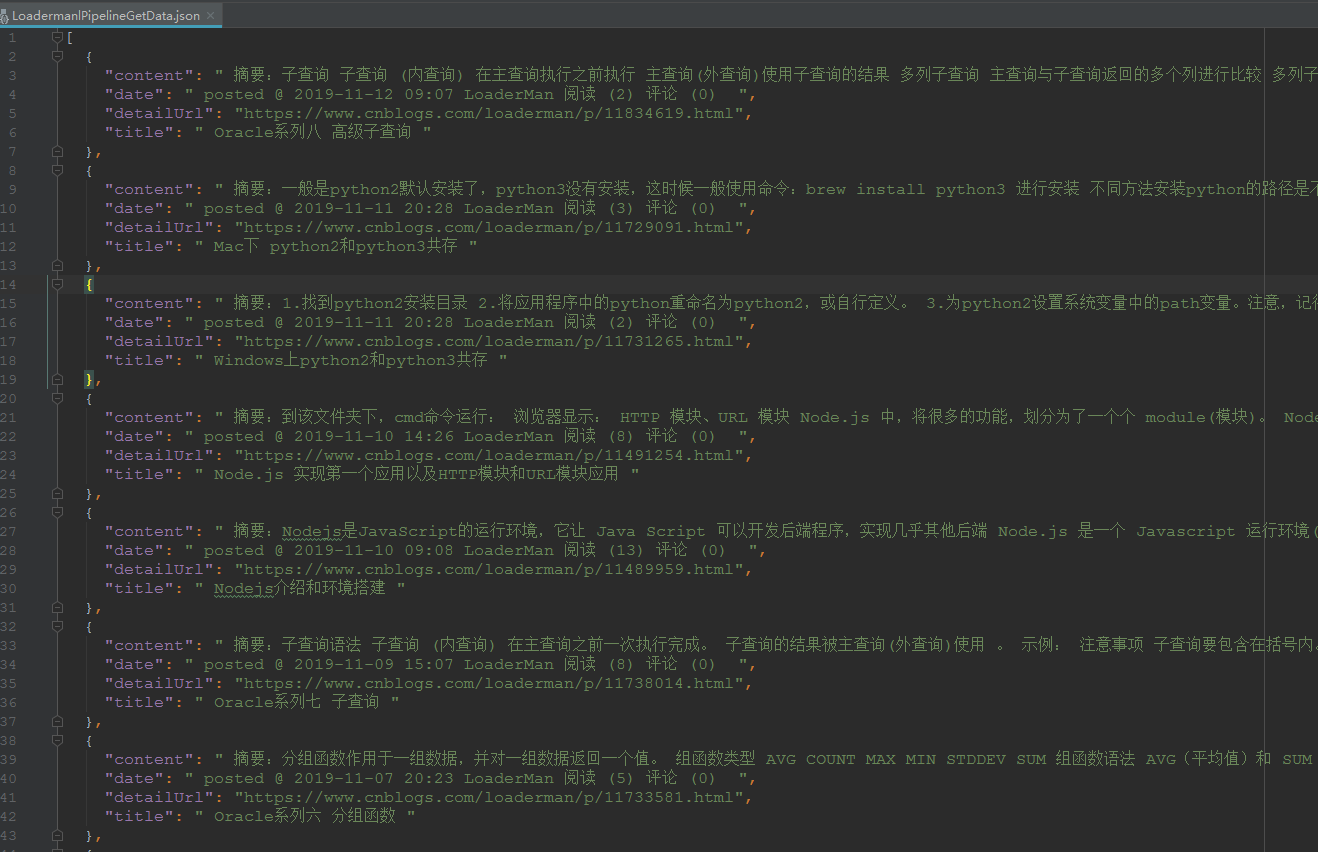

效果如下:

搞定!