Deploying EFK with Helm

Pay attention to the Elasticsearch and Kibana versions that the Helm charts install: the two must be the same.

Container logs are stored on every node under /var/log/containers.

[root@k8s-master efk]# pwd

/root/efk

[root@k8s-master elasticsearch]# kubectl create namespace efk

Add the incubator repository

[root@k8s-master efk]# helm repo add incubator https://charts.helm.sh/incubator

"incubator" has been added to your repositories

Deploy Elasticsearch

[root@k8s-master efk]# helm search elasticsearch

NAME CHART VERSION APP VERSION DESCRIPTION

incubator/elasticsearch 1.10.2 6.4.2 DEPRECATED Flexible and powerful open source, distributed...

The Kibana deployed later must therefore also be version 6.4.2.

[root@k8s-master efk]# helm search incubator/kibana --version 0.14.8

No results found

The incubator repository has no matching Kibana chart, so add the stable repository

[root@k8s-master efk]# helm repo add stable https://charts.helm.sh/stable

"stable" has been added to your repositories

[root@k8s-master elasticsearch]# helm search stable/kibana --version 0.14.8

NAME CHART VERSION APP VERSION DESCRIPTION

stable/kibana 0.14.8 6.4.2 Kibana is an open source data visualization plugin for El...

This is a Kibana chart with the same app version, 6.4.2.
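The version comparison can also be scripted. A minimal sketch, assuming the Helm 2 `helm search` table layout shown above (chart name, chart version, and app version as the first three whitespace-separated columns); `app_version` and `same_app_version` are hypothetical helper names, not part of Helm:

```shell
# Hypothetical helper: read a `helm search` table on stdin and print the
# APP VERSION (third column) of the chart whose NAME is exactly $1.
app_version() {
  awk -v chart="$1" '$1 == chart { print $3 }'
}

# Succeeds only when both versions are non-empty and identical.
same_app_version() {
  [ -n "$1" ] && [ "$1" = "$2" ]
}

# Usage (needs helm and the repositories added above):
#   es=$(helm search incubator/elasticsearch | app_version incubator/elasticsearch)
#   kb=$(helm search stable/kibana --version 0.14.8 | app_version stable/kibana)
#   same_app_version "$es" "$kb" && echo "versions match: $es"
```

Matching on the exact chart name keeps similarly named charts in the search result from being picked up by accident.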

Start the installation

[root@k8s-master efk]# helm fetch incubator/elasticsearch

[root@k8s-master efk]# ls

elasticsearch-1.10.2.tgz

[root@k8s-master efk]# tar -zxvf elasticsearch-1.10.2.tgz

[root@k8s-master efk]# cd elasticsearch

[root@k8s-master elasticsearch]# ls

Chart.yaml ci README.md templates values.yaml

[root@k8s-master elasticsearch]# vi values.yaml

Change the following settings:

MINIMUM_MASTER_NODES: "1"

client:
  replicas: 1

master:
  replicas: 1
  persistence:
    enabled: false

data:
  replicas: 1
  persistence:
    enabled: false
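The replica and persistence overrides can also be passed on the command line rather than edited into the file. A sketch in the Helm 2 syntax used throughout this post; `install_es` is a hypothetical wrapper. `MINIMUM_MASTER_NODES` is deliberately not included because its parent key is not visible in the excerpt, so that setting still comes from the edited values.yaml:

```shell
# Dotted paths mirror the values.yaml keys edited above.
ES_OVERRIDES="client.replicas=1,master.replicas=1,master.persistence.enabled=false,data.replicas=1,data.persistence.enabled=false"

# Hypothetical wrapper: values.yaml supplies everything else
# (including MINIMUM_MASTER_NODES); --set takes precedence over -f.
install_es() {
  helm install --name els1 --namespace=efk -f values.yaml \
    --set "$ES_OVERRIDES" incubator/elasticsearch
}

# install_es   # uncomment to run against a real cluster
```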

[root@k8s-master elasticsearch]# vi values.yaml

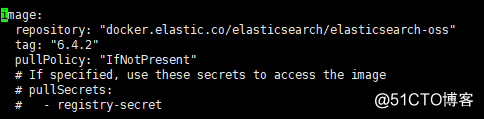

Checking values.yaml shows that the image to pull is docker.elastic.co/elasticsearch/elasticsearch-oss:6.4.2.

Pull docker.elastic.co/elasticsearch/elasticsearch-oss:6.4.2 on every node (run here from /root)

[root@k8s-master fluentd-elasticsearch]# cd /root

[root@k8s-master ~]# pwd

/root

[root@k8s-master ~]# docker pull docker.elastic.co/elasticsearch/elasticsearch-oss:6.4.2

[root@k8s-master ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

docker.elastic.co/elasticsearch/elasticsearch-oss 6.4.2 11e335c1a714 2 years ago 715MB
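Repeating the pull by hand on each node gets tedious. A rough sketch of doing it from the master, assuming passwordless root SSH and Docker on every node; `pull_on_nodes` is a hypothetical helper and `k8s-node1` is an assumed hostname:

```shell
# Hypothetical helper: pull image $1 on each remaining argument
# (a node hostname) over SSH, stopping at the first failure.
pull_on_nodes() {
  image=$1
  shift
  for node in "$@"; do
    echo "pulling $image on $node"
    ssh "root@$node" docker pull "$image" || return 1
  done
}

# Usage:
#   pull_on_nodes docker.elastic.co/elasticsearch/elasticsearch-oss:6.4.2 k8s-node1 k8s-node2
```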

[root@k8s-master elasticsearch]# helm install --name els1 --namespace=efk -f values.yaml incubator/elasticsearch

NAME: els1

LAST DEPLOYED: Thu Dec 10 12:37:05 2020

NAMESPACE: efk

STATUS: DEPLOYED

RESOURCES:

==> v1/ConfigMap

NAME DATA AGE

els1-elasticsearch 4 0s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

els1-elasticsearch-client-59bcdcbfb7-ck8mb 0/1 Init:0/1 0 0s

els1-elasticsearch-data-0 0/1 Init:0/2 0 0s

els1-elasticsearch-master-0 0/1 Init:0/2 0 0s

==> v1/Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

els1-elasticsearch-client ClusterIP 10.99.78.35 <none> 9200/TCP 0s

els1-elasticsearch-discovery ClusterIP None <none> 9300/TCP 0s

==> v1beta1/Deployment

NAME READY UP-TO-DATE AVAILABLE AGE

els1-elasticsearch-client 0/1 1 0 0s

==> v1beta1/StatefulSet

NAME READY AGE

els1-elasticsearch-data 0/1 0s

els1-elasticsearch-master 0/1 0s

NOTES:

The elasticsearch cluster has been installed.

Please note that this chart has been deprecated and moved to stable.

Going forward please use the stable version of this chart.

Elasticsearch can be accessed:

-

Within your cluster, at the following DNS name at port 9200:

els1-elasticsearch-client.efk.svc

-

From outside the cluster, run these commands in the same shell:

export POD_NAME=$(kubectl get pods --namespace efk -l "app=elasticsearch,component=client,release=els1" -o jsonpath="{.items[0].metadata.name}")

echo "Visit http://127.0.0.1:9200 to use Elasticsearch"

kubectl port-forward --namespace efk $POD_NAME 9200:9200

[root@k8s-master ~]# kubectl get pod -n efk

NAME READY STATUS RESTARTS AGE

els1-elasticsearch-client-59bcdcbfb7-ck8mb 1/1 Running 0 112s

els1-elasticsearch-data-0 1/1 Running 0 112s

els1-elasticsearch-master-0 1/1 Running 0 112s

[root@k8s-master ~]# kubectl get svc -n efk

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

els1-elasticsearch-client ClusterIP 10.99.78.35 <none> 9200/TCP 3m45s

els1-elasticsearch-discovery ClusterIP None <none> 9300/TCP 3m45s

[root@k8s-master elasticsearch]# kubectl run cirror-$RANDOM --rm -it --image=cirros -- /bin/sh

kubectl run --generator=deployment/apps.v1 is DEPRECATED and will be removed in a future version. Use kubectl run --generator=run-pod/v1 or kubectl create instead.

If you don't see a command prompt, try pressing enter.

/ # curl 10.99.78.35:9200/_cat/nodes

10.244.0.73 5 97 22 2.33 2.29 1.50 di - els1-elasticsearch-data-0

10.244.0.74 16 97 16 2.33 2.29 1.50 mi * els1-elasticsearch-master-0

10.244.0.72 16 97 31 2.33 2.29 1.50 i - els1-elasticsearch-client-59bcdcbfb7-ck8mb

/ # exit

Session ended, resume using 'kubectl attach cirror-14088-7f65bc86-6pjsz -c cirror-14088 -i -t' command when the pod is running

deployment.apps "cirror-14088" deleted

The test succeeds: Elasticsearch answers on the client Service and lists all three nodes.
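In the `_cat/nodes` output above, the second-to-last column marks the elected master with `*`. A small sketch that pulls out that node's name, assuming the default column layout shown in the transcript; `es_master` is a hypothetical helper:

```shell
# Hypothetical helper: read `_cat/nodes` output on stdin and print the name
# (last column) of the node whose master column (second to last) is "*".
es_master() {
  awk '$(NF-1) == "*" { print $NF }'
}

# Usage from a pod inside the cluster:
#   curl -s 10.99.78.35:9200/_cat/nodes | es_master
```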

Deploy Fluentd

[root@k8s-master elasticsearch]# cd ..

[root@k8s-master efk]# helm fetch stable/fluentd-elasticsearch

[root@k8s-master efk]# ls

elasticsearch elasticsearch-1.10.2.tgz fluentd-elasticsearch-2.0.7.tgz

[root@k8s-master efk]# tar -zxvf fluentd-elasticsearch-2.0.7.tgz

[root@k8s-master efk]# cd fluentd-elasticsearch

Change the Elasticsearch address in values.yaml to the ClusterIP of the els1-elasticsearch-client Service

[root@k8s-master fluentd-elasticsearch]# vi values.yaml

elasticsearch:
  host: '10.99.78.35'
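A ClusterIP changes if the Service is deleted and recreated. The Elasticsearch chart NOTES above list an in-cluster DNS name, `els1-elasticsearch-client.efk.svc`, which could be used instead of the IP. A sketch that sets it at install time in Helm 2 syntax; `install_fluentd` is a hypothetical wrapper, and this variant was not part of the original walkthrough:

```shell
# DNS name taken from the Elasticsearch chart NOTES earlier in this post.
ES_HOST=els1-elasticsearch-client.efk.svc

# Hypothetical wrapper: override elasticsearch.host without editing values.yaml.
install_fluentd() {
  helm install --name flu1 --namespace=efk \
    --set "elasticsearch.host=$ES_HOST" stable/fluentd-elasticsearch
}

# install_fluentd   # uncomment to run against a real cluster
```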

[root@k8s-master fluentd-elasticsearch]# helm install --name flu1 --namespace=efk -f values.yaml stable/fluentd-elasticsearch

NAME: flu1

LAST DEPLOYED: Thu Dec 10 12:44:46 2020

NAMESPACE: efk

STATUS: DEPLOYED

RESOURCES:

==> v1/ClusterRole

NAME AGE

flu1-fluentd-elasticsearch 0s

==> v1/ClusterRoleBinding

NAME AGE

flu1-fluentd-elasticsearch 0s

==> v1/ConfigMap

NAME DATA AGE

flu1-fluentd-elasticsearch 6 1s

==> v1/DaemonSet

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

flu1-fluentd-elasticsearch 3 3 0 3 0 <none> 0s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

flu1-fluentd-elasticsearch-4sdsd 0/1 ContainerCreating 0 0s

flu1-fluentd-elasticsearch-6478z 0/1 ContainerCreating 0 0s

flu1-fluentd-elasticsearch-jq4zt 0/1 ContainerCreating 0 0s

==> v1/ServiceAccount

NAME SECRETS AGE

flu1-fluentd-elasticsearch 1 1s

NOTES:

-

To verify that Fluentd has started, run:

kubectl --namespace=efk get pods -l "app.kubernetes.io/name=fluentd-elasticsearch,app.kubernetes.io/instance=flu1"

THIS APPLICATION CAPTURES ALL CONSOLE OUTPUT AND FORWARDS IT TO elasticsearch . Anything that might be identifying,

including things like IP addresses, container images, and object names will NOT be anonymized.

[root@k8s-master fluentd-elasticsearch]# kubectl get pod -n efk

NAME READY STATUS RESTARTS AGE

els1-elasticsearch-client-5678fb458d-cnb9c 1/1 Running 0 22m

els1-elasticsearch-data-0 1/1 Running 0 22m

els1-elasticsearch-master-0 1/1 Running 0 22m

flu1-fluentd-elasticsearch-crhrl 0/1 ImagePullBackOff 0 3m21s

flu1-fluentd-elasticsearch-mm2tk 0/1 ImagePullBackOff 0 3m21s

flu1-fluentd-elasticsearch-pcpmw 0/1 ImagePullBackOff 0 3m21s

[root@k8s-master fluentd-elasticsearch]# kubectl describe pod flu1-fluentd-elasticsearch-crhrl -n efk

Events:

Type Reason Age From Message

Normal Scheduled 2m54s default-scheduler Successfully assigned efk/flu1-fluentd-elasticsearch-crhrl to k8s-node2

Normal Pulling 40s (x4 over 2m47s) kubelet, k8s-node2 Pulling image "gcr.io/google-containers/fluentd-elasticsearch:v2.3.2"

Warning Failed 20s (x4 over 2m32s) kubelet, k8s-node2 Failed to pull image "gcr.io/google-containers/fluentd-elasticsearch:v2.3.2": rpc error: code = Unknown desc = Error response from daemon: Get https://gcr.io/v2/: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

Warning Failed 20s (x4 over 2m32s) kubelet, k8s-node2 Error: ErrImagePull

Normal BackOff 8s (x5 over 2m31s) kubelet, k8s-node2 Back-off pulling image "gcr.io/google-containers/fluentd-elasticsearch:v2.3.2"

Warning Failed 8s (x5 over 2m31s) kubelet, k8s-node2 Error: ImagePullBackOff
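When a namespace holds many pods, it helps to filter the listing down to the ones that are not healthy before running `kubectl describe`. A minimal sketch, assuming the default `kubectl get pod` column order (NAME, READY, STATUS, ...); `not_running` is a hypothetical helper:

```shell
# Hypothetical helper: read `kubectl get pod` output on stdin and print
# "name status" for every pod whose STATUS column is not Running.
not_running() {
  awk 'NR > 1 && $3 != "Running" { print $1, $3 }'
}

# Usage:
#   kubectl get pod -n efk | not_running
```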

[root@k8s-master fluentd-elasticsearch]# vi values.yaml

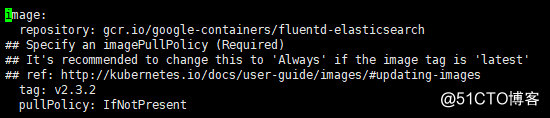

Checking values.yaml shows that the image being pulled is fluentd-elasticsearch:v2.3.2.

Use *** to download gcr.io/google-containers/fluentd-elasticsearch:v2.3.2, since gcr.io cannot be reached directly from the nodes

docker pull gcr.io/google-containers/fluentd-elasticsearch:v2.3.2

Then copy the saved image archive to /root on every node and load it
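The transcript does not show how the pulled image became `fluentd-elasticsearch.tar`. One common way is `docker save` on the machine that can reach gcr.io, followed by a copy and `docker load` on each node. A sketch under those assumptions (passwordless root SSH to the nodes; `ship_image` is a hypothetical helper and `k8s-node1` an assumed hostname):

```shell
# Hypothetical helper: save image $1 to tarball $2, copy it to /root on each
# remaining argument (a node hostname), and load it into Docker there.
ship_image() {
  image=$1
  tarball=$2
  shift 2
  docker save -o "$tarball" "$image" || return 1
  for node in "$@"; do
    scp "$tarball" "root@$node:/root/" || return 1
    ssh "root@$node" docker load -i "/root/$(basename "$tarball")" || return 1
  done
}

# Usage:
#   ship_image gcr.io/google-containers/fluentd-elasticsearch:v2.3.2 \
#     fluentd-elasticsearch.tar k8s-master k8s-node1 k8s-node2
```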

[root@k8s-master fluentd-elasticsearch]# cd /root

[root@k8s-master ~]# pwd

/root

[root@k8s-master ~]# docker load < fluentd-elasticsearch.tar

[root@k8s-master ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

gcr.io/google-containers/fluentd-elasticsearch v2.3.2 c212b82d064e 2 years ago 140MB

[root@k8s-master fluentd-elasticsearch]# cd /root/efk/fluentd-elasticsearch

Delete the failed flu1 release

[root@k8s-master fluentd-elasticsearch]# helm del --purge flu1

Install it again

[root@k8s-master fluentd-elasticsearch]# helm install --name flu1 --namespace=efk -f values.yaml stable/fluentd-elasticsearch

NAME: flu1

LAST DEPLOYED: Thu Dec 10 09:00:54 2020

NAMESPACE: efk

STATUS: DEPLOYED

RESOURCES:

==> v1/ClusterRole

NAME AGE

flu1-fluentd-elasticsearch 3s

==> v1/ClusterRoleBinding

NAME AGE

flu1-fluentd-elasticsearch 3s

==> v1/ConfigMap

NAME DATA AGE

flu1-fluentd-elasticsearch 6 3s

==> v1/DaemonSet

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

flu1-fluentd-elasticsearch 3 3 0 3 0 <none> 3s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

flu1-fluentd-elasticsearch-6fh96 0/1 ContainerCreating 0 2s

flu1-fluentd-elasticsearch-cvs57 0/1 ContainerCreating 0 2s

flu1-fluentd-elasticsearch-ssw47 0/1 ContainerCreating 0 2s

==> v1/ServiceAccount

NAME SECRETS AGE

flu1-fluentd-elasticsearch 1 3s

NOTES:

-

To verify that Fluentd has started, run:

kubectl --namespace=efk get pods -l "app.kubernetes.io/name=fluentd-elasticsearch,app.kubernetes.io/instance=flu1"

THIS APPLICATION CAPTURES ALL CONSOLE OUTPUT AND FORWARDS IT TO elasticsearch . Anything that might be identifying,

including things like IP addresses, container images, and object names will NOT be anonymized.

[root@k8s-master fluentd-elasticsearch]# kubectl get pod -n efk

NAME READY STATUS RESTARTS AGE

els1-elasticsearch-client-5678fb458d-cnb9c 1/1 Running 0 15h

els1-elasticsearch-data-0 1/1 Running 0 15h

els1-elasticsearch-master-0 1/1 Running 0 15h

flu1-fluentd-elasticsearch-6fh96 1/1 Running 0 33s

flu1-fluentd-elasticsearch-cvs57 1/1 Running 0 33s

flu1-fluentd-elasticsearch-ssw47 1/1 Running 0 33s

Deploy Kibana

[root@k8s-master elasticsearch]# cd ..

[root@k8s-master efk]# helm fetch stable/kibana --version 0.14.8

[root@k8s-master efk]# ls

elasticsearch elasticsearch-1.10.2.tgz fluentd-elasticsearch fluentd-elasticsearch-2.0.7.tgz kibana-0.14.8.tgz

[root@k8s-master efk]# tar -zxvf kibana-0.14.8.tgz

[root@k8s-master efk]# cd kibana

[root@k8s-master kibana]# vi values.yaml

Change the following:

files:
  kibana.yml:
    elasticsearch.hosts: http://10.99.78.35:9200

[root@k8s-master kibana]# vi values.yaml

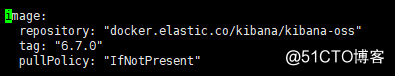

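Check which image the chart needs, then pull docker.elastic.co/kibana/kibana-oss:6.7.0 on every node. Note that this tag does not match the 6.4.2 app version that helm search reported for chart 0.14.8 earlier, so confirm the tag in your own values.yaml before pulling.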

[root@k8s-master kibana]# docker pull docker.elastic.co/kibana/kibana-oss:6.7.0

[root@k8s-master kibana]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

docker.elastic.co/kibana/kibana-oss 6.7.0 c50123b45502 20 months ago 453MB

[root@k8s-master kibana]# helm install --name kib1 --namespace=efk -f values.yaml stable/kibana --version 0.14.8

NAME: kib1

LAST DEPLOYED: Thu Dec 10 12:52:08 2020

NAMESPACE: efk

STATUS: DEPLOYED

RESOURCES:

==> v1/ConfigMap

NAME DATA AGE

kib1-kibana 1 1s

==> v1/Pod(related)

NAME READY STATUS RESTARTS AGE

kib1-kibana-6c49f68cf-ttd96 0/1 ContainerCreating 0 1s

==> v1/Service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kib1-kibana ClusterIP 10.111.158.168 <none> 443/TCP 1s

==> v1beta1/Deployment

NAME READY UP-TO-DATE AVAILABLE AGE

kib1-kibana 0/1 1 0 1s

NOTES:

To verify that kib1-kibana has started, run:

kubectl --namespace=efk get pods -l "app=kibana"

Kibana can be accessed:

-

From outside the cluster, run these commands in the same shell:

export POD_NAME=$(kubectl get pods --namespace efk -l "app=kibana,release=kib1" -o jsonpath="{.items[0].metadata.name}")

echo "Visit http://127.0.0.1:5601 to use Kibana"

kubectl port-forward --namespace efk $POD_NAME 5601:5601

[root@k8s-master kibana]# kubectl get pod -n efk

NAME READY STATUS RESTARTS AGE

els1-elasticsearch-client-59bcdcbfb7-ck8mb 1/1 Running 0 16m

els1-elasticsearch-data-0 1/1 Running 0 16m

els1-elasticsearch-master-0 1/1 Running 0 16m

flu1-fluentd-elasticsearch-4sdsd 1/1 Running 0 8m47s

flu1-fluentd-elasticsearch-6478z 1/1 Running 0 8m47s

flu1-fluentd-elasticsearch-jq4zt 1/1 Running 0 8m47s

kib1-kibana-6c49f68cf-ttd96 1/1 Running 0 84s

[root@k8s-master ~]# kubectl get svc -n efk

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

els1-elasticsearch-client ClusterIP 10.99.78.35 <none> 9200/TCP 16m

els1-elasticsearch-discovery ClusterIP None <none> 9300/TCP 16m

kib1-kibana ClusterIP 10.111.158.168 <none> 443/TCP 65s

[root@k8s-master kibana]# kubectl edit svc kib1-kibana -n efk

Change the Service type to

type: NodePort
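The same change can be made without opening an editor by using `kubectl patch`. A minimal sketch; `expose_kibana` is a hypothetical wrapper around the Service name and namespace used in this post:

```shell
# Hypothetical wrapper: switch the kib1-kibana Service to NodePort
# with a strategic merge patch instead of an interactive edit.
expose_kibana() {
  kubectl patch svc kib1-kibana -n efk -p '{"spec":{"type":"NodePort"}}'
}

# expose_kibana   # uncomment to run against a real cluster
```

A patch like this is easier to repeat or put into a script than `kubectl edit`, though a later `helm upgrade` of the release may reset the Service type.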

[root@k8s-master ~]# kubectl get svc -n efk

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

els1-elasticsearch-client ClusterIP 10.99.78.35 <none> 9200/TCP 17m

els1-elasticsearch-discovery ClusterIP None <none> 9300/TCP 17m

kib1-kibana NodePort 10.111.158.168 <none> 443:31676/TCP 2m5s

Kibana is now reachable at http://10.10.21.8:31676 (a node IP plus the assigned NodePort)
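The NodePort is assigned at random, so the URL differs per cluster. A small sketch that derives it from the PORT(S) column shown above; `node_port` and `kibana_url` are hypothetical helpers, and the node IP must be supplied by the reader:

```shell
# Hypothetical helper: extract the node port from a PORT(S) value
# such as "443:31676/TCP".
node_port() {
  p=${1#*:}          # drop the service port and colon ("443:")
  echo "${p%%/*}"    # drop the protocol suffix ("/TCP")
}

# Hypothetical helper: build the URL from a node IP and a PORT(S) value.
kibana_url() {
  echo "http://$1:$(node_port "$2")"
}

# Usage:
#   kibana_url 10.10.21.8 443:31676/TCP
# The port can also be read directly from the API:
#   kubectl get svc kib1-kibana -n efk -o jsonpath='{.spec.ports[0].nodePort}'
```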

Source: oschina

Link: https://my.oschina.net/u/4417091/blog/4794131