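The previous article deployed the stack directly with docker-compose, which is the simplest approach. To run on k8s or k3s clusters, however, the deployment has to be converted to YAML manifests or a Helm chart. An earlier attempt with plain YAML ended in "labels not found" and no logs were collected, so this article deploys with Helm.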

1. Installing Helm (this article uses v2.14.3)

Helm v2 consists of two parts: the Helm client and the Tiller server.

a) Download the Helm client

wget https://get.helm.sh/helm-v2.14.3-linux-amd64.tar.gz && tar zxvf helm-v2.14.3-linux-amd64.tar.gz

cd linux-amd64

chmod +x helm

cp helm /usr/local/bin

helm version

Client: &version.Version{SemVer:"v2.14.3", GitCommit:"0e7f3b6637f7af8fcfddb3d2941fcc7cbebb0085", GitTreeState:"clean"}

b) Install the Tiller server. On a k8s or k3s cluster, Tiller needs a ServiceAccount named tiller that is bound to the cluster-admin ClusterRole. Put the following in rbac.yaml:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: tiller
    namespace: kube-system

kubectl apply -f rbac.yaml

Then run helm init to create the Tiller server.

# On a k8s cluster

helm init --service-account tiller --tiller-image registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.14.3

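# On a k3s cluster: because of the Kubernetes version, the Deployment manifest generated by helm init has to be patched (apiVersion and selector), otherwise the apply fails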

helm init --tiller-image registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.14.3 --service-account tiller --override spec.selector.matchLabels.'name'='tiller',spec.selector.matchLabels.'app'='helm' --output yaml | sed 's@apiVersion: extensions/v1beta1@apiVersion: apps/v1@' | k3s kubectl apply -f -
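The sed step in the k3s command only rewrites the apiVersion line of the manifest that helm init prints. A minimal sketch of that rewrite, run on a hand-written stand-in for the generated manifest (the sample text is illustrative, not captured helm init output, and the function name is made up):

```shell
# Stand-in for the first lines of the manifest printed by
# `helm init --output yaml` (illustrative sample, not real output).
sample_manifest='apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: tiller-deploy'

# Same substitution as in the command above: move the Deployment to apps/v1.
patch_api_version() {
  sed 's@apiVersion: extensions/v1beta1@apiVersion: apps/v1@'
}

patched=$(printf '%s\n' "$sample_manifest" | patch_api_version)
printf '%s\n' "$patched"
```

A Deployment under apps/v1 must declare spec.selector, which is why the command also passes the two --override spec.selector.matchLabels values.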

After steps a) and b), helm version should report both the client and the server:

Client: &version.Version{SemVer:"v2.14.3", GitCommit:"0e7f3b6637f7af8fcfddb3d2941fcc7cbebb0085", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.14.3", GitCommit:"0e7f3b6637f7af8fcfddb3d2941fcc7cbebb0085", GitTreeState:"clean"}

Note: after creating the tiller ServiceAccount and running helm init, helm version may still fail like this:

root@aaa:~/helm# helm version

Client: &version.Version{SemVer:"v2.14.3", GitCommit:"0e7f3b6637f7af8fcfddb3d2941fcc7cbebb0085", GitTreeState:"clean"}

Error: Get http://localhost:8080/api/v1/namespaces/kube-system/pods?labelSelector=app%3Dhelm%2Cname%3Dtiller: dial tcp [::1]:8080: connect: connection refused

# Fix:

kubectl config view --raw > ~/.kube/config

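# Presumably helm needs the cluster's kubeconfig, which by default lives at ~/.kube/config; without it helm falls back to localhost:8080, as the error shows. So in most setups this default file has to be in place.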
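To check which kubeconfig would be picked up before running helm, something like the following can help. It is a sketch under assumptions: the function name is hypothetical, it handles only a single path in KUBECONFIG (not a colon-separated list), and /etc/rancher/k3s/k3s.yaml is the usual k3s location but may differ on your install.

```shell
# Hypothetical helper: print the kubeconfig file that would be used,
# or return non-zero if none exists.
find_kubeconfig() {
  # An explicit KUBECONFIG takes precedence over the default location.
  candidate="${KUBECONFIG:-$HOME/.kube/config}"
  if [ -f "$candidate" ]; then
    printf '%s\n' "$candidate"
    return 0
  fi
  return 1
}

if cfg=$(find_kubeconfig); then
  echo "kubeconfig found: $cfg"
else
  echo "no kubeconfig found; helm would try localhost:8080" >&2
  # Assumed default path of the k3s kubeconfig; adjust if yours differs.
  echo "on k3s, try: export KUBECONFIG=/etc/rancher/k3s/k3s.yaml" >&2
fi
```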

2. Deploying grafana-loki with Helm

First add the chart repositories:

#helm repo add stable https://kubernetes.oss-cn-hangzhou.aliyuncs.com/charts

helm repo add loki https://grafana.github.io/loki/charts

helm repo add stable https://mirror.azure.cn/kubernetes/charts/

helm repo add incubator https://mirror.azure.cn/kubernetes/charts-incubator/

helm repo list

The official documentation installs Loki with its default configuration using:

helm upgrade --install loki loki/loki-stack

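The downloaded chart archive is cached in /root/.helm/cache/archive; run the following from that directory.

# Unpack the chart archive and edit the configuration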

tar zxvf loki-stack-0.38.3.tgz

vim ~/.helm/cache/archive/loki-stack/charts/promtail/values.yaml

# Locate volumes in the file
- name: docker
  hostPath:
    path: /var/lib/docker/containers
- name: pods
  hostPath:
    path: /var/log/pods

# Locate volumeMounts in the file
- name: docker
  mountPath: /var/lib/docker/containers
- name: pods
  mountPath: /var/log/pods
  readOnly: true

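# Normally nothing needs to change here, because Docker's default data directory is /var/lib/docker

# On this OpenWrt host, however, Docker's default data directory is /opt/docker

# and the files under /var/log/pods/ are symlinks into /opt/docker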

root@OpenWrt:~/.helm/cache/archive# ls -lh /var/log/pods/kube-system_coredns-6c6bb68b64-nflwx_75536291-77d8-41fc-aae5-3a0c83fa0949/coredns/

lrwxrwxrwx 1 root root 161 Aug 5 16:38 17.log -> /opt/docker/containers/90d84a79e0655ecf03e3f620eb9921290c8f7917d190f8a1398fc4903504286f/90d84a79e0655ecf03e3f620eb9921290c8f7917d190f8a1398fc4903504286f-json.log

# With the default mounts, promtail keeps reporting: Unable to find any logs to tail. Please verify permissions

# So point the docker mount at the directory the /var/log/pods symlinks resolve to

# volumes
- name: docker
  hostPath:
    path: /opt/docker/containers
- name: pods
  hostPath:
    path: /var/log/pods

# volumeMounts
- name: docker
  mountPath: /opt/docker/containers
- name: pods
  mountPath: /var/log/pods
  readOnly: true

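Make sure the mounted directory (/var/lib/docker/containers by default, /opt/docker/containers on this host) really is where the host's Docker writes container logs. Only then can promtail collect the logs of all containers running on the host.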
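Since the mounts have to cover wherever the /var/log/pods symlinks point, it helps to list those targets before editing values.yaml. A rough sketch, assuming find and readlink -f are available on the host (GNU coreutils or BusyBox); the function name is illustrative:

```shell
# Hypothetical helper: print the distinct directories that the *.log
# symlinks under a pod log directory (default /var/log/pods) resolve into.
log_target_dirs() {
  root="${1:-/var/log/pods}"
  # Nothing to report if the directory does not exist on this host.
  [ -d "$root" ] || return 0
  find "$root" -type l -name '*.log' | while IFS= read -r link; do
    # Resolve the symlink to its final target and keep the containing directory.
    target=$(readlink -f "$link") || continue
    dirname "$target"
  done | sort -u
}

log_target_dirs /var/log/pods
```

On the OpenWrt host described here, the printed directories sit under /opt/docker/containers, which is the path to use for the docker hostPath and mountPath.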

After editing the configuration, install Loki from the local chart:

helm install ./loki-stack --name=loki --namespace=default

# Check the running pods

kubectl get po -o wide

loki-promtail-6n9zj 1/1 Running 0 14h 10.42.0.30 aaa

loki-0 1/1 Running 0 14h 10.42.0.31 aaa

# List releases, and delete the release if needed

helm ls --all

helm del --purge loki

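3. Installing Grafana and connecting it to Loki

Grafana was already installed in this cluster, so it could be configured directly. Otherwise, install Grafana with Helm.

# Install Grafana with Helm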

# 1. Download values.yaml. Note the persistence.enabled setting: either set it to false or have a PV ready

wget https://raw.githubusercontent.com/helm/charts/master/stable/grafana/values.yaml

#2. helm install

helm install --name grafana stable/grafana -f values.yaml

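# This approach is convenient, but it can run into version mismatches, so that Kubernetes resource definitions no longer match the cluster, e.g. the Deployment apiVersion (extensions/v1beta1 --> apps/v1)

# Run the following commands from the directory that contains loki-stack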

helm install --name loki loki-stack/

helm upgrade loki loki-stack/

# In practice, the following way of installing Grafana with Helm worked

# 1. Add the China mirror repositories

helm repo add stable https://mirror.azure.cn/kubernetes/charts/

helm repo add incubator https://mirror.azure.cn/kubernetes/charts-incubator/

# 2. Update the repositories, then list the available Grafana versions

helm repo update

helm search grafana

NAME               CHART VERSION  APP VERSION  DESCRIPTION
incubator/grafana  0.1.4          0.0.1        DEPRECATED - incubator/grafana
stable/grafana     5.5.2          7.1.1        The leading tool for querying and visualizing time series...

# 3. Install

helm install --name=grafana stable/grafana --namespace=monitoring

Alternatively, install Grafana directly from YAML manifests:

#cat grafana-dep.yaml

#cat grafana-ing.yaml

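On the first login to Grafana you need the initial password of the admin user:

# Read the secret and base64-decode it (add -n <namespace> if Grafana is not in the current namespace)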

kubectl get secret grafana -o jsonpath="{.data.admin-password}" | base64 --decode ; echo

# Or, once you have the secret value, decode it with the base64 command

echo OVVhM3JRWnI5Vg== | base64 -d

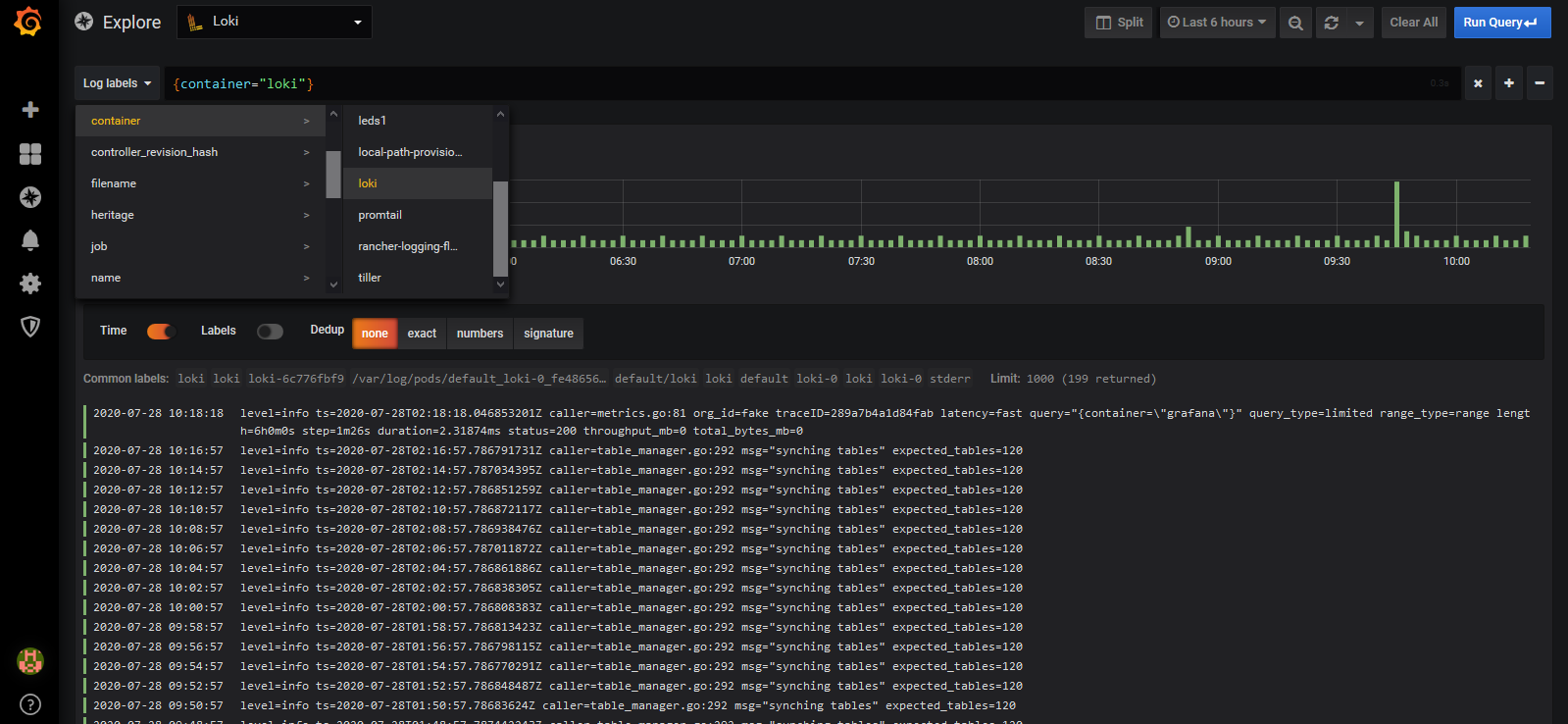

After logging in to Grafana, add Loki as a data source so that it can be queried in Explore. Set the URL to http://loki.default:3100, then save and test.

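Problem: after the cluster or Grafana restarts, the password has to be set again and the Loki data source (URL http://loki.default:3100) has to be re-added. To avoid this, persist Grafana's data with a PVC by changing grafana-deployment.yaml: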

#cat grafana-deployment.yaml

volumeMounts:
  - name: grafana-persistent-storage
    mountPath: /var
volumes:
  - name: grafana-persistent-storage
    emptyDir: {}

# Change it to:
volumeMounts:
  - mountPath: /var/lib/grafana
    name: storage
volumes:
  - name: storage
    persistentVolumeClaim:
      claimName: grafana

This requires a PVC named grafana. The namespaces must match: if grafana-deployment is deployed in namespace grafana, the PVC must be created in the grafana namespace as well.

#cat grafana-pvc.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana
  namespace: grafana
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi

#cat grafana-pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: grafana
spec:
  capacity:
    storage: 1Gi
  accessModes:
    - ReadWriteOnce
  # one of: Recycle, Delete, Retain
  persistentVolumeReclaimPolicy: Delete
  hostPath:
    path: /opt/grafana/data
    type: DirectoryOrCreate

With this in place, Grafana keeps its data source configuration across restarts.
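Besides persisting /var/lib/grafana, the Loki data source described earlier can be declared in the chart values so that it is recreated on every start. The sketch below writes such a values file. It assumes the stable/grafana chart's datasources value (check the values.yaml of your chart version), and the file name is arbitrary.

```shell
# Write a values file that provisions Loki as a Grafana data source.
# Assumption: the chart renders the `datasources` value into Grafana's
# provisioning directory; verify against your chart version's values.yaml.
values_file="grafana-loki-datasource.yaml"
cat > "$values_file" <<'EOF'
datasources:
  datasources.yaml:
    apiVersion: 1
    datasources:
      - name: Loki
        type: loki
        access: proxy
        url: http://loki.default:3100
EOF

# Then pass it to the release, for example:
#   helm upgrade --install grafana stable/grafana -f grafana-loki-datasource.yaml
```

Provisioning covers the data source only; dashboards and users created in the UI still depend on the persistent volume.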

Because Grafana here is installed with Helm, persistence can also be enabled directly in values.yaml:

#vim ~/.helm/cache/archive/grafana/values.yaml

persistence:
  type: pvc
  enabled: true
  # storageClassName: default
  accessModes:
    - ReadWriteOnce
  size: 1Gi
  # annotations: {}
  finalizers:
    - kubernetes.io/pvc-protection
  # subPath: ""
  # existingClaim:

# Just set enabled to true and adjust size; by default the claim uses the cluster's default StorageClass (local-path on this cluster)

NAME                   PROVISIONER             RECLAIMPOLICY   VOLUMEBINDINGMODE      ALLOWVOLUMEEXPANSION   AGE
local-path (default)   rancher.io/local-path   Delete          WaitForFirstConsumer   false                  27h

# The PV and PVC are provisioned automatically

#kubectl get pv -o wide

NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM             STORAGECLASS   REASON   AGE    VOLUMEMODE
pvc-70c45ab8-3f42-4deb-aef6-f4f43432130d   1Gi        RWO            Delete           Bound    default/grafana   local-path              109m   Filesystem

#kubectl get pvc -o wide -n default

NAMESPACE   NAME      STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE    VOLUMEMODE
default     grafana   Bound    pvc-70c45ab8-3f42-4deb-aef6-f4f43432130d   1Gi        RWO            local-path     110m   Filesystem

# describe pv shows that the backing hostPath is under /root/rancher/k3s/storage/

k3s kubectl describe pv pvc-70c45ab8-3f42-4deb-aef6-f4f43432130d

Source:
    Type:          HostPath (bare host directory volume)
    Path:          /root/rancher/k3s/storage/pvc-70c45ab8-3f42-4deb-aef6-f4f43432130d
    HostPathType:  DirectoryOrCreate

Source: oschina

Link: https://my.oschina.net/beyondken/blog/4443839