Neural Machine Translation

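The goal of machine translation is to automatically translate text from one language into another. Given a text sequence in the source language, there is no single translation that is the best translation of that text.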

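This is because of the inherent ambiguity and flexibility of human language, which makes automatic machine translation a difficult challenge, perhaps one of the hardest in artificial intelligence.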

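The common approaches to machine translation are statistical machine translation (SMT) and neural machine translation (NMT). Here we focus on NMT.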

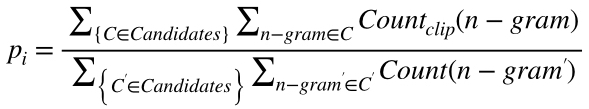

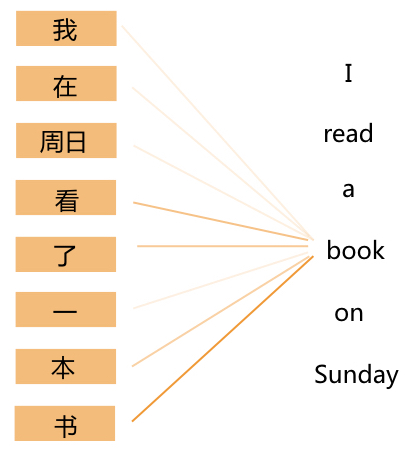

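From the figures above we can see that the main task of translation is to learn a mapping from source-side words to target-side words. It also involves reordering: for example, "read a book" is translated before "on Sunday".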

So how do we evaluate translation quality?

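- Human evaluation by professional translators (more accurate, but time-consuming and labor-intensive)

- Automatic evaluation (fast and convenient for iterating on models, but with known shortcomings)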

Experiment 1

# run prediction

!tar -zxf /home/aistudio/data/data13032/ddle_ai_course.t -C /home/aistudio

WORK_PATH = "/home/aistudio/paddle_ai_course"

# decompress pretrained models

!tar -zxf {WORK_PATH}/model_big.tgz -C {WORK_PATH}

!tar -zxf {WORK_PATH}/model_small.tgz -C {WORK_PATH}

!cd {WORK_PATH} && sh infer_small.sh model_small trans_res eval/test_enzh FILE

!cd {WORK_PATH}/eval && sh eval.sh {WORK_PATH}/trans_res/small_trans_res test_reference FILE

!cd {WORK_PATH}/eval && sed -r 's/(@@ )|(@@ ?$)//g' test_enzh > input.tok.txt && head -1 input.tok.txt && head -1 predict.tok.txt

memory_optimize is deprecated. Use CompiledProgram and Executor

W0920 13:58:19.488519 2083 device_context.cc:259] Please NOTE: device: 0, CUDA Capability: 70, Driver API Version: 9.2, Runtime API Version: 9.0

W0920 13:58:19.492918 2083 device_context.cc:267] device: 0, cuDNN Version: 7.3.

I0920 13:58:19.634228 2083 parallel_executor.cc:329] The number of CUDAPlace, which is used in ParallelExecutor, is 1. And the Program will be copied 1 copies

I0920 13:58:19.638108 2083 build_strategy.cc:340] SeqOnlyAllReduceOps:0, num_trainers:1

BLEU = 22.21, 57.5/29.8/16.6/8.6 (BP=1.000, ratio=1.037, hyp_len=2318, ref_len=2236)

last week the US Senate Finance Committee overwhelmingly approved a bill requiring the Treasury to identify a list of countries with " fundamentally misaligned " currency exchange rates . this opens the door for potential economic sanctions to be brought against Beijing .

上周 , 美国参议院 财务 委员会 以 压倒性 多数 批准 了 一项 法案 , 要求 财政部 确定 一个 “ 根本 错位 ” 汇率 国家 名单 , 这 开启 了 可能 对 北京 实施 经济制裁 的 大门 。
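The last command uses `sed` to strip the BPE continuation marker `@@ ` from the tokenized source file, so the first source sentence prints as whole words. A minimal Python sketch of the same substitution, assuming one sentence per line; the function name `remove_bpe` and the sample string are illustrative, not part of the course code:

```python
import re

# Same pattern as the sed command: "@@ " inside a line, or "@@" (optionally
# followed by one space) right before the end of the line.
BPE_MARKER = re.compile(r"(@@ )|(@@ ?$)")


def remove_bpe(line: str) -> str:
    """Join BPE subword pieces back into whole words."""
    return BPE_MARKER.sub("", line)


if __name__ == "__main__":
    with_markers = "fundament@@ ally mis@@ aligned curr@@ ency"
    print(remove_bpe(with_markers))  # fundamentally misaligned currency
```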

Automatic Evaluation

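In machine translation, the most common automatic evaluation metric is BLEU. Before describing how it is computed, we first introduce some basic concepts: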

- N-gram

- Brevity penalty

- The BLEU algorithm

1. N-gram

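An n-gram model is a statistical language model. It views a sentence as a sequence of n-grams (runs of n consecutive words) and uses the co-occurrence statistics of adjacent words to compute the probability of the sentence, and from that judges whether the sentence is fluent.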

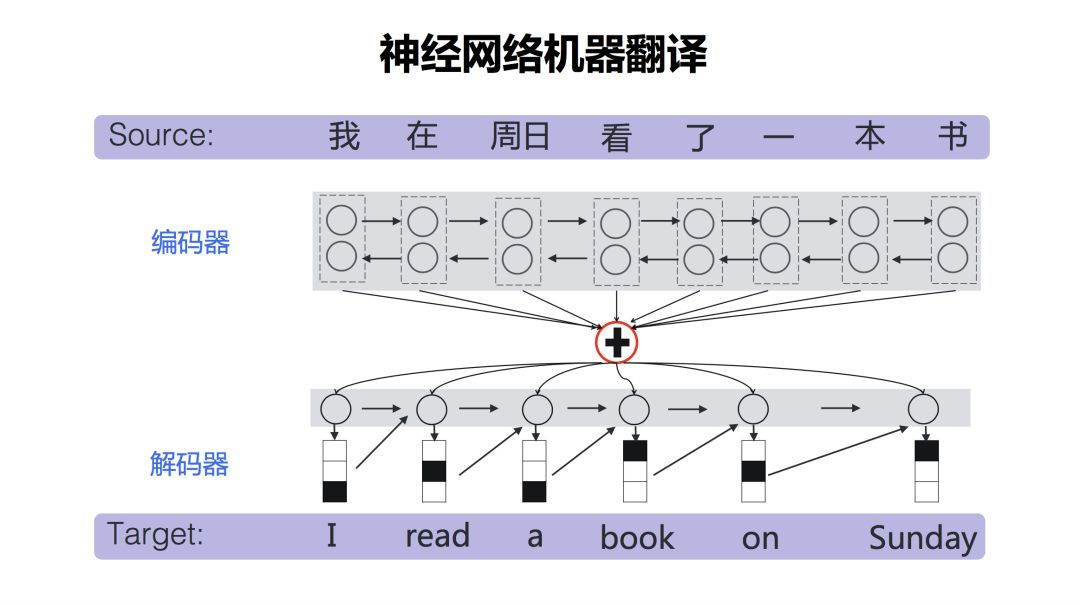
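To make "computing the probability of a sentence" concrete, here is a minimal sketch assuming a bigram model (n = 2) estimated by maximum likelihood with no smoothing. The two-sentence toy corpus and the `<s>`/`</s>` boundary markers are illustrative assumptions:

```python
from collections import Counter


def train_bigram(corpus):
    """Count contexts and bigrams over sentences padded with <s> ... </s>."""
    contexts, bigrams = Counter(), Counter()
    for sentence in corpus:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        contexts.update(tokens[:-1])                  # every token that has a successor
        bigrams.update(zip(tokens[:-1], tokens[1:]))  # adjacent word pairs
    return contexts, bigrams


def sentence_prob(sentence, contexts, bigrams):
    """P(sentence) = product of P(w_i | w_{i-1}), maximum likelihood, no smoothing."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    prob = 1.0
    for prev, cur in zip(tokens[:-1], tokens[1:]):
        if contexts[prev] == 0:
            return 0.0  # unseen context: MLE has no estimate, treat as impossible
        prob *= bigrams[(prev, cur)] / contexts[prev]
    return prob


if __name__ == "__main__":
    contexts, bigrams = train_bigram(["i read a book", "i read a paper"])
    print(sentence_prob("i read a book", contexts, bigrams))  # 0.5
    print(sentence_prob("a book read i", contexts, bigrams))  # 0.0
```

The scrambled sentence gets probability 0 because it contains word pairs never seen in the corpus, which is how the model signals that it is not fluent. Practical n-gram models add smoothing so that unseen pairs receive a small nonzero probability.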

BLEU also relies on n-gram matching: it computes the proportion of n-grams in the candidate translation that also appear in the reference translation.

For example:

1.1 1-gram

The machine translation contains 6 words, 5 of which appear in the reference translation, so its unigram match rate is 5/6.

1.2 2-gram

The bigram match rate is 3/5.

1.3 3-gram

The trigram match rate is 1/4; for 4-grams and longer it is 0.

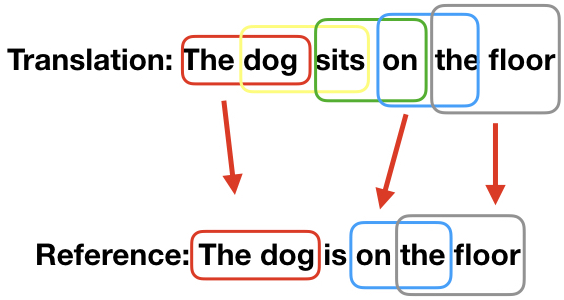

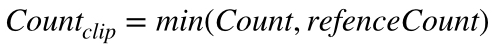
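The counting above can be sketched as follows. The sentence pair is an assumed example (the original sentences appear only in the figures), chosen because it yields the same ratios: 5/6, 3/5, 1/4 and 0. This version deliberately does not clip counts yet, which is exactly the flaw discussed next:

```python
from fractions import Fraction


def ngrams(tokens, n):
    """All runs of n consecutive tokens, in order."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def match_ratio(candidate, reference, n):
    """Fraction of candidate n-grams that occur somewhere in the reference (no clipping)."""
    cand = ngrams(candidate.split(), n)
    ref = set(ngrams(reference.split(), n))
    if not cand:
        return Fraction(0)
    hits = sum(1 for gram in cand if gram in ref)
    return Fraction(hits, len(cand))


if __name__ == "__main__":
    cand = "the cat sat on the mat"
    ref = "the cat is on the mat"
    for n in range(1, 5):
        print(n, match_ratio(cand, ref, n))  # 5/6, 3/5, 1/4, 0
```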

1.4 Correcting the computation

However, there are cases where plain n-gram matching fails to reflect whether a translation is correct, for example:

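If we compute the 1-gram match rate this way, every "the" is matched, giving 7/7, which is clearly wrong. BLEU therefore modifies the computation: the count of each n-gram is clipped to the smaller of its number of occurrences in the candidate translation and in the reference translation.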

So in the example above, the 1-gram match rate is 2/7.

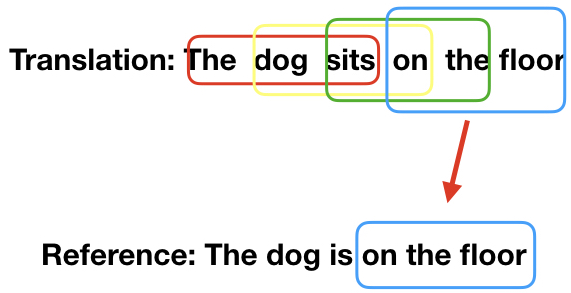

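The modified n-gram precision is therefore computed as follows (i denotes the n-gram length, C is the candidate translation, and g ranges over its i-grams):

$$p_i = \frac{\sum_{g \in C} \mathrm{Count}_{\mathrm{clip}}(g)}{\sum_{g \in C} \mathrm{Count}(g)}$$

$\mathrm{Count}_{\mathrm{clip}}(g)$ is the smaller of the number of times g occurs in the candidate translation and in the reference translation.

$\mathrm{Count}(g)$ is the number of times g occurs in the candidate translation.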
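A sketch of the clipped count for the single-reference case described here. The degenerate candidate "the the the the the the the" against "the cat is on the mat" is an assumed example in the spirit of the one in the figure. With several references, the original BLEU definition clips against the maximum count of the n-gram in any single reference instead:

```python
from collections import Counter
from fractions import Fraction


def ngram_counts(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate, reference, n):
    """Clipped n-gram precision p_n of one candidate against one reference."""
    cand = ngram_counts(candidate.split(), n)
    ref = ngram_counts(reference.split(), n)
    total = sum(cand.values())  # sum of Count(g)
    if total == 0:
        return Fraction(0)
    # sum of Count_clip(g) = min(count in candidate, count in reference)
    clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
    return Fraction(clipped, total)


if __name__ == "__main__":
    print(modified_precision("the the the the the the the", "the cat is on the mat", 1))  # 2/7
```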

2. Brevity penalty

Here is another example: the candidate translation is "The dog" and the reference translation is "The dog is on the floor." Computed with the formula above, the score would be 1.

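But this translation is incomplete and should receive a fairly low score, so we introduce the following brevity penalty (BP):

$$BP = \begin{cases} 1, & c > r \\ e^{\,1 - r/c}, & c \le r \end{cases}$$

Here c is the number of words in the machine translation and r is the number of words in the reference translation. The shorter c is relative to r, the smaller BP becomes, and the final score shrinks accordingly. A translation longer than the reference is not penalized (BP = 1), and c = r also gives BP = e^0 = 1.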
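The penalty is a one-liner; this sketch plugs in the "The dog" example, counting words only (the final period is ignored, an assumption). Returning 0 for an empty candidate is an added guard against division by zero:

```python
import math


def brevity_penalty(c, r):
    """BP = 1 if c > r, else exp(1 - r / c); c = candidate length, r = reference length."""
    if c == 0:
        return 0.0  # guard: an empty candidate gets no credit
    if c > r:
        return 1.0
    return math.exp(1 - r / c)


if __name__ == "__main__":
    # "The dog" (2 words) vs "The dog is on the floor." (6 words)
    print(round(brevity_penalty(2, 6), 4))  # 0.1353
    print(brevity_penalty(6, 6))            # 1.0
```

Because BP only penalizes candidates shorter than the reference, the evaluation logs in this article report BP=1.000 whenever ratio (hyp_len / ref_len) is above 1.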

3. The BLEU algorithm

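The final BLEU score is computed as:

$$\mathrm{BLEU} = BP \cdot \exp\left(\sum_{i=1}^{N} w_i \log p_i\right)$$

Here $w_i$ is the weight of the i-gram precision $p_i$. All n-gram orders are usually weighted equally, i.e. $w_i = 1/N$, typically with N = 4.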
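Putting the pieces together, here is a sentence-level sketch of the formula with one reference, uniform weights $w_i = 1/N$ and no smoothing (all simplifying assumptions). The scores printed by `eval.sh` in the experiments report total hyp_len and ref_len for the whole set, so they appear to be corpus-level: clipped counts and lengths are summed over all sentences before the precisions and BP are computed, which is not the same as averaging per-sentence scores.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate, reference, max_n=4):
    """BP * exp(sum_i w_i * log p_i) with w_i = 1 / max_n and a single reference."""
    cand_tokens, ref_tokens = candidate.split(), reference.split()
    if not cand_tokens:
        return 0.0
    log_sum = 0.0
    for n in range(1, max_n + 1):
        cand = ngram_counts(cand_tokens, n)
        ref = ngram_counts(ref_tokens, n)
        total = sum(cand.values())
        clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
        if clipped == 0:
            return 0.0  # log(0) is undefined; unsmoothed BLEU is 0 if any p_i is 0
        log_sum += (1.0 / max_n) * math.log(clipped / total)
    c, r = len(cand_tokens), len(ref_tokens)
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * math.exp(log_sum)


if __name__ == "__main__":
    cand, ref = "the cat sat on the mat", "the cat is on the mat"
    print(round(sentence_bleu(cand, ref, max_n=3), 4))  # 0.5  (cube root of 5/6 * 3/5 * 1/4)
    print(sentence_bleu(cand, ref))                      # 0.0  (no matching 4-gram)
```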

Experiment 2

# train the model

!cd {WORK_PATH} && sh train_small.sh

+ export FLAGS_eager_delete_tensor_gb=0.0

+ export FLAGS_fraction_of_gpu_memory_to_use=0.98

+ CUDA_VISIBLE_DEVICES=0 python -u src/train.py --src_vocab_fpath ./data/vocab.source --trg_vocab_fpath ./data/vocab.target --train_file_pattern ./data/translate-train-000* --token_delimiter --use_token_batch True --batch_size 4096 --sort_type pool --pool_size 200000 --fetch_steps 50 save_freq 50 n_head 8 d_model 256 d_inner_hid 1024 n_layer 3 prepostprocess_dropout 0.1 ckpt_path ./model_small

14

['save_freq', '50', 'n_head', '8', 'd_model', '256', 'd_inner_hid', '1024', 'n_layer', '3', 'prepostprocess_dropout', '0.1', 'ckpt_path', './model_small']

10

[2019-09-20 13:58:34,031 INFO train.py:656] Namespace(batch_size=4096, device='GPU', enable_ce=False, fetch_steps=50, local=True, opts=['save_freq', '50', 'n_head', '8', 'd_model', '256', 'd_inner_hid', '1024', 'n_layer', '3', 'prepostprocess_dropout', '0.1', 'ckpt_path', './model_small'], pool_size=200000, shuffle=True, shuffle_batch=True, sort_type='pool', special_token=['0', '<EOS>', 'UNK'], src_vocab_fpath='./data/vocab.source', sync=True, token_delimiter=' ', train_file_pattern='./data/translate-train-000*', trg_vocab_fpath='./data/vocab.target', update_method='pserver', use_mem_opt=True, use_py_reader=False, use_token_batch=True, val_file_pattern=None)

[2019-09-20 13:58:34,158 INFO train.py:707] before adam

memory_optimize is deprecated. Use CompiledProgram and Executor

[2019-09-20 13:58:40,254 INFO train.py:725] local start_up:

W0920 13:58:41.012140 2131 device_context.cc:259] Please NOTE: device: 0, CUDA Capability: 70, Driver API Version: 9.2, Runtime API Version: 9.0

W0920 13:58:41.016459 2131 device_context.cc:267] device: 0, cuDNN Version: 7.3.

[2019-09-20 13:58:41,043 INFO train.py:505] load checkpoint from ./model_small

[2019-09-20 13:58:41,165 INFO train.py:512] begin reader

[2019-09-20 13:58:46,721 INFO train.py:539] begin executor

I0920 13:58:46.764124 2131 parallel_executor.cc:329] The number of CUDAPlace, which is used in ParallelExecutor, is 1. And the Program will be copied 1 copies

I0920 13:58:46.832293 2131 build_strategy.cc:340] SeqOnlyAllReduceOps:0, num_trainers:1

[2019-09-20 13:58:46,870 INFO train.py:561] begin train

[2019-09-20 13:58:47,462 INFO train.py:594] step_idx: 0, epoch: 0, batch: 0, avg loss: 3.179328, normalized loss: 1.775048

[2019-09-20 13:58:52,159 INFO train.py:602] step_idx: 50, epoch: 0, batch: 50, avg loss: 3.173360, normalized loss: 1.769080, speed: 10.64 step/s

[2019-09-20 13:58:57,980 INFO train.py:602] step_idx: 100, epoch: 0, batch: 100, avg loss: 3.126150, normalized loss: 1.721869, speed: 8.59 step/s

[2019-09-20 13:59:06,231 INFO train.py:602] step_idx: 150, epoch: 0, batch: 150, avg loss: 3.107801, normalized loss: 1.703520, speed: 6.06 step/s

[2019-09-20 13:59:14,703 INFO train.py:602] step_idx: 200, epoch: 0, batch: 200, avg loss: 3.102083, normalized loss: 1.697803, speed: 5.90 step/s

[2019-09-20 13:59:23,036 INFO train.py:602] step_idx: 250, epoch: 0, batch: 250, avg loss: 3.259453, normalized loss: 1.855173, speed: 6.00 step/s

^C

Traceback (most recent call last):

File "src/train.py", line 807, in <module>

train(args)

File "src/train.py", line 727, in train

token_num, predict, pyreader)

File "src/train.py", line 579, in train_loop

feed=feed_dict_list)

File "/opt/conda/envs/python27-paddle120-env/lib/python2.7/site-packages/paddle/fluid/parallel_executor.py", line 280, in run

return_numpy=return_numpy)

File "/opt/conda/envs/python27-paddle120-env/lib/python2.7/site-packages/paddle/fluid/executor.py", line 666, in run

return_numpy=return_numpy)

File "/opt/conda/envs/python27-paddle120-env/lib/python2.7/site-packages/paddle/fluid/executor.py", line 521, in _run_parallel

tmp.set(tensor, program._places[i])

KeyboardInterrupt

# select a model using the dev set

!cd {WORK_PATH} && rm trans_res/*

!cd {WORK_PATH} && sh infer_small.sh trained_models trans_res eval/dev_enzh DIR

!cd {WORK_PATH}/eval && sh eval.sh ../trans_res dev_reference DIR

memory_optimize is deprecated. Use CompiledProgram and Executor

W0920 13:59:38.703399 2178 device_context.cc:259] Please NOTE: device: 0, CUDA Capability: 70, Driver API Version: 9.2, Runtime API Version: 9.0

W0920 13:59:38.708161 2178 device_context.cc:267] device: 0, cuDNN Version: 7.3.

I0920 13:59:38.845857 2178 parallel_executor.cc:329] The number of CUDAPlace, which is used in ParallelExecutor, is 1. And the Program will be copied 1 copies

I0920 13:59:38.849900 2178 build_strategy.cc:340] SeqOnlyAllReduceOps:0, num_trainers:1

memory_optimize is deprecated. Use CompiledProgram and Executor

W0920 13:59:45.884814 2216 device_context.cc:259] Please NOTE: device: 0, CUDA Capability: 70, Driver API Version: 9.2, Runtime API Version: 9.0

W0920 13:59:45.889055 2216 device_context.cc:267] device: 0, cuDNN Version: 7.3.

I0920 13:59:46.049649 2216 parallel_executor.cc:329] The number of CUDAPlace, which is used in ParallelExecutor, is 1. And the Program will be copied 1 copies

I0920 13:59:46.053624 2216 build_strategy.cc:340] SeqOnlyAllReduceOps:0, num_trainers:1

memory_optimize is deprecated. Use CompiledProgram and Executor

W0920 13:59:53.189880 2254 device_context.cc:259] Please NOTE: device: 0, CUDA Capability: 70, Driver API Version: 9.2, Runtime API Version: 9.0

W0920 13:59:53.194249 2254 device_context.cc:267] device: 0, cuDNN Version: 7.3.

I0920 13:59:53.344911 2254 parallel_executor.cc:329] The number of CUDAPlace, which is used in ParallelExecutor, is 1. And the Program will be copied 1 copies

I0920 13:59:53.348949 2254 build_strategy.cc:340] SeqOnlyAllReduceOps:0, num_trainers:1

memory_optimize is deprecated. Use CompiledProgram and Executor

W0920 14:00:00.514881 2292 device_context.cc:259] Please NOTE: device: 0, CUDA Capability: 70, Driver API Version: 9.2, Runtime API Version: 9.0

W0920 14:00:00.518779 2292 device_context.cc:267] device: 0, cuDNN Version: 7.3.

I0920 14:00:00.664440 2292 parallel_executor.cc:329] The number of CUDAPlace, which is used in ParallelExecutor, is 1. And the Program will be copied 1 copies

I0920 14:00:00.668455 2292 build_strategy.cc:340] SeqOnlyAllReduceOps:0, num_trainers:1

memory_optimize is deprecated. Use CompiledProgram and Executor

W0920 14:00:07.745339 2330 device_context.cc:259] Please NOTE: device: 0, CUDA Capability: 70, Driver API Version: 9.2, Runtime API Version: 9.0

W0920 14:00:07.749605 2330 device_context.cc:267] device: 0, cuDNN Version: 7.3.

I0920 14:00:07.892396 2330 parallel_executor.cc:329] The number of CUDAPlace, which is used in ParallelExecutor, is 1. And the Program will be copied 1 copies

I0920 14:00:07.896674 2330 build_strategy.cc:340] SeqOnlyAllReduceOps:0, num_trainers:1

iter_100.infer.model BLEU = 11.22, 43.9/16.2/6.9/3.2 (BP=1.000, ratio=1.057, hyp_len=2436, ref_len=2305)

iter_150.infer.model BLEU = 11.54, 44.0/16.5/7.2/3.4 (BP=1.000, ratio=1.057, hyp_len=2437, ref_len=2305)

iter_200.infer.model BLEU = 10.84, 43.8/16.0/6.6/3.0 (BP=1.000, ratio=1.050, hyp_len=2420, ref_len=2305)

iter_250.infer.model BLEU = 11.23, 44.0/16.3/6.9/3.2 (BP=1.000, ratio=1.051, hyp_len=2422, ref_len=2305)

iter_50.infer.model BLEU = 11.56, 44.0/16.4/7.1/3.5 (BP=1.000, ratio=1.054, hyp_len=2430, ref_len=2305)
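Selecting a model on the dev set comes down to taking the checkpoint with the highest dev BLEU; in this run that is iter_50.infer.model (11.56), the checkpoint used for the test-set prediction that follows. A small helper for doing this from the printed output, assuming `eval.sh` always prints lines of the form `<checkpoint> BLEU = <score>, ...` as in the log above; `best_checkpoint` is a hypothetical name, not part of the course scripts:

```python
import re

# Lines look like: "iter_50.infer.model BLEU = 11.56, 44.0/16.4/7.1/3.5 (BP=1.000, ...)"
SCORE_RE = re.compile(r"(\S+\.infer\.model) BLEU = ([\d.]+)")


def best_checkpoint(eval_output):
    """Return (checkpoint_name, bleu) with the highest BLEU found in eval.sh output."""
    scores = {name: float(bleu) for name, bleu in SCORE_RE.findall(eval_output)}
    if not scores:
        raise ValueError("no '<checkpoint> BLEU = <score>' lines found")
    best = max(scores, key=scores.get)
    return best, scores[best]


if __name__ == "__main__":
    dev_log = """
iter_100.infer.model BLEU = 11.22, 43.9/16.2/6.9/3.2 (BP=1.000, ratio=1.057, hyp_len=2436, ref_len=2305)
iter_150.infer.model BLEU = 11.54, 44.0/16.5/7.2/3.4 (BP=1.000, ratio=1.057, hyp_len=2437, ref_len=2305)
iter_200.infer.model BLEU = 10.84, 43.8/16.0/6.6/3.0 (BP=1.000, ratio=1.050, hyp_len=2420, ref_len=2305)
iter_250.infer.model BLEU = 11.23, 44.0/16.3/6.9/3.2 (BP=1.000, ratio=1.051, hyp_len=2422, ref_len=2305)
iter_50.infer.model BLEU = 11.56, 44.0/16.4/7.1/3.5 (BP=1.000, ratio=1.054, hyp_len=2430, ref_len=2305)
"""
    print(best_checkpoint(dev_log))  # ('iter_50.infer.model', 11.56)
```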

# run the training code

# run prediction with the selected model and check its performance on the test set

!cd {WORK_PATH} && rm trans_res/*

!cd {WORK_PATH} && sh infer_small.sh trained_models/iter_50.infer.model trans_res eval/test_enzh FILE

!cd {WORK_PATH}/eval && sh eval.sh {WORK_PATH}/trans_res/small_trans_res test_reference FILE

!cd {WORK_PATH}/eval && sed -r 's/(@@ )|(@@ ?$)//g' test_enzh > input.tok.txt && head -1 input.tok.txt && head -1 predict.tok.txt

memory_optimize is deprecated. Use CompiledProgram and Executor

W0920 14:00:24.761777 2385 device_context.cc:259] Please NOTE: device: 0, CUDA Capability: 70, Driver API Version: 9.2, Runtime API Version: 9.0

W0920 14:00:24.765815 2385 device_context.cc:267] device: 0, cuDNN Version: 7.3.

I0920 14:00:24.912528 2385 parallel_executor.cc:329] The number of CUDAPlace, which is used in ParallelExecutor, is 1. And the Program will be copied 1 copies

I0920 14:00:24.916476 2385 build_strategy.cc:340] SeqOnlyAllReduceOps:0, num_trainers:1

BLEU = 22.04, 57.3/29.6/16.4/8.5 (BP=1.000, ratio=1.038, hyp_len=2320, ref_len=2236)

last week the US Senate Finance Committee overwhelmingly approved a bill requiring the Treasury to identify a list of countries with " fundamentally misaligned " currency exchange rates . this opens the door for potential economic sanctions to be brought against Beijing .

上周 , 美国参议院 财务 委员会 以 压倒性 多数 批准 了 一项 法案 , 要求 财政部 确定 一个 “ 根本 错位 ” 汇率 国家 名单 , 这 开启 了 可能 对 北京 实施 经济制裁 的 大门 。

Understanding the Transformer

In this section we introduce the overall workflow of the Transformer and the self-attention mechanism.

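The architecture consists of two parts: the encoder, which turns the source-language input into semantic vector representations, and the decoder, which generates the target-side sentence. As the figures show, the Transformer is built mainly from Multi-Head Attention and MLP (feed-forward) blocks. The "Nx" in the figure means the block is repeated N times, i.e. N layers are stacked.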

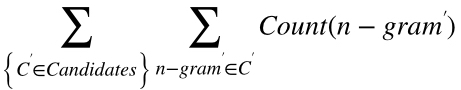

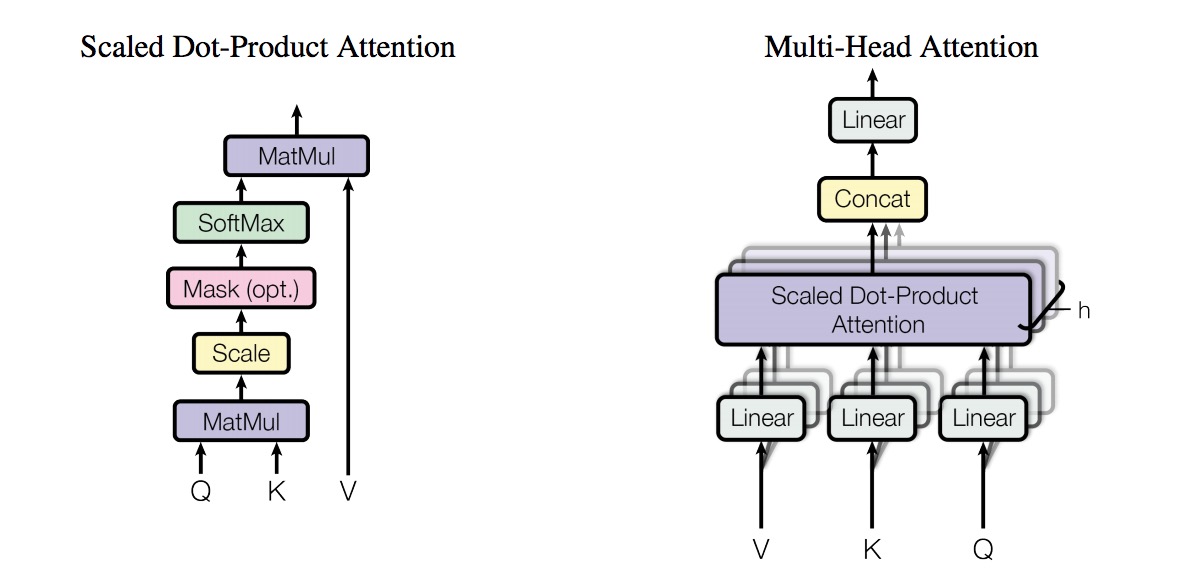

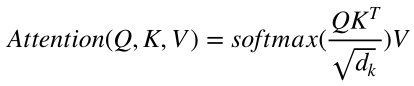

Let's look more closely at how Multi-Head Attention works:

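Multi-Head Attention splits the input Q, K, and V into several channels (heads), computes Scaled Dot-Product Attention on each channel separately, and finally concatenates the results. Scaled Dot-Product Attention uses Q and K to compute weights over the values in V. In translation, this means that when generating the T-th target word, the model decides which source words to focus on in order to produce it.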
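A pure-Python sketch of the two steps just described, computing softmax(QK^T / sqrt(d_k)) V per head. Assumptions: no batching or masking, and the learned linear projections that the Transformer applies to Q, K and V before splitting (and to the concatenated output afterwards) are left out, so only the split/attend/concat structure remains. Splitting requires d_model to be divisible by the number of heads, which holds for both configurations used in this article (256 / 8 and 1024 / 16):

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q, k, v):
    """softmax(Q K^T / sqrt(d_k)) V over lists of row vectors; returns (output, weights)."""
    d_k = len(q[0])
    outputs, weights = [], []
    for q_row in q:
        scores = [sum(a * b for a, b in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in k]
        w = softmax(scores)  # how strongly this query attends to each key position
        weights.append(w)
        outputs.append([sum(w[j] * v[j][c] for j in range(len(v))) for c in range(len(v[0]))])
    return outputs, weights


def split_heads(x, n_head):
    """[T][d_model] -> [n_head][T][d_model // n_head] by slicing each row into chunks."""
    d_head = len(x[0]) // n_head
    return [[row[h * d_head:(h + 1) * d_head] for row in x] for h in range(n_head)]


def multi_head_attention(q, k, v, n_head):
    """Split into heads, attend per head, concatenate (learned projections omitted)."""
    head_outputs = [
        scaled_dot_product_attention(qh, kh, vh)[0]
        for qh, kh, vh in zip(split_heads(q, n_head), split_heads(k, n_head), split_heads(v, n_head))
    ]
    # Concatenate the per-head output rows back to d_model columns.
    return [sum((head[t] for head in head_outputs), []) for t in range(len(q))]


if __name__ == "__main__":
    # One query, two identical keys: weights are uniform, so the output is the mean of V.
    out, w = scaled_dot_product_attention([[1.0, 0.0]], [[1.0, 1.0], [1.0, 1.0]], [[0.0, 2.0], [4.0, 6.0]])
    print(w, out)  # [[0.5, 0.5]] [[2.0, 4.0]]
```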

BeamSearch

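During decoding, the search space is enormous (it grows exponentially with output length), so pruning is generally used to shrink it. The most common algorithm is beam search; the figure below shows an example with a beam size of 2.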

In summary, a Transformer combined with beam search is all that is needed for inference on sequence generation tasks.
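A toy sketch of beam search with beam size 2. The probability table `TOY_MODEL` is entirely made up and stands in for the Transformer decoder. Scores are summed log-probabilities with no length normalization, and a hypothesis that emits `</s>` leaves the beam (a simplification of what production decoders do). With these numbers, greedy search (beam size 1) commits to "I" and ends with probability 0.24, while beam size 2 keeps "we" alive and finds "we read" with probability 0.36:

```python
import math

# Hypothetical next-token probabilities given the prefix generated so far.
TOY_MODEL = {
    (): {"I": 0.6, "we": 0.4},
    ("I",): {"read": 0.4, "like": 0.35, "</s>": 0.25},
    ("we",): {"read": 0.9, "</s>": 0.1},
    ("I", "read"): {"</s>": 1.0},
    ("I", "like"): {"</s>": 1.0},
    ("we", "read"): {"</s>": 1.0},
}


def toy_next_log_probs(prefix):
    """Log-probabilities of each next token; unknown prefixes just end the sentence."""
    return {tok: math.log(p) for tok, p in TOY_MODEL.get(prefix, {"</s>": 1.0}).items()}


def beam_search(next_log_probs, beam_size=2, max_len=10, eos="</s>"):
    """Keep the beam_size best partial hypotheses (by total log-probability) at each step."""
    beams = [((), 0.0)]
    finished = []
    for _ in range(max_len):
        candidates = [
            (prefix + (tok,), score + lp)
            for prefix, score in beams
            for tok, lp in next_log_probs(prefix).items()
        ]
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for prefix, score in candidates[:beam_size]:  # prune to the top beam_size
            if prefix[-1] == eos:
                finished.append((prefix, score))
            else:
                beams.append((prefix, score))
        if not beams:
            break
    finished.extend(beams)  # unfinished hypotheses that reached max_len
    return max(finished, key=lambda c: c[1])


if __name__ == "__main__":
    for k in (1, 2):
        tokens, score = beam_search(toy_next_log_probs, beam_size=k)
        print(k, " ".join(tokens), round(math.exp(score), 2))
```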

Deeper/Bigger is Better

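With the Transformer architecture, wider (larger hidden_size) and deeper (more layers) models generally bring a significant improvement. Next, we simply increase the hidden_size, layers and heads parameters and observe how BLEU changes on the English-to-Chinese translation task.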
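To get a feel for how much "wider" means, here is a rough per-layer parameter count for the two configurations passed to train_small.sh and train.sh in the experiments (d_model 256 / d_inner_hid 1024 versus d_model 1024 / d_inner_hid 4096). It assumes a standard encoder layer with biases on every linear layer and two LayerNorms, and ignores embeddings; the actual src/model.py may differ in details such as which projections carry biases. Note that n_head does not appear: the heads split d_model rather than adding parameters.

```python
def encoder_layer_params(d_model, d_inner_hid):
    """Approximate parameter count of one Transformer encoder layer (biases included)."""
    attention = 4 * (d_model * d_model + d_model)  # Q, K, V and output projections
    ffn = (d_model * d_inner_hid + d_inner_hid     # first linear layer
           + d_inner_hid * d_model + d_model)      # second linear layer
    layer_norms = 2 * (2 * d_model)                # two LayerNorms, scale and shift each
    return attention + ffn + layer_norms


if __name__ == "__main__":
    small = encoder_layer_params(256, 1024)   # train_small.sh: d_model 256, d_inner_hid 1024
    big = encoder_layer_params(1024, 4096)    # train.sh: d_model 1024, d_inner_hid 4096
    print(small, big, round(big / small, 1))  # 789760 12596224 15.9
```

Quadrupling the width multiplies the per-layer parameter count by roughly 16, because the dominant terms scale with d_model squared.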

Go Further

- BPE (a newer word-segmentation method that largely alleviates the out-of-vocabulary (OOV) problem):

https://arxiv.org/abs/1508.07909

github: https://github.com/rsennrich/subword-nmt

- T2T model paper: https://papers.nips.cc/paper/7181-attention-is-all-you-need.pdf

- Change the model structure: src/model.py -> def dense_encoder
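The core of BPE, as described in the paper linked above, is to start from characters and repeatedly merge the most frequent adjacent symbol pair. A compact sketch of that merge-learning loop, modeled on the example in the paper; the toy word frequencies are illustrative, and ties are broken by first occurrence (an assumption of this sketch). subword-nmt then applies the learned merges to text and marks word-internal splits with `@@ `, which is the marker the `sed` commands in the experiments strip off.

```python
import re
from collections import defaultdict


def get_stats(vocab):
    """Count adjacent symbol pairs, weighted by word frequency."""
    pairs = defaultdict(int)
    for word, freq in vocab.items():
        symbols = word.split()
        for left, right in zip(symbols, symbols[1:]):
            pairs[left, right] += freq
    return pairs


def merge_vocab(pair, vocab):
    """Replace every standalone occurrence of 'left right' with 'leftright'."""
    pattern = re.compile(r"(?<!\S)" + re.escape(" ".join(pair)) + r"(?!\S)")
    merged = "".join(pair)
    return {pattern.sub(lambda _m: merged, word): freq for word, freq in vocab.items()}


def learn_bpe(vocab, num_merges):
    """Return the list of merges, most frequent pair first at each step."""
    merges = []
    for _ in range(num_merges):
        pairs = get_stats(vocab)
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)  # first pair wins ties
        vocab = merge_vocab(best, vocab)
        merges.append(best)
    return merges


if __name__ == "__main__":
    # Words are space-separated characters; </w> marks the end of a word.
    vocab = {"l o w </w>": 5, "l o w e r </w>": 2, "n e w e s t </w>": 6, "w i d e s t </w>": 3}
    print(learn_bpe(vocab, 3))  # [('e', 's'), ('es', 't'), ('est', '</w>')]
```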

Experiment 3

# train a deeper and wider model

!cd {WORK_PATH} && rm -rf trained_*

!cd {WORK_PATH} && sh train.sh

+ export FLAGS_eager_delete_tensor_gb=0.0

+ export FLAGS_fraction_of_gpu_memory_to_use=0.98

+ CUDA_VISIBLE_DEVICES=0 python -u src/train.py --src_vocab_fpath ./data/vocab.source --trg_vocab_fpath ./data/vocab.target --train_file_pattern ./data/translate-train-000* --token_delimiter --use_token_batch True --batch_size 4096 --sort_type pool --pool_size 200000 --fetch_steps 10 save_freq 20 n_head 16 d_model 1024 d_inner_hid 4096 prepostprocess_dropout 0.3 ckpt_path ./model_big

12

['save_freq', '20', 'n_head', '16', 'd_model', '1024', 'd_inner_hid', '4096', 'prepostprocess_dropout', '0.3', 'ckpt_path', './model_big']

10

[2019-09-20 14:00:42,344 INFO train.py:656] Namespace(batch_size=4096, device='GPU', enable_ce=False, fetch_steps=10, local=True, opts=['save_freq', '20', 'n_head', '16', 'd_model', '1024', 'd_inner_hid', '4096', 'prepostprocess_dropout', '0.3', 'ckpt_path', './model_big'], pool_size=200000, shuffle=True, shuffle_batch=True, sort_type='pool', special_token=['0', '<EOS>', 'UNK'], src_vocab_fpath='./data/vocab.source', sync=True, token_delimiter=' ', train_file_pattern='./data/translate-train-000*', trg_vocab_fpath='./data/vocab.target', update_method='pserver', use_mem_opt=True, use_py_reader=False, use_token_batch=True, val_file_pattern=None)

[2019-09-20 14:00:42,665 INFO train.py:707] before adam

memory_optimize is deprecated. Use CompiledProgram and Executor

[2019-09-20 14:01:04,859 INFO train.py:725] local start_up:

W0920 14:01:05.594372 2435 device_context.cc:259] Please NOTE: device: 0, CUDA Capability: 70, Driver API Version: 9.2, Runtime API Version: 9.0

W0920 14:01:05.598951 2435 device_context.cc:267] device: 0, cuDNN Version: 7.3.

[2019-09-20 14:01:05,667 INFO train.py:505] load checkpoint from ./model_big

[2019-09-20 14:01:06,284 INFO train.py:512] begin reader

[2019-09-20 14:01:11,938 INFO train.py:539] begin executor

I0920 14:01:12.019623 2435 parallel_executor.cc:329] The number of CUDAPlace, which is used in ParallelExecutor, is 1. And the Program will be copied 1 copies

I0920 14:01:12.219225 2435 build_strategy.cc:340] SeqOnlyAllReduceOps:0, num_trainers:1

[2019-09-20 14:01:12,312 INFO train.py:561] begin train

[2019-09-20 14:01:13,236 INFO train.py:594] step_idx: 0, epoch: 0, batch: 0, avg loss: 2.630473, normalized loss: 1.226193

[2019-09-20 14:01:19,283 INFO train.py:602] step_idx: 10, epoch: 0, batch: 10, avg loss: 2.690705, normalized loss: 1.286424, speed: 1.65 step/s

[2019-09-20 14:01:25,591 INFO train.py:602] step_idx: 20, epoch: 0, batch: 20, avg loss: 2.393026, normalized loss: 0.988745, speed: 1.59 step/s

[2019-09-20 14:01:39,230 INFO train.py:602] step_idx: 30, epoch: 0, batch: 30, avg loss: 2.518420, normalized loss: 1.114139, speed: 0.73 step/s

[2019-09-20 14:01:45,339 INFO train.py:602] step_idx: 40, epoch: 0, batch: 40, avg loss: 2.413766, normalized loss: 1.009486, speed: 1.64 step/s

[2019-09-20 14:02:40,083 INFO train.py:602] step_idx: 50, epoch: 0, batch: 50, avg loss: 2.590959, normalized loss: 1.186678, speed: 0.18 step/s

^C

Traceback (most recent call last):

File "src/train.py", line 807, in <module>

train(args)

File "src/train.py", line 727, in train

token_num, predict, pyreader)

File "src/train.py", line 575, in train_loop

init_flag, dev_count)

File "src/train.py", line 375, in prepare_feed_dict_list

ModelHyperParams.d_model)

File "src/train.py", line 250, in prepare_batch_input

[inst[0] for inst in insts], src_pad_idx, n_head, is_target=False)

File "src/train.py", line 233, in pad_batch_data

return_list += [slf_attn_bias_data.astype("float32")]

KeyboardInterrupt

# select a model using the dev set, then verify it on the test set

!cd {WORK_PATH} && rm trans_res/*

!cd {WORK_PATH} && sh infer.sh trained_models trans_res eval/dev_enzh DIR

!cd {WORK_PATH}/eval && sh eval.sh ../trans_res dev_reference DIR

memory_optimize is deprecated. Use CompiledProgram and Executor

W0920 14:02:52.170383 2482 device_context.cc:259] Please NOTE: device: 0, CUDA Capability: 70, Driver API Version: 9.2, Runtime API Version: 9.0

W0920 14:02:52.174947 2482 device_context.cc:267] device: 0, cuDNN Version: 7.3.

I0920 14:02:52.953883 2482 parallel_executor.cc:329] The number of CUDAPlace, which is used in ParallelExecutor, is 1. And the Program will be copied 1 copies

I0920 14:02:52.961632 2482 build_strategy.cc:340] SeqOnlyAllReduceOps:0, num_trainers:1

memory_optimize is deprecated. Use CompiledProgram and Executor

W0920 14:03:05.511006 2520 device_context.cc:259] Please NOTE: device: 0, CUDA Capability: 70, Driver API Version: 9.2, Runtime API Version: 9.0

W0920 14:03:05.514842 2520 device_context.cc:267] device: 0, cuDNN Version: 7.3.

I0920 14:03:06.248040 2520 parallel_executor.cc:329] The number of CUDAPlace, which is used in ParallelExecutor, is 1. And the Program will be copied 1 copies

I0920 14:03:06.256811 2520 build_strategy.cc:340] SeqOnlyAllReduceOps:0, num_trainers:1

iter_20.infer.model BLEU = 14.35, 47.1/19.3/9.4/5.0 (BP=1.000, ratio=1.046, hyp_len=2412, ref_len=2305)

iter_40.infer.model BLEU = 14.51, 47.1/19.5/9.5/5.1 (BP=1.000, ratio=1.046, hyp_len=2411, ref_len=2305)

# run prediction with the selected model and check its performance on the test set

!cd {WORK_PATH} && rm trans_res/*

!cd {WORK_PATH} && sh infer.sh trained_models/iter_40.infer.model trans_res eval/test_enzh FILE

!cd {WORK_PATH}/eval && sh eval.sh {WORK_PATH}/trans_res/big_trans_res test_reference FILE

!cd {WORK_PATH}/eval && sed -r 's/(@@ )|(@@ ?$)//g' test_enzh > input.tok.txt && head -1 input.tok.txt && head -1 predict.tok.txt

memory_optimize is deprecated. Use CompiledProgram and Executor

W0920 14:05:38.702764 2713 device_context.cc:259] Please NOTE: device: 0, CUDA Capability: 70, Driver API Version: 9.2, Runtime API Version: 9.0

W0920 14:05:38.706779 2713 device_context.cc:267] device: 0, cuDNN Version: 7.3.

I0920 14:05:39.428685 2713 parallel_executor.cc:329] The number of CUDAPlace, which is used in ParallelExecutor, is 1. And the Program will be copied 1 copies

I0920 14:05:39.436707 2713 build_strategy.cc:340] SeqOnlyAllReduceOps:0, num_trainers:1

Results

|  | Base Model | Big Model |
|---|---|---|
| BLEU | 22.21 | 30.39 |

As the table shows, the wider and deeper model improves BLEU substantially (from 22.21 to 30.39).

Follow this link to try the project hands-on in AI Studio with one click: https://aistudio.baidu.com/aistudio/projectdetail/120044

Download and installation commands

## Install command (CPU version)

pip install -f https://paddlepaddle.org.cn/pip/oschina/cpu paddlepaddle

## Install command (GPU version)

pip install -f https://paddlepaddle.org.cn/pip/oschina/gpu paddlepaddle-gpu

>> Visit the PaddlePaddle official website to learn more.

Source: oschina

Link: https://my.oschina.net/u/4067628/blog/3434345