Write the script:

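```python
import os
import threading
import time

import requests
from bs4 import BeautifulSoup

headers = {
    'user-agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_3) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/80.0.3987.132 Safari/537.36',
}


def do_request(url, tries=3):
    '''
    GET a URL, retrying on request errors.

    :param url: URL to fetch
    :param tries: maximum number of attempts
    :return: the Response, or False if every attempt failed
    '''
    for i in range(tries):
        try:
            res = requests.get(url, headers=headers, timeout=5)
            res.raise_for_status()  # treat 4xx/5xx responses as failures too
            return res
        except requests.exceptions.RequestException as e:
            print(f'request error (attempt {i + 1}/{tries}):', e)
    return False


def download_local(url, img_file):
    '''
    Download an image to the local disk.

    :param url: remote image URL
    :param img_file: local file path
    '''
    res = do_request(url)
    if res is False:
        print('request failed:', url)
        return
    # "with" closes the file even if the write raises
    with open(img_file, 'wb') as img:
        img.write(res.content)


def save_img(url, name, page):
    '''
    Save an image.

    :param url: remote image URL
    :param name: image name
    :param page: page number
    '''
    print(url, name)
    # Create the directory (and ./imgs itself) if it is missing; exist_ok=True
    # avoids a race when several threads of the same page get here at once
    img_path = f'./imgs/{page}/'
    os.makedirs(img_path, mode=0o755, exist_ok=True)
    img_ext = os.path.splitext(url)[1]  # extension including the dot, e.g. '.jpg'
    name = name.replace('/', '_')  # a '/' in the alt text would be read as a directory separator
    img_file = f'{img_path}{name}{img_ext}'
    print(img_file)
    # The file already exists, so there is no need to download it again
    if os.path.isfile(img_file):
        print(f'img already exists: {img_file}')
        return True
    download_local(url, img_file)


def crawl_images(page):
    '''
    Crawl the images on one list page.

    :param page: page number
    '''
    url = f'https://www.2717.com/ent/meinvtupian/list_11_{page}.html'
    print(url)
    response = requests.get(url, headers=headers, timeout=10)
    response.encoding = response.apparent_encoding  # avoid garbled text (the default is ISO-8859-1)
    # print(response.text)
    soup = BeautifulSoup(response.text, 'lxml')
    box = soup.find('div', class_='MeinvTuPianBox')
    if box is None:  # the page does not exist or its layout changed
        print(f'no image list found on page {page}')
        return
    lists = box.find_all('a', class_='MMPic')
    threads = []
    for item in lists:
        print('###' * 20)
        img = item.find('img')
        if img is None:
            continue
        img_url = img['src']
        img_name = img['alt']
        # save_img(img_url, img_name, page)
        # One thread per image to speed up crawling
        t = threading.Thread(target=save_img, args=[img_url, img_name, page])
        t.start()
        threads.append(t)
    # Wait for this page's downloads so the final "Time usage" includes them
    for t in threads:
        t.join()


def main():
    # Pages 251 down to 2 (the stop value 1 of range() is excluded)
    # for page in [34]:
    for page in range(251, 1, -1):
        print(f'current page: {page}')
        crawl_images(page)
        time.sleep(0.01)


if __name__ == '__main__':
    print('=============== crawl images start ==============')
    start = time.time()
    main()
    print('=============== crawl images end ==============')
    print('Time usage: {0:.3f}'.format(time.time() - start))
```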
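`do_request` retries immediately after a failure, which tends to hit a struggling server again right away. A common refinement is exponential backoff. The sketch below is a hypothetical helper (`retry_with_backoff` is not part of the original script or of `requests`); it wraps any zero-argument callable, and the `sleep` parameter is injectable only so the delays can be checked without actually waiting.

```python
import time


def retry_with_backoff(func, tries=3, base_delay=0.5, exceptions=(Exception,), sleep=time.sleep):
    '''
    Call func() up to `tries` times. After each failure (except the last),
    sleep base_delay * 2**attempt seconds: 0.5 s, 1 s, 2 s, ... by default.
    Re-raises the last exception if every attempt fails.
    '''
    for attempt in range(tries):
        try:
            return func()
        except exceptions:
            if attempt == tries - 1:
                raise  # out of attempts: let the caller see the error
            sleep(base_delay * 2 ** attempt)


# Hypothetical use inside the crawler, limited to request errors:
# res = retry_with_backoff(
#     lambda: requests.get(url, headers=headers, timeout=5),
#     exceptions=(requests.exceptions.RequestException,),
# )
```

Passing `exceptions` explicitly keeps programming errors (for example a `KeyError` in your own code) from being retried silently.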
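The script above starts one `threading.Thread` per image, so a page with many images opens that many connections at once with no upper bound. One alternative, swapped in here and not the author's approach, is `concurrent.futures.ThreadPoolExecutor`, which caps the number of worker threads and waits for them when its `with` block ends. In this minimal sketch, `download_all` and `max_workers=8` are assumptions; `worker` would be `save_img` and each job an `(img_url, img_name, page)` tuple.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def download_all(jobs, worker, max_workers=8):
    '''
    Run worker(*job) for every job on at most max_workers threads.
    Returns {job: result}; a job that raised maps to its exception instead.
    Jobs must be hashable (tuples work).
    '''
    results = {}
    # Leaving the "with" block calls shutdown(wait=True), which plays the role of join()
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # submit() schedules worker(*job) and returns a Future
        futures = {pool.submit(worker, *job): job for job in jobs}
        for future in as_completed(futures):
            job = futures[future]
            try:
                results[job] = future.result()
            except Exception as e:  # one failed image should not stop the others
                results[job] = e
    return results


# Hypothetical use inside crawl_images:
# jobs = [(img['src'], img['alt'], page) for img in (a.find('img') for a in lists) if img]
# download_all(jobs, save_img, max_workers=8)
```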

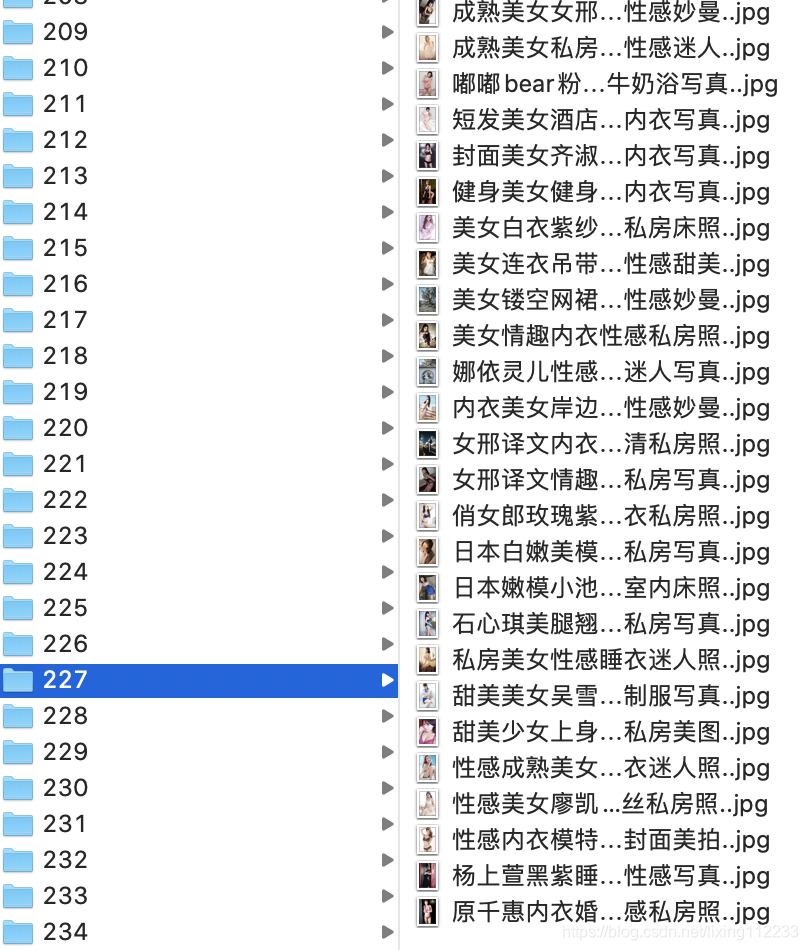

Crawl results:

Source: CSDN

Author: codsing

Link: https://blog.csdn.net/lixing112233/article/details/104734898