This post records how to sort tensors in TensorFlow.

tf.sort and tf.argsort

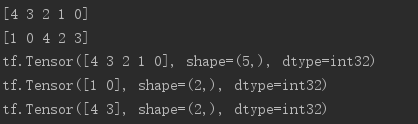

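import tensorflow as tf
# Tensor a holds the integers 0 through 4 in shuffled order
a = tf.random.shuffle(tf.range(5))
# Print the tensor sorted in descending order
print(tf.sort(a, direction='DESCENDING').numpy())
# Print, for the descending order, the index each value had in the original tensor
print(tf.argsort(a, direction='DESCENDING').numpy())
index = tf.argsort(a, direction='DESCENDING')
# Gather values by that index sequence (gives the same result as tf.sort)
print(tf.gather(a, index))
# Get the two largest values together with their positions
res = tf.math.top_k(a, 2)
# indices holds the positions
print(res.indices)
# values holds the values
print(res.values)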
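
Because `tf.random.shuffle` gives a different order on each run, the printed results above are hard to check by eye. The following is a minimal plain-Python sketch of what the three operations compute, using a fixed list as one assumed shuffle of 0 to 4. The helpers `argsort_desc`, `gather`, and `top_k` are hypothetical stand-ins written for illustration. They are not TensorFlow functions.

```python
# Plain-Python stand-ins for tf.argsort, tf.gather and tf.math.top_k
# on a 1-D input (illustrative only, not the TensorFlow implementation).

a = [2, 0, 4, 1, 3]  # assumed: one possible shuffle of 0..4


def argsort_desc(values):
    """Indices that order `values` from largest to smallest."""
    return sorted(range(len(values)), key=lambda i: values[i], reverse=True)


def gather(values, indices):
    """Pick values[i] for each i in indices."""
    return [values[i] for i in indices]


def top_k(values, k):
    """Return (values, indices) of the k largest entries."""
    idx = argsort_desc(values)[:k]
    return gather(values, idx), idx


sorted_desc = sorted(a, reverse=True)
index = argsort_desc(a)
top_values, top_indices = top_k(a, 2)

print(sorted_desc)                      # [4, 3, 2, 1, 0]
print(index)                            # [2, 4, 0, 3, 1]
print(gather(a, index) == sorted_desc)  # True
print(top_values, top_indices)          # [4, 3] [2, 4]
```

The third line of output is the point: gathering by the argsort indices reproduces the sorted sequence, which is why `tf.gather(a, index)` and `tf.sort(a, ...)` print the same values.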

Example: computing accuracy:

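# Predicted probabilities output by the model (2 samples, 3 classes)
prob = tf.constant([[0.1, 0.2, 0.7], [0.2, 0.7, 0.1]])
# Ground-truth labels y
target = tf.constant([2, 0])
# Indices of the top-3 classes for each sample, shape [2, 3]
k_b = tf.math.top_k(prob, 3).indices
# Transpose to shape [3, 2], so that row i holds every sample's top-(i+1) prediction
print(tf.transpose(k_b, [1, 0]))
# Broadcast the labels to the same shape as the transposed top-k matrix for easy comparison
target = tf.broadcast_to(target, [3, 2])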
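
To make the shapes in these steps concrete, here is a small plain-Python sketch that traces the same `prob` and `target` values through top-k, transpose, broadcast, and comparison. It uses nested lists instead of tensors, and the variable names `k_b_t`, `target_b`, and `correct` are chosen here for illustration.

```python
# Trace the top-k / transpose / broadcast / compare steps with nested lists.
prob = [[0.1, 0.2, 0.7], [0.2, 0.7, 0.1]]
target = [2, 0]
k = 3

# Class indices per sample, most probable first: shape [batch, k]
k_b = [sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
       for row in prob]

# Transpose to shape [k, batch]: row i holds each sample's (i+1)-th choice
k_b_t = [list(col) for col in zip(*k_b)]

# Repeat the label row k times, mimicking a broadcast to shape [k, batch]
target_b = [list(target) for _ in range(k)]

# Element-wise comparison: True where the ranked prediction equals the label
correct = [[p == t for p, t in zip(p_row, t_row)]
           for p_row, t_row in zip(k_b_t, target_b)]

print(k_b)      # [[2, 1, 0], [1, 0, 2]]
print(k_b_t)    # [[2, 1], [1, 0], [0, 2]]
print(correct)  # [[True, False], [False, True], [False, False]]
```

Reading `correct` row by row: the first sample is right at rank 1, the second only at rank 2, so counting `True` values in the first k rows gives the number of top-k hits.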

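# Function that computes top-k accuracy
def accuracy(output, target, topk=(1,)):
    maxk = max(topk)
    batch_size = target.shape[0]
    # Top-maxk class indices per sample, shape [batch_size, maxk]
    pred = tf.math.top_k(output, maxk).indices
    # Transpose to shape [maxk, batch_size]
    pred = tf.transpose(pred, perm=[1, 0])
    target_ = tf.broadcast_to(target, pred.shape)
    # True where the prediction at a given rank equals the label
    correct = tf.equal(pred, target_)
    res = []
    for k in topk:
        # Flatten the first k rows and count the hits among them
        correct_k = tf.cast(tf.reshape(correct[:k], [-1]), dtype=tf.float32)
        correct_k = tf.reduce_sum(correct_k)
        # Fraction of samples whose label is among the top k predictions
        acc = float(correct_k / batch_size)
        res.append(acc)
    return res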
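
As a sanity check on the function above, the sketch below computes top-k accuracy in plain Python by a different route: it tests directly whether each label appears among the sample's k highest-scoring classes. The name `topk_accuracy` is hypothetical, and the inputs are the `prob` and label values from the earlier example.

```python
# Reference top-k accuracy by direct membership test (illustrative sketch).
prob = [[0.1, 0.2, 0.7], [0.2, 0.7, 0.1]]
target = [2, 0]


def topk_accuracy(scores, labels, topk=(1,)):
    """Fraction of samples whose label is among the k highest-scoring classes."""
    # Class indices per sample, highest score first
    ranked = [sorted(range(len(row)), key=lambda i: row[i], reverse=True)
              for row in scores]
    res = []
    for k in topk:
        hits = sum(1 for r, t in zip(ranked, labels) if t in r[:k])
        res.append(hits / len(labels))
    return res


result = topk_accuracy(prob, target, topk=(1, 2, 3))
print(result)  # [0.5, 1.0, 1.0]
```

For these inputs, the first sample's label 2 is its top prediction, while the second sample's label 0 is only its second choice, so top-1 accuracy is 0.5 and top-2 accuracy is already 1.0.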

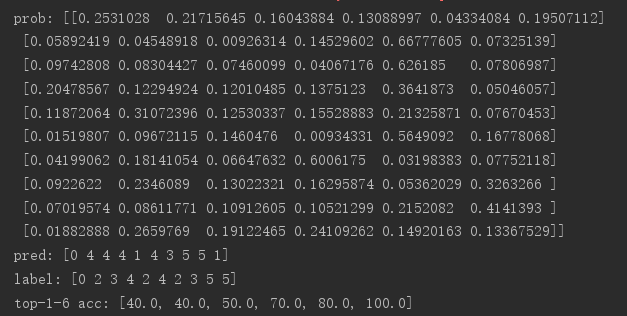

import os
# Set the log level before importing TensorFlow so it also covers messages emitted at import time
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'
import tensorflow as tf
tf.random.set_seed(2467)

def accuracy(output, target, topk=(1,)):
    maxk = max(topk)
    batch_size = target.shape[0]
    # Top-maxk class indices per sample, transposed to shape [maxk, b]
    pred = tf.math.top_k(output, maxk).indices
    pred = tf.transpose(pred, perm=[1, 0])
    target_ = tf.broadcast_to(target, pred.shape)
    # [maxk, b]
    correct = tf.equal(pred, target_)
    res = []
    for k in topk:
        # Count the hits within the first k ranks
        correct_k = tf.cast(tf.reshape(correct[:k], [-1]), dtype=tf.float32)
        correct_k = tf.reduce_sum(correct_k)
        # Accuracy as a percentage
        acc = float(correct_k * (100.0 / batch_size))
        res.append(acc)
    return res

# Random scores for 10 samples and 6 classes, turned into probabilities
output = tf.random.normal([10, 6])
output = tf.math.softmax(output, axis=1)
# Random integer labels in [0, 6)
target = tf.random.uniform([10], maxval=6, dtype=tf.int32)
print('prob:', output.numpy())
pred = tf.argmax(output, axis=1)
print('pred:', pred.numpy())
print('label:', target.numpy())
acc = accuracy(output, target, topk=(1, 2, 3, 4, 5, 6))
print('top-1-6 acc:', acc)
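
Two properties of the printed list can be checked without looking at the individual numbers: top-k accuracy never decreases as k grows, and once k equals the number of classes it must be 100%, since every label is then among the top k. The sketch below illustrates this in plain Python with random scores. It assumes Python's `random` module in place of TensorFlow's generators, so the values will not match the script's output, and `topk_accuracy_percent` is a hypothetical helper.

```python
import random

random.seed(2467)  # seed value borrowed from the script; any seed works
num_samples, num_classes = 10, 6

# Random scores and labels, same shapes as in the script (10 samples, 6 classes)
scores = [[random.random() for _ in range(num_classes)]
          for _ in range(num_samples)]
labels = [random.randrange(num_classes) for _ in range(num_samples)]


def topk_accuracy_percent(scores, labels, topk):
    """Percentage of samples whose label is among the k highest-scoring classes."""
    ranked = [sorted(range(len(row)), key=lambda i: row[i], reverse=True)
              for row in scores]
    return [100.0 * sum(t in r[:k] for r, t in zip(ranked, labels)) / len(labels)
            for k in topk]


acc = topk_accuracy_percent(scores, labels, topk=(1, 2, 3, 4, 5, 6))
print('top-1-6 acc:', acc)

# Accuracy is non-decreasing in k and reaches 100% at k == num_classes
print(all(x <= y for x, y in zip(acc, acc[1:])))  # True
print(acc[-1] == 100.0)                           # True
```

If the TensorFlow script ever prints a list that breaks either property, that points to a bug in the accuracy computation or to labels outside the valid class range.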

Source: https://www.cnblogs.com/zdm-code/p/12231585.html