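Today we take a look at A3C (Asynchronous Advantage Actor-Critic).

The problem it solves

The earlier Actor-Critic algorithm converges slowly.

A3C addresses this with three improvements: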

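1. Recall the Actor's parameter update from the earlier Actor-Critic algorithm, in which the Critic's estimate $Q(s_t, a_t)$ weights the score function:

$$\theta = \theta + \alpha \nabla_\theta \log \pi_\theta(s_t, a_t)\, Q(s_t, a_t)$$

A3C weights it by the advantage function instead:

$$A(s_t, a_t) = Q(s_t, a_t) - V(s_t)$$

The parameter update becomes:

$$\theta = \theta + \alpha \nabla_\theta \log \pi_\theta(s_t, a_t)\, A(s_t, a_t)$$

This is the update for the Actor.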
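To make the advantage-weighted Actor update concrete, the sketch below applies one step of θ ← θ + α ∇θ log πθ(a|s) · A to a softmax policy. It is only an illustration under assumptions that are not in the original: a single state, a three-action policy parameterized directly by its logits, and the hypothetical helper names `softmax` and `actor_step`. For a softmax over logits, the gradient of log π(a) with respect to logit k is 1[k = a] − π(k).

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def actor_step(logits, action, advantage, alpha=0.1):
    """One gradient-ascent step on log pi(action) * advantage.

    Assumption: the policy is a softmax over `logits`, so
    d log pi(action) / d logit_k = 1[k == action] - pi_k.
    """
    probs = softmax(logits)
    return [
        logit + alpha * advantage * ((1.0 if k == action else 0.0) - probs[k])
        for k, logit in enumerate(logits)
    ]


logits = [0.0, 0.0, 0.0]  # start from a uniform policy
better = actor_step(logits, action=1, advantage=+2.0)
worse = actor_step(logits, action=1, advantage=-2.0)

# A positive advantage raises pi(a); a negative advantage lowers it.
print(softmax(logits)[1], softmax(better)[1], softmax(worse)[1])
```

The sign of the advantage decides the direction of the step: actions that turned out better than the Critic's baseline become more likely, and actions that turned out worse become less likely.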

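There is one more small optimization: the loss function of the policy in Actor-Critic gains an entropy term for the policy π, with coefficient c. Compared with Actor-Critic, the gradient update of the policy parameters becomes the following, where $A$ is the advantage function and $H$ is the entropy of the policy:

$$\theta = \theta + \alpha \nabla_\theta \log \pi_\theta(s_t, a_t)\, A(s_t, a_t) + c \nabla_\theta H(\pi(s_t, \theta))$$

The update follows the gradient of the entropy upward, so it raises the entropy rather than lowering it. This keeps the policy from collapsing too early onto a single action and encourages exploration.

This is the update for the parameters of the Actor network.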
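The effect of the entropy term can be checked numerically. The sketch below computes H(π) = −Σ π(a) log π(a) for a few hand-picked action distributions; the distributions and the helper name `entropy` are illustrative assumptions. The uniform distribution has the highest entropy and a deterministic one has zero entropy, so an entropy bonus with c > 0 pulls the policy away from committing to one action too early.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete distribution."""
    return sum(-p * math.log(p) for p in probs if p > 0.0)


uniform = [0.5, 0.5]
skewed = [0.9, 0.1]
deterministic = [1.0, 0.0]

for name, dist in [("uniform", uniform), ("skewed", skewed),
                   ("deterministic", deterministic)]:
    print(f"{name:13s} H = {entropy(dist):.4f}")
```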

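Let us look more closely at the advantage function:

$$A(s_t, a_t) = Q(s_t, a_t) - V(s_t)$$

Because

$$Q(s_t, a_t) = r_t + \gamma V(s_{t+1})$$

we have

$$A(s_t) = r_t + \gamma V(s_{t+1}) - V(s_t)$$

This is the result for single-step sampling.

A3C uses multi-step sampling:

$$A(s_t) = r_t + \gamma r_{t+1} + \dots + \gamma^{n-1} r_{t+n-1} + \gamma^n V(s_{t+n}) - V(s_t)$$

This quantity also drives the update of the Critic's parameters, which are trained to minimize its square.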
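The multi-step target can be computed in one backward pass over the sampled rewards, which is also how the worker code later in this post builds its value targets. The sketch below is a minimal illustration; the function name `n_step_advantages`, the reward sequence, and the value estimates are made-up assumptions.

```python
def n_step_advantages(rewards, values, bootstrap_value, gamma=0.9):
    """Return (targets, advantages) for one sampled segment.

    rewards[i]      -- reward r_i received after acting in state s_i
    values[i]       -- Critic estimate V(s_i)
    bootstrap_value -- V of the state after the last step (0.0 if terminal)
    """
    targets = []
    running = bootstrap_value
    for r in reversed(rewards):        # walk the segment backwards
        running = r + gamma * running  # Q(s, i) = r_i + gamma * Q(s, i + 1)
        targets.append(running)
    targets.reverse()
    advantages = [q - v for q, v in zip(targets, values)]
    return targets, advantages


# Hypothetical three-step segment that ends in a terminal state.
targets, advantages = n_step_advantages(
    rewards=[1.0, 1.0, 1.0], values=[2.0, 1.5, 1.0], bootstrap_value=0.0)
print(targets)
print(advantages)
```

Each state in the segment gets the longest return available to it: the first state sees three real rewards before bootstrapping, the last state only one.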

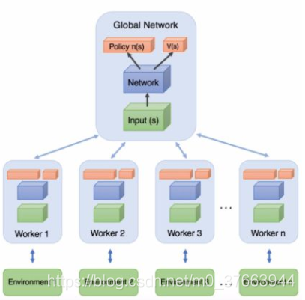

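2. Asynchronous training framework

The Global Network at the top of the figure is the shared part mentioned in the previous section. It is essentially one shared neural network model that performs the functions of both the Actor network and the Critic network. Below it are n worker threads. Each thread holds a network with the same structure as the shared network and interacts with its own environment independently to collect experience. The threads run independently and do not interfere with each other.

Once a thread has collected a certain amount of data from the environment, it computes the gradient of the loss function of its own network. These gradients are not used to update the thread's own network; they update the shared network instead. In other words, the n threads independently use their accumulated gradients to update the parameters of the shared model. At intervals, each thread copies the shared network's parameters into its own network, which then guides its subsequent interaction with the environment.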
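The pull, compute, push cycle described above can be sketched with plain Python threads. This is a toy illustration, not A3C itself: the "network" is a single shared number, the loss (x − 3)² stands in for the real loss, and all names (`SharedParams`, `worker`) are hypothetical. Guarding each push with a lock is also an assumption of this sketch.

```python
import threading


class SharedParams:
    """Stand-in for the global network: one scalar parameter."""

    def __init__(self, x):
        self.x = x
        self.lock = threading.Lock()  # assumption: each push is guarded by a lock

    def apply_gradient(self, grad, lr):
        with self.lock:
            self.x -= lr * grad


def worker(shared, n_updates, lr=0.05):
    for _ in range(n_updates):
        local_x = shared.x               # pull: sync the local copy from global
        grad = 2.0 * (local_x - 3.0)     # gradient of (x - 3)^2 on the local copy
        shared.apply_gradient(grad, lr)  # push: update global, not the local copy


shared = SharedParams(x=0.0)
threads = [threading.Thread(target=worker, args=(shared, 200)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(f"shared x after training: {shared.x:.4f}")
```

A worker may compute its gradient from a slightly stale copy, because other workers can push in between its pull and its push. The scheme tolerates this as long as the step size is small.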

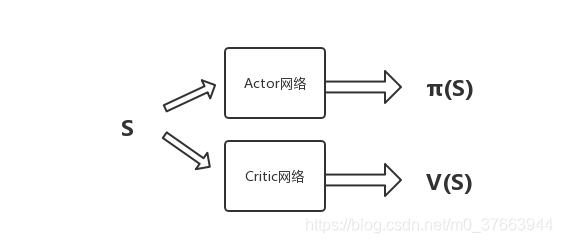

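3. Network structure optimization

Here the two networks are merged into one, which outputs the policy $\pi(a|s)$ and the state value $V(s)$ at the same time.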
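A merged network of this kind can be pictured as one shared hidden layer feeding two output heads. The sketch below uses only the standard library so the data flow is easy to follow; the class name `TinyActorCritic`, the layer sizes, the bias-free layers, and the random initialization are all assumptions for illustration. The PyTorch code later in this post keeps two separate sub-networks inside one module, so this shows the shared-trunk variant rather than that code.

```python
import math
import random


class TinyActorCritic:
    """Shared hidden layer with a policy head and a value head (toy sizes)."""

    def __init__(self, n_states, n_hidden, n_actions, seed=0):
        rng = random.Random(seed)

        def matrix(rows, cols):
            return [[rng.uniform(-0.5, 0.5) for _ in range(cols)]
                    for _ in range(rows)]

        self.w_shared = matrix(n_hidden, n_states)   # shared trunk
        self.w_policy = matrix(n_actions, n_hidden)  # Actor head
        self.w_value = matrix(1, n_hidden)           # Critic head

    @staticmethod
    def _matvec(w, x):
        return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]

    def forward(self, state):
        # Shared features with a ReLU non-linearity.
        hidden = [max(0.0, h) for h in self._matvec(self.w_shared, state)]
        # Actor head: softmax over action logits gives pi(a | s).
        logits = self._matvec(self.w_policy, hidden)
        m = max(logits)
        exps = [math.exp(z - m) for z in logits]
        total = sum(exps)
        probs = [e / total for e in exps]
        # Critic head: a single scalar V(s).
        value = self._matvec(self.w_value, hidden)[0]
        return probs, value


net = TinyActorCritic(n_states=4, n_hidden=8, n_actions=2)
probs, value = net.forward([0.1, -0.2, 0.05, 0.3])
print(probs, value)
```

Both heads read the same hidden features, so one forward pass yields the action probabilities and the value estimate together.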

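Algorithm flow

Here we summarize the A3C algorithm flow. Because A3C is asynchronous and multi-threaded, we give the flow for an arbitrary single thread.

Input: the structure of the shared A3C network, with parameters $\theta, w$; the structure of this thread's A3C network, with parameters $\theta', w'$; the globally shared iteration counter $T$; the global maximum number of iterations $T_{max}$; the maximum time-sequence length $t_{local}$ of one iteration within a thread; the state feature dimension $n$; the action set $A$; the step sizes $\alpha, \beta$; the entropy coefficient $c$; the discount factor $\gamma$; the exploration rate $\epsilon$.

Output: the parameters $\theta, w$ of the shared A3C network.

1. Initialize the time step $t = 1$.

2. Reset the gradient accumulators of the Actor and the Critic: $d\theta \leftarrow 0$, $dw \leftarrow 0$.

3. Sync the parameters of the shared A3C network into this thread's network: $\theta' = \theta$, $w' = w$.

4. Set $t_{start} = t$ and initialize the state $s_t$.

5. Select the action $a_t$ according to the policy $\pi(a_t | s_t; \theta')$.

6. Execute the action $a_t$ to obtain the reward $r_t$ and the new state $s_{t+1}$.

7. $t \leftarrow t + 1$, $T \leftarrow T + 1$.

8. If $s_t$ is a terminal state, or $t - t_{start} = t_{local}$, go to step 9; otherwise return to step 5.

9. Compute $Q(s, t)$ for the last position $s_t$ of the time sequence:

$$Q(s, t) = \begin{cases} 0 & \text{terminal state} \\ V(s_t, w') & \text{non-terminal state (bootstrapping)} \end{cases}$$

10. for $i \in (t-1, t-2, \dots, t_{start})$:

1) Compute $Q(s, i)$ for each time step: $Q(s, i) = r_i + \gamma Q(s, i+1)$

2) Accumulate the local gradient of the Actor: $d\theta \leftarrow d\theta + \nabla_{\theta'} \log \pi_{\theta'}(s_i, a_i)\,(Q(s, i) - V(s_i, w')) + c \nabla_{\theta'} H(\pi(s_i, \theta'))$

3) Accumulate the local gradient of the Critic: $dw \leftarrow dw + \dfrac{\partial (Q(s, i) - V(s_i, w'))^2}{\partial w'}$

11. Update the parameters of the global network: $\theta = \theta + \alpha\, d\theta$, $w = w - \beta\, dw$

12. If $T > T_{max}$, the algorithm ends and outputs the shared A3C network parameters $\theta, w$; otherwise go to step 3.

This is the A3C algorithm flow for a single thread.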

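Code

This code may still contain errors, and corrections are welcome. It is a PyTorch implementation based on the TensorFlow code.

To run it, enter python a3c.py on the command line.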

# -*- coding: utf-8 -*-
"""
Created on Wed Dec 11 10:54:30 2019

@author: asus
"""

#######################################################################
# Copyright (C) #
# 2016 - 2019 Pinard Liu(liujianping-ok@163.com) #
# https://www.cnblogs.com/pinard #
# Permission given to modify the code as long as you keep this #
# declaration at the top #
#######################################################################
## reference from MorvanZhou's A3C code on Github, minor update:##
##https://github.com/MorvanZhou/Reinforcement-learning-with-tensorflow/blob/master/contents/10_A3C/A3C_discrete_action.py ##
## https://www.cnblogs.com/pinard/p/10334127.html ##
## Reinforcement Learning (15): A3C ##

import numpy as np
import gym
import matplotlib.pyplot as plt
import torch
from torch import nn
from torch.nn import functional as F
import torch.multiprocessing as mp

GAME = 'CartPole-v0'
# The next three settings are carried over from the TensorFlow version
# and are not used below.
OUTPUT_GRAPH = True
LOG_DIR = './log'
GLOBAL_NET_SCOPE = 'Global_Net'

N_WORKERS = 3
MAX_GLOBAL_EP = 3000
UPDATE_GLOBAL_ITER = 100
GAMMA = 0.9
ENTROPY_BETA = 0.001
LR_A = 0.001  # learning rate for actor
LR_C = 0.001  # learning rate for critic
GLOBAL_RUNNING_R = []
GLOBAL_EP = 0
STEP = 3000  # step limit per evaluation episode
TEST = 10  # number of evaluation episodes run after training

env = gym.make(GAME)
N_S = env.observation_space.shape[0]
N_A = env.action_space.n

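def ensure_shared_grads(model, shared_model):
    # Point the shared model's gradients at the local model's gradients,
    # so the shared optimizer steps with the locally computed values.
    for param, shared_param in zip(model.parameters(),
                                   shared_model.parameters()):
        shared_param._grad = param.grad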

class Actor(torch.nn.Module):
    def __init__(self):
        super(Actor, self).__init__()
        self.actor_fc1 = nn.Linear(N_S, 200)
        self.actor_fc1.weight.data.normal_(0, 0.6)
        self.actor_fc2 = nn.Linear(200, N_A)
        self.actor_fc2.weight.data.normal_(0, 0.6)

    def forward(self, s):
        l_a = F.relu6(self.actor_fc1(s))
        # softmax over the action dimension; an explicit dim avoids the
        # implicit-dimension deprecation warning
        a_prob = F.softmax(self.actor_fc2(l_a), dim=-1)
        return a_prob


class Critic(torch.nn.Module):
    def __init__(self):
        super(Critic, self).__init__()
        self.critic_fc1 = nn.Linear(N_S, 100)
        self.critic_fc1.weight.data.normal_(0, 0.6)
        self.critic_fc2 = nn.Linear(100, 1)
        self.critic_fc2.weight.data.normal_(0, 0.6)

    def forward(self, s):
        l_c = F.relu6(self.critic_fc1(s))
        v = self.critic_fc2(l_c)  # state value, shape [N, 1]
        return v

class ACNet(torch.nn.Module):
    def __init__(self):
        super(ACNet, self).__init__()
        self.actor = Actor()
        self.critic = Critic()
        self.actor_optimizer = torch.optim.Adam(params=self.actor.parameters(), lr=LR_A)
        self.critic_optimizer = torch.optim.Adam(params=self.critic.parameters(), lr=LR_C)

    def return_td(self, s, v_target):
        # Reshape the target to [N, 1] to match the Critic output.
        # Subtracting a [N, 1] tensor from a [N] tensor would silently
        # broadcast to [N, N].
        self.v = self.critic(s)
        td = v_target.view(-1, 1) - self.v
        return td.detach()

    def return_c_loss(self, s, v_target):
        self.v = self.critic(s)
        self.td = v_target.view(-1, 1) - self.v
        self.c_loss = (self.td ** 2).mean()
        return self.c_loss

    def return_a_loss(self, s, td, a_his):
        self.a_prob = self.actor(s)
        a_his = a_his.unsqueeze(1)
        one_hot = torch.zeros(a_his.shape[0], N_A).scatter_(1, a_his, 1)
        # log-probability of the action that was actually taken
        log_prob = torch.sum(torch.log(self.a_prob + 1e-5) * one_hot, dim=1, keepdim=True)
        exp_v = log_prob * td
        # entropy bonus: a higher entropy keeps the policy exploring
        entropy = -torch.sum(self.a_prob * torch.log(self.a_prob + 1e-5), dim=1, keepdim=True)
        self.exp_v = ENTROPY_BETA * entropy + exp_v
        self.a_loss = (-self.exp_v).mean()
        return self.a_loss

    def choose_action(self, s):  # run by a local
        prob_weights = self.actor(torch.FloatTensor(s[np.newaxis, :]))
        # select action w.r.t. the action probabilities
        action = np.random.choice(range(prob_weights.shape[1]),
                                  p=prob_weights.detach().numpy().ravel())
        return action

def work(name, AC, lock, global_ep, running_r):
    # global_ep (mp.Value) and running_r (manager list) are shared between
    # processes; plain module-level globals would be private to each worker.
    # The gym calls below assume the pre-0.26 API: reset() returns only the
    # observation and step() returns four values.
    env = gym.make(GAME).unwrapped
    total_step = 1
    buffer_s, buffer_a, buffer_r = [], [], []
    model = ACNet()
    actor_optimizer = AC.actor_optimizer
    critic_optimizer = AC.critic_optimizer
    while global_ep.value < MAX_GLOBAL_EP:
        with lock:
            model.load_state_dict(AC.state_dict())  # pull global parameters
        s = env.reset()
        ep_r = 0
        while True:
            # if name == 0:
            #     env.render()
            a = model.choose_action(s)
            s_, r, done, info = env.step(a)
            if done:
                r = -5
            ep_r += r
            buffer_s.append(s)
            buffer_a.append(a)
            buffer_r.append(r)

            if total_step % UPDATE_GLOBAL_ITER == 0 or done:  # update global and assign to local net
                if done:
                    v_s_ = 0.0  # terminal
                else:
                    # not terminal: bootstrap from V(s_), as a plain float
                    with torch.no_grad():
                        v_s_ = model.critic(torch.FloatTensor(s_[np.newaxis, :]))[0, 0].item()

                # build the discounted value targets, walking backwards
                buffer_v_target = []
                for reward in buffer_r[::-1]:
                    v_s_ = reward + GAMMA * v_s_
                    buffer_v_target.append(v_s_)
                buffer_v_target.reverse()

                batch_s = torch.FloatTensor(np.vstack(buffer_s))
                batch_a = torch.LongTensor(buffer_a)
                batch_v_target = torch.FloatTensor(buffer_v_target).view(-1, 1)  # [N, 1]

                td_error = model.return_td(batch_s, batch_v_target)

                # Hold the lock only while touching the shared network, so
                # the workers can interact with their environments in parallel.
                with lock:
                    # Critic: local gradients applied to the shared parameters
                    model.zero_grad()  # clear gradients left from the last update
                    c_loss = model.return_c_loss(batch_s, batch_v_target)
                    c_loss.backward()
                    ensure_shared_grads(model, AC)
                    critic_optimizer.step()

                    # Actor: local gradients applied to the shared parameters
                    model.zero_grad()
                    a_loss = model.return_a_loss(batch_s, td_error, batch_a)
                    a_loss.backward()
                    ensure_shared_grads(model, AC)
                    actor_optimizer.step()

                    # pull the updated global parameters into the local net
                    model.load_state_dict(AC.state_dict())

                buffer_s, buffer_a, buffer_r = [], [], []

            s = s_
            total_step += 1
            if done:
                with lock:
                    if len(running_r) == 0:  # record running episode reward
                        running_r.append(ep_r)
                    else:
                        running_r.append(0.99 * running_r[-1] + 0.01 * ep_r)
                    global_ep.value += 1
                    print("name: %s" % name, "| Ep_r: %i" % running_r[-1])
                break


if __name__ == "__main__":
    GLOBAL_AC = ACNet()  # we only need its params
    GLOBAL_AC.share_memory()
    lock = mp.Lock()
    global_ep = mp.Value('i', 0)  # episode counter shared by all workers
    manager = mp.Manager()
    running_r = manager.list()  # moving-average rewards shared by all workers

    # Create workers
    workers = []
    for i in range(N_WORKERS):
        p = mp.Process(target=work, args=(i, GLOBAL_AC, lock, global_ep, running_r))
        p.start()
        workers.append(p)
    for p in workers:
        p.join()

    # Evaluate the trained global network
    total_reward = 0
    for i in range(TEST):
        state = env.reset()
        for j in range(STEP):
            # env.render()
            action = GLOBAL_AC.choose_action(state)  # sampled from the policy
            state, reward, done, _ = env.step(action)
            total_reward += reward
            if done:
                break
    ave_reward = total_reward / TEST
    print('episode: ', global_ep.value, 'Evaluation Average Reward:', ave_reward)

    GLOBAL_RUNNING_R = list(running_r)
    plt.plot(np.arange(len(GLOBAL_RUNNING_R)), GLOBAL_RUNNING_R)
    plt.xlabel('episode')
    plt.ylabel('Total moving reward')
    plt.show()

Source: CSDN

Author: m0_37663944

Link: https://blog.csdn.net/m0_37663944/article/details/103596336