Installing a Highly Available Kubernetes Cluster with kubeadm

OS version: CentOS 7.5

Kubernetes version: v1.15.0

Docker version: 18.06

| k8s-vip | k8s-m1 | k8s-m2 | k8s-m3 |
| --- | --- | --- | --- |
| 192.168.1.200 | 192.168.1.201 | 192.168.1.202 | 192.168.1.203 |

Configure hostnames

cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.1.201 k8s-m1

192.168.1.202 k8s-m2

192.168.1.203 k8s-m3

#Run on each node with that node's own name (k8s-m1, k8s-m2 or k8s-m3)

hostnamectl set-hostname k8s-m1
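The hosts entries above have to exist on all three nodes. A minimal sketch of adding them without creating duplicates is shown below; the helper name `add_host_entry` and the `HOSTS_FILE` variable are illustrative and not part of the original procedure. The demo writes to a scratch file unless `HOSTS_FILE` is set.

```shell
#!/bin/bash
# Hypothetical helper: append "IP NAME" to a hosts file only if NAME is not already listed.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # -w matches the hostname as a whole word, -q suppresses output
  if ! grep -qw -- "$name" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Demo against a scratch file; set HOSTS_FILE=/etc/hosts on a real node.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"
add_host_entry "$HOSTS_FILE" 192.168.1.201 k8s-m1
add_host_entry "$HOSTS_FILE" 192.168.1.202 k8s-m2
add_host_entry "$HOSTS_FILE" 192.168.1.203 k8s-m3
cat "$HOSTS_FILE"
```

Because the helper checks before appending, it can be run repeatedly on the same node.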

Passwordless SSH login

ssh-keygen

for ip in 192.168.1.{201,202,203};do ssh-copy-id root@$ip;done

Initialization script

cat init_env.sh

#!/bin/bash

#Disable the firewall and SELinux

systemctl stop firewalld && systemctl disable firewalld

setenforce 0

sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

#Disable swap

swapoff -a && sysctl -w vm.swappiness=0

sed -i 's/.*swap.*/#&/g' /etc/fstab
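The `sed` expression above prefixes every line that contains the word "swap", including lines that are already commented out, so a second run adds another `#`. A slightly stricter variant, sketched here under the assumption of GNU sed (as shipped with CentOS), only touches active entries; the function name `disable_swap_entries` is illustrative.

```shell
#!/bin/bash
# Comment out active swap entries in an fstab-style file.
# Lines that already start with '#' are skipped, so re-running is harmless.
disable_swap_entries() {
  local fstab="$1"
  # Only lines with "swap" as a whitespace-delimited field are commented out.
  sed -i -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$fstab"
}

# Demo on a scratch file with two sample entries (sample content is made up).
demo=$(mktemp)
printf '%s\n' \
  '/dev/mapper/centos-root / xfs defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' > "$demo"
disable_swap_entries "$demo"
disable_swap_entries "$demo"   # the second run changes nothing
cat "$demo"
```

On a real node the argument would be `/etc/fstab`; taking a backup copy first is a sensible precaution.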

#Set the kernel parameters Docker requires

cat > /etc/sysctl.d/k8s.conf << EOF

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

modprobe br_netfilter

sysctl -p /etc/sysctl.d/k8s.conf

#Load the ip_vs modules

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack_ipv4

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

#Install Docker 18.06

yum -y install yum-utils device-mapper-persistent-data lvm2 wget epel-release ipvsadm vim ntpdate

yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

yum install -y docker-ce-18.06.1.ce-3.el7

mkdir -p /etc/docker

cat > /etc/docker/daemon.json <<EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["https://gco4rcsp.mirror.aliyuncs.com"],

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m",

"max-file": "3"

}

}

EOF

systemctl enable docker && systemctl daemon-reload && systemctl restart docker

#Install the kube components

cat > /etc/yum.repos.d/kubernetes.repo <<EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum install -y kubelet-1.15.0 kubeadm-1.15.0 kubectl-1.15.0 --disableexcludes=kubernetes

systemctl enable kubelet

#Time synchronization cron job on all nodes

0 * * * * ntpdate 202.112.10.36
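One way to install that cron entry non-interactively is to merge it into the existing crontab. The sketch below is an assumption about how this could be done, not the original author's method; `merge_cron_line` is a made-up helper that reads a crontab on stdin and prints it with the job appended when it is missing.

```shell
#!/bin/bash
# Hypothetical helper: read an existing crontab on stdin and print it with
# the given job appended, unless an identical line is already present.
merge_cron_line() {
  local line="$1" current
  current="$(cat)"
  [ -n "$current" ] && printf '%s\n' "$current"
  # -F treats the job as a fixed string, -x requires a whole-line match
  grep -qxF -- "$line" <<< "$current" || printf '%s\n' "$line"
}

SYNC_JOB='0 * * * * ntpdate 202.112.10.36'

# Demo with an empty crontab. On a real node the pipeline would be:
#   crontab -l 2>/dev/null | merge_cron_line "$SYNC_JOB" | crontab -
printf '' | merge_cron_line "$SYNC_JOB"
```

Running the pipeline a second time leaves the crontab unchanged, which makes it safe to include in a provisioning script.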

#Set environment variables

export ETCD_version=v3.3.12

export ETCD_SSL_DIR=/etc/etcd/ssl

export APISERVER_IP=192.168.1.200

export SYSTEM_SERVICE_DIR=/usr/lib/systemd/system

#Download cfssl

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /usr/local/bin/cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /usr/local/bin/cfssljson

chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson

#Download etcd

wget https://github.com/etcd-io/etcd/releases/download/${ETCD_version}/etcd-${ETCD_version}-linux-amd64.tar.gz

tar -zxvf etcd-${ETCD_version}-linux-amd64.tar.gz && cd etcd-${ETCD_version}-linux-amd64

#Copy the executables to all master nodes

for node in k8s-m{1,2,3};do scp etcd* root@$node:/usr/local/bin/;done

#Generate the etcd CA and the etcd certificate

mkdir -p ${ETCD_SSL_DIR} && cd ${ETCD_SSL_DIR}

cat > ca-config.json <<EOF

{"signing":{"default":{"expiry":"87600h"},"profiles":{"kubernetes":{"usages":["signing","key encipherment","server auth","client auth"],"expiry":"87600h"}}}}

EOF

cat > etcd-ca-csr.json <<EOF

{"CN":"etcd","key":{"algo":"rsa","size":2048},"names":[{"C":"CN","ST":"BeiJing","L":"BeiJing","O":"etcd","OU":"etcd"}]}

EOF

cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare etcd-ca

cfssl gencert -ca=etcd-ca.pem -ca-key=etcd-ca-key.pem -config=ca-config.json -hostname=127.0.0.1,192.168.1.201,192.168.1.202,192.168.1.203 -profile=kubernetes etcd-ca-csr.json | cfssljson -bare etcd

rm -rf *.json *.csr

for node in k8s-m{2,3};do ssh root@$node mkdir -p ${ETCD_SSL_DIR} /var/lib/etcd;scp * root@$node:${ETCD_SSL_DIR};done

#etcd configuration file

cat /etc/etcd/config

#[Member]

ETCD_NAME="etcd01"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.1.201:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.1.201:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.1.201:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.1.201:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.1.201:2380,etcd02=https://192.168.1.202:2380,etcd03=https://192.168.1.203:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

cat ${SYSTEM_SERVICE_DIR}/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/etc/etcd/config

ExecStart=/usr/local/bin/etcd \
--name=${ETCD_NAME} \
--data-dir=${ETCD_DATA_DIR} \
--listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \
--listen-client-urls=${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \
--advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \
--initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \
--initial-cluster=${ETCD_INITIAL_CLUSTER} \
--initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \
--initial-cluster-state=new \
--cert-file=/etc/etcd/ssl/etcd.pem \
--key-file=/etc/etcd/ssl/etcd-key.pem \
--peer-cert-file=/etc/etcd/ssl/etcd.pem \
--peer-key-file=/etc/etcd/ssl/etcd-key.pem \
--trusted-ca-file=/etc/etcd/ssl/etcd-ca.pem \
--peer-trusted-ca-file=/etc/etcd/ssl/etcd-ca.pem

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

#Copy the configuration file and the unit file to the other master nodes

for node in k8s-m{2,3};do scp /etc/etcd/config root@$node:/etc/etcd/;scp ${SYSTEM_SERVICE_DIR}/etcd.service root@$node:${SYSTEM_SERVICE_DIR};done

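#After adjusting the configuration file on each node (ETCD_NAME and the node's own IP in the listen and advertise URLs), start etcd on every master node

systemctl enable --now etcd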
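The per-node adjustment of `/etc/etcd/config` is not spelled out in the text. A minimal sketch of one way to produce it is given below: a hypothetical `render_etcd_config` function that takes the member name and node IP and prints the same file shown earlier with those two values substituted. The member names `etcd02` and `etcd03` come from `ETCD_INITIAL_CLUSTER`; everything else about the helper is an assumption.

```shell
#!/bin/bash
# Hypothetical helper: print /etc/etcd/config for one member.
# $1 = member name (etcd01, etcd02, etcd03), $2 = that node's IP address.
render_etcd_config() {
  local name="$1" ip="$2"
  cat <<EOF
#[Member]
ETCD_NAME="${name}"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="etcd01=https://192.168.1.201:2380,etcd02=https://192.168.1.202:2380,etcd03=https://192.168.1.203:2380"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Print the configuration for the second member.
render_etcd_config etcd02 192.168.1.202
```

On the first master the output could be piped to a remote node, for example `render_etcd_config etcd02 192.168.1.202 | ssh root@k8s-m2 'cat > /etc/etcd/config'`.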

#Check the etcd cluster status

etcdctl \
--ca-file=/etc/etcd/ssl/etcd-ca.pem \
--cert-file=/etc/etcd/ssl/etcd.pem \
--key-file=/etc/etcd/ssl/etcd-key.pem \
--endpoints="https://192.168.1.201:2379,https://192.168.1.202:2379,https://192.168.1.203:2379" \
cluster-health
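The health check uses the etcdctl v2 flags, which is the default API for etcdctl in etcd 3.3. As a sketch, the endpoint list can be built from the node IPs, and the same check can be expressed with the v3 API; the helper name `etcd_endpoints` is illustrative, and the etcdctl call is left as a comment because it needs a running cluster.

```shell
#!/bin/bash
# Hypothetical helper: join node IPs into a comma-separated list of
# https client URLs on port 2379.
etcd_endpoints() {
  local ip out=""
  for ip in "$@"; do
    out="${out:+$out,}https://${ip}:2379"
  done
  printf '%s\n' "$out"
}

ENDPOINTS="$(etcd_endpoints 192.168.1.201 192.168.1.202 192.168.1.203)"
echo "$ENDPOINTS"

# v3 API equivalent of the check, to be run on a node with etcdctl installed:
#   ETCDCTL_API=3 etcdctl \
#     --cacert=/etc/etcd/ssl/etcd-ca.pem \
#     --cert=/etc/etcd/ssl/etcd.pem \
#     --key=/etc/etcd/ssl/etcd-key.pem \
#     --endpoints="$ENDPOINTS" endpoint health
```

Building the list in one place avoids stray spaces inside the quoted `--endpoints` value, which can appear when a quoted string is wrapped across lines.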

#High-availability deployment of the apiserver: HAProxy + Keepalived

for node in k8s-m{1,2,3};do ssh root@$node yum -y install haproxy keepalived;done

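#keepalived configuration file. On the other nodes, change state to BACKUP and set a priority lower than the MASTER node's; check the network interface name and adjust it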

cat > /etc/keepalived/keepalived.conf << EOF

vrrp_script check_haproxy {

script "/etc/keepalived/check_haproxy.sh"

interval 3

}

vrrp_instance VI_1 {

state MASTER

interface ens192

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

${APISERVER_IP}

}

track_script {

check_haproxy

}

}

EOF

cat > /etc/keepalived/check_haproxy.sh <<EOF

#!/bin/bash

systemctl status haproxy > /dev/null

if [[ \$? != 0 ]];then

echo "haproxy is down,close the keepalived"

systemctl stop keepalived

fi

EOF

chmod +x /etc/keepalived/check_haproxy.sh

cat > /etc/haproxy/haproxy.cfg << EOF

global

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

#---------------------------------------------------------------------

frontend k8s-api

bind *:8443

mode tcp

default_backend apiserver

#---------------------------------------------------------------------

backend apiserver

balance roundrobin

mode tcp

server k8s-m1 192.168.1.201:6443 check weight 1 maxconn 2000 check inter 2000 rise 2 fall 3

server k8s-m2 192.168.1.202:6443 check weight 1 maxconn 2000 check inter 2000 rise 2 fall 3

server k8s-m3 192.168.1.203:6443 check weight 1 maxconn 2000 check inter 2000 rise 2 fall 3

EOF

#Copy the configuration files to the other master nodes

for node in k8s-m{2,3};do scp /etc/keepalived/* root@$node:/etc/keepalived;scp /etc/haproxy/haproxy.cfg root@$node:/etc/haproxy;done

#Edit keepalived.conf on the other nodes, then start the services

#(the edits themselves are omitted here)
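The omitted edits on the two backup nodes amount to changing `state`, `priority` and possibly `interface`. They could be scripted roughly as follows, assuming GNU sed; `make_backup_conf` is a hypothetical helper, and the priority 90 and interface name used in the demo are example values only.

```shell
#!/bin/bash
# Hypothetical helper: turn a copied MASTER keepalived.conf into a BACKUP one.
# $1 = config file, $2 = priority (lower than the MASTER's), $3 = interface name.
make_backup_conf() {
  local conf="$1" priority="$2" iface="$3"
  sed -i -E \
    -e 's/^([[:space:]]*state)[[:space:]]+MASTER/\1 BACKUP/' \
    -e "s/^([[:space:]]*priority)[[:space:]]+[0-9]+/\1 ${priority}/" \
    -e "s/^([[:space:]]*interface)[[:space:]]+[^[:space:]]+/\1 ${iface}/" \
    "$conf"
}

# Demo on a scratch file that contains only the three relevant settings.
demo=$(mktemp)
printf '%s\n' 'state MASTER' 'interface ens192' 'priority 100' > "$demo"
make_backup_conf "$demo" 90 ens192
cat "$demo"
```

On k8s-m2 and k8s-m3 the first argument would be `/etc/keepalived/keepalived.conf`, each with its own priority below 100.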

for node in k8s-m{1,2,3};do ssh root@$node systemctl enable --now keepalived haproxy;done

#Check that the VIP is working

ping ${APISERVER_IP} -c 3

#kubeadm initialization

#List the container images that need to be pulled

kubeadm config images list

images=(kube-apiserver:v1.15.0 kube-controller-manager:v1.15.0 kube-scheduler:v1.15.0 kube-proxy:v1.15.0 pause:3.1 etcd:3.3.10 coredns:1.3.1)

for image in ${images[@]};do docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/${image};docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/${image} k8s.gcr.io/${image};docker rmi registry.cn-hangzhou.aliyuncs.com/google_containers/${image};done
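The one-line loop above pulls each image from the Aliyun mirror, retags it as `k8s.gcr.io/...` and removes the mirror tag. The same steps are sketched below in a form that can be previewed first; the `run` and `mirror_image` helpers and the `DRY_RUN` switch are additions for illustration, and dry-run mode is the default so nothing is pulled until `DRY_RUN=0` is set.

```shell
#!/bin/bash
MIRROR="registry.cn-hangzhou.aliyuncs.com/google_containers"
images=(kube-apiserver:v1.15.0 kube-controller-manager:v1.15.0 kube-scheduler:v1.15.0
        kube-proxy:v1.15.0 pause:3.1 etcd:3.3.10 coredns:1.3.1)

# Print the command in dry-run mode (default), execute it when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Pull one image from the mirror, retag it for k8s.gcr.io, drop the mirror tag.
mirror_image() {
  local image="$1"
  run docker pull "${MIRROR}/${image}" || return 1
  run docker tag "${MIRROR}/${image}" "k8s.gcr.io/${image}" || return 1
  run docker rmi "${MIRROR}/${image}"
}

for image in "${images[@]}"; do
  mirror_image "$image" || echo "failed: $image" >&2
done
```

Stopping at the first failed step for an image avoids tagging something that was never pulled.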

cat kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.15.0
controlPlaneEndpoint: "192.168.1.200:8443"
etcd:
  external:
    endpoints:
    - https://192.168.1.201:2379
    - https://192.168.1.202:2379
    - https://192.168.1.203:2379
    caFile: /etc/etcd/ssl/etcd-ca.pem
    certFile: /etc/etcd/ssl/etcd.pem
    keyFile: /etc/etcd/ssl/etcd-key.pem
networking:
  podSubnet: 10.244.0.0/16

---

apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs

kubeadm init --config=kubeadm-config.yaml --upload-certs

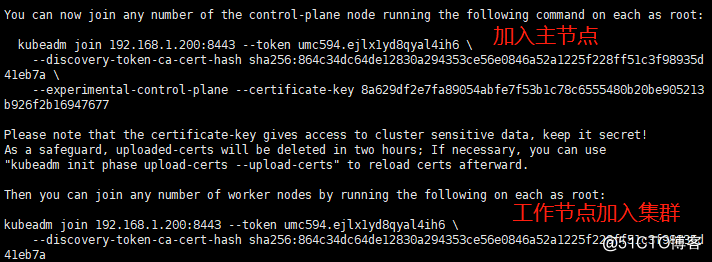

When initialization completes, it prints the commands for joining the cluster

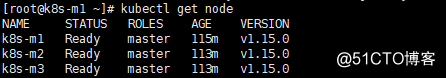

Run the join command on the remaining nodes

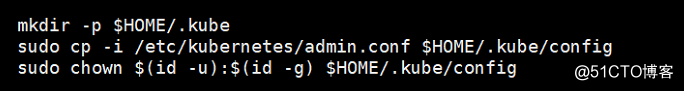
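Since the join command only appears in the screenshots, here is a sketch of its general shape for kubeadm v1.15. The token, CA certificate hash and certificate key are placeholders that must be copied from the actual `kubeadm init` output; `build_join_cmd` is a made-up helper that only assembles the command string.

```shell
#!/bin/bash
# Hypothetical helper: assemble a kubeadm join command line.
# $1 = control plane endpoint, $2 = token, $3 = CA cert hash (without "sha256:"),
# $4 = certificate key (optional; when given, the node joins as a control-plane member).
build_join_cmd() {
  local endpoint="$1" token="$2" ca_hash="$3" cert_key="${4:-}"
  local cmd="kubeadm join ${endpoint} --token ${token} --discovery-token-ca-cert-hash sha256:${ca_hash}"
  if [ -n "$cert_key" ]; then
    cmd="${cmd} --control-plane --certificate-key ${cert_key}"
  fi
  printf '%s\n' "$cmd"
}

# Placeholders only; substitute the values printed by kubeadm init.
build_join_cmd 192.168.1.200:8443 '<token>' '<ca-cert-hash>' '<certificate-key>'
```

Leaving out the fourth argument yields the worker-node form of the command. If the original output is lost, `kubeadm token create --print-join-command` on an existing master prints a fresh worker join command.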

Set up the admin.conf file to operate the cluster

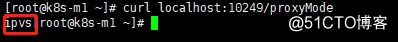
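The screenshot presumably shows the usual step of copying `/etc/kubernetes/admin.conf` to `~/.kube/config`. A minimal sketch of that step follows; the function name `install_kubeconfig` and its optional arguments are illustrative, and the `chmod 600` is an extra precaution that is not taken from the original.

```shell
#!/bin/bash
# Hypothetical helper: copy the admin kubeconfig to where kubectl looks by default.
# $1 = source file (default /etc/kubernetes/admin.conf), $2 = target directory (default ~/.kube).
install_kubeconfig() {
  local src="${1:-/etc/kubernetes/admin.conf}" dest_dir="${2:-$HOME/.kube}"
  mkdir -p "$dest_dir"
  cp "$src" "$dest_dir/config"
  chmod 600 "$dest_dir/config"   # the file contains cluster admin credentials
}

# Only act when the kubeadm-generated file is present on this machine.
if [ -f /etc/kubernetes/admin.conf ]; then
  install_kubeconfig
fi
```

After this, `kubectl get nodes` on the same machine should be able to reach the cluster through the VIP.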

Check whether kube-proxy is running in IPVS mode
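No command is given for this check. One possible approach, sketched here as an assumption, is to look at the IPVS table with `ipvsadm` (installed earlier): in IPVS mode it should list a virtual service for each Kubernetes Service. The helper `count_ipvs_services` is made up for illustration and simply counts the TCP and UDP service lines in `ipvsadm -Ln` output.

```shell
#!/bin/bash
# Hypothetical helper: read `ipvsadm -Ln` output on stdin and print the number
# of virtual services (lines that start with TCP or UDP).
count_ipvs_services() {
  # grep -c prints 0 and exits non-zero when nothing matches; keep the exit status clean
  grep -cE '^(TCP|UDP)[[:space:]]' || true
}

# On a cluster node:
#   ipvsadm -Ln | count_ipvs_services
# A result greater than 0 suggests kube-proxy has programmed IPVS rules.
# kube-proxy also typically reports its mode on its metrics port:
#   curl 127.0.0.1:10249/proxyMode
```

A count of zero after the cluster is up would point to kube-proxy having fallen back to iptables mode, for example because the ip_vs modules were not loaded.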

#Deploy the flannel network

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/62e44c867a2846fefb68bd5f178daf4da3095ccb/Documentation/kube-flannel.yml

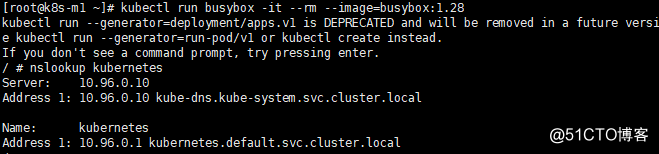

Verify DNS
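The original gives no command for the DNS check. A common way to test cluster DNS, sketched here as an assumption, is to resolve the `kubernetes.default` service from a throwaway pod. The pod name `dns-test`, the `busybox:1.28` image and the wrapper function `verify_dns` are choices made for this example.

```shell
#!/bin/bash
# Hypothetical helper: resolve a service name from inside a temporary pod.
# $1 = name to resolve (default kubernetes.default).
verify_dns() {
  local name="${1:-kubernetes.default}"
  if ! command -v kubectl >/dev/null 2>&1; then
    echo "kubectl not found; run this on a node with cluster access" >&2
    return 127
  fi
  # --rm deletes the pod once nslookup has finished
  kubectl run dns-test --rm -i --restart=Never --image=busybox:1.28 -- nslookup "$name"
}

# Usage on a master node after flannel and CoreDNS are running:
#   verify_dns
#   verify_dns kube-dns.kube-system
```

A successful lookup should return the cluster IP of the queried service; a timeout usually means the CoreDNS pods are not yet Ready or the pod network is not working.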

References:

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/high-availability/

https://blog.51cto.com/13740724/2393698

https://godoc.org/k8s.io/kubernetes/cmd/kubeadm/app/apis/kubeadm/v1beta2

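© Copyright belongs to the author. This is an original work by 51CTO blog author 疯狂小二丶; if you repost it, please credit the source, otherwise legal action may be taken.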

https://blog.51cto.com/13740724/2412370