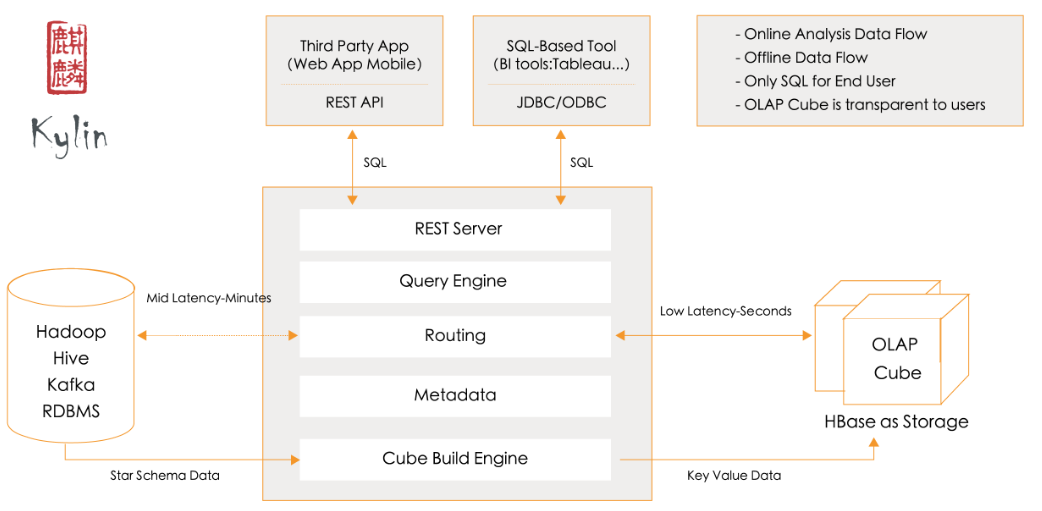

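Apache Kylin is an open-source distributed analytics engine that provides a SQL query interface and multidimensional analysis (OLAP) on top of Hadoop/Spark to support extremely large datasets. It was originally developed by eBay Inc. and contributed to the open-source community. It can query huge Hive tables in sub-second time.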

Setting up a pseudo-distributed environment

hadoop-2.7.7 installation

http://hadoop.apache.org/docs/stable/hadoop-project-dist/hadoop-common/SingleCluster.html

hive-2.1.1

https://my.oschina.net/peakfang/blog/2236971

hbase-1.2.6

http://hbase.apache.org/book.html#_introduction

kylin-2.5.0

http://kylin.apache.org/docs/install/index.html

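If the Spark in use was compiled from source for Hive on Spark without the Hive jars, or is version 1.6.3, the SPARK_HOME directory has no jars directory, and starting Kylin reports the following error: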

find: ‘/usr/local/spark-1.6.3/jars’: No such file or directory
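Before editing the Kylin script, it can help to confirm which dependency directory the Spark installation actually has. The following is a minimal sketch; `spark_dep_dir` is a hypothetical helper, not part of Kylin or Spark, and the demo runs against a throwaway directory rather than a real SPARK_HOME.

```shell
#!/bin/bash
# Hypothetical helper (not part of Kylin): print the dependency directory
# that exists under a given Spark home, preferring jars over lib.
spark_dep_dir() {
  local spark_home="$1"
  if [ -d "${spark_home}/jars" ]; then
    echo "${spark_home}/jars"
  elif [ -d "${spark_home}/lib" ]; then
    echo "${spark_home}/lib"
  else
    echo "no jars or lib directory under ${spark_home}" >&2
    return 1
  fi
}

# Demo against a throwaway directory laid out with a lib directory only.
demo_home=$(mktemp -d)
mkdir "${demo_home}/lib"
spark_dep_dir "${demo_home}"
```

On a real host the call would be `spark_dep_dir "${SPARK_HOME}"`; if it prints a path ending in lib, the jars-to-lib change described here applies.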

[root@node222 local]# vi kylin-2.5.0/bin/find-spark-dependency.sh

# On line 38, change jars to lib

[root@node222 local]# kylin-2.5.0/bin/kylin.sh start

Retrieving hadoop conf dir...

KYLIN_HOME is set to /usr/local/kylin-2.5.0

Retrieving hive dependency...

Retrieving hbase dependency...

Retrieving hadoop conf dir...

Retrieving kafka dependency...

Retrieving Spark dependency...

Start to check whether we need to migrate acl tables

Retrieving hadoop conf dir...

KYLIN_HOME is set to /usr/local/kylin-2.5.0

Retrieving hive dependency...

Retrieving hbase dependency...

Retrieving hadoop conf dir...

Retrieving kafka dependency...

Retrieving Spark dependency...

......

A new Kylin instance is started by root. To stop it, run 'kylin.sh stop'

Check the log at /usr/local/kylin-2.5.0/logs/kylin.log

Web UI is at http://<hostname>:7070/kylin

Quick start

http://kylin.apache.org/docs/tutorial/kylin_sample.html

[root@node222 local]# kylin-2.5.0/bin/sample.sh

Retrieving hadoop conf dir...

Loading sample data into HDFS tmp path: /tmp/kylin/sample_cube/data

......

Loading data to table default.kylin_sales

OK

Time taken: 1.257 seconds

Loading data to table default.kylin_account

OK

Time taken: 0.455 seconds

Loading data to table default.kylin_country

OK

Time taken: 0.385 seconds

Loading data to table default.kylin_cal_dt

OK

Time taken: 0.579 seconds

Loading data to table default.kylin_category_groupings

OK

Time taken: 0.502 seconds

......

Sample cube is created successfully in project 'learn_kylin'.

Restart Kylin Server or click Web UI => System Tab => Reload Metadata to take effect

When building kylin_sales_cube from the web UI, if the following error appears, the historyserver service needs to be started:

Call From node222/192.168.0.222 to localhost:10020 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

java.io.IOException: java.net.ConnectException: Call From node222/192.168.0.222 to localhost:10020 failed on connection exception: java.net.ConnectException: Connection refused; For more details see: http://wiki.apache.org/hadoop/ConnectionRefused

at org.apache.hadoop.mapred.ClientServiceDelegate.invoke(ClientServiceDelegate.java:334)

at org.apache.hadoop.mapred.ClientServiceDelegate.getJobCounters(ClientServiceDelegate.java:371)
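Port 10020 in the error is the default address of the MapReduce JobHistory server, so a refused connection usually means that process is not running. A minimal sketch of a check is shown here; `has_history_server` is a hypothetical helper that reads a `jps`-style process list on stdin, and the demo uses canned input instead of real `jps` output.

```shell
#!/bin/bash
# Hypothetical helper: succeed if a jps-style process list on stdin
# contains a JobHistoryServer entry.
has_history_server() {
  grep -qw 'JobHistoryServer'
}

# Demo with canned input standing in for real `jps` output.
if printf '2101 NameNode\n2450 JobHistoryServer\n' | has_history_server; then
  echo "historyserver is running"
else
  echo "historyserver is not running"
fi
```

On the cluster node the check would be `jps | has_history_server`.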

# Start the historyserver service; the build then succeeds

[root@node222 ~]# /usr/local/hadoop-2.7.7/sbin/mr-jobhistory-daemon.sh start historyserver

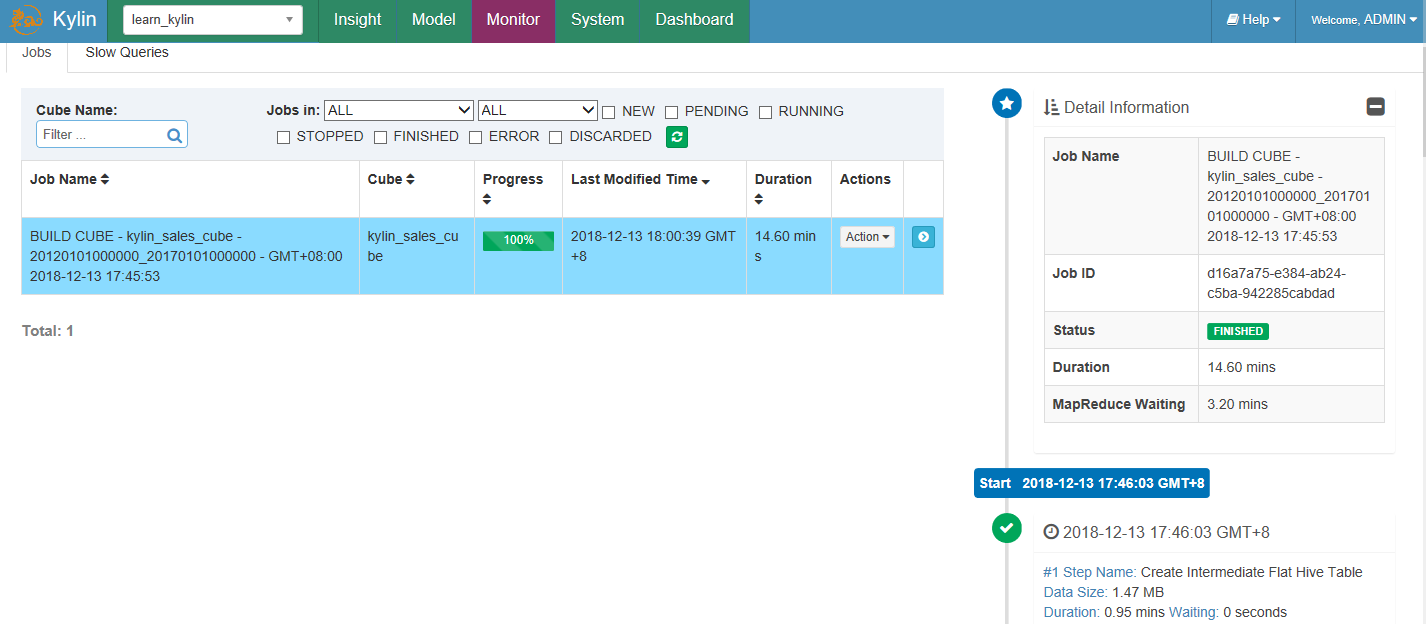

starting historyserver, logging to /usr/local/hadoop-2.7.7/logs/mapred-root-historyserver-node222.out

The build progress can be monitored on the Monitor page

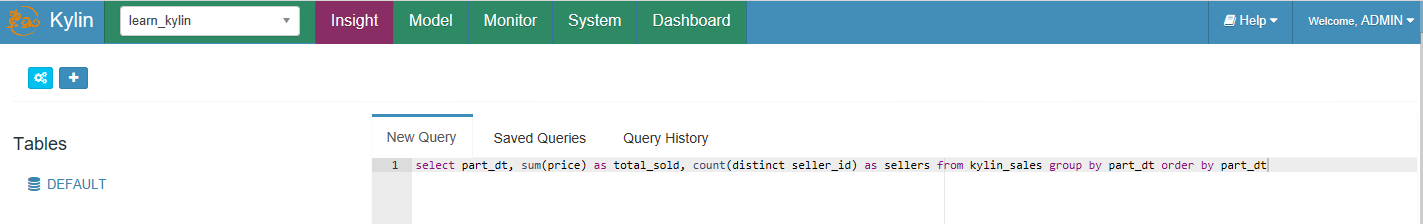

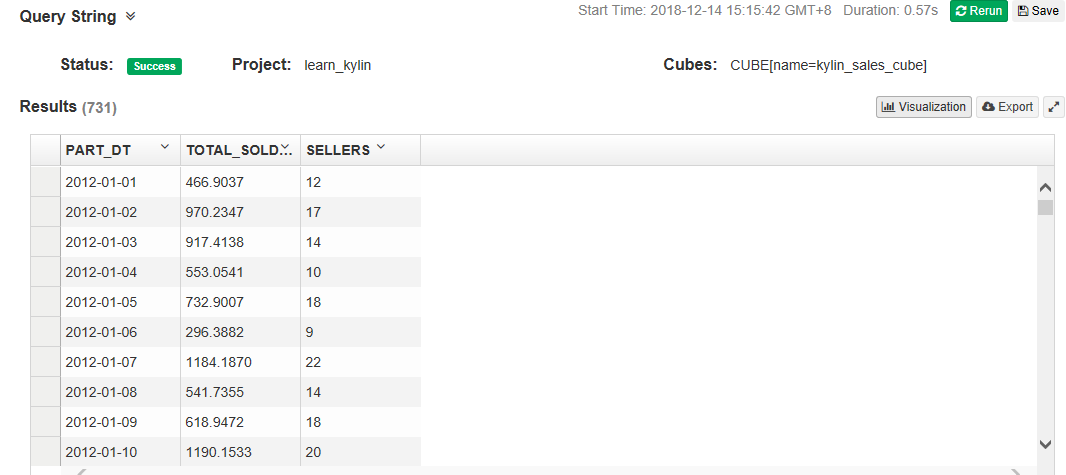

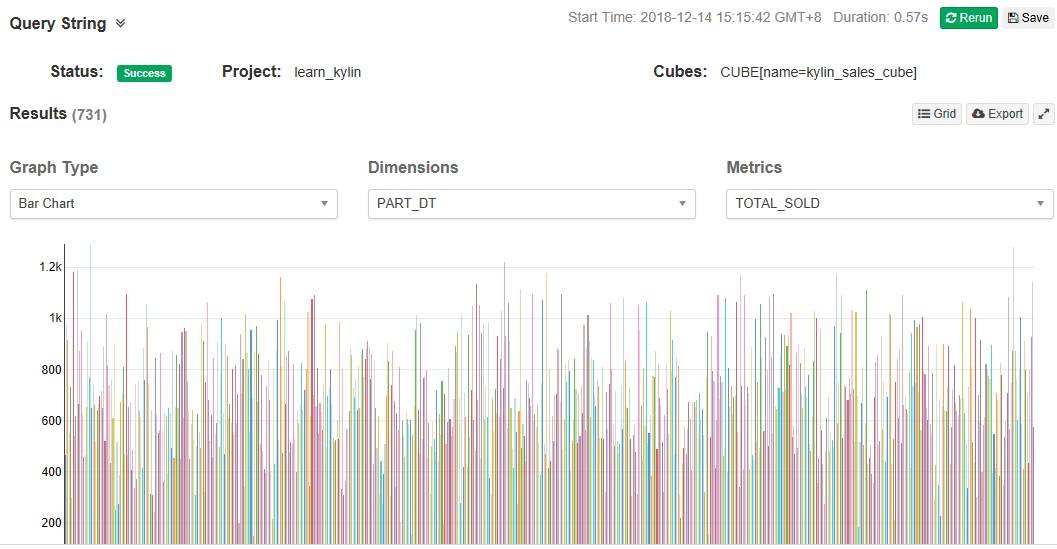

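Run SQL from the Insight page

The queries Kylin can execute must match the join types defined in the model: if the model defines only inner joins, Insight can run only inner joins, not left joins.

Results can be displayed with simple visualizations.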
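To illustrate the join-type constraint, the sketch below builds a request body for a query that uses an inner join. It assumes a model that joins kylin_sales to kylin_cal_dt with an inner join on part_dt = cal_dt; `build_query_payload` is a hypothetical helper, and the SQL text is an illustrative example rather than one taken from this walkthrough.

```shell
#!/bin/bash
# Hypothetical helper: build the JSON body for a Kylin query request.
# This sketch does no escaping, so the SQL must not contain double quotes
# or backslashes.
build_query_payload() {
  local sql="$1" project="$2"
  printf '{"sql":"%s","project":"%s"}\n' "${sql}" "${project}"
}

# Assumed example: an inner join between two of the sample tables.
SQL="select cal_dt, sum(price) from kylin_sales inner join kylin_cal_dt on part_dt = cal_dt group by cal_dt"
build_query_payload "${SQL}" "learn_kylin"
```

The same SQL can be pasted into Insight. The payload could also be posted to the REST query endpoint at http://<hostname>:7070/kylin/api/query with curl and valid credentials. Replacing inner join with left join should be rejected if the model defines only inner joins.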

Setting up the system cubes

http://kylin.apache.org/docs/tutorial/setup_systemcube.html

Create the configuration file SCSinkTools.json in the KYLIN_HOME directory

[

[

"org.apache.kylin.tool.metrics.systemcube.util.HiveSinkTool",

{

"storage_type": 2,

"cube_desc_override_properties": [

"java.util.HashMap",

{

"kylin.cube.algorithm": "INMEM",

"kylin.cube.max-building-segments": "1"

}

]

}

]

]

Generate the metadata

[root@node222 kylin-2.5.0]# ./bin/kylin.sh org.apache.kylin.tool.metrics.systemcube.SCCreator -inputConfig SCSinkTools.json -output system_cube

Retrieving hadoop conf dir...

KYLIN_HOME is set to /usr/local/kylin-2.5.0

Retrieving hive dependency...

Retrieving hbase dependency...

Retrieving hadoop conf dir...

Retrieving kafka dependency...

Retrieving Spark dependency...

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/kylin-2.5.0/tool/kylin-tool-2.5.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.7.7/share/hadoop/common/lib/slf4j-log4j12-1.7.10.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

2018-12-14 10:56:36,096 INFO [main] common.KylinConfig:332 : Loading kylin-defaults.properties from file:/usr/local/kylin-2.5.0/tool/kylin-tool-2.5.0.jar!/kylin-defaults.properties

2018-12-14 10:56:36,136 DEBUG [main] common.KylinConfig:291 : KYLIN_CONF property was not set, will seek KYLIN_HOME env variable

2018-12-14 10:56:36,144 INFO [main] common.KylinConfig:99 : Initialized a new KylinConfig from getInstanceFromEnv : 1987083830

Running org.apache.kylin.tool.metrics.systemcube.SCCreator -inputConfig SCSinkTools.json -output system_cube

2018-12-14 10:56:36,931 INFO [main] measure.MeasureTypeFactory:116 : Checking custom measure types from kylin config

2018-12-14 10:56:36,934 INFO [main] measure.MeasureTypeFactory:145 : registering COUNT_DISTINCT(hllc), class org.apache.kylin.measure.hllc.HLLCMeasureType$Factory

2018-12-14 10:56:36,985 INFO [main] measure.MeasureTypeFactory:145 : registering COUNT_DISTINCT(bitmap), class org.apache.kylin.measure.bitmap.BitmapMeasureType$Factory

2018-12-14 10:56:37,001 INFO [main] measure.MeasureTypeFactory:145 : registering TOP_N(topn), class org.apache.kylin.measure.topn.TopNMeasureType$Factory

2018-12-14 10:56:37,006 INFO [main] measure.MeasureTypeFactory:145 : registering RAW(raw), class org.apache.kylin.measure.raw.RawMeasureType$Factory

2018-12-14 10:56:37,009 INFO [main] measure.MeasureTypeFactory:145 : registering EXTENDED_COLUMN(extendedcolumn), class org.apache.kylin.measure.extendedcolumn.ExtendedColumnMeasureType$Factory

2018-12-14 10:56:37,011 INFO [main] measure.MeasureTypeFactory:145 : registering PERCENTILE_APPROX(percentile), class org.apache.kylin.measure.percentile.PercentileMeasureType$Factory

2018-12-14 10:56:37,014 INFO [main] measure.MeasureTypeFactory:145 : registering COUNT_DISTINCT(dim_dc), class org.apache.kylin.measure.dim.DimCountDistinctMeasureType$Factory

[root@node222 kylin-2.5.0]# ll system_cube/

total 20

-rw-r--r-- 1 root root 3282 Dec 14 10:56 create_hive_tables_for_system_cubes.sql

drwxr-xr-x 2 root root 4096 Dec 14 10:56 cube

drwxr-xr-x 2 root root 4096 Dec 14 10:56 cube_desc

drwxr-xr-x 2 root root 4096 Dec 14 10:56 model_desc

drwxr-xr-x 2 root root 30 Dec 14 10:56 project

drwxr-xr-x 2 root root 4096 Dec 14 10:56 table
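A quick sanity check of the generated directory can catch an incomplete run before moving on. This is a minimal sketch based only on the listing above; `check_system_cube_dir` is a hypothetical helper, and the demo builds a throwaway directory with the same layout.

```shell
#!/bin/bash
# Hypothetical helper: verify that an SCCreator output directory contains
# the SQL file and the five subdirectories shown in the listing.
check_system_cube_dir() {
  local dir="$1" missing=0 sub
  if [ ! -f "${dir}/create_hive_tables_for_system_cubes.sql" ]; then
    echo "missing file: create_hive_tables_for_system_cubes.sql"
    missing=1
  fi
  for sub in cube cube_desc model_desc project table; do
    if [ ! -d "${dir}/${sub}" ]; then
      echo "missing directory: ${sub}"
      missing=1
    fi
  done
  return "${missing}"
}

# Demo against a throwaway directory that mimics the listing.
demo_dir=$(mktemp -d)
mkdir "${demo_dir}/cube" "${demo_dir}/cube_desc" "${demo_dir}/model_desc" \
      "${demo_dir}/project" "${demo_dir}/table"
touch "${demo_dir}/create_hive_tables_for_system_cubes.sql"
check_system_cube_dir "${demo_dir}" && echo "layout looks complete"
```

In the real setup the call would be `check_system_cube_dir system_cube` from the Kylin home directory.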

Create the Hive tables

[root@node222 kylin-2.5.0]# hive -f system_cube/create_hive_tables_for_system_cubes.sql

Logging initialized using configuration in jar:file:/usr/local/hive-2.1.1/lib/hive-common-2.1.1.jar!/hive-log4j2.properties Async: true

OK

Time taken: 2.099 seconds

OK

Time taken: 0.162 seconds

OK

Time taken: 0.741 seconds

OK

Time taken: 0.028 seconds

OK

Time taken: 0.169 seconds

OK

Time taken: 0.027 seconds

OK

Time taken: 0.134 seconds

OK

Time taken: 0.033 seconds

OK

Time taken: 0.15 seconds

OK

Time taken: 0.026 seconds

OK

Time taken: 0.116 seconds

hive> use kylin;

OK

Time taken: 0.053 seconds

hive> show tables;

OK

hive_metrics_job_exception_qa

hive_metrics_job_qa

hive_metrics_query_cube_qa

hive_metrics_query_qa

hive_metrics_query_rpc_qa

Time taken: 0.11 seconds, Fetched: 5 row(s)
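The same check can be scripted instead of read by eye. The sketch below is an illustration only: `check_metric_tables` is a hypothetical helper that reads a table listing on stdin and reports any of the five tables shown above that is absent. The demo feeds it canned text.

```shell
#!/bin/bash
# Hypothetical helper: read a table listing (one name per line) on stdin
# and report any of the five metrics tables that is not present.
check_metric_tables() {
  local listing missing=0 t
  listing=$(cat)
  for t in hive_metrics_job_exception_qa hive_metrics_job_qa \
           hive_metrics_query_cube_qa hive_metrics_query_qa \
           hive_metrics_query_rpc_qa; do
    if ! printf '%s\n' "${listing}" | grep -qx "${t}"; then
      echo "missing table: ${t}"
      missing=1
    fi
  done
  return "${missing}"
}

# Demo with canned input standing in for real Hive output.
printf '%s\n' hive_metrics_job_exception_qa hive_metrics_job_qa \
  hive_metrics_query_cube_qa hive_metrics_query_qa hive_metrics_query_rpc_qa \
  | check_metric_tables && echo "all five tables present"
```

Against a live Hive installation the input could come from `hive -e 'use kylin; show tables;'`.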

Update the metadata

[root@node222 kylin-2.5.0]# ./bin/metastore.sh restore system_cube

Starting restoring system_cube

Retrieving hadoop conf dir...

KYLIN_HOME is set to /usr/local/kylin-2.5.0

......

2018-12-14 11:02:40,126 INFO [main-EventThread] zookeeper.ClientCnxn:512 : EventThread shut down

Refresh the metadata

Reload the metadata on the System page

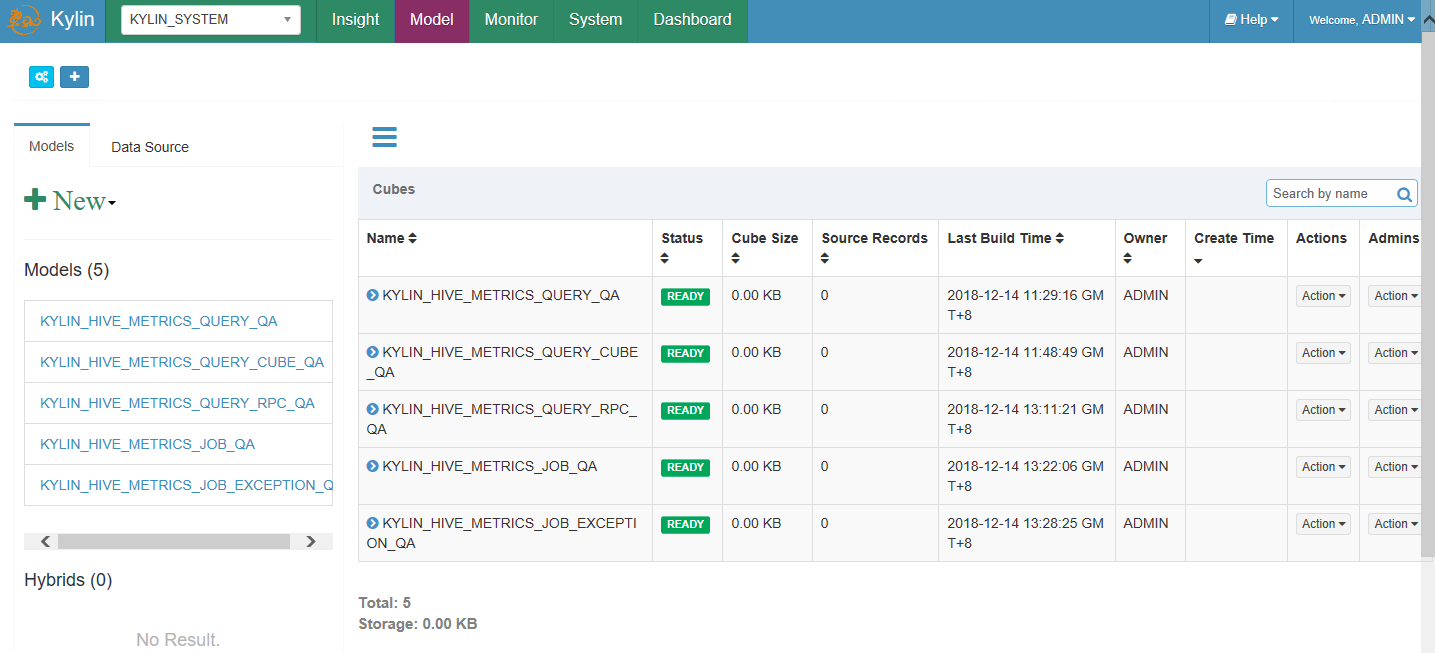

Build the system cube

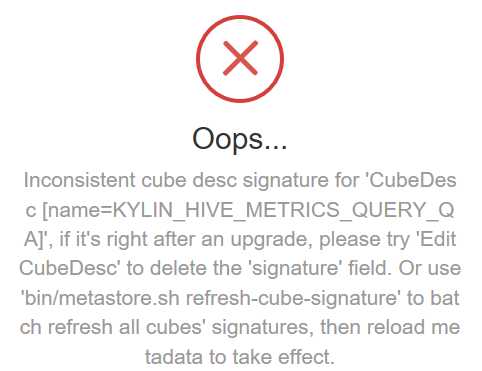

Building directly, whether from the web UI or from the script, fails with an error

Check /usr/local/kylin-2.5.0/logs/system_cube_KYLIN_HIVE_METRICS_QUERY_QA_1544756400000.log

2018-12-14 11:17:51,783 ERROR [main] job.CubeBuildingCLI:134 : error start cube building

java.lang.RuntimeException: error execute org.apache.kylin.tool.job.CubeBuildingCLI. Root cause: Inconsistent cube desc signature for CubeDesc [name=KYLIN_HIVE_METRICS_QUERY_QA]

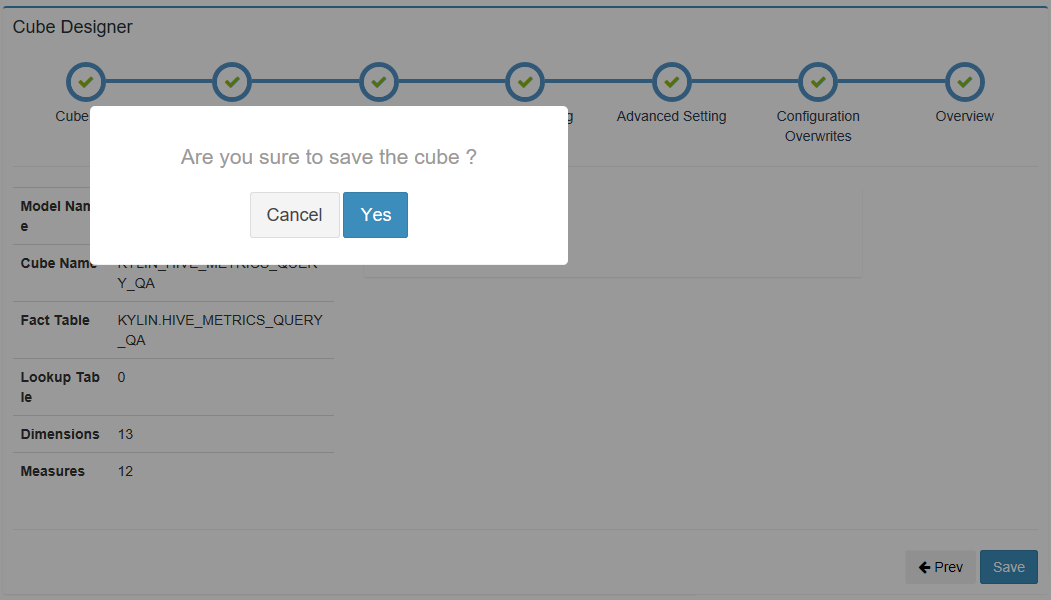

Re-save each cube in the web UI, then build again.

Create the build script

#!/bin/bash

# Build one system cube segment whose end time is aligned to INTERVAL.

dir=$(dirname "${0}")

export KYLIN_HOME="${dir}/../"

# Arguments: cube name, segment interval (ms), delay (ms)

CUBE=$1

INTERVAL=$2

DELAY=$3

CURRENT_TIME_IN_SECOND=$(date +%s)

CURRENT_TIME=$((CURRENT_TIME_IN_SECOND * 1000))

END_TIME=$((CURRENT_TIME - DELAY))

# Round END_TIME down to a multiple of INTERVAL

END=$((END_TIME - END_TIME % INTERVAL))

ID="$END"

echo "building for ${CUBE}_${ID}" >> "${KYLIN_HOME}/logs/build_trace.log"

sh "${KYLIN_HOME}/bin/kylin.sh" org.apache.kylin.tool.job.CubeBuildingCLI --cube "${CUBE}" --endTime "${END}" > "${KYLIN_HOME}/logs/system_cube_${CUBE}_${END}.log" 2>&1 &

Test build:

[root@node222 kylin-2.5.0]# ./bin/system_cube_build.sh KYLIN_HIVE_METRICS_QUERY_QA 3600000 1200000
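The two numeric arguments are the segment interval and the delay, both in milliseconds (here one hour and 20 minutes). The script subtracts the delay from the current time and rounds the result down to a multiple of the interval. The sketch below isolates that arithmetic; `aligned_end` is a hypothetical helper that takes the current time as an argument so the result is repeatable.

```shell
#!/bin/bash
# Hypothetical helper mirroring the END computation in the build script,
# with the current time passed in (milliseconds) instead of read from date.
aligned_end() {
  local now_ms="$1" interval="$2" delay="$3"
  local end_time=$((now_ms - delay))
  echo $((end_time - end_time % interval))
}

# 1544759000000 ms minus a 1200000 ms delay is 1544757800000,
# which rounds down to the hour boundary 1544756400000.
aligned_end 1544759000000 3600000 1200000
```

Because END is always a multiple of the interval, repeated runs within the same hour target the same segment end time.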

Configure a cron job to run it automatically

[root@node222 kylin-2.5.0]# cat conf/schedule_system_cube_build.cron

0 */2 * * * sh ${KYLIN_HOME}/bin/system_cube_build.sh KYLIN_HIVE_METRICS_QUERY_QA 3600000 1200000

20 */2 * * * sh ${KYLIN_HOME}/bin/system_cube_build.sh KYLIN_HIVE_METRICS_QUERY_CUBE_QA 3600000 1200000

40 */4 * * * sh ${KYLIN_HOME}/bin/system_cube_build.sh KYLIN_HIVE_METRICS_QUERY_RPC_QA 3600000 1200000

30 */4 * * * sh ${KYLIN_HOME}/bin/system_cube_build.sh KYLIN_HIVE_METRICS_JOB_QA 3600000 1200000

50 */12 * * * sh ${KYLIN_HOME}/bin/system_cube_build.sh KYLIN_HIVE_METRICS_JOB_EXCEPTION_QA 3600000 12000
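cron typically runs jobs with a minimal environment, so `${KYLIN_HOME}` in these entries may be empty unless it is defined for cron. Common cron implementations accept `NAME=value` lines at the top of a crontab file. The sketch below prepends such a line, assuming the install path /usr/local/kylin-2.5.0 used throughout this post. `with_kylin_home` is a hypothetical helper, and the demo works on temporary files.

```shell
#!/bin/bash
# Hypothetical helper: prepend a KYLIN_HOME assignment to an existing cron
# file and write the result to a new file, leaving the original untouched.
with_kylin_home() {
  local kylin_home="$1" cron_in="$2" cron_out="$3"
  {
    echo "KYLIN_HOME=${kylin_home}"
    cat "${cron_in}"
  } > "${cron_out}"
}

# Demo on temporary files with one of the entries from the cron file.
demo_in=$(mktemp)
demo_out=$(mktemp)
echo '0 */2 * * * sh ${KYLIN_HOME}/bin/system_cube_build.sh KYLIN_HIVE_METRICS_QUERY_QA 3600000 1200000' > "${demo_in}"
with_kylin_home /usr/local/kylin-2.5.0 "${demo_in}" "${demo_out}"
cat "${demo_out}"
```

Whether this is needed depends on the cron implementation and on how the environment is set up on the host, so treat it as an option to check rather than a required step.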

[root@node222 kylin-2.5.0]# crontab conf/schedule_system_cube_build.cron

[root@node222 kylin-2.5.0]# crontab -l

After the build completes

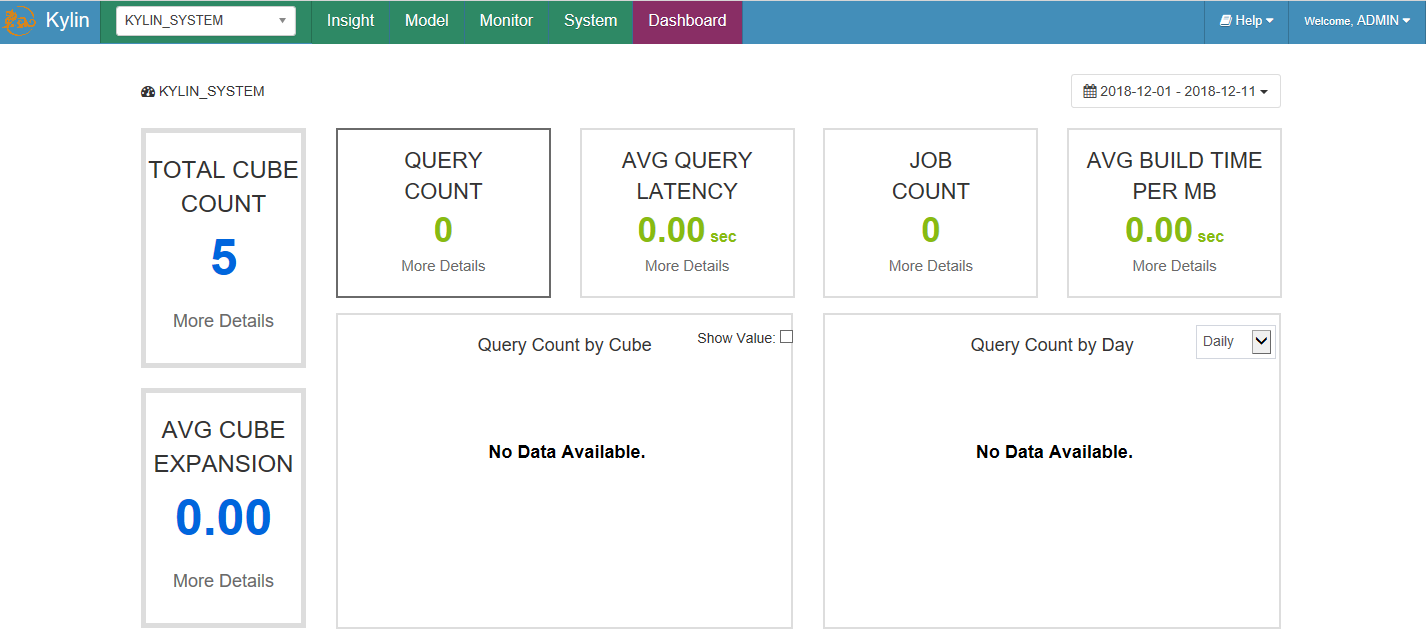

Enable the dashboard

http://kylin.apache.org/docs/tutorial/use_dashboard.html

Throughout the process, the execution logs can be viewed in KYLIN_HOME/logs/kylin.log
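When the log is long, filtering for ERROR entries is often the fastest way to find a failure. A minimal sketch follows, assuming the log line format seen in the excerpts in this post (timestamp, level, thread, source). `log_errors` is a hypothetical helper, and the demo uses a temporary file with two lines copied from those excerpts.

```shell
#!/bin/bash
# Hypothetical helper: print the last N ERROR lines of a log file
# (N defaults to 20).
log_errors() {
  local logfile="$1" count="${2:-20}"
  grep ' ERROR ' "${logfile}" | tail -n "${count}"
}

# Demo on a temporary file holding two log lines from this post.
demo_log=$(mktemp)
cat > "${demo_log}" <<'EOF'
2018-12-14 10:56:36,144 INFO [main] common.KylinConfig:99 : Initialized a new KylinConfig from getInstanceFromEnv : 1987083830
2018-12-14 11:17:51,783 ERROR [main] job.CubeBuildingCLI:134 : error start cube building
EOF
log_errors "${demo_log}" 5
```

On a real installation the call would be `log_errors "${KYLIN_HOME}/logs/kylin.log"`.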

If the server was shut down, restarting the Kylin service reports the following error

[root@node222 ~]# kylin.sh start

Retrieving hadoop conf dir...

......

Exception in thread "main" java.lang.IllegalArgumentException: Failed to find metadata store by url: kylin_metadata@hbase

at org.apache.kylin.common.persistence.ResourceStore.createResourceStore(ResourceStore.java:98)

at org.apache.kylin.common.persistence.ResourceStore.getStore(ResourceStore.java:110)

at org.apache.kylin.rest.service.AclTableMigrationTool.checkIfNeedMigrate(AclTableMigrationTool.java:98)

at org.apache.kylin.tool.AclTableMigrationCLI.main(AclTableMigrationCLI.java:41)

Caused by: java.lang.reflect.InvocationTargetException

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at org.apache.kylin.common.persistence.ResourceStore.createResourceStore(ResourceStore.java:92)

... 3 more

At this point, entering Hive with the hive command shows the following error

[root@node222 ~]# hive

Exception in thread "main" java.lang.RuntimeException: org.apache.hadoop.ipc.RemoteException(org.apache.hadoop.hdfs.server.namenode.SafeModeException): Cannot create directory /tmp/hive/root/c5870040-12ed-47ab-bbc2-84d6ff3f2d24. Name node is in safe mode.

The reported blocks 709 needs additional 410 blocks to reach the threshold 0.9990 of total blocks 1120.

The number of live datanodes 1 has reached the minimum number 0. Safe mode will be turned off automatically once the thresholds have been reached.

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.checkNameNodeSafeMode(FSNamesystem.java:1335)

at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.mkdirs(FSNamesystem.java:3874)

at org.apache.hadoop.hdfs.server.namenode.NameNodeRpcServer.mkdirs(NameNodeRpcServer.java:984)

at org.apache.hadoop.hdfs.protocolPB.ClientNamenodeProtocolServerSideTranslatorPB.mkdirs(ClientNamenodeProtocolServerSideTranslatorPB.java:634)

at org.apache.hadoop.hdfs.protocol.proto.ClientNamenodeProtocolProtos$ClientNamenodeProtocol$2.callBlockingMethod(ClientNamenodeProtocolProtos.java)

at org.apache.hadoop.ipc.ProtobufRpcEngine$Server$ProtoBufRpcInvoker.call(ProtobufRpcEngine.java:616)

at org.apache.hadoop.ipc.RPC$Server.call(RPC.java:982)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2217)

at org.apache.hadoop.ipc.Server$Handler$1.run(Server.java:2213)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1762)

at org.apache.hadoop.ipc.Server$Handler.run(Server.java:2211)

Leave safe mode

[root@node222 ~]# hdfs dfsadmin -safemode leave

Safe mode is OFF
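The safe mode state can also be checked before and after forcing it off. The sketch below parses status text of the form shown above; `safemode_state` is a hypothetical helper, and the demo feeds it a literal string rather than real command output.

```shell
#!/bin/bash
# Hypothetical helper: read status text such as "Safe mode is ON" on stdin
# and print ON, OFF, or UNKNOWN.
safemode_state() {
  local text
  text=$(cat)
  case "${text}" in
    *"Safe mode is ON"*)  echo "ON" ;;
    *"Safe mode is OFF"*) echo "OFF" ;;
    *)                    echo "UNKNOWN" ;;
  esac
}

# Demo with a literal status line.
echo "Safe mode is OFF" | safemode_state
```

On the cluster the input would come from `hdfs dfsadmin -safemode get`. Forcing safe mode off does not bring back the blocks reported as missing in the error above, so it is worth checking why they were not reported.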

Restart again and Kylin starts normally

[root@node222 ~]# kylin.sh start

A new Kylin instance is started by root. To stop it, run 'kylin.sh stop'

Check the log at /usr/local/kylin-2.5.0/logs/kylin.log

Web UI is at http://<hostname>:7070/kylin

jar cv0f spark-libs.jar -C $KYLIN_HOME/spark/jars/ .

hadoop fs -mkdir -p /kylin/spark/

hadoop fs -put spark-libs.jar /kylin/spark/

Source: oschina

Link: https://my.oschina.net/u/3967174/blog/2988545