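Importing the Hadoop source code into Eclipse is no easy task: errors everywhere, red markers everywhere. This walkthrough lets you enjoy the satisfaction of clearing a workspace that is a sea of red.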

I. Preparation

1. Required software

Hadoop source: I chose hadoop-2.7.1-src.tar.gz.

Download: https://archive.apache.org/dist/hadoop/common/

JDK: JDK 1.7 is recommended for Hadoop 2.7. I chose jdk-7u80-windows-x64.exe.

Eclipse: Oxygen.2 Release (4.7.2)

Maven: apache-maven-3.3.1.zip

Download: http://mirrors.shu.edu.cn/apache/maven/

Archived releases: https://archive.apache.org/dist/maven/binaries/

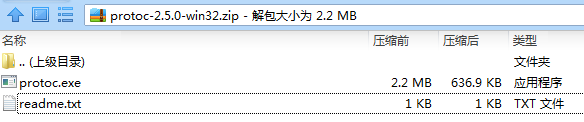

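libprotoc: protoc-2.5.0-win32.zip

Releases page: https://github.com/protocolbuffers/protobuf/releases

Version 2.5.0: https://github.com/protocolbuffers/protobuf/releases?after=v3.0.0-alpha-4.1

2. Installation

1) JDK

I installed jdk-7u80-windows-x64.exe; the installation steps are omitted here.

2) Eclipse

Just unzip it and it is ready to use.

3) Maven

For installation, see "Maven Introduction and Installation".

It is best to point Maven at a mirror hosted in China as its remote repository, which speeds up downloads. One such mirror is given below.

Aliyun (this entry goes inside the <mirrors> element of Maven's settings.xml):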

<mirror>
  <id>nexus-aliyun</id>
  <mirrorOf>*</mirrorOf>
  <name>Nexus aliyun</name>
  <url>http://maven.aliyun.com/nexus/content/groups/public</url>
</mirror>

4) Installing libprotoc

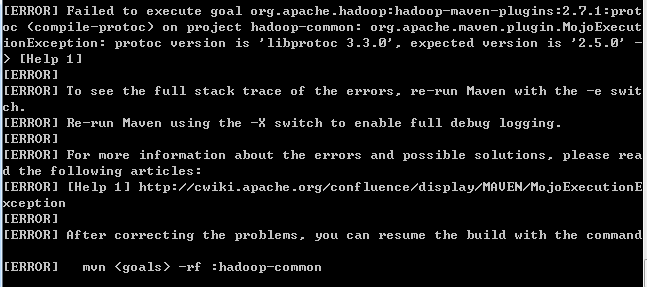

现在官方有很多版本,但是Hadoop2.7.1版本只能使用protoc2.5.0版本。

这个版本比较简单,解压之后只有两个文件,一个是执行文件:protoc.exe,一个是说明文件:readme.txt。如下图:

这里有两种方式添加环境变量:

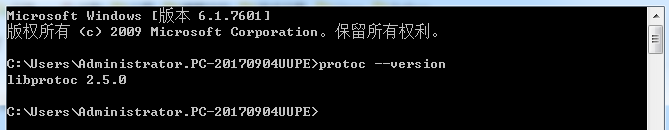

第一:将文件解压到自己指定的目录,然后将路径添加到环境变量Path中。使用以下命令测试安装是否成功:

protoc --version如下图表示安装成功:

第二:将可执行文件protoc.exe直接放入Maven的bin目录中即可。

此可执行文件没有多余的依赖,只要系统能够找到此可执行文件执行即可。
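The version check above can also be scripted, which is handy when several protoc versions are lying around. The following is a minimal Python sketch, not part of the original setup: the function names are my own, and it assumes `protoc --version` prints a line of the form `libprotoc 2.5.0`.

```python
import re
import shutil
import subprocess

REQUIRED = (2, 5, 0)  # the version this article says Hadoop 2.7.1 needs


def parse_protoc_version(output):
    """Extract (major, minor, patch) from text such as 'libprotoc 2.5.0'."""
    match = re.search(r"libprotoc\s+(\d+)\.(\d+)(?:\.(\d+))?", output)
    if match is None:
        raise ValueError("unrecognised protoc output: %r" % output)
    major, minor, patch = match.groups()
    return (int(major), int(minor), int(patch or 0))


def check_protoc(executable="protoc"):
    """Return True when the protoc found on the path reports REQUIRED."""
    path = shutil.which(executable)
    if path is None:
        return False  # not reachable through Path (or Maven's bin directory)
    result = subprocess.run([path, "--version"], capture_output=True, text=True)
    return parse_protoc_version(result.stdout) == REQUIRED


# Example use (hypothetical):
#   print("protoc 2.5.0 available:", check_protoc())
```

`shutil.which` searches the same Path the cmd window uses, so a `False` result usually means the environment variable has not been picked up yet or a different protoc version comes first on the path.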

二、导入

安装好上面的软件,就可以开始进行源码导入的步骤了!

1、解压

将Hadoop的源码解压到自己规划的目录,最好是根目录。

2、执行Maven命令

进入Hadoop源码中的hadoop-maven-plugins文件夹中,打开cmd命令窗口,执行如下命令:

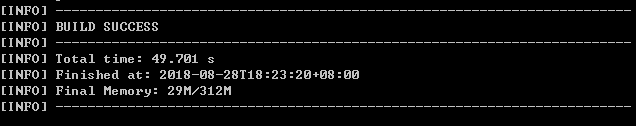

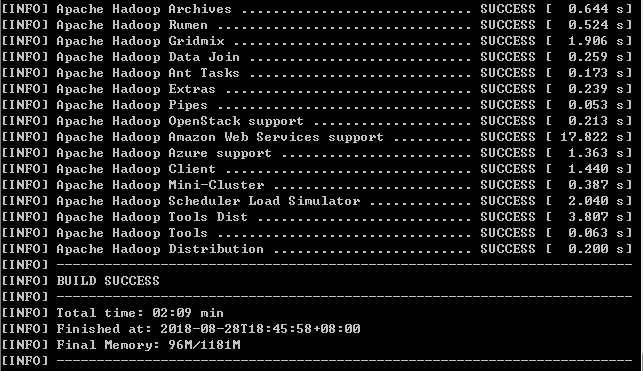

mvn install这个过程中,会下载很多东西,会因为某些东西下载不成功而执行失败,重复执行此命令,看到如下界面,证明这个过程执行成功。

如下界面的错误就是为什么必须使用libprotoc2.5.0的原因了,本人使用3.3.0版本试过,不行,而且指明需要2.5.0版本。
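Since the advice is simply to repeat the command until the downloads go through, the loop can be automated. Here is a minimal Python sketch of that idea; the function name, the attempt limit, and the example path are my own assumptions, not something the Hadoop build provides.

```python
import subprocess


def run_until_success(command, cwd=None, max_attempts=5, runner=subprocess.call):
    """Run `command` repeatedly until it exits with 0 or the attempts run out.

    Returns the number of attempts used; raises RuntimeError if all fail.
    `runner` is injectable so the loop can be exercised without Maven.
    """
    for attempt in range(1, max_attempts + 1):
        exit_code = runner(command, cwd=cwd)
        if exit_code == 0:
            return attempt
        print("attempt %d failed with exit code %d" % (attempt, exit_code))
    raise RuntimeError("command still failing after %d attempts" % max_attempts)


# Example use (hypothetical path; shutil.which("mvn") resolves the Maven
# launcher script on Windows so it can be started without a shell):
#   import shutil
#   run_until_success([shutil.which("mvn"), "install"],
#                     cwd=r"F:\hadoop-2.7.1-src\hadoop-maven-plugins")
```

A fixed attempt limit keeps the loop from spinning forever on a failure that is not a download problem, such as the protoc version mismatch described above.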

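3. Generate the Eclipse project files

In the root directory of the Hadoop source tree, open a cmd window and run:

mvn eclipse:eclipse -DskipTests

The screen below indicates success. If it fails, simply run the command again.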

4、创建workspace并导入

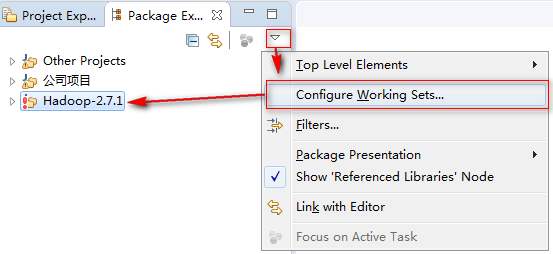

为了方便管理,在Eclipse中创建一个目录用于存放Hadoop相关的源码。创建步骤如下图:

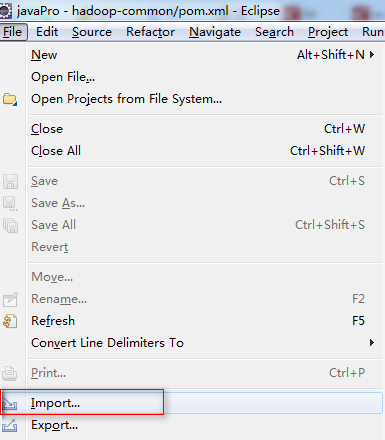

然后点击File->Import,如下图:

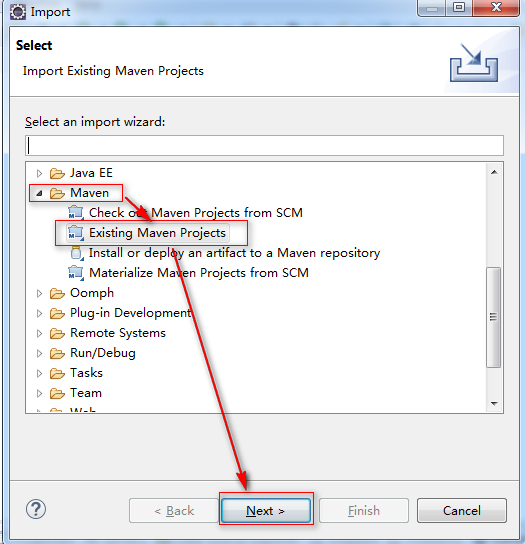

弹出对话框,在Maven中查找Existing Maven Projects,点击next,如下图:

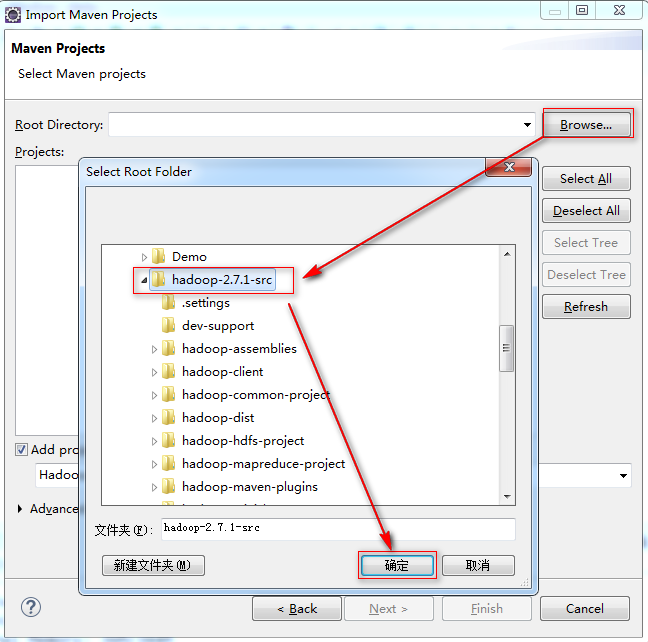

出现如下图的界面,按图中操作即可,注意选择路径的时候,选择到源码的根路径,不然如果有其他项目,导入选择对勾的时候会很麻烦。

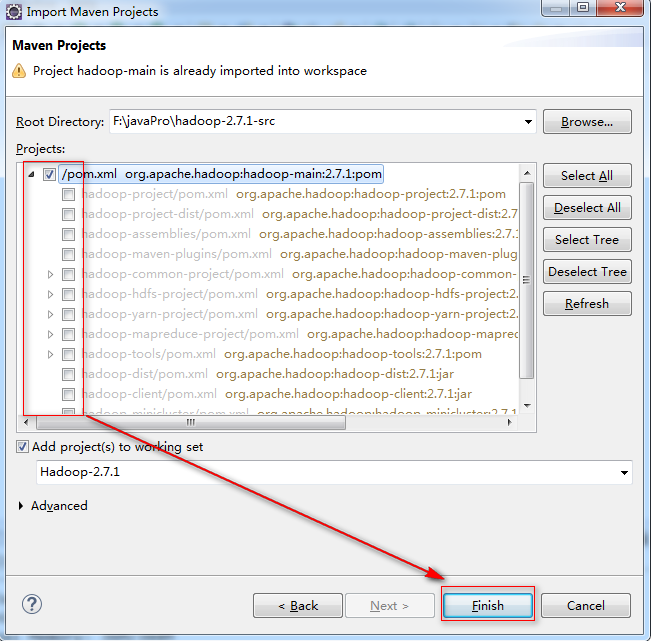

上面说的麻烦就是下图,如果你选到Hadoop源码的根目录,那么直接点击select All即可点击完成。

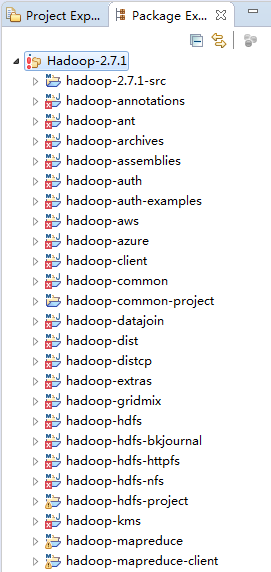

导入之后,本人的界面是这样的,如下图:

万里江山一片红,看到就头疼啊,但是观看源码是没问题的。

5、项目顺序

由上述生成导入Eclipse中目录的命令中可以看出,Hadoop的项目排序应该是如下这样的:

[INFO] Apache Hadoop Main

[INFO] Apache Hadoop Project POM

[INFO] Apache Hadoop Annotations

[INFO] Apache Hadoop Project Dist POM

[INFO] Apache Hadoop Assemblies

[INFO] Apache Hadoop Maven Plugins

[INFO] Apache Hadoop MiniKDC

[INFO] Apache Hadoop Auth

[INFO] Apache Hadoop Auth Examples

[INFO] Apache Hadoop Common

[INFO] Apache Hadoop NFS

[INFO] Apache Hadoop KMS

[INFO] Apache Hadoop Common Project

[INFO] Apache Hadoop HDFS

[INFO] Apache Hadoop HttpFS

[INFO] Apache Hadoop HDFS BookKeeper Journal

[INFO] Apache Hadoop HDFS-NFS

[INFO] Apache Hadoop HDFS Project

[INFO] hadoop-yarn

[INFO] hadoop-yarn-api

[INFO] hadoop-yarn-common

[INFO] hadoop-yarn-server

[INFO] hadoop-yarn-server-common

[INFO] hadoop-yarn-server-nodemanager

[INFO] hadoop-yarn-server-web-proxy

[INFO] hadoop-yarn-server-applicationhistoryservice

[INFO] hadoop-yarn-server-resourcemanager

[INFO] hadoop-yarn-server-tests

[INFO] hadoop-yarn-client

[INFO] hadoop-yarn-server-sharedcachemanager

[INFO] hadoop-yarn-applications

[INFO] hadoop-yarn-applications-distributedshell

[INFO] hadoop-yarn-applications-unmanaged-am-launcher

[INFO] hadoop-yarn-site

[INFO] hadoop-yarn-registry

[INFO] hadoop-yarn-project

[INFO] hadoop-mapreduce-client

[INFO] hadoop-mapreduce-client-core

[INFO] hadoop-mapreduce-client-common

[INFO] hadoop-mapreduce-client-shuffle

[INFO] hadoop-mapreduce-client-app

[INFO] hadoop-mapreduce-client-hs

[INFO] hadoop-mapreduce-client-jobclient

[INFO] hadoop-mapreduce-client-hs-plugins

[INFO] Apache Hadoop MapReduce Examples

[INFO] hadoop-mapreduce

[INFO] Apache Hadoop MapReduce Streaming

[INFO] Apache Hadoop Distributed Copy

[INFO] Apache Hadoop Archives

[INFO] Apache Hadoop Rumen

[INFO] Apache Hadoop Gridmix

[INFO] Apache Hadoop Data Join

[INFO] Apache Hadoop Ant Tasks

[INFO] Apache Hadoop Extras

[INFO] Apache Hadoop Pipes

[INFO] Apache Hadoop OpenStack support

[INFO] Apache Hadoop Amazon Web Services support

[INFO] Apache Hadoop Azure support

[INFO] Apache Hadoop Client

[INFO] Apache Hadoop Mini-Cluster

[INFO] Apache Hadoop Scheduler Load Simulator

[INFO] Apache Hadoop Tools Dist

[INFO] Apache Hadoop Tools

[INFO] Apache Hadoop Distribution三、排错

1、修改项目细节

这里的两步是每个项目都需要执行的。

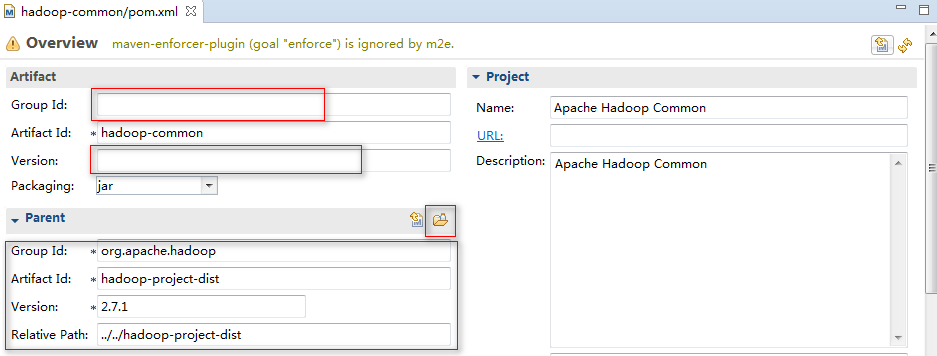

1.修改pom文件

将所有的项目修改pom.xml的继承关系进行重新赋予,让项目有统一的Group Id和version号。

如下图:打开pom文件重新选一下parent即可。

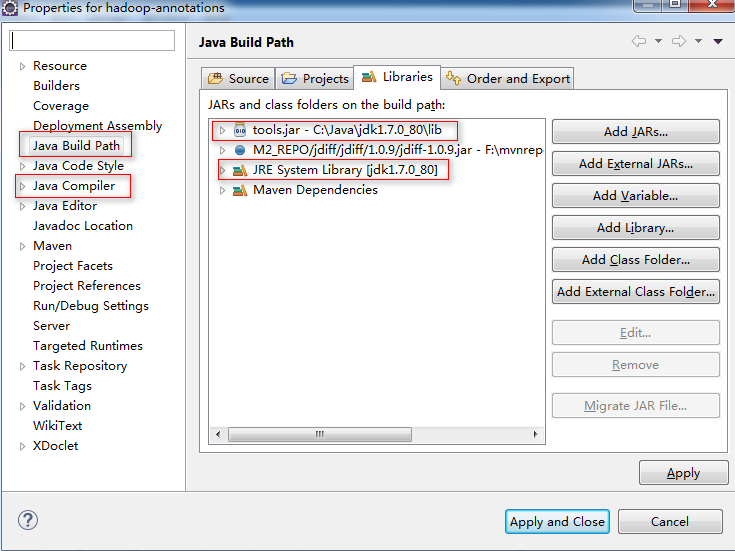

2.修改Java Build Path

将Java Build Path中的Libraries里的JRE和tools.jar修改成自己的版本,本人这里是1.7.0_80,如下图所示:

修改完成Java Build Path之后修改Java Compiler,将其修改为对应的版本即可,本人这里依然是1.7版本。

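2. Problems in the hadoop-common project

1) Fix the XML file

As shown below: move the XML declaration (the <?xml ...?> header) of this file to the first line. An XML declaration is only allowed at the very start of a document, so anything placed before it makes the parser reject the file.

For details, see "XML file error: processing instruction target matching is not allowed".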
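If several files have the same problem, the move can be scripted instead of edited by hand. The Python sketch below is my own helper, not something from the Hadoop tree: it takes the file's text, finds the `<?xml ...?>` declaration, and puts it first while keeping whatever preceded it (typically a license comment).

```python
import re

# Matches the XML declaration but not processing instructions
# such as <?xml-stylesheet ...?>.
XML_DECL = re.compile(r"<\?xml\s[^>]*\?>")


def move_declaration_first(text):
    """Return `text` with its XML declaration moved to the very start.

    Text without a declaration, or with it already first, is returned as-is.
    """
    match = XML_DECL.search(text)
    if match is None or match.start() == 0:
        return text
    before = text[:match.start()].lstrip()    # e.g. a license comment
    after = text[match.end():].lstrip("\r\n")  # the rest of the document
    return match.group(0) + "\n" + before + after


# Example use (hypothetical file name):
#   from pathlib import Path
#   path = Path("some-config.xml")
#   path.write_text(move_declaration_first(path.read_text(encoding="utf-8")),
#                   encoding="utf-8")
```

The function only rearranges text and never touches the declaration's attributes, so the encoding stated in the file stays whatever the original author wrote.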

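2) Regenerate the Avro classes

The hadoop-common project has an error related to its .avsc files. These are Avro schema files, and the corresponding .java files have to be generated from them as follows.

1> Download the jar

Jar: avro-tools-1.7.4.jar

Download: the jar is published on Maven Central as org.apache.avro:avro-tools:1.7.4 (the Maven archive at https://archive.apache.org/dist/maven/binaries/ hosts only Maven itself).

2> Run the command

Go to "hadoop-common-project\hadoop-common\src\test\avro" under the source root, open cmd, and run:

java -jar <jar-directory>\avro-tools-1.7.4.jar compile schema avroRecord.avsc ..\java

# Example: I put the jar in F:\bigdata\hadoop

# so the command becomes:

java -jar F:\bigdata\hadoop\avro-tools-1.7.4.jar compile schema avroRecord.avsc ..\java

3> Refresh the project

Right-click the hadoop-common project in Eclipse and choose Refresh. If that does not clear the error, refresh the package that contains the failing source file; if it still fails, restart Eclipse.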
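To know which package to refresh, it helps to see where the generated class ends up: the Avro compiler writes it under the output directory in a folder tree that mirrors the schema's namespace. The Python sketch below works this out from the schema text. The helper name and the sample schema in the comment are illustrative assumptions, not the actual contents of avroRecord.avsc.

```python
import json
from pathlib import Path


def generated_java_path(avsc_text, output_dir):
    """Predict the .java file generated for a record schema.

    The namespace becomes nested directories under `output_dir`
    and the record name becomes the file name.
    """
    schema = json.loads(avsc_text)
    namespace = schema.get("namespace", "")
    parts = namespace.split(".") if namespace else []
    return Path(output_dir, *parts, schema["name"] + ".java")


# Example use with an illustrative schema:
#   text = '{"type": "record", "name": "Demo", "namespace": "com.example.io", "fields": []}'
#   print(generated_java_path(text, r"..\java"))
```

Reading the namespace from the schema avoids guessing the package name when a project contains more than one .avsc file.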

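3) proto

Go to "hadoop-common-project\hadoop-common\src\test\proto" under the source root, open a cmd window, and run:

protoc --java_out=..\java *.proto

The protoc here is the program downloaded earlier.

Right-click hadoop-common in Eclipse and choose Refresh. If that does not clear the error, refresh the package that contains the failing source file; if it still fails, restart Eclipse.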
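One caveat with the command above: cmd.exe does not expand wildcards itself, so whether `*.proto` works depends on how the protoc binary handles its arguments. If it complains about the pattern, the files can be listed explicitly. The Python sketch below builds such a command; the function name is my own, and it assumes the command is then run from inside the proto directory.

```python
from pathlib import Path


def protoc_java_command(proto_dir, java_out, protoc="protoc"):
    """Build a protoc call that names every .proto file in proto_dir."""
    proto_files = sorted(p.name for p in Path(proto_dir).glob("*.proto"))
    if not proto_files:
        raise FileNotFoundError("no .proto files in %s" % proto_dir)
    # Bare file names are used, so run the command with cwd=proto_dir.
    return [protoc, "--java_out=" + str(java_out)] + proto_files


# Example use (hypothetical, run from any directory):
#   import subprocess
#   proto_dir = r"hadoop-common-project\hadoop-common\src\test\proto"
#   subprocess.check_call(protoc_java_command(proto_dir, r"..\java"), cwd=proto_dir)
```

Passing the arguments as a list also sidesteps quoting problems if the source tree sits in a path that contains spaces.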

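3. Problems in the hadoop-streaming project

In Eclipse, right-click the hadoop-streaming project and choose "Properties". Select Java Build Path on the left, then open the Source tab on the right, where the broken path is listed.

Click the "Link Source" button and choose "<your source root>/hadoop-yarn-project/hadoop-yarn/hadoop-yarn-server/hadoop-yarn-server-resourcemanager/conf" as the linked folder. The suggested link name can be kept (or pick any name you like).

Add capacity-scheduler.xml to the inclusion patterns and **/*.java to the exclusion patterns; these settings are the same as those of the broken entry. When done, delete the broken entry and refresh the hadoop-streaming project.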

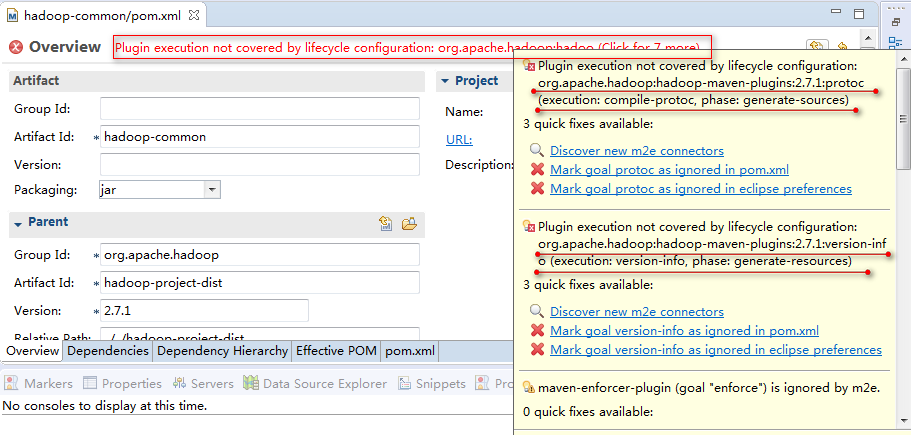

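4. Maven plugins

1) Where the errors show up

After the fixes above, many errors still remain. They are visible in pom.xml, as shown below:

The same errors also appear under Lifecycle Mapping in the Maven settings, at the location shown below:

The screenshot above was taken after I had fixed the errors, which is why everything is green.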

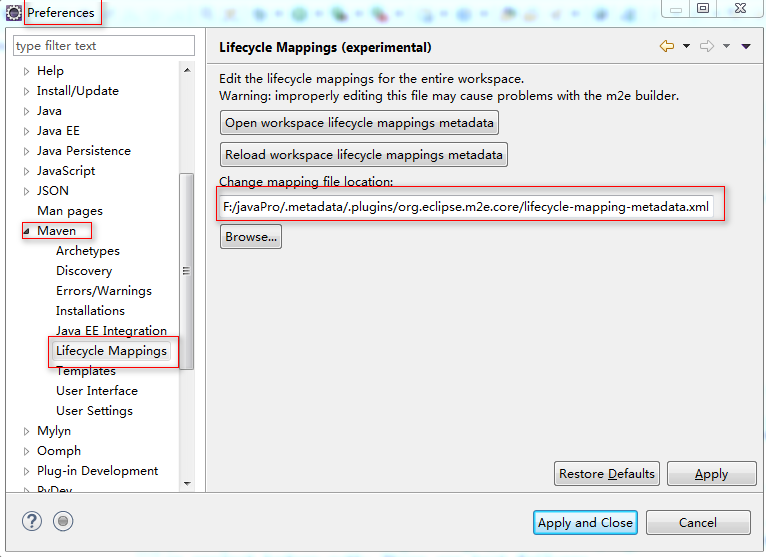

2.解决方法

Eclipse中的Windows-Preferences,找到Maven-Lifecycle Mappings如下图:

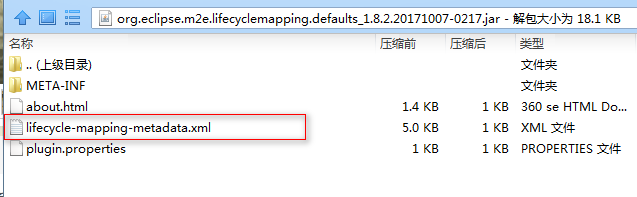

上图红框中的路径中其实没有lifecycle-mapping-metadata.xml文件的,这个文件存放于Eclipse的安装目录中的一个jar包里,位置如下:

eclipse\plugins\org.eclipse.m2e.lifecyclemapping.defaults_xxxxxxxxxxxx.jar,如下图:

将此文件解压出来,放置到Change mapping file location所示的路径中去,然后添加缺失的插件,格式文件中都有。
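A jar is an ordinary zip archive, so the extraction can be done with any zip tool or with a few lines of script. The Python sketch below is one way to do it; the function name is my own, and it looks the entry up by file name rather than assuming where it sits inside the jar.

```python
import zipfile
from pathlib import Path

MAPPING_FILE = "lifecycle-mapping-metadata.xml"


def extract_mapping(jar_path, target_dir):
    """Copy lifecycle-mapping-metadata.xml out of the jar into target_dir."""
    target = Path(target_dir) / MAPPING_FILE
    with zipfile.ZipFile(jar_path) as jar:
        # Match on the last path component so the entry is found
        # whether it is at the jar root or in a subfolder.
        matches = [n for n in jar.namelist() if n.split("/")[-1] == MAPPING_FILE]
        if not matches:
            raise FileNotFoundError(MAPPING_FILE + " not found in " + str(jar_path))
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_bytes(jar.read(matches[0]))
    return target


# Example use (both paths are placeholders; use the jar name from your own
# Eclipse install and the directory shown in the preferences dialog):
#   extract_mapping(r"eclipse\plugins\<the defaults jar>", r"<mapping file directory>")
```

Writing the bytes unchanged keeps the file's original encoding, which matters because the file is edited by hand afterwards.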

3) lifecycle-mapping-metadata.xml

Here is the file with my additions. Its content is as follows:

<?xml version="1.0" encoding="UTF-8"?>

<lifecycleMappingMetadata>

<lifecycleMappings>

<lifecycleMapping>

<packagingType>war</packagingType>

<lifecycleMappingId>org.eclipse.m2e.jdt.JarLifecycleMapping</lifecycleMappingId>

</lifecycleMapping>

</lifecycleMappings>

<pluginExecutions>

<!-- standard maven plugins -->

<pluginExecution>

<pluginExecutionFilter>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-resources-plugin</artifactId>

<goals>

<goal>resources</goal>

<goal>testResources</goal>

<goal>copy-resources</goal>

</goals>

<versionRange>[2.4,)</versionRange>

</pluginExecutionFilter>

<action>

<execute>

<runOnIncremental>true</runOnIncremental>

</execute>

</action>

</pluginExecution>

<pluginExecution>

<pluginExecutionFilter>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-resources-plugin</artifactId>

<goals>

<goal>resources</goal>

<goal>testResources</goal>

<goal>copy-resources</goal>

</goals>

<versionRange>[0.0.1,2.4)</versionRange>

</pluginExecutionFilter>

<action>

<error>

<message>maven-resources-plugin prior to 2.4 is not supported by m2e. Use maven-resources-plugin version 2.4 or later.</message>

</error>

</action>

</pluginExecution>

<pluginExecution>

<pluginExecutionFilter>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-enforcer-plugin</artifactId>

<goals>

<goal>enforce</goal>

</goals>

<versionRange>[1.0-alpha-1,)</versionRange>

</pluginExecutionFilter>

<action>

<ignore>

<message>maven-enforcer-plugin (goal "enforce") is ignored by m2e.</message>

</ignore>

</action>

</pluginExecution>

<pluginExecution>

<pluginExecutionFilter>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-invoker-plugin</artifactId>

<goals>

<goal>install</goal>

</goals>

<versionRange>[1.6-SONATYPE-r940877,)</versionRange>

</pluginExecutionFilter>

<action>

<ignore>

<message>maven-invoker-plugin (goal "install") is ignored by m2e.</message>

</ignore>

</action>

</pluginExecution>

<pluginExecution>

<pluginExecutionFilter>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-remote-resources-plugin</artifactId>

<versionRange>[1.0,)</versionRange>

<goals>

<goal>process</goal>

</goals>

</pluginExecutionFilter>

<action>

<ignore>

<message>maven-remote-resources-plugin (goal "process") is ignored by m2e.</message>

</ignore>

</action>

</pluginExecution>

<pluginExecution>

<pluginExecutionFilter>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-eclipse-plugin</artifactId>

<versionRange>[0,)</versionRange>

<goals>

<goal>configure-workspace</goal>

<goal>eclipse</goal>

<goal>clean</goal>

<goal>to-maven</goal>

<goal>install-plugins</goal>

<goal>make-artifacts</goal>

<goal>myeclipse</goal>

<goal>myeclipse-clean</goal>

<goal>rad</goal>

<goal>rad-clean</goal>

</goals>

</pluginExecutionFilter>

<action>

<error>

<message>maven-eclipse-plugin is not compatible with m2e</message>

</error>

</action>

</pluginExecution>

<pluginExecution>

<pluginExecutionFilter>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-source-plugin</artifactId>

<versionRange>[2.0,)</versionRange>

<goals>

<goal>jar-no-fork</goal>

<goal>test-jar-no-fork</goal>

<!-- theoretically, the following goals should not be bound to lifecycle, but ignore them just in case -->

<goal>jar</goal>

<goal>aggregate</goal>

<goal>test-jar</goal>

</goals>

</pluginExecutionFilter>

<action>

<ignore/>

</action>

</pluginExecution>

<!--our add start******************************************************-->

<pluginExecution>

<pluginExecutionFilter>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-antrun-plugin</artifactId>

<versionRange>[1.7,)</versionRange>

<goals>

<goal>run</goal>

<goal>create-testdirs</goal>

<goal>validate</goal>

</goals>

</pluginExecutionFilter>

<action>

<ignore />

</action>

</pluginExecution>

<pluginExecution>

<pluginExecutionFilter>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<versionRange>[3.1,)</versionRange>

<goals>

<goal>testCompile</goal>

<goal>compile</goal>

</goals>

</pluginExecutionFilter>

<action>

<ignore />

</action>

</pluginExecution>

<pluginExecution>

<pluginExecutionFilter>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-jar-plugin</artifactId>

<versionRange>[2.5,)</versionRange>

<goals>

<goal>test-compile</goal>

<goal>test-jar</goal>

</goals>

</pluginExecutionFilter>

<action>

<ignore />

</action>

</pluginExecution>

<pluginExecution>

<pluginExecutionFilter>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-maven-plugins</artifactId>

<versionRange>[2.7.1,)</versionRange>

<goals>

<goal>protoc</goal>

<goal>compile-protoc</goal>

<goal>generate-sources</goal>

<goal>compile-test-protoc</goal>

<goal>generate-test-sources</goal>

<goal>version-info</goal>

</goals>

</pluginExecutionFilter>

<action>

<ignore />

</action>

</pluginExecution>

<pluginExecution>

<pluginExecutionFilter>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-plugin-plugin</artifactId>

<versionRange>[3.4,)</versionRange>

<goals>

<goal>descriptor</goal>

<goal>default-descriptor</goal>

<goal>process-classes</goal>

</goals>

</pluginExecutionFilter>

<action>

<ignore />

</action>

</pluginExecution>

<pluginExecution>

<pluginExecutionFilter>

<groupId>org.apache.avro</groupId>

<artifactId>avro-maven-plugin</artifactId>

<versionRange>[1.7.4,)</versionRange>

<goals>

<goal>schema</goal>

<goal>generate-avro-test-sources</goal>

<goal>generate-test-sources</goal>

<goal>protocol</goal>

<goal>default</goal>

<goal>generate-sources</goal>

</goals>

</pluginExecutionFilter>

<action>

<ignore />

</action>

</pluginExecution>

<pluginExecution>

<pluginExecutionFilter>

<groupId>org.codehaus.mojo</groupId>

<artifactId>exec-maven-plugin</artifactId>

<versionRange>[1.3.1,)</versionRange>

<goals>

<goal>exec</goal>

<goal>compile-ms-native-dll</goal>

<goal>compile-ms-winutils</goal>

<goal>compile</goal>

</goals>

</pluginExecutionFilter>

<action>

<ignore />

</action>

</pluginExecution>

<pluginExecution>

<pluginExecutionFilter>

<groupId>org.codehaus.mojo</groupId>

<artifactId>native-maven-plugin</artifactId>

<versionRange>[1.0-alpha-8,)</versionRange>

<goals>

<goal>javah</goal>

<goal>compile</goal>

</goals>

</pluginExecutionFilter>

<action>

<ignore />

</action>

</pluginExecution>

<!--our add end****************************************-->

<!-- commonly used codehaus plugins -->

<pluginExecution>

<pluginExecutionFilter>

<groupId>org.codehaus.mojo</groupId>

<artifactId>animal-sniffer-maven-plugin</artifactId>

<versionRange>[1.0,)</versionRange>

<goals>

<goal>check</goal>

</goals>

</pluginExecutionFilter>

<action>

<ignore />

</action>

</pluginExecution>

<pluginExecution>

<pluginExecutionFilter>

<groupId>org.codehaus.mojo</groupId>

<artifactId>buildnumber-maven-plugin</artifactId>

<versionRange>[1.0-beta-1,)</versionRange>

<goals>

<goal>create</goal>

</goals>

</pluginExecutionFilter>

<action>

<ignore />

</action>

</pluginExecution>

</pluginExecutions>

</lifecycleMappingMetadata>5、update

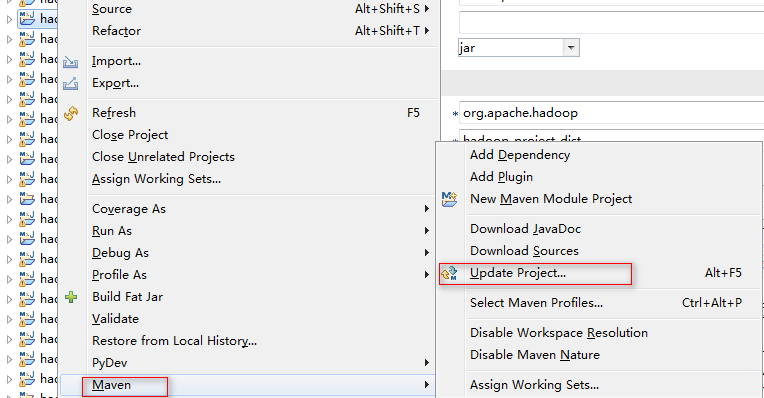

进行完上面的步骤之后,将所有的项目都Update一下,操作如下图:

经过上述的步骤之后,所有的问题应该都能解决了。

以上是本人导入源码的过程,基本上就这些错误,除了那三个典型的错误,还出现了多余的几个错误!

在运行源码的时候也出现了一些错误,后续会进行更新!


Source: oschina

Link: https://my.oschina.net/u/3754001/blog/1940530