Install Java

See the Java installation section of the post "Hadoop 1.2.1 Pseudo-Distributed Mode Installation".

Configure passwordless SSH login

As before, we use three machines as the example: spark-master, ubuntu-worker and spark-worker1.
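A minimal sketch of the usual OpenSSH setup, which this post does not spell out. Run it on spark-master as the user that will start Hadoop. It assumes that user exists on all three machines and that the host names resolve (for example via /etc/hosts); `setup_passwordless_ssh` is a hypothetical helper name. spark-master itself belongs in the host list because the Hadoop start scripts also reach the master over SSH.

```shell
# Hypothetical helper: set up passwordless SSH from this machine to every host given.
setup_passwordless_ssh() {
  key="$HOME/.ssh/id_rsa"
  # Generate an RSA key pair with an empty passphrase, only if none exists yet.
  if [ ! -f "$key" ]; then
    mkdir -p "$HOME/.ssh" && chmod 700 "$HOME/.ssh"
    ssh-keygen -t rsa -N "" -f "$key" || return 1
  fi
  for host in "$@"; do
    # Append the public key to ~/.ssh/authorized_keys on $host
    # (asks for that host's password one last time).
    ssh-copy-id -i "$key.pub" "$host" || return 1
  done
}
```

For example, `setup_passwordless_ssh spark-master ubuntu-worker spark-worker1`; afterwards `ssh ubuntu-worker hostname` should return without a password prompt.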

Download Hadoop

Download page: http://hadoop.apache.org/releases.html#Download

Extract the archive: `tar -zxvf hadoop-2.4.1.tar.gz`
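The download and extraction can also be done entirely from the command line. This is a sketch that assumes the 2.4.1 tarball is still served from the Apache archive server (archive.apache.org keeps old releases, while the mirrors linked from the releases page usually carry only current ones). The URL layout, the default target directory $HOME/opt and the helper name `fetch_hadoop` are assumptions, not taken from the original post.

```shell
HADOOP_VERSION=2.4.1
# Assumed archive location for old Hadoop releases.
HADOOP_URL="https://archive.apache.org/dist/hadoop/common/hadoop-${HADOOP_VERSION}/hadoop-${HADOOP_VERSION}.tar.gz"

# Hypothetical helper: download the tarball into a directory and unpack it there.
fetch_hadoop() {
  dest="${1:-$HOME/opt}"            # assumed default install location
  mkdir -p "$dest" || return 1
  # -c resumes a partial download; -P saves the file into $dest.
  wget -c -P "$dest" "$HADOOP_URL" || return 1
  # Unpacks to $dest/hadoop-2.4.1.
  tar -zxvf "$dest/hadoop-${HADOOP_VERSION}.tar.gz" -C "$dest"
}
```

For example, `fetch_hadoop "$HOME/opt"` leaves the tree in $HOME/opt/hadoop-2.4.1.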

Edit the configuration files

Go to the hadoop-2.4.1/etc/hadoop directory. The following 7 files need to be configured:

hadoop-env.sh, yarn-env.sh, slaves, core-site.xml, hdfs-site.xml, mapred-site.xml, yarn-site.xml
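The Hadoop 2.4.1 tarball ships etc/hadoop/mapred-site.xml.template but no mapred-site.xml, so that file normally has to be created before it can be edited. A small sketch; the helper name `init_mapred_site` is hypothetical.

```shell
# Hypothetical helper: create mapred-site.xml from the bundled template,
# but only if it does not exist yet (never overwrite an edited file).
init_mapred_site() {
  conf_dir="$1"                     # e.g. hadoop-2.4.1/etc/hadoop
  if [ ! -f "$conf_dir/mapred-site.xml" ]; then
    cp "$conf_dir/mapred-site.xml.template" "$conf_dir/mapred-site.xml"
  fi
}
```

Run it as `init_mapred_site hadoop-2.4.1/etc/hadoop`; an existing mapred-site.xml is left untouched.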

1. hadoop-env.sh: set JAVA_HOME

```shell
export JAVA_HOME=/home/mupeng/java/jdk1.6.0_35
```

2. yarn-env.sh: set JAVA_HOME

```shell
# some Java parameters
export JAVA_HOME=/home/mupeng/java/jdk1.6.0_35
```

3. slaves: list the slave nodes

```
ubuntu-worker
spark-worker1
```

4. core-site.xml

```xml
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://spark-master:9000</value>
  </property>
  <property>
    <name>io.file.buffer.size</name>
    <value>131072</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/home/mupeng/opt/hadoop-2.4.0/tmp</value>
    <description>A base for other temporary directories.</description>
  </property>
  <property>
    <name>hadoop.proxyuser.spark.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.spark.groups</name>
    <value>*</value>
  </property>
</configuration>
```

5. hdfs-site.xml

```xml
<configuration>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>spark-master:9001</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/home/mupeng/opt/hadoop-2.4.0/dfs/name</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/home/mupeng/opt/hadoop-2.4.0/dfs/data</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
</configuration>
```

6. mapred-site.xml

```xml
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>spark-master:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>spark-master:19888</value>
  </property>
</configuration>
```

7. yarn-site.xml

```xml
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>spark-master:8032</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>spark-master:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>spark-master:8035</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address</name>
    <value>spark-master:8033</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>spark-master:8088</value>
  </property>
</configuration>
```

Finally, copy the configured hadoop-2.4.1 directory to the other two nodes.
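One way to script the copy and the first start, sketched under a few assumptions: the Hadoop directory sits at the same absolute path on every node (the Hadoop 2.x start scripts launch the worker daemons over SSH using the master's path), passwordless SSH already works, and `deploy_hadoop` and `start_cluster` are hypothetical names. The post itself does not list the start commands; the ones below are the standard Hadoop 2.x scripts under bin/ and sbin/.

```shell
# Hypothetical helper: copy the configured Hadoop directory to every worker
# listed in etc/hadoop/slaves, at the same absolute path as on the master.
deploy_hadoop() {
  hadoop_dir="$1"                   # absolute path of the hadoop-2.4.1 directory
  parent=$(dirname "$hadoop_dir")
  # One host per line; skip blank lines and comment lines.
  grep -v -e '^[[:space:]]*$' -e '^[[:space:]]*#' "$hadoop_dir/etc/hadoop/slaves" |
    while read -r host; do
      # </dev/null stops ssh/scp from swallowing the rest of the host list.
      ssh "$host" mkdir -p "$parent" </dev/null &&
        scp -r "$hadoop_dir" "$host:$parent/" </dev/null
    done
}

# Hypothetical helper: start HDFS, YARN and the MapReduce JobHistory server
# from the master. Set FORMAT_NAMENODE=yes only on the very first start:
# formatting erases the HDFS metadata in dfs.namenode.name.dir.
start_cluster() {
  hadoop_dir="$1"
  if [ "${FORMAT_NAMENODE:-no}" = yes ]; then
    "$hadoop_dir/bin/hdfs" namenode -format || return 1
  fi
  "$hadoop_dir/sbin/start-dfs.sh" || return 1
  "$hadoop_dir/sbin/start-yarn.sh" || return 1
  "$hadoop_dir/sbin/mr-jobhistory-daemon.sh" start historyserver
}
```

On spark-master, for example: `deploy_hadoop "$HOME/opt/hadoop-2.4.1"`, then `FORMAT_NAMENODE=yes start_cluster "$HOME/opt/hadoop-2.4.1"` the first time and plain `start_cluster "$HOME/opt/hadoop-2.4.1"` afterwards.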

Check that the installation succeeded

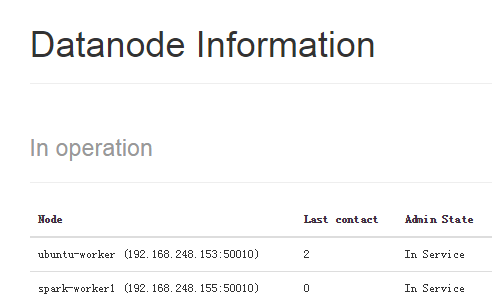

Check HDFS at http://192.168.248.150:50070/dfshealth.html#tab-datanode; the Datanodes tab should list two nodes.

Check YARN at http://192.168.248.150:8088/cluster/nodes, which lists the running NodeManagers.
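The same check can be done without a browser. A minimal sketch, assuming the NameNode's /jmx servlet and the ResourceManager REST API (/ws/v1/cluster/metrics) that Hadoop 2.x exposes on the ports used above; the field names NumLiveDataNodes and activeNodes, and the helper names `json_int` and `check_cluster`, are assumptions worth verifying against your version.

```shell
# Hypothetical helper: print the first integer value of the JSON field "$1"
# read from stdin (handles both "key":1 and "key" : 1 spacing).
json_int() {
  sed -n "s/.*\"$1\"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p" | head -n 1
}

# Hypothetical helper: compare live DataNodes and active NodeManagers
# against the expected worker count; returns 0 only if both match.
check_cluster() {
  master="${1:-spark-master}"
  expected="${2:-2}"
  # NameNode JMX bean with cluster-wide HDFS state (web UI port 50070).
  live_dn=$(curl -s "http://$master:50070/jmx?qry=Hadoop:service=NameNode,name=FSNamesystemState" |
    json_int NumLiveDataNodes)
  # ResourceManager cluster metrics (yarn.resourcemanager.webapp.address).
  active_nm=$(curl -s -H "Accept: application/json" "http://$master:8088/ws/v1/cluster/metrics" |
    json_int activeNodes)
  echo "live DataNodes: ${live_dn:-?}, active NodeManagers: ${active_nm:-?}"
  [ "${live_dn:-0}" -eq "$expected" ] && [ "${active_nm:-0}" -eq "$expected" ]
}
```

`check_cluster spark-master 2` exits with status 0 only when both counts equal 2. The built-in `hdfs dfsadmin -report` and `yarn node -list` commands print the same information in more detail.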

OK, our Hadoop 2.4.1 cluster is up and running. To set up the Spark cluster next, see the blog post "Spark 1.2.1 Cluster Setup: Standalone Mode".

Source: oschina

Link: https://my.oschina.net/u/76720/blog/387608