Question

I am trying to use `VNDetectRectanglesRequest` from Apple's Vision framework to automatically grab a picture of a card. However, when I convert the points to draw the rectangle, it is misshapen and does not follow the rectangle as it should. I have been following this article pretty closely.

One major difference is that I am embedding my `CameraCaptureVC` in another view controller, so that the card is scanned only when it is inside this smaller window.

Below is how I set up the camera VC in the parent VC (called from `viewDidLoad`):

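```swift
func configureSubviews() {
    // Embed the camera view controller's view inside the smaller clearView
    clearView.addSubview(cameraVC.view)
    // autoPinEdgesToSuperviewEdges() comes from the PureLayout library
    cameraVC.view.autoPinEdgesToSuperviewEdges()
    self.addChild(cameraVC)
    cameraVC.didMove(toParent: self)
}
```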

Below is the code that draws the rectangle:

```swift
func createLayer(in rect: CGRect) {
    let maskLayer = CAShapeLayer()
    maskLayer.frame = rect
    maskLayer.cornerRadius = 10
    maskLayer.opacity = 0.75
    maskLayer.borderColor = UIColor.red.cgColor
    maskLayer.borderWidth = 5.0
    previewLayer.insertSublayer(maskLayer, at: 1)
}

func removeMask() {
    if let sublayer = previewLayer.sublayers?.first(where: { $0 is CAShapeLayer }) {
        sublayer.removeFromSuperlayer()
    }
}

func drawBoundingBox(rect: VNRectangleObservation) {
    // Flip the y-axis within finalFrame (maps y to finalFrame.height - y)
    let transform = CGAffineTransform(scaleX: 1, y: -1).translatedBy(x: 0, y: -finalFrame.height)
    // Scale the normalized box up to finalFrame's size
    let scale = CGAffineTransform.identity.scaledBy(x: finalFrame.width, y: finalFrame.height)
    let bounds = rect.boundingBox.applying(scale).applying(transform)
    createLayer(in: bounds)
}

func detectRectangle(in image: CVPixelBuffer) {
    let request = VNDetectRectanglesRequest { (request: VNRequest, error: Error?) in
        DispatchQueue.main.async {
            guard let results = request.results as? [VNRectangleObservation],
                  let rect = results.first else { return }
            self.removeMask()
            self.drawBoundingBox(rect: rect)
        }
    }
    request.minimumAspectRatio = 0.0
    request.maximumAspectRatio = 1.0
    request.maximumObservations = 0 // 0 means no limit on the number of observations
    let imageRequestHandler = VNImageRequestHandler(cvPixelBuffer: image, options: [:])
    try? imageRequestHandler.perform([request])
}
```

This is my result: the red rectangle should follow the borders of the card, but it is much too short, and its origin is not even at the top of the card.

I have tried changing values in the `drawBoundingBox` function, but nothing seems to help. I have also tried converting the bounds in a different way, as below, but the result is the same, and tweaking these values gets hacky.

```swift
let scaledHeight: CGFloat = originalFrame.width / finalFrame.width * finalFrame.height
let boundingBox = rect.boundingBox
let x = finalFrame.width * boundingBox.origin.x
let height = scaledHeight * boundingBox.height
let y = scaledHeight * (1 - boundingBox.origin.y) - height
let width = finalFrame.width * boundingBox.width
let bounds = CGRect(x: x, y: y, width: width, height: height)
createLayer(in: bounds)
```

I would appreciate any help. Maybe, since I am embedding it as a child VC, I need to transform the coordinates a second time? I tried something like that to no avail, but maybe I did it wrong or was missing something.

Answer 1:

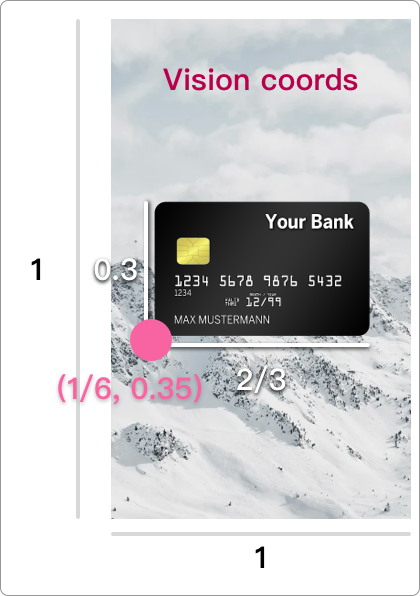

First, let's look at `boundingBox`, which is a "normalized" rectangle. Apple says:

> The coordinates are normalized to the dimensions of the processed image, with the origin at the image's lower-left corner.

This means that:

- The `origin` is at the bottom-left, not the top-left
- The `origin.x` and `width` are in terms of a fraction of the entire image's width
- The `origin.y` and `height` are in terms of a fraction of the entire image's height
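Those three rules can be sketched in plain Swift. This is only an illustration of the flip and scale, not Vision's own code: `toTopLeftPixelRect` is a hypothetical helper name, and it maps onto the full image size without any aspect-fill handling.

```swift
import Foundation

/// Hypothetical helper: converts a Vision-style normalized rect (origin at the
/// lower-left, values in 0...1) into a pixel rect with a top-left origin.
func toTopLeftPixelRect(_ normalized: CGRect, imageSize: CGSize) -> CGRect {
    // Flip the y-axis: the box's top edge sits at 1 - (y + height) from the top
    let flippedY = 1 - normalized.origin.y - normalized.size.height
    // x and width scale with the image width; y and height with the image height
    return CGRect(
        x: normalized.origin.x * imageSize.width,
        y: flippedY * imageSize.height,
        width: normalized.size.width * imageSize.width,
        height: normalized.size.height * imageSize.height
    )
}

// A box whose bottom edge sits halfway up an 800 x 400 image
let pixelRect = toTopLeftPixelRect(
    CGRect(x: 0.25, y: 0.5, width: 0.25, height: 0.25),
    imageSize: CGSize(width: 800, height: 400)
)
print(pixelRect.origin.x, pixelRect.origin.y, pixelRect.size.width, pixelRect.size.height)
// prints "200.0 100.0 200.0 100.0"
```

Note that a box starting halfway *up* the image (y = 0.5) ends up only 100 pixels from the *top*, because its height is subtracted as part of the flip.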

Hopefully this diagram makes it clearer:

[Diagram: two panels, "What you are used to" and "What Vision returns"]

Your function above converts `boundingBox` to the coordinates of `finalFrame`, which I assume is the entire view's frame. That is much larger than your small `CameraCaptureVC`.

Also, your `CameraCaptureVC`'s preview layer probably has an aspect-fill video gravity (`.resizeAspectFill`), so part of the camera image overflows the layer and is cropped. You will need to account for these overflowing parts of the displayed image as well.
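To make the overflow concrete, here is a worked example with assumed numbers (they are illustrative, not taken from the question): a 1000 x 2000 image displayed with aspect fill in a 300 x 400 container.

$$
\begin{aligned}
\text{imageAspect} &= \tfrac{1000}{2000} = 0.5, \qquad \text{containerAspect} = \tfrac{300}{400} = 0.75 \\
0.5 &< 0.75 \;\Rightarrow\; \text{the image overflows at the top and bottom} \\
\text{displayed height} &= \frac{300}{0.5} = 600 \\
\text{y offset} &= -\frac{600 - 400}{2} = -100
\end{aligned}
$$

So 100 points of the image are hidden above the container and 100 below. A conversion that maps normalized coordinates onto the 400-point container height, instead of the 600-point displayed height shifted by -100, will misplace the box vertically and make it too short.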

Try this conversion function instead:

```swift
func getConvertedRect(boundingBox: CGRect, inImage imageSize: CGSize, containedIn containerSize: CGSize) -> CGRect {
    let rectOfImage: CGRect
    let imageAspect = imageSize.width / imageSize.height
    let containerAspect = containerSize.width / containerSize.height

    if imageAspect > containerAspect { /// image extends left and right
        let newImageWidth = containerSize.height * imageAspect /// the width of the overflowing image
        let newX = -(newImageWidth - containerSize.width) / 2
        rectOfImage = CGRect(x: newX, y: 0, width: newImageWidth, height: containerSize.height)
    } else { /// image extends top and bottom
        let newImageHeight = containerSize.width / imageAspect /// the height of the overflowing image
        let newY = -(newImageHeight - containerSize.height) / 2
        rectOfImage = CGRect(x: 0, y: newY, width: containerSize.width, height: newImageHeight)
    }

    /// flip the y-axis: Vision's origin is bottom-left, UIKit's is top-left
    let newOriginBoundingBox = CGRect(
        x: boundingBox.origin.x,
        y: 1 - boundingBox.origin.y - boundingBox.height,
        width: boundingBox.width,
        height: boundingBox.height
    )

    var convertedRect = VNImageRectForNormalizedRect(newOriginBoundingBox, Int(rectOfImage.width), Int(rectOfImage.height))

    /// add the margins
    convertedRect.origin.x += rectOfImage.origin.x
    convertedRect.origin.y += rectOfImage.origin.y

    return convertedRect
}
```

This takes into account the size of the image view as well as the aspect-fill content mode.
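If you want to check the math off-device (for example in a unit test or a Linux playground), the same conversion can be sketched with Foundation alone. This is a port for illustration, not the answer's exact code: `convertedRect(boundingBox:imageSize:containerSize:)` is a hypothetical name, and `VNImageRectForNormalizedRect` is assumed to be equivalent to plain multiplication by the target size (apart from its `Int` size arguments).

```swift
import Foundation

// Foundation-only sketch of the aspect-fill conversion (no Vision or UIKit).
func convertedRect(boundingBox: CGRect, imageSize: CGSize, containerSize: CGSize) -> CGRect {
    let imageAspect = imageSize.width / imageSize.height
    let containerAspect = containerSize.width / containerSize.height

    // Frame the whole image occupies inside the container under aspect fill
    let rectOfImage: CGRect
    if imageAspect > containerAspect {
        // Image is relatively wider: it overflows left and right
        let displayedWidth = containerSize.height * imageAspect
        rectOfImage = CGRect(x: -(displayedWidth - containerSize.width) / 2, y: 0,
                             width: displayedWidth, height: containerSize.height)
    } else {
        // Image is relatively taller: it overflows top and bottom
        let displayedHeight = containerSize.width / imageAspect
        rectOfImage = CGRect(x: 0, y: -(displayedHeight - containerSize.height) / 2,
                             width: containerSize.width, height: displayedHeight)
    }

    // Flip Vision's lower-left origin to a top-left origin
    let flippedY = 1 - boundingBox.origin.y - boundingBox.size.height

    // Scale into the displayed image frame, then shift by its (possibly negative) origin
    return CGRect(
        x: rectOfImage.origin.x + boundingBox.origin.x * rectOfImage.size.width,
        y: rectOfImage.origin.y + flippedY * rectOfImage.size.height,
        width: boundingBox.size.width * rectOfImage.size.width,
        height: boundingBox.size.height * rectOfImage.size.height
    )
}

// A 1000 x 2000 image shown with aspect fill in a 300 x 400 container
let rect = convertedRect(
    boundingBox: CGRect(x: 0.25, y: 0.5, width: 0.25, height: 0.25),
    imageSize: CGSize(width: 1000, height: 2000),
    containerSize: CGSize(width: 300, height: 400)
)
print(rect.origin.x, rect.origin.y, rect.size.width, rect.size.height)
// prints "75.0 50.0 75.0 150.0"
```

Here the displayed image is 300 x 600 and starts at y = -100, so a box a quarter of the way down the image lands at y = -100 + 150 = 50 in the container.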

Example (I'm using a static image instead of a live camera feed for simplicity):

```swift
/// inside your Vision request completion handler...
/// (this touches UIKit, so make sure it runs on the main thread)
guard let image = self.imageView.image else { return }
let convertedRect = self.getConvertedRect(
    boundingBox: observation.boundingBox,
    inImage: image.size,
    containedIn: self.imageView.bounds.size
)
self.drawBoundingBox(rect: convertedRect)
```

```swift
func drawBoundingBox(rect: CGRect) {
    let uiView = UIView(frame: rect)
    imageView.addSubview(uiView)
    uiView.backgroundColor = UIColor.clear
    uiView.layer.borderColor = UIColor.orange.cgColor
    uiView.layer.borderWidth = 3
}
```
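`boundingBox` is always axis-aligned, so for a card held at an angle it will not hug the card's edges. `VNRectangleObservation` also exposes four normalized corner points (`topLeft`, `topRight`, `bottomRight`, `bottomLeft`) in the same lower-left-origin space. A minimal sketch, assuming you have already computed the displayed-image frame (`rectOfImage`) the same way `getConvertedRect` does, maps each corner like this; `convertedPoint` is a hypothetical helper, and joining the four results into a path for a `CAShapeLayer` is left out.

```swift
import Foundation

// Hypothetical helper: maps one normalized Vision point (lower-left origin, 0...1)
// into container coordinates, given the frame the aspect-filled image occupies.
func convertedPoint(_ p: CGPoint, rectOfImage: CGRect) -> CGPoint {
    return CGPoint(
        x: rectOfImage.origin.x + p.x * rectOfImage.size.width,
        // Flip y: distance from the top is (1 - y) of the displayed height
        y: rectOfImage.origin.y + (1 - p.y) * rectOfImage.size.height
    )
}

// Displayed image frame for a 1000 x 2000 image aspect-filled into 300 x 400
let rectOfImage = CGRect(x: 0, y: -100, width: 300, height: 600)
let corner = convertedPoint(CGPoint(x: 0.5, y: 0.75), rectOfImage: rectOfImage)
print(corner.x, corner.y)
// prints "150.0 50.0"
```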

I made an example project here.

Source: https://stackoverflow.com/questions/64759383/bounding-box-from-vndetectrectanglerequest-is-not-correct-size-when-used-as-chil