Introduction

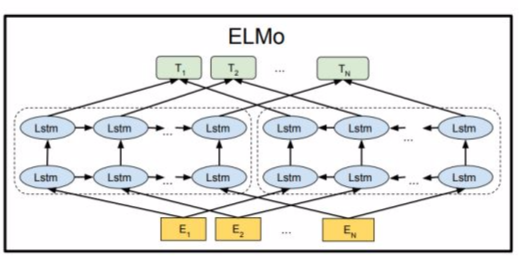

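ELMo (Embeddings from Language Models) is one of the important general-purpose semantic representation models. It uses bidirectional LSTMs as its basic network component and language modeling as its training objective. Pre-training yields general semantic representations, and transferring them as features to downstream NLP tasks can significantly improve model performance on those tasks. This project is an open-source implementation of ELMo on Paddle Fluid; the pre-trained model it releases was trained on encyclopedia-style data. The figure shows the model structure.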

Original paper: Deep contextualized word representations

Reference blog: https://blog.csdn.net/triplemeng/article/details/82380202

Note

The code in this project requires a GPU environment to run.

Also check that the relevant parameters, such as use_gpu and fluid.CUDAPlace(0), are set correctly.
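A minimal sketch of such a check, assuming the script exposes a boolean use_gpu flag. select_place is a hypothetical helper, not part of this project; it takes the paddle.fluid module as an argument so that the logic can be exercised on a machine without Paddle or a GPU.

```python
def select_place(fluid, use_gpu, device_id=0):
    """Return the Paddle place object that matches the use_gpu setting.

    `fluid` is the imported paddle.fluid module. This helper is
    hypothetical and only illustrates keeping use_gpu and the place
    object consistent with each other.
    """
    if use_gpu:
        # Fail early if this Paddle build has no CUDA support.
        if not fluid.core.is_compiled_with_cuda():
            raise RuntimeError("use_gpu is set, but Paddle was built without CUDA")
        return fluid.CUDAPlace(device_id)
    return fluid.CPUPlace()
```

With Paddle installed, this could be called as place = select_place(fluid, args.use_gpu) after import paddle.fluid as fluid, and the result passed to fluid.Executor(place).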

# Extract the data

!tar xf /home/aistudio/data/data9504/data.tar.gz -C /home/aistudio

Data preprocessing

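Documents are split into sentences at full stops, question marks, and exclamation marks, and each sentence is then word-segmented. In the preprocessed data files, each line is one segmented sentence. Example training data is provided in data/train and test data in data/dev. Sample lines: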

本 书 介绍 了 中国 经济 发展 的 内外 平衡 问题 、 亚洲 金融 危机 十 周年 回顾 与 反思 、 实践 中 的 城乡 统筹 发展 、 未来 十 年 中国 需要 研究 的 重大 课题 、 科学 发展 与 新型 工业 化 等 方面 。

吴 敬 琏 曾经 提出 中国 股市 “ 赌场 论 ” , 主张 维护 市场 规则 , 保护 草根 阶层 生计 , 被 誉 为 “ 中国 经济 学界 良心 ” , 是 媒体 和 公众 眼中 的 学术 明星
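The preprocessing described above can be sketched as follows. This is not the project's own preprocessing script: split_sentences and preprocess are hypothetical helpers, and the tokenize argument is a placeholder for a real Chinese word segmenter (the default splits into single characters purely for illustration).

```python
import re

# Sentence-ending punctuation named in the text:
# full stop, question mark, exclamation mark.
_SENTENCE_RE = re.compile(r"[^。？！]+[。？！]?")


def split_sentences(document):
    """Split a document into sentences, keeping the closing punctuation."""
    return [s.strip() for s in _SENTENCE_RE.findall(document) if s.strip()]


def preprocess(document, tokenize=list):
    """Return one space-separated line per sentence.

    `tokenize` stands in for a real word segmenter; the default (list)
    yields single characters and is for illustration only.
    """
    return [" ".join(tokenize(s)) for s in split_sentences(document)]


for line in preprocess("今天天气很好。你去吗？"):
    print(line)
```

Each returned line has the same shape as the sample lines above: tokens separated by single spaces, one sentence per line.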

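Use the provided example data for single-machine training. See run.sh for the exact launch command. Default training parameters can be passed on the command line or changed in args.py, and training uses the GPU by default. During training, the model is written to the 'para_save_dir' folder every 'save_interval' steps, and the training metrics are printed every 'log_interval' steps.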
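The interval behaviour can be pictured with a small loop. This is only a sketch of the general pattern, not the project's training code; run_training, train_step, log_fn, and save_fn are hypothetical names.

```python
def run_training(num_steps, log_interval, save_interval, train_step, log_fn, save_fn):
    """Run num_steps steps, logging and saving at fixed step intervals."""
    for step in range(1, num_steps + 1):
        loss = train_step(step)
        # Report metrics every log_interval steps.
        if step % log_interval == 0:
            log_fn(step, loss)
        # Write a checkpoint every save_interval steps.
        if step % save_interval == 0:
            save_fn(step)


# Dummy callables standing in for the real training step and checkpoint writer.
run_training(
    num_steps=30,
    log_interval=10,
    save_interval=15,
    train_step=lambda step: 1.0 / step,
    log_fn=lambda step, loss: print("step", step, "loss", round(loss, 3)),
    save_fn=lambda step: print("saving checkpoint at step", step),
)
```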

!chmod +x run.sh

!./run.sh

random seed is None

INFO:lm:Running with args : Namespace(all_train_tokens=35479, batch_size=128, cell_clip=3.0, data_path=None, dev_interval=10000, dropout=0.1, embed_size=512, enable_ce=False, hidden_size=4096, learning_rate=0.2, load_dir='', load_pretraining_params='', local=True, log_interval=10, max_epoch=10, max_grad_norm=10.0, n_negative_samples_batch=8000, num_layers=2, num_steps=20, optim='adagrad', para_save_dir='checkpoints_model', proj_clip=3.0, random_seed=None, sample_softmax=False, save_interval=10000, shuffle=False, test_nccl=False, test_path='data/dev/sentence_file_*', train_path='data/train/sentence_file_*', update_method='nccl2', use_custom_samples=False, use_gpu=True, vocab_path='data/vocabulary_min5k.txt')

INFO:lm:Running paddle : 401c03fc20478f5cc067440422fc3a7b306d0e32

INFO:lm:begin to load vocab

INFO:lm:finished load vocab

INFO:lm:build the model...

INFO:lm:local start_up:

W0815 17:54:24.260010 386 device_context.cc:259] Please NOTE: device: 0, CUDA Capability: 70, Driver API Version: 9.2, Runtime API Version: 9.0

W0815 17:54:24.263931 386 device_context.cc:267] device: 0, cuDNN Version: 7.3.

INFO:lm:Training the model...

WARNING:root:

You can try our memory optimize feature to save your memory usage:

# create a build_strategy variable to set memory optimize option

build_strategy = compiler.BuildStrategy()

build_strategy.enable_inplace = True

build_strategy.memory_optimize = True

# pass the build_strategy to with_data_parallel API

compiled_prog = compiler.CompiledProgram(main).with_data_parallel(

loss_name=loss.name, build_strategy=build_strategy)

!!! Memory optimize is our experimental feature !!!

some variables may be removed/reused internal to save memory usage,

in order to fetch the right value of the fetch_list, please set the

persistable property to true for each variable in fetch_list

# Sample

conv1 = fluid.layers.conv2d(data, 4, 5, 1, act=None)

# if you need to fetch conv1, then:

conv1.persistable = True

I0815 17:54:24.307185 386 parallel_executor.cc:329] The number of CUDAPlace, which is used in ParallelExecutor, is 1. And the Program will be copied 1 copies

I0815 17:54:24.313653 386 build_strategy.cc:340] SeqOnlyAllReduceOps:0, num_trainers:1

INFO:lm:begin to load data

Found 1 shards at data/train/sentence_file_*

sampled 4 shards at data/train/sentence_file_*

Loading data from: data/train/sentence_file_1.txt

Loaded 1000 sentences.

Finished loading

Found 1 shards at data/train/sentence_file_*

sampled 4 shards at data/train/sentence_file_*

Loading data from: data/train/sentence_file_1.txt

Loaded 1000 sentences.

Finished loading

INFO:lm:finished load vocab

INFO:lm:[train] step:10, loss:10.787, ppl:45101.208, smoothed_ppl:48392.440, speed:3.514

Loading data from: data/train/sentence_file_1.txt

Loaded 1000 sentences.

Finished loading

Loading data from: data/train/sentence_file_1.txt

Loaded 1000 sentences.

Finished loading

INFO:lm:[train] step:20, loss:17.213, ppl:12725415.675, smoothed_ppl:29897358.136, speed:3.511

Loading data from: data/train/sentence_file_1.txt

Loaded 1000 sentences.

Finished loading

Loading data from: data/train/sentence_file_1.txt

Loaded 1000 sentences.

Finished loading

INFO:lm:[train] step:30, loss:10.745, ppl:16140.931, smoothed_ppl:46405.757, speed:3.459

INFO:lm:[train] step:40, loss:9.203, ppl:4930.345, smoothed_ppl:9923.126, speed:3.483

Loading data from: data/train/sentence_file_1.txt

Loaded 1000 sentences.

Finished loading

Loading data from: data/train/sentence_file_1.txt

Loaded 1000 sentences.

Finished loading

INFO:lm:[train] step:50, loss:8.790, ppl:3463.886, smoothed_ppl:6567.158, speed:3.475

Found 1 shards at data/dev/sentence_file_*

sampled 4 shards at data/dev/sentence_file_*

Loading data from: data/dev/sentence_file_2.txt

Loaded 1000 sentences.

Finished loading

Found 1 shards at data/dev/sentence_file_*

sampled 4 shards at data/dev/sentence_file_*

Loading data from: data/dev/sentence_file_2.txt

Loaded 1000 sentences.

Finished loading

INFO:lm:Average dev loss from batch 1 to 10 is 8.6397351265

Loading data from: data/dev/sentence_file_2.txt

Loaded 1000 sentences.

Finished loading

Loading data from: data/dev/sentence_file_2.txt

Loaded 1000 sentences.

Finished loading

INFO:lm:Average dev loss from batch 11 to 20 is 8.5503274918

Loading data from: data/dev/sentence_file_2.txt

Loaded 1000 sentences.

Finished loading

Loading data from: data/dev/sentence_file_2.txt

Loaded 1000 sentences.

Finished loading

INFO:lm:Average dev loss from batch 21 to 30 is 8.5320512772

Loading data from: data/dev/sentence_file_2.txt

Loaded 1000 sentences.

Finished loading

Loading data from: data/dev/sentence_file_2.txt

Loaded 1000 sentences.

Finished loading

INFO:lm:Average dev loss from batch 31 to 40 is 8.5205733299

INFO:lm:Average dev loss from batch 41 to 50 is 8.5188814163

INFO:lm:valid ppl 5149.21093063
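For reading the log above: perplexity is conventionally the exponential of the average per-token cross-entropy loss. The logged loss and smoothed_ppl values look consistent with that relation. This is an observation from the printed numbers, not a statement about how the project computes them. A quick check:

```python
import math


def perplexity(mean_loss):
    """Perplexity as the exponential of a mean cross-entropy loss."""
    return math.exp(mean_loss)


# (loss, smoothed_ppl) pairs copied from the training log above.
logged = [(10.787, 48392.440), (9.203, 9923.126), (8.790, 6567.158)]

for loss, smoothed_ppl in logged:
    rel_err = abs(perplexity(loss) - smoothed_ppl) / smoothed_ppl
    print("loss=%.3f exp(loss)=%.1f logged=%.1f rel_err=%.1e"
          % (loss, perplexity(loss), smoothed_ppl, rel_err))
```

The small remaining differences are within what rounding the loss to three decimals would produce.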

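bilm.py (./LAC_demo/bilm.py) provides the elmo_encoder interface for obtaining semantic representations from the pre-trained ELMo model, so that users can quickly transfer ELMo representations to downstream tasks. Taking the LAC task as an example, the main steps for transferring the pre-trained ELMo representations to LAC are as follows:

1) Build the LAC network structure and load the pre-trained ELMo model parameters. bilm.py (./LAC_demo/bilm.py) provides init_pretraining_params, an interface function for loading the pre-trained model.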

#step1: create_lac_model()

#step2: load pretrained ELMo model

from bilm import init_pretraining_params

init_pretraining_params(exe, args.pretrain_elmo_model_path,

fluid.default_main_program())

2) Convert the input data to word_ids using the ELMo vocabulary (data/vocabulary_min5k.txt)

3) Use the elmo_encoder interface to obtain the ELMo embedding

from bilm import elmo_encoder

elmo_embedding = elmo_encoder(word_ids)

4) Concatenate the ELMo embedding with LAC's original word_embedding to obtain the final embedding

word_embedding=fluid.layers.concat(input=[elmo_embedding, word_embedding], axis=1)
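Step 2 above has no accompanying code. A minimal Python 3 sketch, assuming the vocabulary file holds one token per line with the line index as the id, and that it contains an <UNK> entry (an <UNK> token does appear in the inference output later in this post, but the file layout is an assumption). load_vocab and to_word_ids are hypothetical helpers, not functions from bilm.py.

```python
def load_vocab(path):
    """Map each token to its line index. Assumes one token per line."""
    with open(path, encoding="utf-8") as f:
        return {line.rstrip("\n"): idx for idx, line in enumerate(f)}


def to_word_ids(sentence, vocab, unk_token="<UNK>"):
    """Convert a space-separated, pre-segmented sentence to a list of ids.

    Tokens missing from the vocabulary fall back to the id of unk_token.
    """
    unk_id = vocab[unk_token]
    return [vocab.get(token, unk_id) for token in sentence.split()]
```

A call such as to_word_ids("中国 经济 发展", load_vocab("data/vocabulary_min5k.txt")) would then give the id list for one sentence; how those ids are batched and fed to elmo_encoder is left to the project's own data reader.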

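For a concrete example, run train.py in the LAC_demo directory to train, or use run.sh for a quick start. Training parameters can be edited directly or passed on the command line. Run python train.py -h for detailed parameter information.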

!chmod 777 LAC_demo/run.sh

!./LAC_demo/run.sh

----------- Configuration Arguments -----------

base_learning_rate: 0.001

batch_size: 32

bigru_num: 2

corpus_proportion_list: [1.0]

corpus_type_list: ['train']

crf_learning_rate: 0.2

elmo_dict_dir: data/vocabulary_min5k.txt

elmo_l2_coef: 0.001

emb_learning_rate: 5

eval_window: 20

grnn_hidden_dim: 256

label_dict_path: LAC_demo/data/tag.dic

model_save_dir: LAC_demo/model

num_iterations: 200

pretrain_elmo_model_path: checkpoints_model/4

save_model_per_batchs: 10

testdata_dir: LAC_demo/data/dev

traindata_dir: LAC_demo/data/train

traindata_shuffle_buffer: 200000

use_gpu: 1

word_dict_path: data/vocabulary_min5k.txt

word_emb_dim: 128

word_rep_dict_path: LAC_demo/conf/q2b.dic

------------------------------------------------

W0815 17:17:41.046203 146 device_context.cc:259] Please NOTE: device: 0, CUDA Capability: 70, Driver API Version: 9.2, Runtime API Version: 9.0

W0815 17:17:41.049320 146 device_context.cc:267] device: 0, cuDNN Version: 7.3.

Load model: checkpoints_model/4/embedding_para

Load model: checkpoints_model/4/fw_layer1_gate_w

Load model: checkpoints_model/4/lstmp_0.w_0

Load model: checkpoints_model/4/lstmp_0.w_1

Load model: checkpoints_model/4/lstmp_0.b_0

Load model: checkpoints_model/4/fw_layer2_gate_w

Load model: checkpoints_model/4/lstmp_1.w_0

Load model: checkpoints_model/4/lstmp_1.w_1

Load model: checkpoints_model/4/lstmp_1.b_0

Load model: checkpoints_model/4/bw_layer1_gate_w

Load model: checkpoints_model/4/lstmp_2.w_0

Load model: checkpoints_model/4/lstmp_2.w_1

Load model: checkpoints_model/4/lstmp_2.b_0

Load model: checkpoints_model/4/bw_layer2_gate_w

Load model: checkpoints_model/4/lstmp_3.w_0

Load model: checkpoints_model/4/lstmp_3.w_1

Load model: checkpoints_model/4/lstmp_3.b_0

Load model: checkpoints_model/4/concat_1.tmp_0

Load model: checkpoints_model/4/concat_2.tmp_0

Load model: checkpoints_model/4/learning_rate_0

Load pretraining parameters from checkpoints_model/4.
batch_id:0, avg_cost:67.85599 [train] batch_id:1, precision:[0.00982318], recall:[0.01136364], f1:[0.01053741] [test] batch_id:1, precision:[0.], recall:[0.], f1:0 cur_batch_id:1, last 10 batchs, time_cost:4.88927698135 batch_id:1, avg_cost:169.2442 batch_id:2, avg_cost:368.46252 batch_id:3, avg_cost:176.41377 batch_id:4, avg_cost:342.08368 batch_id:5, avg_cost:543.21936 batch_id:6, avg_cost:664.42163 train corpus finish a pass of training batch_id:7, avg_cost:928.9917 batch_id:8, avg_cost:1002.1571 batch_id:9, avg_cost:1357.6613 batch_id:10, avg_cost:1109.4237 [train] batch_id:11, precision:[0.05791167], recall:[0.04997476], f1:[0.05365127] [test] batch_id:11, precision:[0.03685801], recall:[0.02287214], f1:[0.02822767] cur_batch_id:11, last 10 batchs, time_cost:6.34379911423 batch_id:11, avg_cost:937.8706 batch_id:12, avg_cost:796.0739 batch_id:13, avg_cost:512.14636 train corpus finish a pass of training batch_id:14, avg_cost:791.7085 batch_id:15, avg_cost:581.1838 batch_id:16, avg_cost:610.3627 batch_id:17, avg_cost:414.55243 batch_id:18, avg_cost:503.47357 batch_id:19, avg_cost:484.46002 batch_id:20, avg_cost:343.6926 [train] batch_id:21, precision:[0.07392197], recall:[0.06077659], f1:[0.06670784] [test] batch_id:21, precision:[0.05386139], recall:[0.05099363], f1:[0.05238829] cur_batch_id:21, last 10 batchs, time_cost:6.21827197075 train corpus finish a pass of training batch_id:21, avg_cost:397.268 batch_id:22, avg_cost:410.65955 batch_id:23, avg_cost:402.19443 batch_id:24, avg_cost:347.045 batch_id:25, avg_cost:352.65454 batch_id:26, avg_cost:415.6413 batch_id:27, avg_cost:240.83746 train corpus finish a pass of training batch_id:28, avg_cost:364.97473 batch_id:29, avg_cost:370.4625 batch_id:30, avg_cost:350.3642 [train] batch_id:31, precision:[0.12244898], recall:[0.10928681], f1:[0.1154941] [test] batch_id:31, precision:[0.13845376], recall:[0.10273716], f1:[0.11795093] cur_batch_id:31, last 10 batchs, time_cost:6.15953683853 batch_id:31, 
avg_cost:327.22958 batch_id:32, avg_cost:302.98547 batch_id:33, avg_cost:364.05322 batch_id:34, avg_cost:327.11533 train corpus finish a pass of training batch_id:35, avg_cost:256.9819 batch_id:36, avg_cost:396.3713 batch_id:37, avg_cost:305.85535 batch_id:38, avg_cost:286.81573 batch_id:39, avg_cost:297.61414 batch_id:40, avg_cost:325.46326 [train] batch_id:41, precision:[0.16746682], recall:[0.15073716], f1:[0.15866221] [test] batch_id:41, precision:[0.13368549], recall:[0.12335958], f1:[0.12831513] cur_batch_id:41, last 10 batchs, time_cost:6.11847496033 batch_id:41, avg_cost:367.14523 train corpus finish a pass of training batch_id:42, avg_cost:272.13287 batch_id:43, avg_cost:280.3763 batch_id:44, avg_cost:299.41714 batch_id:45, avg_cost:316.0221 batch_id:46, avg_cost:385.01553 batch_id:47, avg_cost:302.57825 batch_id:48, avg_cost:386.66562 train corpus finish a pass of training batch_id:49, avg_cost:319.39206 batch_id:50, avg_cost:243.25087 [train] batch_id:51, precision:[0.17085137], recall:[0.16188132], f1:[0.16624544] [test] batch_id:51, precision:[0.08670715], recall:[0.09636295], f1:[0.09128041] cur_batch_id:51, last 10 batchs, time_cost:6.47385501862 batch_id:51, avg_cost:278.06604 batch_id:52, avg_cost:356.03763 batch_id:53, avg_cost:245.61984 batch_id:54, avg_cost:272.50836 batch_id:55, avg_cost:165.03854 train corpus finish a pass of training batch_id:56, avg_cost:261.45743 batch_id:57, avg_cost:330.75977 batch_id:58, avg_cost:287.66693 batch_id:59, avg_cost:244.21954 batch_id:60, avg_cost:313.50772 [train] batch_id:61, precision:[0.18973561], recall:[0.18851404], f1:[0.18912285] [test] batch_id:61, precision:[0.20518519], recall:[0.20772403], f1:[0.2064468] cur_batch_id:61, last 10 batchs, time_cost:6.45753288269 batch_id:61, avg_cost:233.46622 batch_id:62, avg_cost:320.61487 train corpus finish a pass of training batch_id:63, avg_cost:237.17879 batch_id:64, avg_cost:238.57405 batch_id:65, avg_cost:198.45706 batch_id:66, avg_cost:220.49417 
batch_id:67, avg_cost:259.89157 batch_id:68, avg_cost:280.78397 batch_id:69, avg_cost:251.13873 train corpus finish a pass of training batch_id:70, avg_cost:181.54883 [train] batch_id:71, precision:[0.18741336], recall:[0.18903803], f1:[0.18822219] [test] batch_id:71, precision:[0.21155347], recall:[0.2032246], f1:[0.20730541] cur_batch_id:71, last 10 batchs, time_cost:6.2163169384 batch_id:71, avg_cost:204.51677 batch_id:72, avg_cost:225.99988 batch_id:73, avg_cost:220.11269 batch_id:74, avg_cost:233.32338 batch_id:75, avg_cost:225.42218 batch_id:76, avg_cost:234.40227 train corpus finish a pass of training batch_id:77, avg_cost:185.35623 batch_id:78, avg_cost:188.9462 batch_id:79, avg_cost:235.40083 batch_id:80, avg_cost:185.37497 [train] batch_id:81, precision:[0.21812822], recall:[0.22555245], f1:[0.22177822] [test] batch_id:81, precision:[0.19681677], recall:[0.19010124], f1:[0.19340072] cur_batch_id:81, last 10 batchs, time_cost:6.11792683601 batch_id:81, avg_cost:177.65024 batch_id:82, avg_cost:177.92648 batch_id:83, avg_cost:182.11859 train corpus finish a pass of training batch_id:84, avg_cost:155.43636 batch_id:85, avg_cost:165.91559 batch_id:86, avg_cost:143.97029 batch_id:87, avg_cost:192.8786 batch_id:88, avg_cost:222.1808 batch_id:89, avg_cost:178.76294 batch_id:90, avg_cost:163.18944 [train] batch_id:91, precision:[0.2292534], recall:[0.22723521], f1:[0.22823985] [test] batch_id:91, precision:[0.21018624], recall:[0.20734908], f1:[0.20875802] cur_batch_id:91, last 10 batchs, time_cost:6.23571896553 train corpus finish a pass of training batch_id:91, avg_cost:167.80344 batch_id:92, avg_cost:199.44887 batch_id:93, avg_cost:174.96165 batch_id:94, avg_cost:151.32129 batch_id:95, avg_cost:206.15904 batch_id:96, avg_cost:194.63501 batch_id:97, avg_cost:187.91647 train corpus finish a pass of training batch_id:98, avg_cost:160.79263 batch_id:99, avg_cost:112.24432 batch_id:100, avg_cost:157.16174 [train] batch_id:101, precision:[0.25387548], 
recall:[0.25291139], f1:[0.25339252] [test] batch_id:101, precision:[0.17872178], recall:[0.17510311], f1:[0.17689394] cur_batch_id:101, last 10 batchs, time_cost:6.46575498581 batch_id:101, avg_cost:134.96227 batch_id:102, avg_cost:139.1122 batch_id:103, avg_cost:142.30022 batch_id:104, avg_cost:92.17198 train corpus finish a pass of training batch_id:105, avg_cost:146.72908 batch_id:106, avg_cost:157.94135 batch_id:107, avg_cost:132.82204 batch_id:108, avg_cost:120.6241 batch_id:109, avg_cost:135.41524 batch_id:110, avg_cost:124.86472 [train] batch_id:111, precision:[0.27025], recall:[0.27739287], f1:[0.27377485] [test] batch_id:111, precision:[0.23], recall:[0.22422197], f1:[0.22707424] cur_batch_id:111, last 10 batchs, time_cost:6.20078611374 batch_id:111, avg_cost:163.32486 train corpus finish a pass of training batch_id:112, avg_cost:155.48419 batch_id:113, avg_cost:138.0616 batch_id:114, avg_cost:147.8273 batch_id:115, avg_cost:118.42804 batch_id:116, avg_cost:97.26342 batch_id:117, avg_cost:145.0313 batch_id:118, avg_cost:125.519844 train corpus finish a pass of training batch_id:119, avg_cost:150.58965 batch_id:120, avg_cost:153.0031 [train] batch_id:121, precision:[0.26091587], recall:[0.26893524], f1:[0.26486486] [test] batch_id:121, precision:[0.24603175], recall:[0.23247094], f1:[0.23905919] cur_batch_id:121, last 10 batchs, time_cost:6.15653705597 batch_id:121, avg_cost:142.9573 batch_id:122, avg_cost:150.14717 batch_id:123, avg_cost:127.08771 batch_id:124, avg_cost:194.92033 batch_id:125, avg_cost:232.15514 train corpus finish a pass of training batch_id:126, avg_cost:136.94482 batch_id:127, avg_cost:166.16107 batch_id:128, avg_cost:168.60643 batch_id:129, avg_cost:143.25623 batch_id:130, avg_cost:179.61394 [train] batch_id:131, precision:[0.27293696], recall:[0.27483871], f1:[0.27388453] [test] batch_id:131, precision:[0.2263029], recall:[0.24259468], f1:[0.23416576] cur_batch_id:131, last 10 batchs, time_cost:6.04580497742 batch_id:131, 
avg_cost:225.20033 batch_id:132, avg_cost:266.11258 train corpus finish a pass of training batch_id:133, avg_cost:194.00279 batch_id:134, avg_cost:206.44022 batch_id:135, avg_cost:198.67245 batch_id:136, avg_cost:119.586914 batch_id:137, avg_cost:224.02942 batch_id:138, avg_cost:164.80438 batch_id:139, avg_cost:172.94305 train corpus finish a pass of training batch_id:140, avg_cost:157.12622 [train] batch_id:141, precision:[0.2584885], recall:[0.25884288], f1:[0.25866557] [test] batch_id:141, precision:[0.15638016], recall:[0.15485564], f1:[0.15561417] cur_batch_id:141, last 10 batchs, time_cost:6.27458119392 batch_id:141, avg_cost:151.66109 batch_id:142, avg_cost:182.27028 batch_id:143, avg_cost:225.22607 batch_id:144, avg_cost:175.37704 batch_id:145, avg_cost:237.0043 batch_id:146, avg_cost:184.9229 train corpus finish a pass of training batch_id:147, avg_cost:198.18666 batch_id:148, avg_cost:201.46677 batch_id:149, avg_cost:218.22818 batch_id:150, avg_cost:206.15642 [train] batch_id:151, precision:[0.23468576], recall:[0.22292191], f1:[0.22865263] [test] batch_id:151, precision:[0.20527961], recall:[0.2215973], f1:[0.21312658] cur_batch_id:151, last 10 batchs, time_cost:6.52291107178 batch_id:151, avg_cost:150.58075 batch_id:152, avg_cost:213.98236 batch_id:153, avg_cost:229.63696 train corpus finish a pass of training batch_id:154, avg_cost:211.92928 batch_id:155, avg_cost:213.10037 batch_id:156, avg_cost:166.47794 batch_id:157, avg_cost:169.2164 batch_id:158, avg_cost:185.70042 batch_id:159, avg_cost:145.64087 batch_id:160, avg_cost:140.44785 [train] batch_id:161, precision:[0.24801812], recall:[0.24766751], f1:[0.24784269] [test] batch_id:161, precision:[0.26692607], recall:[0.25721785], f1:[0.26198205] cur_batch_id:161, last 10 batchs, time_cost:6.10014605522 train corpus finish a pass of training batch_id:161, avg_cost:147.52998 batch_id:162, avg_cost:168.52232 batch_id:163, avg_cost:180.75197 batch_id:164, avg_cost:142.51047 batch_id:165, 
avg_cost:175.90273 batch_id:166, avg_cost:127.813576 batch_id:167, avg_cost:254.80716 train corpus finish a pass of training batch_id:168, avg_cost:136.56233 batch_id:169, avg_cost:160.04794 batch_id:170, avg_cost:163.88916 [train] batch_id:171, precision:[0.25535625], recall:[0.26002029], f1:[0.25766717] [test] batch_id:171, precision:[0.24231465], recall:[0.2512186], f1:[0.2466863] cur_batch_id:171, last 10 batchs, time_cost:6.39605212212 batch_id:171, avg_cost:152.44135 batch_id:172, avg_cost:150.23276 batch_id:173, avg_cost:154.77087 batch_id:174, avg_cost:150.43054 train corpus finish a pass of training batch_id:175, avg_cost:157.85165 batch_id:176, avg_cost:158.63647 batch_id:177, avg_cost:148.5528 batch_id:178, avg_cost:133.47305 batch_id:179, avg_cost:131.14462 batch_id:180, avg_cost:142.40317 [train] batch_id:181, precision:[0.26027066], recall:[0.274815], f1:[0.26734517] [test] batch_id:181, precision:[0.28191298], recall:[0.29396325], f1:[0.28781204] cur_batch_id:181, last 10 batchs, time_cost:6.65478801727 batch_id:181, avg_cost:100.20147 train corpus finish a pass of training batch_id:182, avg_cost:142.5812 batch_id:183, avg_cost:133.92157 batch_id:184, avg_cost:101.42218 batch_id:185, avg_cost:129.38632 batch_id:186, avg_cost:128.76445 batch_id:187, avg_cost:143.26361 batch_id:188, avg_cost:124.07858 train corpus finish a pass of training batch_id:189, avg_cost:126.99987 batch_id:190, avg_cost:123.342804 [train] batch_id:191, precision:[0.25712763], recall:[0.26798112], f1:[0.26244221] [test] batch_id:191, precision:[0.20256992], recall:[0.20097488], f1:[0.20176925] cur_batch_id:191, last 10 batchs, time_cost:6.3188970089 batch_id:191, avg_cost:105.64111 batch_id:192, avg_cost:112.42756 batch_id:193, avg_cost:131.16568 batch_id:194, avg_cost:108.90467 batch_id:195, avg_cost:130.49854 train corpus finish a pass of training batch_id:196, avg_cost:142.66708 batch_id:197, avg_cost:124.18568 batch_id:198, avg_cost:101.083374 batch_id:199, avg_cost:118.57515

# Run inference with the frozen parameters. Parameter details are available via python infer.py -h

!python LAC_demo/infer.py --corpus_type_list test --corpus_proportion_list 1

1 sample's result: <UNK>/n 电脑/vn 对/v-I 胎儿/v-I 影响/vn-B 大/v-I 吗/a

2 sample's result: 这个/r 跟/p 我们/ns 一直/p 传承/n 《/p 易经/n 》/n 的/u 精神/n 是/v-I 分/v 不/d 开/v 的/u

3 sample's result: 他们/p 不/r 但/ad 上/v-I 名医/v-I 门诊/n ,/w 还/n 兼/ns-I 作/ns-I 门诊/n 医生/v-I 的/n 顾问/v-I 团/nt

4 sample's result: 负责/n 外商/v-I 投资/v-I 企业/n 和/v-I 外国/v-I 企业/n 的/u 税务/nr-I 登记/v-I ,/w 纳税/n 申报/vn 和/n 税收/vn 资料/n 的/u 管理/n ,/w 全面/c 掌握/n 税收/vn 信息/n

5 sample's result: 采用/ns-I 弹性/ns-I 密封/ns-I 结构/n ,/w 实现/n 零/v-B 间隙/v-I

6 sample's result: 要/r 做/n 好/p 这/n 三/p 件/vn 事/n ,/w 支行/q 从/q 风险/n 管理/p 到/a 市场/q 营销/n 策划/c 都/p 必须/vn 专业/n 到位/vn

7 sample's result: 那么/nz-B ,/r 请/v-I 你/v-I 一定/nz-B 要/d-I 幸福/ad ./v-I

8 sample's result: 叉车/ns-I 在/ns-I 企业/n 的/u 物流/n 系统/vn 中/ns-I 扮演/ns-I 着/v-I 非常/q 重要/n 的/u 角色/n ,/w 是/u 物料/vn 搬运/ns-I 设备/n 中/vn 的/u 主力/ns-I 军/v-I

9 sample's result: 我/r 真/t 的/u 能够/vn 有/ns-I 机会/ns-I 拍摄/v-I 这部/vn 电视/ns-I 剧/v-I 么/vn

10 sample's result: 这种/r 情况/n 应该/v-I 是/v-I 没/n 有/p 危害/n 的/u

Click the link to try this project hands-on in AI Studio: https://aistudio.baidu.com/aistudio/projectdetail/124374

Source: oschina

Link: https://my.oschina.net/u/4067628/blog/4256791