Android Framework: Binder (5) - Cross-Process Calls to a Native Service

I. Overview of Native Service Calls

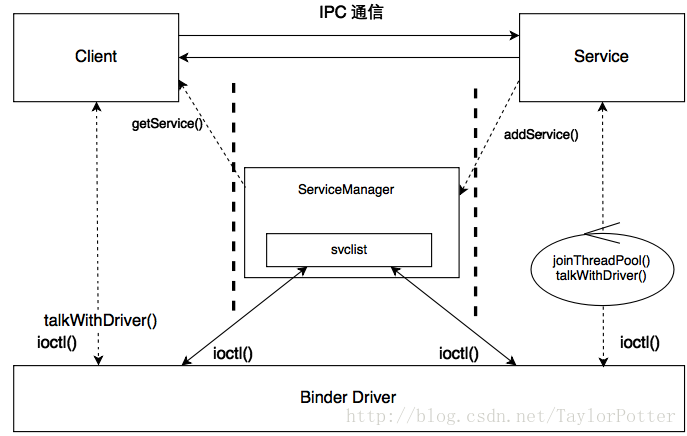

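The previous article, on Native Service registration, already showed a Client sending a request to a Server: there the Native Service was the Client and ServiceManager was the Server.

This article looks at how a Client calls a Server's methods across processes through the Binder driver, from the point of view of invoking a Native Service. Camera Service is again the example.

Without further ado, here is the diagram:

The key points of the diagram above are:

1. Before a Client can call a Service across processes, it must first query ServiceManager (itself a cross-process call) and obtain a flattened binder object containing the Service's handle. From that it constructs a proxy object for the Service in the Client process, and calls the Service's methods through the proxy.

2. During initialization a Service registers itself with ServiceManager across processes, then sets up its own thread pool, which keeps polling the Binder driver for Client requests addressed to it.

3. Client, Service, and ServiceManager are separate processes running in user space, while the Binder driver runs in kernel space. User processes cannot access each other's address space, but each can call into the kernel, so a cross-process call is in essence commands and data relayed through the Binder driver in kernel space. User-space processes exchange data with the Binder driver through ioctl().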
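The three points above can be sketched in a few lines of C++. This is only a toy model under loud assumptions: ToyServiceManager, ToyProxy, and getProxy are hypothetical names invented for illustration, everything runs in one process, and no Binder driver is involved. It shows just the shape of the flow: register a name, look the name up to get a handle, and build a proxy around that handle.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>

// Hypothetical stand-in for ServiceManager's name -> handle table.
class ToyServiceManager {
public:
    // Point 2: a service registers itself under a name.
    void addService(const std::string& name, uint32_t handle) {
        assert(handle != 0);  // handle 0 is reserved for ServiceManager itself
        table_[name] = handle;
    }

    // Point 1: a client looks a service up by name.
    // Returns true and stores the handle in *outHandle when the name is known.
    bool checkService(const std::string& name, uint32_t* outHandle) const {
        std::map<std::string, uint32_t>::const_iterator it = table_.find(name);
        if (it == table_.end()) return false;
        *outHandle = it->second;
        return true;
    }

private:
    std::map<std::string, uint32_t> table_;
};

// Hypothetical client-side proxy: all it needs to remember is the handle.
struct ToyProxy {
    uint32_t handle;
};

// Look the service up, then build a proxy from the returned handle.
bool getProxy(const ToyServiceManager& sm, const std::string& name, ToyProxy* outProxy) {
    uint32_t handle = 0;
    if (!sm.checkService(name, &handle)) return false;
    outProxy->handle = handle;
    return true;
}
```

In real Binder both the registration and the lookup are themselves cross-process transactions, which is exactly what the rest of this article traces.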

II. Native Service Calls in Detail

How a Client process calls a CameraService method:

frameworks/av/camera/CameraBase.cpp contains a call into the camera service:

template <typename TCam, typename TCamTraits>

int CameraBase<TCam, TCamTraits>::getNumberOfCameras() {

const sp<::android::hardware::ICameraService> cs = getCameraService();

if (!cs.get()) {

// as required by the public Java APIs

return 0;

}

int32_t count;

binder::Status res = cs->getNumberOfCameras(

::android::hardware::ICameraService::CAMERA_TYPE_BACKWARD_COMPATIBLE,

&count);

if (!res.isOk()) {

ALOGE("Error reading number of cameras: %s",

res.toString8().string());

count = 0;

}

return count;

}

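As shown above, there are two main steps:

1. Obtain the CameraService proxy object;

2. Call the corresponding Service method through the proxy.

To obtain the CameraService proxy, the Client must first query ServiceManager across processes for the Service and get back its handle, construct the proxy from that handle, and only then call the Service's interface methods through the proxy.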

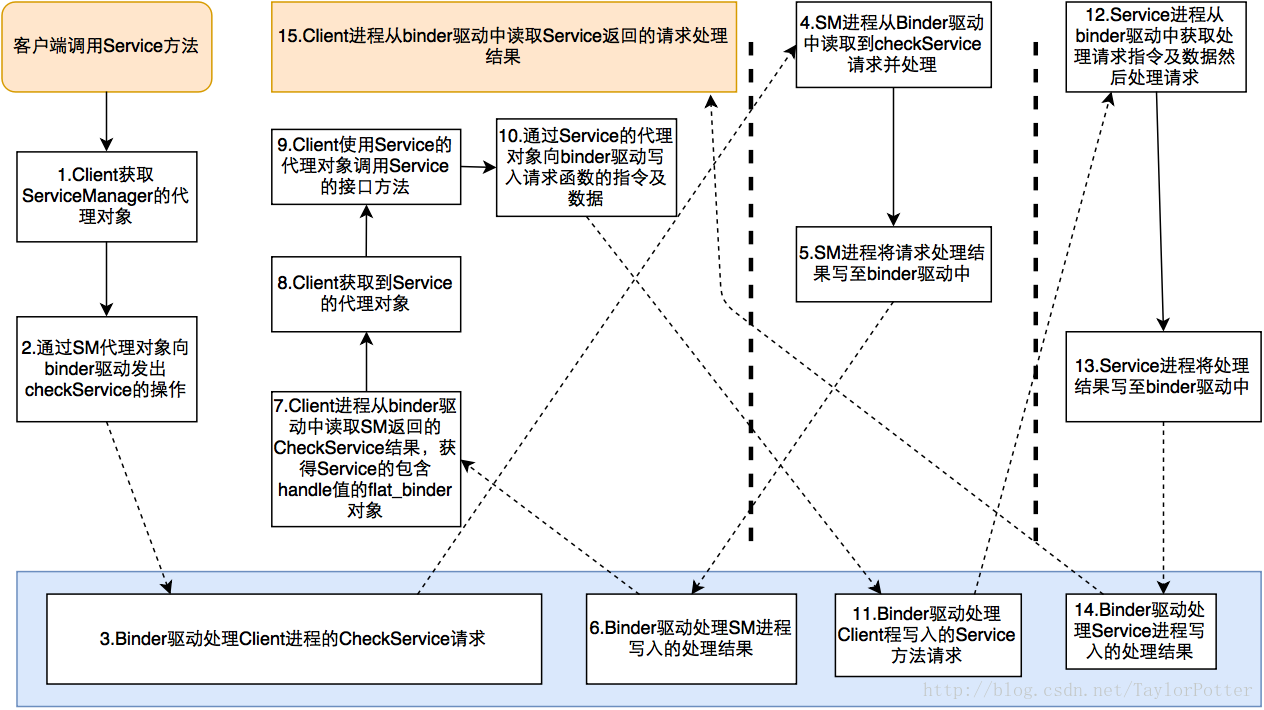

Here is a diagram outlining the overall flow, to help us keep our bearings in what follows:

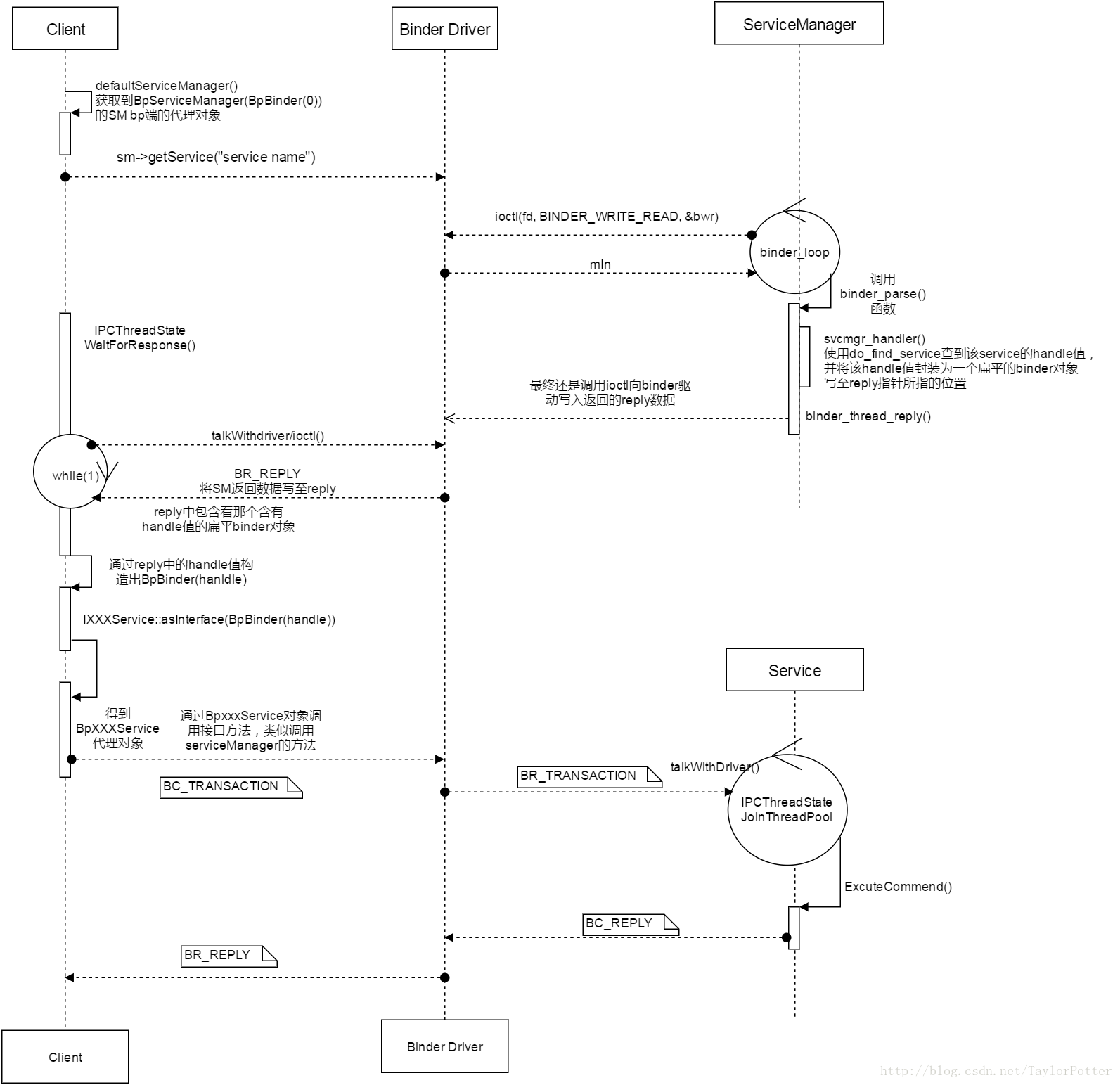

1. The Client obtains the ServiceManager proxy:

//frameworks/av/camera/ndk/impl/ACameraManager.h

const char* kCameraServiceName = "media.camera";

const sp<::android::hardware::ICameraService> CameraBase<TCam, TCamTraits>::getCameraService()

{

if (gCameraService.get() == 0) {

//The previous post showed that this returns the ServiceManager proxy, BpServiceManager(BpBinder(0))

sp<IServiceManager> sm = defaultServiceManager();

sp<IBinder> binder;

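do {

binder = sm->getService(String16(kCameraServiceName));

if (binder != 0) {

break;//stop polling once ServiceManager returns the service

}

//not published yet: the original code logs a warning and sleeps briefly before retrying

} while(true);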

binder->linkToDeath(gDeathNotifier);

gCameraService = interface_cast<::android::hardware::ICameraService>(binder);

}

return gCameraService;

}

2. Querying the Service through the SM proxy:

2.1 The call reaches ServiceManager's getService interface:

//frameworks/native/libs/binder/IServiceManager.cpp

virtual sp<IBinder> getService(const String16& name) const

{

unsigned n;

for (n = 0; n < 5; n++){//try at most 5 times

sp<IBinder> svc = checkService(name);

if (svc != NULL) return svc;

}

return NULL;

}

Next, checkService():

//frameworks/native/libs/binder/IServiceManager.cpp:: BpServiceManager

virtual sp<IBinder> checkService( const String16& name) const

{

Parcel data, reply;

//Write IServiceManager::descriptor, i.e. "android.os.IServiceManager", into data

data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());

data.writeString16(name);

//remote() returns BpBinder(0)

remote()->transact(CHECK_SERVICE_TRANSACTION, data, &reply);

return reply.readStrongBinder();//how reply gets filled in is traced below

}

2.2 As an earlier article showed, remote() here returns BpBinder(0). BpBinder(0)->transact() calls transact() in IPCThreadState, which packs the request (carried in a Parcel) and sends it to the Binder driver.

//frameworks/native/libs/binder/BpBinder.cpp

status_t BpBinder::transact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)//note the flags parameter

{

// Once a binder has died, it will never come back to life.

if (mAlive) {

//mHandle is set in the BpBinder constructor; here it is 0

status_t status = IPCThreadState::self()->transact(

mHandle, code, data, reply, flags);

if (status == DEAD_OBJECT) mAlive = 0;

return status;

}

return DEAD_OBJECT;

}

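The flags parameter of transact() defaults to 0 in BpBinder's header, i.e. the call is not one-way (a one-way call is asynchronous: the caller continues without waiting for a reply):

//frameworks/native/include/binder/BpBinder.h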

class BpBinder : public IBinder

{

public:

BpBinder(int32_t handle);

virtual status_t transact(uint32_t code,

const Parcel& data,

Parcel* reply,

uint32_t flags = 0);

...

}

//Flag values, defined in binder.h

enum transaction_flags {

TF_ONE_WAY = 0x01, /* this is a one-way call: async, no return */

TF_ROOT_OBJECT = 0x04, /* contents are the component's root object */

TF_STATUS_CODE = 0x08, /* contents are a 32-bit status code */

TF_ACCEPT_FDS = 0x10, /* allow replies with file descriptors */

};
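How the one-way bit decides whether the caller waits can be shown with a tiny helper. This is a sketch, not code from libbinder: expectsReply is a hypothetical function, and the TOY_ constants simply copy two values from the enum above so that the snippet compiles without the kernel header.

```cpp
#include <cassert>
#include <cstdint>

// Values copied from the transaction_flags enum quoted above; the TOY_ prefix
// marks these as local illustration constants, not the kernel header's.
enum ToyTransactionFlags {
    TOY_TF_ONE_WAY = 0x01,
    TOY_TF_STATUS_CODE = 0x08
};

// True when the caller has to wait for a reply, i.e. the one-way bit is clear.
inline bool expectsReply(uint32_t flags) {
    return (flags & TOY_TF_ONE_WAY) == 0;
}
```

With the default flags value of 0, expectsReply(0) is true, which matches the synchronous checkService call traced in this article.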

2.3 BpBinder(0) calls transact() in IPCThreadState. transact() first calls writeTransactionData() to repack the data destined for the Binder driver into mOut, then calls waitForResponse() to hand it to the driver and wait for the result:

//frameworks/native/libs/binder/IPCThreadState.cpp

//handle is 0,

//code is CHECK_SERVICE_TRANSACTION,

//data holds the descriptor "android.os.IServiceManager" and the service name

//reply receives the result

//flags is the transaction type

status_t IPCThreadState::transact(int32_t handle,

uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags)

{

//note the BC_TRANSACTION command from the binder protocol

err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, NULL/*status_t* statusBuffer*/);

if (reply) {

err = waitForResponse(reply);

}

}

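2.4 writeTransactionData() repacks the data for the Binder driver into a binder_transaction_data structure, then writes the binder protocol command cmd followed by the data tr into mOut. Here cmd is the BC_TRANSACTION passed down from the previous step: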

//frameworks/native/libs/binder/IPCThreadState.cpp

status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,

int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)

{

binder_transaction_data tr;

tr.target.ptr = 0; /* Don't pass uninitialized stack data to a remote process */

tr.target.handle = handle;

tr.code = code;

tr.flags = binderFlags;

tr.cookie = 0;

tr.sender_pid = 0;

tr.sender_euid = 0;

...

mOut.writeInt32(cmd);

mOut.write(&tr, sizeof(tr));

return NO_ERROR;

}
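The "command word followed by its payload" layout that writeTransactionData() produces in mOut can be reproduced with a plain byte buffer. The sketch below is an illustration only: ToyTransactionData, writeCommand, and readCommand are hypothetical names, and the struct is far smaller than the real binder_transaction_data. The reader mirrors what the driver does later: read 32 bits of command, advance, then copy the payload.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical, much smaller stand-in for binder_transaction_data.
struct ToyTransactionData {
    uint32_t handle;
    uint32_t code;
    uint32_t flags;
};

// Writer side: append the 32-bit command, then the payload, as
// writeTransactionData() does with mOut.
void writeCommand(std::vector<uint8_t>& out, uint32_t cmd, const ToyTransactionData& tr) {
    const uint8_t* c = reinterpret_cast<const uint8_t*>(&cmd);
    const uint8_t* t = reinterpret_cast<const uint8_t*>(&tr);
    out.insert(out.end(), c, c + sizeof(cmd));
    out.insert(out.end(), t, t + sizeof(tr));
}

// Reader side: read one command and its payload starting at pos and advance
// pos past both. Returns false when fewer bytes remain than one full record.
bool readCommand(const std::vector<uint8_t>& in, size_t& pos,
                 uint32_t& cmd, ToyTransactionData& tr) {
    if (pos > in.size() || in.size() - pos < sizeof(cmd) + sizeof(tr)) return false;
    std::memcpy(&cmd, &in[pos], sizeof(cmd));
    pos += sizeof(cmd);
    std::memcpy(&tr, &in[pos], sizeof(tr));
    pos += sizeof(tr);
    return true;
}
```

Because several records can be appended back to back, one ioctl() can carry more than one command, which is what ServiceManager relies on later when it sends BC_FREE_BUFFER and BC_REPLY together.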

2.5 Now look at waitForResponse() and talkWithDriver():

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

{

uint32_t cmd;

int32_t err;

while (1) {

//each iteration starts with talkWithDriver(), which writes the commands and data to the Binder driver

if ((err=talkWithDriver()) < NO_ERROR) break;

err = mIn.errorCheck();

if (err < NO_ERROR) break;

if (mIn.dataAvail() == 0) continue;//nothing returned yet, keep reading

//once data has arrived, read the command and its data and handle them

cmd = (uint32_t)mIn.readInt32();

switch (cmd) {

...

//handle the returned result

...

}

}

...

}

talkWithDriver() puts the data in mOut into a binder_write_read structure bwr and writes it to the Binder driver through ioctl():

status_t IPCThreadState::talkWithDriver(bool doReceive)

{

binder_write_read bwr;

status_t err;

//only read again once everything already in mIn has been consumed

const bool needRead = mIn.dataPosition() >= mIn.dataSize();

const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

bwr.write_size = outAvail;

bwr.write_buffer = (uintptr_t)mOut.data();//point bwr's write_buffer at the data in mOut

...

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)

err = NO_ERROR;

else

err = -errno;

...

return err;

}

3. The Binder driver handles the Client's checkService request to SM:

As covered in the Binder Driver article, this ioctl() ends up in binder_ioctl() in the Binder driver. Let's use this opportunity for a quick review:

3.1 binder_ioctl():

static long binder_ioctl(struct file *filp, unsigned int cmd, unsigned long arg)

{

int ret;

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;//arg carries the binder protocol commands and data

ret = wait_event_interruptible(binder_user_error_wait, binder_stop_on_user_error < 2);

if (ret)

goto err_unlocked;

binder_lock(__func__);

//get the binder_thread that represents the calling thread in this process

thread = binder_get_thread(proc);

...

switch (cmd) {

case BINDER_WRITE_READ:

ret = binder_ioctl_write_read(filp, cmd, arg, thread);

if (ret)

goto err;

break;

}

...

}

3.2 The binder_ioctl_write_read() function:

static int binder_ioctl_write_read(struct file *filp,

unsigned int cmd, unsigned long arg,

struct binder_thread *thread)

{

int ret = 0;

struct binder_proc *proc = filp->private_data;

unsigned int size = _IOC_SIZE(cmd);

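void __user *ubuf = (void __user *)arg;//arg carries the binder protocol commands and data

struct binder_write_read bwr;

//copy the binder_write_read header in from user space

if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {

ret = -EFAULT;

goto out;

}

if (bwr.write_size > 0) {

ret = binder_thread_write(...);

if (ret < 0) {

...

goto out;

}

}

if (bwr.read_size > 0) {

ret = binder_thread_read(...);

if (!list_empty(&proc->todo))//the process todo list still has work

wake_up_interruptible(&proc->wait);//wake a waiting thread

...

}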

out:

return ret;

}

3.3 binder_thread_write:

static int binder_thread_write(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed)

{

uint32_t cmd;

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

while (ptr < end && thread->return_error == BR_OK) {

if (get_user(cmd, (uint32_t __user *)ptr))//read the binder protocol command from the first 32 bits

return -EFAULT;

ptr += sizeof(uint32_t);//what follows the 32-bit command is the data for the driver

...

switch (cmd) {

...

case BC_FREE_BUFFER: break;

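//as analyzed above, the incoming binder protocol command is BC_TRANSACTION

case BC_TRANSACTION:

case BC_REPLY: {

struct binder_transaction_data tr;

if (copy_from_user(&tr, ptr, sizeof(tr)))

return -EFAULT;

ptr += sizeof(tr);

//the last argument is 0 here because cmd is BC_TRANSACTION

binder_transaction(proc, thread, &tr, cmd == BC_REPLY);

break;

}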

...

}

}

return 0;

}

3.4 Now look at binder_transaction():

static void binder_transaction(struct binder_proc *proc,

struct binder_thread *thread,

struct binder_transaction_data *tr, int reply)

{

struct binder_transaction *t;

struct binder_work *tcomplete;

struct binder_proc *target_proc;

struct binder_thread *target_thread = NULL;

struct binder_node *target_node = NULL;

struct list_head *target_list;

wait_queue_head_t *target_wait;

struct binder_transaction *in_reply_to = NULL;

...

if (reply) {

in_reply_to = thread->transaction_stack;

binder_set_nice(in_reply_to->saved_priority);

thread->transaction_stack = in_reply_to->to_parent;

target_thread = in_reply_to->from;

...

target_proc = target_thread->proc;

} else {

if (tr->target.handle) {//a non-zero handle means a server other than ServiceManager

struct binder_ref *ref;

//look up the reference for tr->target.handle in this process's red-black tree to find the target node

ref = binder_get_ref(proc, tr->target.handle, true);

...

target_node = ref->node;

} else {

target_node = binder_context_mgr_node;

...

}

target_proc = target_node->proc;//the target process

...

}

//use the target thread's todo list if there is a target thread, otherwise the target process's

if (target_thread) {

e->to_thread = target_thread->pid;

target_list = &target_thread->todo;

target_wait = &target_thread->wait;

} else {

target_list = &target_proc->todo;

target_wait = &target_proc->wait;

}

t = kzalloc(sizeof(*t), GFP_KERNEL);//allocate the binder_transaction node

...

tcomplete = kzalloc(sizeof(*tcomplete), GFP_KERNEL);//allocate a binder_work node

...

//thread comes from binder_get_thread(proc) in binder_ioctl(...), i.e. the current thread

if (!reply && !(tr->flags & TF_ONE_WAY))

t->from = thread;

else

t->from = NULL;

...

//fill in t, packing the data to transfer into it

...

t->work.type = BINDER_WORK_TRANSACTION;//set the binder_work type for this transaction

//append the binder_transaction node to target_list (the target todo queue; here, the one in the ServiceManager process)

list_add_tail(&t->work.entry, target_list);

tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;

list_add_tail(&tcomplete->entry, &thread->todo);

if (target_wait) {

if (reply || !(t->flags & TF_ONE_WAY)) {

preempt_disable();

wake_up_interruptible_sync(target_wait);

preempt_enable_no_resched();

}

else {

wake_up_interruptible(target_wait); //wake the target

}

}

return;

...

}

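The basic job of binder_transaction() is to create a binder_transaction node on behalf of the sender and append it to the todo queue of the target process, or of the appropriate thread within it.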
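The queueing decision at the heart of binder_transaction() fits in a few lines. The following is a simplified model, not kernel code: ToyThread, ToyProc, and toyTransaction are hypothetical names, and std::deque stands in for the kernel's list_head queues. It keeps only two facts from the excerpt above: the transaction goes to the target thread's todo queue when a target thread is known and to the target process's queue otherwise, and the caller always gets a "transaction complete" item on its own queue.

```cpp
#include <cassert>
#include <deque>

enum ToyWorkType { TOY_WORK_TRANSACTION, TOY_WORK_TRANSACTION_COMPLETE };

struct ToyThread { std::deque<ToyWorkType> todo; };
struct ToyProc   { std::deque<ToyWorkType> todo; };

// Queue a transaction for the target and a completion notice for the caller.
// targetThread may be null, in which case the target process's queue is used.
void toyTransaction(ToyThread& caller, ToyProc& targetProc, ToyThread* targetThread) {
    std::deque<ToyWorkType>& targetList =
        targetThread ? targetThread->todo : targetProc.todo;
    targetList.push_back(TOY_WORK_TRANSACTION);
    caller.todo.push_back(TOY_WORK_TRANSACTION_COMPLETE);
}
```

For a request such as checkService there is no target thread yet, so the item lands on the ServiceManager process queue. For the reply, the target thread is the Client thread recorded in the original transaction, so the item lands on that thread's queue.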

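4. The SM process fetches the checkService request from the Binder driver and handles it:

From the article Android Framework: Binder (2) - Service Manager we know that after initialization ServiceManager sits in binder_loop(), an endless loop that keeps asking the Binder driver whether any request has been sent to it. When a Client request arrives, binder_parse() parses the data and then hands it to svcmgr_handler() for processing:

4.1 svcmgr_handler():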

int svcmgr_handler(struct binder_state *bs,

struct binder_transaction_data *txn,

struct binder_io *msg,

struct binder_io *reply)

{

struct svcinfo *si;

uint16_t *s;

size_t len;

uint32_t handle;

uint32_t strict_policy;

int allow_isolated;

...

switch(txn->code) {

case SVC_MGR_GET_SERVICE:

case SVC_MGR_CHECK_SERVICE:

s = bio_get_string16(msg, &len);

//find the Service's handle from the name read out of msg

handle = do_find_service(s, len, txn->sender_euid, txn->sender_pid);

if (!handle)

break;

//wrap handle in a flat_binder_object and write it where reply points

bio_put_ref(reply, handle);

return 0;

case SVC_MGR_ADD_SERVICE: break;

case SVC_MGR_LIST_SERVICES: ... return -1;

...

}

}

4.2 bio_put_ref() allocates a new flat_binder_object and packs the handle it was given into this flattened binder object:

//frameworks/native/cmds/servicemanager/binder.c

void bio_put_ref(struct binder_io *bio, uint32_t handle)

{

struct flat_binder_object *obj;

if (handle) obj = bio_alloc_obj(bio);

else obj = bio_alloc(bio, sizeof(*obj));

if (!obj)

return;

obj->flags = 0x7f | FLAT_BINDER_FLAG_ACCEPTS_FDS;

obj->type = BINDER_TYPE_HANDLE;

obj->handle = handle;

obj->cookie = 0;

}

4.3 Back in binder_parse():

//frameworks/native/cmds/servicemanager/binder.c

int binder_parse(struct binder_state *bs, struct binder_io *bio,

uintptr_t ptr, size_t size, binder_handler func)

{

while (ptr < end) {

switch(cmd) {

...

case BR_TRANSACTION: {

//cast so the data is treated as a binder_transaction_data before it is handled

struct binder_transaction_data *txn = (struct binder_transaction_data *) ptr;

if (func) {

//parse the source data txn into a binder_io and store it in msg

bio_init_from_txn(&msg, txn);

//svcmgr_handler processes the request and writes the result where reply points

res = func(bs, txn, &msg, &reply);

if (txn->flags & TF_ONE_WAY) {

binder_free_buffer(bs, txn->data.ptr.buffer);

} else {

//send the data for the Client back to the Binder driver here; note the flat_binder_object inside reply

//txn carries the details of this binder_transaction_data

binder_send_reply(bs, &reply, txn->data.ptr.buffer, res);

}

}

...

}

case BR_REPLY: ... break;

...

default:

ALOGE("parse: OOPS %d\n", cmd);

return -1;

}

}

return r;

}

4.4 Next, binder_send_reply():

//frameworks/native/cmds/servicemanager/binder.c

void binder_send_reply(struct binder_state *bs,

struct binder_io *reply,

binder_uintptr_t buffer_to_free,

int status)

{

struct {

uint32_t cmd_free;

binder_uintptr_t buffer;

uint32_t cmd_reply;

struct binder_transaction_data txn;

} __attribute__((packed)) data;

data.cmd_free = BC_FREE_BUFFER;

data.buffer = buffer_to_free;//the request's data buffer (txn->data.ptr.buffer from the previous step), now released

data.cmd_reply = BC_REPLY; //note BC_REPLY: this is what the ServiceManager process sends to the Binder driver

...

if (status) {

...

} else {

...

data.txn.data.ptr.buffer = (uintptr_t)reply->data0;

data.txn.data.ptr.offsets = (uintptr_t)reply->offs0;

}

binder_write(bs, &data, sizeof(data));//write the newly packed data to the Binder driver

}
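Why the struct in binder_send_reply() is declared packed can be seen from a small experiment. The sketch below rests on stated assumptions: ToyReplyHeader is a hypothetical cut-down version of that struct (the transaction payload is left out), and __attribute__((packed)) is a GCC/Clang extension, the same one the original uses. The driver reads the write buffer as a 32-bit command immediately followed by its argument, so the compiler must not insert padding between cmd_free and buffer.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical cut-down version of the struct in binder_send_reply().
struct ToyReplyHeader {
    uint32_t cmd_free;   // would hold BC_FREE_BUFFER
    uint64_t buffer;     // argument of the first command
    uint32_t cmd_reply;  // would hold BC_REPLY
} __attribute__((packed));

// With packed, every field starts right where the previous one ends.
inline size_t bufferOffset() { return offsetof(ToyReplyHeader, buffer); }
inline size_t replyCmdOffset() { return offsetof(ToyReplyHeader, cmd_reply); }
```

Without the attribute, a typical 64-bit ABI would align buffer to 8 bytes and leave a 4-byte gap after cmd_free, and the driver would then misread the command stream.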

Now binder_write(), which calls ioctl() to write the packed data to the Binder driver:

//frameworks/native/cmds/servicemanager/binder.c

int binder_write(struct binder_state *bs, void *data, size_t len)

{

struct binder_write_read bwr;

int res;

bwr.write_size = len;

bwr.write_consumed = 0;

bwr.write_buffer = (uintptr_t) data;

bwr.read_size = 0;

bwr.read_consumed = 0;

bwr.read_buffer = 0;

//ServiceManager likewise uses ioctl() to write the reply and txn information, wrapped in a binder_write_read structure, to the Binder driver

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

...

return res;

}

5. The Binder driver handles the result SM sends back to the Client:

5.1 The ioctl() write reaches binder_thread_write() in the Binder driver:

static int binder_thread_write(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed)

{

uint32_t cmd;

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

while (ptr < end && thread->return_error == BR_OK) {

switch (cmd) {

...

case BC_TRANSACTION:

case BC_REPLY: {

struct binder_transaction_data tr;

//we land in binder_transaction() again; its 4th parameter is int reply, and as shown

//above cmd is BC_REPLY this time

binder_transaction(proc, thread, &tr, cmd == BC_REPLY);

break;

}

...

}

}

return 0;

}

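5.2 binder_transaction() is called again, this time in the SM -> Client direction. It wraps SM's result for the checkService request in a binder_transaction structure, then appends that structure to a todo list on the requesting Client side, where the corresponding binder thread in the Client process will pick it up: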

static void binder_transaction(struct binder_proc *proc,

struct binder_thread *thread,

struct binder_transaction_data *tr, int reply)

{

struct binder_transaction *t;

struct binder_work *tcomplete;

struct binder_proc *target_proc;

struct binder_thread *target_thread = NULL;

struct binder_node *target_node = NULL;

struct list_head *target_list;

wait_queue_head_t *target_wait;

//in_reply_to leads back to the thread and process that originally issued this transaction

struct binder_transaction *in_reply_to = NULL;

//this time the reply branch is taken

if (reply) {

in_reply_to = thread->transaction_stack;

binder_set_nice(in_reply_to->saved_priority);

...

thread->transaction_stack = in_reply_to->to_parent;

target_thread = in_reply_to->from;

...

target_proc = target_thread->proc;

}

...

tcomplete = kzalloc(sizeof(*tcomplete), GFP_KERNEL);

...

if (!reply && !(tr->flags & TF_ONE_WAY))

t->from = thread;//record the source thread

else

t->from = NULL;

t->sender_euid = task_euid(proc->tsk);

t->to_proc = target_proc;

t->to_thread = target_thread;

t->code = tr->code;

t->flags = tr->flags;

t->priority = task_nice(current);

t->buffer = binder_alloc_buf(target_proc, tr->data_size,

tr->offsets_size, !reply && (t->flags & TF_ONE_WAY));

t->buffer->allow_user_free = 0;

t->buffer->debug_id = t->debug_id;

t->buffer->transaction = t;

//when reply is true, target_node is still NULL here

t->buffer->target_node = target_node;

...

if (reply) {

binder_pop_transaction(target_thread, in_reply_to);

}

...

t->work.type = BINDER_WORK_TRANSACTION;

//append the binder_transaction node (t->work.entry) to target_list, the target todo queue; tcomplete goes to the calling thread's own todo queue

list_add_tail(&t->work.entry, target_list);

tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;

list_add_tail(&tcomplete->entry, &thread->todo);

if (target_wait) {

if (reply || !(t->flags & TF_ONE_WAY)) {

preempt_disable();

wake_up_interruptible_sync(target_wait);

preempt_enable_no_resched();

}

else {

wake_up_interruptible(target_wait);

}

}

return;

...

}

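As we can see, binder_transaction() wraps tr, which carries the reply data, in the binder_transaction object t, and appends t's binder_work to the todo queue of the source thread, that is, the Client thread that issued the original request.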

6. The Client process reads the result from the Binder driver:

6.1 Back at step 2.3 in the Client process: waitForResponse() keeps calling talkWithDriver(), which performs ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr):

status_t IPCThreadState::talkWithDriver(bool doReceive)

{

//the data the server returned is read from the Binder driver into mIn; it contains the reply and txn information described above

return err;

}

This calls binder_ioctl() in the driver's binder.c again. In binder_ioctl_write_read() that follows, bwr.read_size > 0, so binder_thread_read() runs:

static int binder_thread_read(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed, int non_block)

{

if (t->buffer->target_node) {

struct binder_node *target_node = t->buffer->target_node;

...

cmd = BR_TRANSACTION;

} else {//from the analysis above, t->buffer->target_node is NULL

tr.target.ptr = 0;

tr.cookie = 0;

cmd = BR_REPLY;//so cmd here is BR_REPLY

}

}

6.2 Continuing with the rest of IPCThreadState::waitForResponse(): the cmd read from mIn is BR_REPLY:

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

{

uint32_t cmd;

int32_t err;

while (1) {

if ((err=talkWithDriver()) < NO_ERROR) break;

err = mIn.errorCheck();

if (err < NO_ERROR) break;

if (mIn.dataAvail() == 0) continue;

cmd = (uint32_t)mIn.readInt32();

switch (cmd) {

case BR_TRANSACTION_COMPLETE:if (!reply && !acquireResult) goto finish; break;

...

case BR_REPLY: {

binder_transaction_data tr;

//read the value from mIn into tr

err = mIn.read(&tr, sizeof(tr));

if (reply) {

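//taken unless TF_STATUS_CODE is set; as noted earlier, flags defaults to 0x0

if ((tr.flags & TF_STATUS_CODE) == 0) {

//tr.data.ptr describes the reply buffer that the driver mapped into this process;

//the casts below only reinterpret those addresses so that the reply Parcel can

//reference the data in place, without copying it.

reply->ipcSetDataReference(

//start address of the reply data

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

//start of the offsets array that locates each flat_binder_object in the data

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),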

tr.offsets_size/sizeof(binder_size_t),

freeBuffer, this);

} else {

...

}

} else {

//free the buffer space;

continue;

}

}

goto finish;

//execute any other command

default: err = executeCommand(cmd);if (err != NO_ERROR) goto finish; break;

}

}

finish:

...

return err;

}

At this point, reply in checkService()'s remote()->transact(CHECK_SERVICE_TRANSACTION, data, &reply); holds ServiceManager's return value, delivered by the Binder driver. That was a long road.
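The control flow of waitForResponse() is easier to see once the Parcel handling is stripped away. What follows is a toy model with hypothetical names (ToyReturnCmd, toyWaitForResponse); a std::deque plays the role of mIn, and the real function has more cases and blocks in talkWithDriver() rather than returning when no data is left.

```cpp
#include <cassert>
#include <deque>

// Hypothetical return commands standing in for the driver's BR_* values.
enum ToyReturnCmd { TOY_BR_NOOP, TOY_BR_TRANSACTION_COMPLETE, TOY_BR_REPLY };

// Consume commands from incoming the way waitForResponse() consumes mIn.
// Returns true once the awaited command has been seen, false if the queue
// ran dry first (the real loop would call talkWithDriver() again instead).
bool toyWaitForResponse(std::deque<ToyReturnCmd>& incoming, bool wantReply) {
    while (!incoming.empty()) {
        const ToyReturnCmd cmd = incoming.front();
        incoming.pop_front();
        switch (cmd) {
        case TOY_BR_TRANSACTION_COMPLETE:
            // The driver accepted our request. A one-way caller is done here.
            if (!wantReply) return true;
            break;
        case TOY_BR_REPLY:
            return true;
        default:
            break;  // anything else is skipped in this sketch
        }
    }
    return false;
}
```

A synchronous caller such as checkService therefore sees the completion notice first and keeps looping until the reply itself arrives.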

7. The Client obtains the Service's proxy object:

7.1 Next, the data in reply is converted into a strong reference to an IBinder and returned:

virtual sp<IBinder> checkService( const String16& name) const

{

Parcel data, reply;

//Write IServiceManager::descriptor, i.e. "android.os.IServiceManager", into data

data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());

data.writeString16(name);

//remote() returns BpBinder(0)

remote()->transact(CHECK_SERVICE_TRANSACTION, data, &reply);

return reply.readStrongBinder();//we have now seen where reply comes from

}

Here is readStrongBinder():

//frameworks/native/libs/binder/Parcel.cpp

sp<IBinder> Parcel::readStrongBinder() const

{

sp<IBinder> val;

readStrongBinder(&val);

return val;

}

Next, the readStrongBinder() overload that takes an output parameter:

//frameworks/native/libs/binder/Parcel.cpp

status_t Parcel::readStrongBinder(sp<IBinder>* val) const

{

return unflatten_binder(ProcessState::self(), *this, val);

}

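7.2 unflatten_binder() returns a binder object. Recall that once ServiceManager had found the Service's handle, it wrapped the handle in a flattened binder object, packed that into the reply, and passed it to the Binder driver, which delivered the flattened object to the Client process. Here the Client turns the flattened binder object back into a usable one:

//frameworks/native/libs/binder/Parcel.cpp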

status_t unflatten_binder(const sp<ProcessState>& proc,

const Parcel& in, sp<IBinder>* out)

{

const flat_binder_object* flat = in.readObject(false);

if (flat) {

switch (flat->type) {

case BINDER_TYPE_BINDER:

*out = reinterpret_cast<IBinder*>(flat->cookie);

return finish_unflatten_binder(NULL, *flat, in);

case BINDER_TYPE_HANDLE:

//take the handle out of the returned flattened binder object and construct a BpBinder from it

*out = proc->getStrongProxyForHandle(flat->handle);

return finish_unflatten_binder(

static_cast<BpBinder*>(out->get()), *flat, in);

}

}

return BAD_TYPE;

}

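As we can see, the handle that came from ServiceManager is taken out of the flat_binder_object (by this point the driver has translated it into a handle that is valid in the Client process).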
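The flatten and unflatten steps form a matched pair, which a reduced model makes explicit. This is a sketch under assumptions: ToyFlatObject, flattenHandle, and unflattenHandle are hypothetical, the struct keeps only two of the real flat_binder_object fields, and the handle translation done by the driver in between is ignored.

```cpp
#include <cassert>
#include <cstdint>

enum ToyObjectType { TOY_TYPE_BINDER, TOY_TYPE_HANDLE };

// Hypothetical, reduced version of flat_binder_object.
struct ToyFlatObject {
    ToyObjectType type;
    uint32_t handle;  // meaningful when type is TOY_TYPE_HANDLE
};

// Sender side, like bio_put_ref(): wrap a handle in a flat object.
inline ToyFlatObject flattenHandle(uint32_t handle) {
    ToyFlatObject obj;
    obj.type = TOY_TYPE_HANDLE;
    obj.handle = handle;
    return obj;
}

// Receiver side, like the BINDER_TYPE_HANDLE branch of unflatten_binder():
// returns true and stores the handle only for a remote reference.
inline bool unflattenHandle(const ToyFlatObject& flat, uint32_t* outHandle) {
    if (flat.type != TOY_TYPE_HANDLE) return false;
    *outHandle = flat.handle;
    return true;
}
```

The type tag is what lets the receiver tell a remote reference, which needs a proxy, from a local object, which can be used directly.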

7.3 Next, ProcessState::getStrongProxyForHandle() constructs the BpBinder for that handle. You may recall from the ServiceManager article that the BpBinder(0) for ServiceManager was constructed here as well:

//frameworks/native/libs/binder/ProcessState.cpp

sp<IBinder> ProcessState::getStrongProxyForHandle(int32_t handle)

{

sp<IBinder> result;

AutoMutex _l(mLock);

handle_entry* e = lookupHandleLocked(handle);

if (e != NULL) {

IBinder* b = e->binder;

if (b == NULL || !e->refs->attemptIncWeak(this)) {

//handle is non-zero here; construct a BpBinder(handle) for it

b = new BpBinder(handle);

e->binder = b;

if (b) e->refs = b->getWeakRefs();

result = b;

} else {

result.force_set(b);

e->refs->decWeak(this);

}

}

return result;

}

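At this point the Client holds an IBinder, namely a BpBinder(handle). When we fetched the ServiceManager instance during Native Service registration, we got BpBinder(0): ServiceManager's handle is always 0, while every other service has its own handle recorded in ServiceManager's svclist. Obtaining any other service is therefore much like obtaining ServiceManager itself: through the Binder driver the Client receives a flattened binder object from ServiceManager that contains a handle, and from that handle it constructs the BpBinder for the service.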
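getStrongProxyForHandle() is essentially a per-process cache of proxies keyed by handle. The sketch below models that idea with the standard library instead of Android's sp<> and weak-reference machinery. ToyBpBinder and ToyProcessState are hypothetical names, and unlike the real code this version takes no lock, so it is not thread-safe.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <memory>

// Hypothetical proxy that only remembers its handle.
struct ToyBpBinder {
    explicit ToyBpBinder(int32_t h) : handle(h) {}
    int32_t handle;
};

class ToyProcessState {
public:
    // Return the live proxy for handle if one exists, otherwise create one.
    std::shared_ptr<ToyBpBinder> getStrongProxyForHandle(int32_t handle) {
        std::weak_ptr<ToyBpBinder>& slot = cache_[handle];
        std::shared_ptr<ToyBpBinder> proxy = slot.lock();
        if (!proxy) {
            // No proxy yet, or the old one has been released: build a new one.
            proxy = std::make_shared<ToyBpBinder>(handle);
            slot = proxy;
        }
        return proxy;
    }

private:
    // Weak entries, so the cache itself never keeps a proxy alive.
    std::map<int32_t, std::weak_ptr<ToyBpBinder>> cache_;
};
```

Asking twice for the same handle while the first proxy is still alive yields the same object, which mirrors the else branch (result.force_set(b)) in the excerpt above.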

7.4 This binder = BpBinder(handle) object is then converted by the interface_cast function template:

gCameraService = interface_cast<::android::hardware::ICameraService>(binder);

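How this template expands was covered in detail in section "2.2 Template class substitution" of the previous article, Android Framework: Binder (4) - Native Service Registration, when obtaining the ServiceManager proxy instance, so it is not repeated here.

The conclusion we can draw: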

gCameraService = interface_cast<::android::hardware::ICameraService>(binder);

//can be replaced with:

gCameraService = android::hardware::ICameraService::asInterface(BpBinder(handle));

In the end, ICameraService::asInterface is equivalent to:

android::sp<ICameraService> ICameraService::asInterface(

const android::sp<android::IBinder>& obj)

{

android::sp<ICameraService> intr;

if (obj != NULL) {

intr = static_cast<ICameraService*>(

obj->queryLocalInterface(ICameraService::descriptor).get());

if (intr == NULL) {

intr = new BpCameraService(obj);//obj is the BpBinder(handle) created earlier

}

}

return intr;

}

gCameraService ends up as a BpCameraService(BpBinder(handle)) instance; BpCameraService wraps the methods of the CameraService side.

With that, the Client has the Service's proxy object and can call the Service's interface methods through it.
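The decision asInterface() makes, use the local implementation if the binder lives in this process and wrap it in a proxy otherwise, can be modeled with standard C++. Everything here is a hypothetical illustration: IToyCamera, ToyBinder, ToyLocalCamera, ToyCameraProxy, and this asInterface are made-up names, and std::shared_ptr replaces Android's sp<>.

```cpp
#include <cassert>
#include <memory>
#include <utility>

// Hypothetical service interface.
struct IToyCamera {
    virtual ~IToyCamera() {}
    virtual bool isProxy() const = 0;
};

// Hypothetical binder base; a plain ToyBinder models a remote reference.
struct ToyBinder {
    virtual ~ToyBinder() {}
    // Returns the implementation when it lives in this process, else null.
    virtual IToyCamera* queryLocalInterface() { return nullptr; }
};

// Server-side object: it is both the binder and the implementation.
struct ToyLocalCamera : ToyBinder, IToyCamera {
    IToyCamera* queryLocalInterface() override { return this; }
    bool isProxy() const override { return false; }
};

// Client-side proxy that wraps a remote binder.
struct ToyCameraProxy : IToyCamera {
    explicit ToyCameraProxy(std::shared_ptr<ToyBinder> remote) : remote_(std::move(remote)) {}
    bool isProxy() const override { return true; }
    std::shared_ptr<ToyBinder> remote_;
};

std::shared_ptr<IToyCamera> asInterface(const std::shared_ptr<ToyBinder>& obj) {
    if (!obj) return nullptr;
    if (IToyCamera* local = obj->queryLocalInterface()) {
        // Same process: share ownership with obj but expose the interface.
        return std::shared_ptr<IToyCamera>(obj, local);
    }
    // Different process: hand out a proxy that forwards through the binder.
    return std::make_shared<ToyCameraProxy>(obj);
}
```

In the camera case traced here the binder is a BpBinder(handle), so the local lookup fails and the proxy branch is taken.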

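I looked for the ICameraService interface file and the BpCameraService implementation in the source tree but could not find them. It turns out the ICameraService interface code is generated at build time; searching under out/target gives: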

nick@bf-rm-18:~/work/nick/code/pollux-5-30-eng/out/target/product$ find -name "*CameraService*"

./pollux/obj/SHARED_LIBRARIES/libcameraservice_intermediates/CameraService.o

./pollux/obj/SHARED_LIBRARIES/libcamera_client_intermediates/aidl-generated/include/android/hardware/BpCameraService.h

./pollux/obj/SHARED_LIBRARIES/libcamera_client_intermediates/aidl-generated/include/android/hardware/ICameraServiceListener.h

./pollux/obj/SHARED_LIBRARIES/libcamera_client_intermediates/aidl-generated/include/android/hardware/ICameraService.h

./pollux/obj/SHARED_LIBRARIES/libcamera_client_intermediates/aidl-generated/include/android/hardware/BnCameraServiceListener.h

./pollux/obj/SHARED_LIBRARIES/libcamera_client_intermediates/aidl-generated/include/android/hardware/BpCameraServiceListener.h

./pollux/obj/SHARED_LIBRARIES/libcamera_client_intermediates/aidl-generated/include/android/hardware/BnCameraService.h

./pollux/obj/SHARED_LIBRARIES/libcamera_client_intermediates/aidl-generated/src/aidl/android/hardware/ICameraServiceListener.cpp

./pollux/obj/SHARED_LIBRARIES/libcamera_client_intermediates/aidl-generated/src/aidl/android/hardware/ICameraService.cpp

./pollux/obj/SHARED_LIBRARIES/libcamera_client_intermediates/aidl-generated/src/aidl/android/hardware/ICameraServiceListener.o

./pollux/obj/SHARED_LIBRARIES/libcamera_client_intermediates/aidl-generated/src/aidl/android/hardware/ICameraService.o

./pollux/obj/SHARED_LIBRARIES/libcamera_client_intermediates/ICameraServiceProxy.o

ICameraService.h, BpCameraService.h, and ICameraService.cpp turn out to be similar to IServiceManager and BpServiceManager. The original post offered the generated files for download.

8. The Client calls the Service's interface methods through the proxy:

So, at the call site in the Client:

binder::Status res = cs->getNumberOfCameras(

::android::hardware::ICameraService::CAMERA_TYPE_BACKWARD_COMPATIBLE,

&count);


8.1 From the analysis above, cs is actually a BpCameraService(BpBinder(handle)), so calling cs->getNumberOfCameras() invokes BpCameraService's wrapper for getNumberOfCameras:

//out/target/product/pollux/obj/SHARED_LIBRARIES/libcamera_client_intermediates/aidl-generated/src/aidl/android/hardware/ICameraService.cpp

BpCameraService::BpCameraService(const ::android::sp<::android::IBinder>& _aidl_impl)

: BpInterface<ICameraService>(_aidl_impl){

}

::android::binder::Status BpCameraService::getNumberOfCameras(int32_t type, int32_t* _aidl_return) {

::android::Parcel _aidl_data;

::android::Parcel _aidl_reply;

::android::status_t _aidl_ret_status = ::android::OK;

::android::binder::Status _aidl_status;

_aidl_ret_status = _aidl_data.writeInterfaceToken(getInterfaceDescriptor());

if (((_aidl_ret_status) != (::android::OK))) {

goto _aidl_error;

}

_aidl_ret_status = _aidl_data.writeInt32(type);

if (((_aidl_ret_status) != (::android::OK))) {

goto _aidl_error;

}

_aidl_ret_status = remote()->transact(ICameraService::GETNUMBEROFCAMERAS, _aidl_data, &_aidl_reply);

if (((_aidl_ret_status) != (::android::OK))) {

goto _aidl_error;

}

_aidl_ret_status = _aidl_status.readFromParcel(_aidl_reply);

if (((_aidl_ret_status) != (::android::OK))) {

goto _aidl_error;

}

if (!_aidl_status.isOk()) {

return _aidl_status;

}

_aidl_ret_status = _aidl_reply.readInt32(_aidl_return);

if (((_aidl_ret_status) != (::android::OK))) {

goto _aidl_error;

}

_aidl_error:

_aidl_status.setFromStatusT(_aidl_ret_status);

return _aidl_status;

}

8.2 As earlier articles showed, remote() here is actually BpBinder(handle), so the call goes to BpBinder::transact() and then to IPCThreadState::transact():

status_t IPCThreadState::transact(int32_t handle,

uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags){

}

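The only differences are the arguments: handle is now CameraService's handle and code is ICameraService::GETNUMBEROFCAMERAS.

8.3 The rest of the process is largely the same as the call into ServiceManager described above, so it is not repeated in detail. The result of the CameraService call is written to the location that the &count pointer passed to the interface function refers to.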

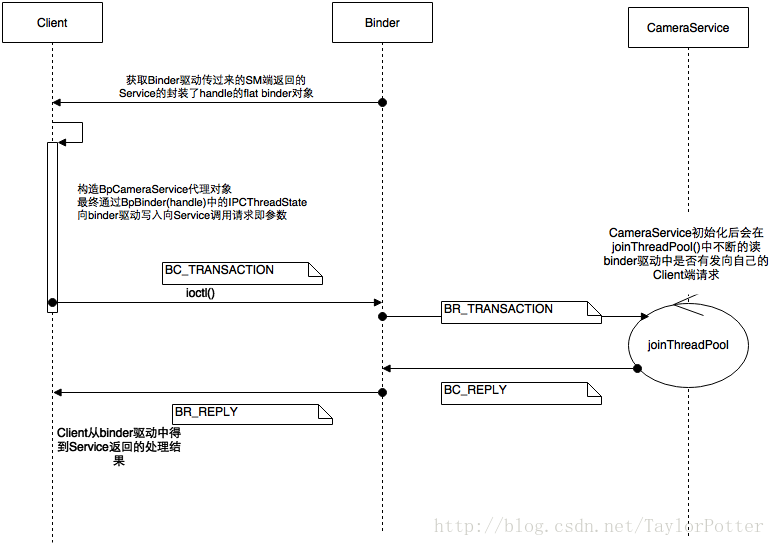

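The overall flow is shown in the diagram:

This is an ordinary Service rather than ServiceManager. At the end of the previous article, Android Framework: Binder (4) - Native Service Registration, we saw that CameraService's thread pool keeps interacting with the Binder driver to check for Client requests addressed to it. BpBinder(handle)->transact() goes through the Binder driver and arrives at BBinder::transact() in the Service process, which in turn calls BnCameraService::onTransact(); that is how a Client call reaches the Service.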
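The proxy and stub halves of such a call can be modeled in a single process. The sketch below is an assumption-heavy toy: ToyParcel, ToyCameraStub, ToyCameraClient, and the transaction code value are all hypothetical, and the direct function call stands in for the trip through the Binder driver. It keeps the essential division of labor: the client side marshals arguments and unmarshals the reply, while the server side dispatches on the transaction code.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

typedef std::vector<int32_t> ToyParcel;

// Hypothetical transaction code, standing in for GETNUMBEROFCAMERAS.
enum { TOY_GET_NUMBER_OF_CAMERAS = 1 };

// Server side (the "Bn" role): decode the code, call the implementation.
class ToyCameraStub {
public:
    explicit ToyCameraStub(int32_t cameras) : cameras_(cameras) {}

    // Returns 0 on success, -1 for an unknown transaction code.
    int onTransact(uint32_t code, const ToyParcel& data, ToyParcel* reply) {
        (void)data;  // this toy ignores the camera type argument
        switch (code) {
        case TOY_GET_NUMBER_OF_CAMERAS:
            reply->push_back(cameras_);
            return 0;
        default:
            return -1;
        }
    }

private:
    int32_t cameras_;
};

// Client side (the "Bp" role): marshal, transact, unmarshal.
class ToyCameraClient {
public:
    explicit ToyCameraClient(ToyCameraStub* remote) : remote_(remote) {}

    bool getNumberOfCameras(int32_t type, int32_t* count) {
        ToyParcel data, reply;
        data.push_back(type);
        // In real Binder this call crosses the driver; here it is direct.
        if (remote_->onTransact(TOY_GET_NUMBER_OF_CAMERAS, data, &reply) != 0) return false;
        if (reply.empty()) return false;
        *count = reply[0];
        return true;
    }

private:
    ToyCameraStub* remote_;
};
```

As in the generated BpCameraService code, the result comes back through an output pointer while the return value only reports whether the transaction succeeded.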

This completes our trace of a full Client-to-Service call.

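III. Summary of Native Service Calls

A typical cross-process Service call proceeds as shown in the diagram:

ServiceManager plays a central role in Service invocation. The Client asks ServiceManager for the handle of the service matching a service name; SM wraps that handle in a flat_binder_object and passes it to the Client through the Binder driver. The Client reads the object from the Binder driver and uses it to construct the Service's proxy object, BpXXXService, through which it calls the interface functions. In the end every call is a request that the proxy's BpBinder(handle) sends to the Binder driver, which relays it to the Service. Every service must register with SM during initialization; SM itself registers with the Binder driver as the context manager.

The Binder driver protocol and some of the data structures used during transfer are still rather disorganized in these notes; I plan to tidy them up and update this post later.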

Source: oschina

Link: https://my.oschina.net/u/920274/blog/3191434