Suppose we have $n$ points $(x_1, \, y_1), \, (x_2, \, y_2), \cdots, \, (x_n, \, y_n)$ and want to fit them with the straight line $y = \beta_0 + \beta_1 x$. To fit these $n$ points, we choose $\beta_0$ and $\beta_1$ to minimize the residual sum of squares $\displaystyle \text{RSS} = \sum_{i = 1}^n \left( y_i - (\beta_0 + \beta_1 x_i) \right)^2$, that is, we look for the values that make $RSS$ as small as possible.

A standard way to find $\beta_0$ and $\beta_1$ is differentiation. Taking the partial derivatives of RSS with respect to $\beta_0$ and $\beta_1$ gives

$$
\begin{cases}
& \frac{\partial \text{RSS}}{\partial \beta_0} = 2 n \beta_0 + 2 \sum x_i \beta_1 - 2 \sum y_i \\
& \frac{\partial \text{RSS}}{\partial \beta_1} = 2 \sum x_i^2 \beta_1 + 2 \sum x_i \beta_0 - 2 \sum x_i y_i
\end{cases}
$$

where $\sum$ denotes the sum from $i = 1$ to $i = n$. Setting both partial derivatives to zero and solving for $\beta_0$ and $\beta_1$ gives

$$
\begin{cases}
& \beta_1 = \frac{\sum (x_i - \bar{x} ) (y_i - \bar{y})}{\sum (x_i - \bar{x} )^2 } \\
& \beta_0 = \bar{y} - \beta_1 \bar{x}
\end{cases}
$$

where $\bar{x} = \frac{\sum_{i = 1}^n x_i}{n}$ and $\bar{y} = \frac{\sum_{i = 1}^n y_i}{n}$.
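The step from the two partial derivatives to the closed-form solution can be checked numerically. The sketch below is only an illustration: the function names `solve_normal_equations` and `closed_form` and the data `x_demo`, `y_demo` are made up for this example and are not part of the original post. It sets both derivatives to zero, solves the resulting 2x2 linear system with `np.linalg.solve`, and compares the result with the formula for $\beta_1$ and $\beta_0$.

```python
import numpy as np


def solve_normal_equations(x, y):
    """Solve dRSS/dbeta_0 = 0 and dRSS/dbeta_1 = 0 as a 2x2 linear system."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = x.size
    # After dividing both derivative equations by 2:
    #   n * beta_0      + sum(x) * beta_1   = sum(y)
    #   sum(x) * beta_0 + sum(x^2) * beta_1 = sum(x * y)
    A = np.array([[n, x.sum()], [x.sum(), np.dot(x, x)]])
    b = np.array([y.sum(), np.dot(x, y)])
    beta_0, beta_1 = np.linalg.solve(A, b)
    return beta_0, beta_1


def closed_form(x, y):
    """Closed-form solution written with x_bar and y_bar."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_bar, y_bar = x.mean(), y.mean()
    beta_1 = np.dot(x - x_bar, y - y_bar) / np.sum((x - x_bar) ** 2)
    beta_0 = y_bar - beta_1 * x_bar
    return beta_0, beta_1


# Hypothetical noise-free data lying exactly on y = 1 + 2x
x_demo = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y_demo = np.array([1.0, 3.0, 5.0, 7.0, 9.0])

print(solve_normal_equations(x_demo, y_demo))
print(closed_form(x_demo, y_demo))
```

Both calls should return an intercept close to 1 and a slope close to 2, up to floating-point rounding.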

Expanding the expression for RSS, we get

$$
\text{RSS} (\beta_0, \, \beta_1) = n \beta_0^2 + \sum x_i^2 \, \beta_1^2 + \sum y_i^2 + 2 \sum x_i \, \beta_0 \beta_1 - 2 \sum y_i \, \beta_0 - 2 \sum x_i y_i \, \beta_1
$$

$\text{RSS} (\beta_0, \, \beta_1)$ is a quadratic function of $\beta_0$ and $\beta_1$, so we can also minimize $\text{RSS} (\beta_0, \, \beta_1)$ over $\beta_0$ and $\beta_1$ by completing the square.

First we simplify by dividing by $n$:

$$
\text{RSS} (\beta_0, \, \beta_1) / n = \beta_0^2 + \frac{\sum x_i^2}{n} \, \beta_1^2 + \frac{\sum y_i^2}{n} + 2 \frac{\sum x_i}{n} \, \beta_0 \beta_1 - 2 \frac{\sum y_i}{n} \, \beta_0 - 2 \frac{\sum x_i y_i}{n} \, \beta_1
$$

We first complete the square to absorb the $\beta_0^2$, $\beta_0$ and $\beta_0 \beta_1$ terms:

$$
\text{RSS} / n = \left(\beta_0 + \frac{\sum x_i}{n} \, \beta_1 - \frac{\sum y_i}{n} \right)^2 - \left( \frac{\sum x_i}{n} \right)^2 \beta_1^2 + \frac{\sum x_i^2}{n} \beta_1^2 + \frac{\sum y_i^2}{n} - \left( \frac{\sum y_i}{n} \right)^2 + 2 \frac{\sum x_i}{n} \frac{\sum y_i}{n} \beta_1 - 2 \frac{\sum x_i y_i}{n} \, \beta_1
$$

Then we complete the square again to absorb the $\beta_1^2$ and $\beta_1$ terms:

$$
\begin{aligned}
\text{RSS} / n &= \left(\beta_0 + \frac{\sum x_i}{n} \, \beta_1 - \frac{\sum y_i}{n} \right)^2 \\
& \qquad + \left(\frac{\sum x_i^2}{n} - \left( \frac{\sum x_i}{n} \right)^2 \right) \left[ \beta_1^2 + \frac{2 \frac{\sum x_i}{n} \frac{\sum y_i}{n} - 2\frac{\sum x_i y_i}{n}}{\frac{\sum x_i^2}{n} - \left( \frac{\sum x_i}{n} \right)^2 } \beta_1 + \left( \frac{\frac{\sum x_i}{n} \frac{\sum y_i}{n} - \frac{\sum x_i y_i}{n}}{\frac{\sum x_i^2}{n} - \left( \frac{\sum x_i}{n} \right)^2} \right)^2 \right] \\
& \qquad + \frac{\sum y_i^2}{n} - \left( \frac{\sum y_i}{n} \right)^2 - \frac{ \left( \frac{\sum x_i}{n} \frac{\sum y_i}{n} - \frac{\sum x_i y_i}{n} \right)^2}{\frac{\sum x_i^2}{n} - \left( \frac{\sum x_i}{n} \right)^2} \\
&= \left(\beta_0 + \frac{\sum x_i}{n} \, \beta_1 - \frac{\sum y_i}{n} \right)^2 + \left(\frac{\sum x_i^2}{n} - \left( \frac{\sum x_i}{n} \right)^2 \right) \left[ \beta_1 + \frac{ \frac{\sum x_i}{n} \frac{\sum y_i}{n} - \frac{\sum x_i y_i}{n} }{\frac{\sum x_i^2}{n} - \left( \frac{\sum x_i}{n} \right)^2} \right]^2 \\
& \qquad + \frac{\sum y_i^2}{n} - \left( \frac{\sum y_i}{n} \right)^2 - \frac{ \left( \frac{\sum x_i}{n} \frac{\sum y_i}{n} - \frac{\sum x_i y_i}{n} \right)^2}{\frac{\sum x_i^2}{n} - \left( \frac{\sum x_i}{n} \right)^2}
\end{aligned}
$$
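Because the two completions of the square involve a lot of algebra, a numerical sanity check can be reassuring. The following sketch, with hypothetical helper names `rss_over_n_direct` and `rss_over_n_completed` and arbitrarily chosen demo data, evaluates $\text{RSS}/n$ once from its definition and once from the completed-square form, for a few arbitrary pairs $(\beta_0, \beta_1)$. It assumes the $x_i$ are not all equal, so that the coefficient of the second square is nonzero.

```python
import numpy as np


def rss_over_n_direct(beta_0, beta_1, x, y):
    """RSS / n computed straight from the definition."""
    return np.mean((y - (beta_0 + beta_1 * x)) ** 2)


def rss_over_n_completed(beta_0, beta_1, x, y):
    """RSS / n computed from the form obtained after completing the square twice."""
    x_bar, y_bar = x.mean(), y.mean()
    mean_x2, mean_y2, mean_xy = np.mean(x ** 2), np.mean(y ** 2), np.mean(x * y)
    var_x = mean_x2 - x_bar ** 2      # coefficient in front of the second square
    c = x_bar * y_bar - mean_xy       # numerator of the shift applied to beta_1
    first_square = (beta_0 + x_bar * beta_1 - y_bar) ** 2
    second_square = var_x * (beta_1 + c / var_x) ** 2
    constant = mean_y2 - y_bar ** 2 - c ** 2 / var_x
    return first_square + second_square + constant


# Arbitrary demo data; the seed is fixed only to make the run repeatable
rng = np.random.default_rng(0)
x_demo = rng.uniform(0, 10, 50)
y_demo = 2 * x_demo + 3 + rng.normal(0, 1, 50)

# Arbitrary (beta_0, beta_1) pairs; the identity must hold for any choice
pairs = [(0.0, 0.0), (3.0, 2.0), (-1.5, 0.7)]
for b0, b1 in pairs:
    print(rss_over_n_direct(b0, b1, x_demo, y_demo),
          rss_over_n_completed(b0, b1, x_demo, y_demo))
```

Each printed pair should agree up to floating-point rounding, since the two expressions are algebraically identical.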

After completing the square twice, we can see that $\text{RSS} / n$ is minimized exactly when both squared terms are zero (assuming the $x_i$ are not all equal, so that the coefficient $\frac{\sum x_i^2}{n} - \left( \frac{\sum x_i}{n} \right)^2$ is positive). This requires

$$
\begin{cases}
& \beta_1 = \frac{ \frac{\sum x_i y_i}{n} - \frac{\sum x_i}{n} \frac{\sum y_i}{n} }{\frac{\sum x_i^2}{n} - \left( \frac{\sum x_i}{n} \right)^2} \\
& \beta_0 = \bar{y} - \beta_1 \bar{x}
\end{cases}
$$

After simplifying, we have

$$
\frac{ \frac{\sum x_i y_i}{n} - \frac{\sum x_i}{n} \frac{\sum y_i}{n} }{\frac{\sum x_i^2}{n} - \left( \frac{\sum x_i}{n} \right)^2} = \frac{\sum (x_i - \bar{x} ) (y_i - \bar{y})}{\sum (x_i - \bar{x} )^2 }
$$

so the $\beta_0$ and $\beta_1$ obtained by completing the square agree with the ones obtained by differentiation.
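The simplification relies on two standard identities, spelled out here for completeness. Expanding the products and using $\sum x_i = n \bar{x}$ and $\sum y_i = n \bar{y}$:

$$
\begin{aligned}
\sum (x_i - \bar{x})(y_i - \bar{y}) &= \sum x_i y_i - \bar{x} \sum y_i - \bar{y} \sum x_i + n \bar{x} \bar{y} = \sum x_i y_i - n \bar{x} \bar{y} \\
\sum (x_i - \bar{x})^2 &= \sum x_i^2 - 2 \bar{x} \sum x_i + n \bar{x}^2 = \sum x_i^2 - n \bar{x}^2
\end{aligned}
$$

Dividing both identities by $n$ gives exactly the numerator $\frac{\sum x_i y_i}{n} - \frac{\sum x_i}{n} \frac{\sum y_i}{n}$ and the denominator $\frac{\sum x_i^2}{n} - \left( \frac{\sum x_i}{n} \right)^2$ of the expression for $\beta_1$.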

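From the calculation above, we can see that completing the square is considerably more tedious than differentiation. It is also not easy to generalize to multivariate regression.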
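For contrast, the least-squares problem with several predictors is usually handled in matrix form rather than by completing the square. The sketch below is not from the original post: the function name `fit_multiple_regression` and the demo data are hypothetical, and it simply calls NumPy's `np.linalg.lstsq` on a design matrix with an added column of ones for the intercept.

```python
import numpy as np


def fit_multiple_regression(X, y):
    """Least-squares coefficients [beta_0, beta_1, ..., beta_p] for y ~ X."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # Prepend a column of ones so that the first coefficient is the intercept
    design = np.column_stack([np.ones(len(X)), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return beta


# Hypothetical noise-free data generated from y = 1 + 2*x1 - 0.5*x2
X_demo = np.array([[0.0, 1.0], [1.0, 0.0], [2.0, 3.0], [3.0, 1.0], [4.0, 5.0]])
y_demo = 1.0 + 2.0 * X_demo[:, 0] - 0.5 * X_demo[:, 1]

beta_demo = fit_multiple_regression(X_demo, y_demo)
print(beta_demo)
```

On this noise-free data the recovered coefficients should be close to 1, 2 and -0.5.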

One advantage of completing the square is that it avoids differentiation altogether. In addition, it directly yields the minimum value of RSS. That is, when $\beta_0$ and $\beta_1$ take the values above,

$$
\text{RSS} / n = \frac{\sum y_i^2}{n} - \left( \frac{\sum y_i}{n} \right)^2 - \frac{ \left( \frac{\sum x_i}{n} \frac{\sum y_i}{n} - \frac{\sum x_i y_i}{n} \right)^2}{\frac{\sum x_i^2}{n} - \left( \frac{\sum x_i}{n} \right)^2}
$$

or equivalently

$$
\text{RSS} = \sum y_i^2 - n \left( \frac{\sum y_i}{n} \right)^2 - n \frac{ \left( \frac{\sum x_i}{n} \frac{\sum y_i}{n} - \frac{\sum x_i y_i}{n} \right)^2}{\frac{\sum x_i^2}{n} - \left( \frac{\sum x_i}{n} \right)^2}
$$
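The minimum can be written more compactly. The shorthand $S_{xx}$, $S_{xy}$, $S_{yy}$ and $r$ below is introduced only for this remark and does not appear in the original derivation. With $S_{xx} = \sum (x_i - \bar{x})^2$, $S_{xy} = \sum (x_i - \bar{x})(y_i - \bar{y})$ and $S_{yy} = \sum (y_i - \bar{y})^2$, we have $\sum y_i^2 - n \bar{y}^2 = S_{yy}$, $\frac{\sum x_i y_i}{n} - \bar{x} \bar{y} = S_{xy} / n$ and $\frac{\sum x_i^2}{n} - \bar{x}^2 = S_{xx} / n$, so

$$
\text{RSS}_{\min} = S_{yy} - \frac{S_{xy}^2}{S_{xx}} = S_{yy} \left( 1 - r^2 \right), \qquad r = \frac{S_{xy}}{\sqrt{S_{xx} S_{yy}}}
$$

Here $S_{yy}$ is the total sum of squares (TSS), and in simple linear regression $r^2$ equals the coefficient of determination $R^2$, so this is the familiar relation $\text{RSS} = (1 - R^2) \, \text{TSS}$.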

    import numpy as np
    import matplotlib.pyplot as plt
    from sklearn.linear_model import LinearRegression


    class least_square_singleVariable:
        """Least-squares fit of y = beta_0 + beta_1 * x with a single variable."""

        def find_beta_1_and_beta_0(self, train_x: "np.ndarray", train_y: "np.ndarray") -> "tuple[float, float]":
            """
            Given the independent variable train_x and the dependent variable train_y,
            find the values of beta_1 and beta_0 (returned in that order).
            """
            x_bar = np.mean(train_x)
            y_bar = np.mean(train_y)
            beta_1 = np.dot(train_x - x_bar, train_y - y_bar) / np.sum((train_x - x_bar) ** 2)
            beta_0 = y_bar - beta_1 * x_bar
            return beta_1, beta_0

        def find_optimal_RSS(self, train_x: "np.ndarray", train_y: "np.ndarray") -> float:
            """
            Calculate the residual sum of squares (RSS) using the formula derived in the text above.
            """
            n = len(train_x)
            x_bar = np.mean(train_x)
            y_bar = np.mean(train_y)
            sum_xi_square = np.sum(train_x ** 2)
            sum_yi_square = np.sum(train_y ** 2)
            sum_xiyi = np.dot(train_x, train_y)
            # RSS = sum(y^2) - n*y_bar^2 - n*(x_bar*y_bar - sum(xy)/n)^2 / (sum(x^2)/n - x_bar^2)
            res = (sum_yi_square - n * y_bar ** 2
                   - n * (x_bar * y_bar - sum_xiyi / n) ** 2 / (sum_xi_square / n - x_bar ** 2))
            return res

        def get_optimal_RSS_sklearn(self, train_x: "np.ndarray", train_y: "np.ndarray") -> float:
            """
            Calculate the residual sum of squares using the LinearRegression() model in sklearn.
            The result should be the same as the result returned by the function find_optimal_RSS.
            """
            n = len(train_x)
            X = train_x.reshape(n, 1)  # sklearn expects a 2-D feature matrix
            model = LinearRegression()
            model.fit(X, train_y)
            R_square = model.score(X, train_y)
            y_bar = np.mean(train_y)
            TSS = np.sum((train_y - y_bar) ** 2)
            # R^2 = 1 - RSS / TSS, hence RSS = (1 - R^2) * TSS
            RSS = (1 - R_square) * TSS
            return RSS

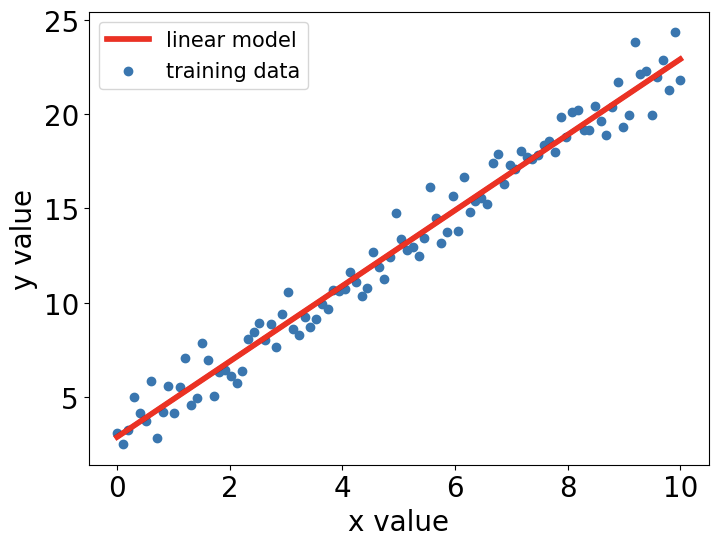

Let us verify that the RSS computed with the formula above equals the RSS given by LinearRegression() in the sklearn package.

    a = least_square_singleVariable()
    num_points = 100
    train_x = np.linspace(0, 10, num_points)
    # Noisy samples around the line y = 2x + 3 (standard normal noise, no seed set)
    train_y = 2 * train_x + 3 + np.random.normal(0, 1, num_points)
    beta_1, beta_0 = a.find_beta_1_and_beta_0(train_x, train_y)
    print(a.find_optimal_RSS(train_x, train_y))
    print(a.get_optimal_RSS_sklearn(train_x, train_y))

The output is as follows (no random seed is set, so the exact values differ from run to run):

    89.00851265874053
    89.00851265873582

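We find that the RSS computed from the formula we derived agrees with the RSS computed from R-square in sklearn. The two values differ only by about $5 \times 10^{-12}$, which is floating-point rounding error.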
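A third, independent check is to fit the line with a different routine and sum the squared residuals directly. The sketch below uses NumPy's `np.polyfit` with `deg=1`, which returns the slope first and the intercept second. The helper name `min_rss_formula`, the seed and the demo data are assumptions made for this example, so the printed numbers will not match the output shown above.

```python
import numpy as np


def min_rss_formula(x, y):
    """Minimum RSS from the completed-square formula derived in the text."""
    n = x.size
    x_bar, y_bar = x.mean(), y.mean()
    c = x_bar * y_bar - np.dot(x, y) / n
    var_x = np.dot(x, x) / n - x_bar ** 2
    return np.dot(y, y) - n * y_bar ** 2 - n * c ** 2 / var_x


# Demo data in the same spirit as the post; the seed is an arbitrary choice
rng = np.random.default_rng(42)
x_demo = np.linspace(0, 10, 100)
y_demo = 2 * x_demo + 3 + rng.normal(0, 1, 100)

# Degree-1 polynomial fit: coefficients come back highest power first
slope, intercept = np.polyfit(x_demo, y_demo, deg=1)

# RSS computed directly from the residuals of the fitted line
rss_direct = np.sum((y_demo - (intercept + slope * x_demo)) ** 2)
rss_formula = min_rss_formula(x_demo, y_demo)
print(rss_direct, rss_formula)
```

The two printed values should agree up to floating-point rounding.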

The plot is produced as follows:

    plt.figure(figsize=(8, 6), dpi=100)
    # Label each artist explicitly so the legend does not depend on drawing order
    plt.scatter(train_x, train_y, label='training data')
    plt.plot(train_x, beta_1 * train_x + beta_0, color='red', linewidth=4, label='linear model')
    plt.xlabel("x value", fontsize=20)
    plt.ylabel("y value", fontsize=20)
    plt.xticks(fontsize=20)
    plt.yticks(fontsize=20)
    plt.legend(fontsize=15)
    plt.show()