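# Algorithms for finding the extrema of the loss function

# Local optima

# Why does the search always find a minimum?

# The role of chance in random initialization

# The learning rate is a hyperparameter

# Which optimization algorithms exist, and how their learning rates are chosen

# A learning rate that is too high or too low hurts how efficiently the extremum is found; if it is too large, the loss keeps jumping back and forth around the extremum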
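The effect of the learning rate can be seen on a toy problem. The sketch below is only an illustration, not the optimizer used later in this post: it runs plain gradient descent on the assumed loss f(x) = x**2, and the function name `gradient_descent`, the starting point and the four learning rates are all made up for the example.

```python
def gradient_descent(lr, x0=5.0, steps=20):
    """Minimise f(x) = x**2 with plain gradient descent; return every visited x."""
    xs = [x0]
    x = x0
    for _ in range(steps):
        grad = 2.0 * x       # derivative of x**2
        x = x - lr * grad    # one gradient descent update
        xs.append(x)
    return xs


# Hypothetical learning rates: too small, reasonable, too large, far too large.
for lr in (0.01, 0.1, 0.95, 1.05):
    trajectory = gradient_descent(lr)
    first_steps = [round(v, 3) for v in trajectory[:4]]
    print(f"lr={lr}: first steps {first_steps}, final x {trajectory[-1]:.4f}")
```

With the small rate the iterate barely moves in 20 steps. With 0.95 it jumps from one side of the minimum to the other on every step, and with 1.05 each jump is larger than the last, so the search diverges.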

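History of the perceptron

# Multilayer perceptron (neural network)

# Single neuron

# Multiple neurons (multi-class classification)

- Cannot solve the XOR problem

- Limitation of a single layer of neurons: the data must be linearly separable, and for XOR no straight line separates the two classes

- XOR truth table: 0 1 : 1; 1 0 : 1; 0 0 : 0; 1 1 : 0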
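The XOR limitation can be checked numerically. The sketch below is a small experiment rather than a proof. It tries a coarse grid of weights and biases for a single threshold neuron (the grid range is an arbitrary assumption) and counts how many settings reproduce XOR. It then evaluates a hand-wired two-layer network whose weights were picked by hand for illustration, not learned.

```python
from itertools import product

# XOR truth table: the output is 1 only when the two inputs differ.
XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}


def step(z):
    """Threshold activation: fire when the weighted sum is positive."""
    return 1 if z > 0 else 0


def single_neuron(w1, w2, b, x1, x2):
    return step(w1 * x1 + w2 * x2 + b)


def solves_xor(predict):
    return all(predict(x1, x2) == label for (x1, x2), label in XOR.items())


# Try every (w1, w2, b) on a grid from -2 to 2 in steps of 0.25.
grid = [i / 4 for i in range(-8, 9)]
single_layer_hits = sum(
    solves_xor(lambda x1, x2, w1=w1, w2=w2, b=b: single_neuron(w1, w2, b, x1, x2))
    for w1, w2, b in product(grid, repeat=3)
)


def two_layer(x1, x2):
    """Hidden units compute OR and AND; the output fires for OR-but-not-AND."""
    h_or = step(x1 + x2 - 0.5)
    h_and = step(x1 + x2 - 1.5)
    return step(h_or - h_and - 0.5)


print("single-neuron settings that solve XOR:", single_layer_hits)
print("two-layer network solves XOR:", solves_xor(two_layer))
```

No setting of the single neuron matches all four rows, while one hidden layer is enough.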

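Multilayer perceptron

- Input layer, hidden layer, output layer, output

- Activation functions:

- relu: max(0, x), shaped like __/

- sigmoid: maps to (0, 1)

- tanh: maps to (-1, 1)

- leaky relu: small slope for negative inputs, slope 1 for positive inputs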
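The four activation functions can be written in a few lines of plain Python. This is a minimal sketch for scalar inputs, not how TensorFlow implements them, and the leaky relu slope `alpha=0.01` is an assumed value chosen for the example.

```python
import math


def relu(x):
    """Zero for negative inputs, identity for positive inputs."""
    return max(0.0, x)


def leaky_relu(x, alpha=0.01):
    """Like relu, but negative inputs keep a small slope alpha (assumed value)."""
    return x if x > 0 else alpha * x


def sigmoid(x):
    """Squashes any real input into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def tanh(x):
    """Squashes any real input into the open interval (-1, 1)."""
    return math.tanh(x)


for x in (-2.0, 0.0, 2.0):
    print(
        f"x={x:+.1f}  relu={relu(x):.3f}  leaky_relu={leaky_relu(x):.3f}  "
        f"sigmoid={sigmoid(x):.3f}  tanh={tanh(x):.3f}"
    )
```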

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

import os

os.getcwd()

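path = 'D:\\xxx\\pythonPycharm_2018.3.5 Build 183.5912.18\\xxx'

# Change the current working directory

os.chdir(path)

# Show the working directory after the change

print("Working directory changed to %s" % os.getcwd())

Working directory changed to D:\xxx\pythonPycharm_2018.3.5 Build 183.5912.18\xxx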

data = pd.read_csv('./advertising.csv')

data.head()

| | TV | radio | newspaper | sales |
|---|---|---|---|---|
| 0 | 230.1 | 37.8 | 69.2 | 22.1 |
| 1 | 44.5 | 39.3 | 45.1 | 10.4 |
| 2 | 17.2 | 45.9 | 69.3 | 9.3 |
| 3 | 151.5 | 41.3 | 58.5 | 18.5 |
| 4 | 180.8 | 10.8 | 58.4 | 12.9 |

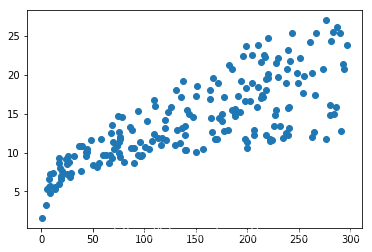

plt.scatter(data.TV,data.sales)

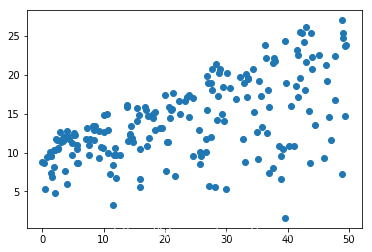

plt.scatter(data.radio,data.sales)

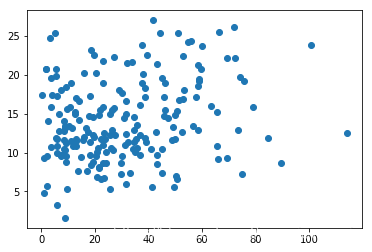

plt.scatter(data.newspaper,data.sales)

x = data.iloc[:,0:-1]

y = data.iloc[:,-1]

import tensorflow as tf

print(y)

0 22.1

1 10.4

2 9.3

3 18.5

4 12.9

5 7.2

6 11.8

7 13.2

8 4.8

9 10.6

10 8.6

11 17.4

12 9.2

13 9.7

14 19.0

15 22.4

16 12.5

17 24.4

18 11.3

19 14.6

20 18.0

21 12.5

22 5.6

23 15.5

24 9.7

25 12.0

26 15.0

27 15.9

28 18.9

29 10.5

...

170 8.4

171 14.5

172 7.6

173 11.7

174 11.5

175 27.0

176 20.2

177 11.7

178 11.8

179 12.6

180 10.5

181 12.2

182 8.7

183 26.2

184 17.6

185 22.6

186 10.3

187 17.3

188 15.9

189 6.7

190 10.8

191 9.9

192 5.9

193 19.6

194 17.3

195 7.6

196 9.7

197 12.8

198 25.5

199 13.4

Name: sales, Length: 200, dtype: float64

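model = tf.keras.Sequential([
    tf.keras.layers.Dense(10, input_shape=(3,), activation='relu'),
    tf.keras.layers.Dense(1),
])

# Hidden layer: 10 units, fed by the 3 input features (TV, radio, newspaper)

# Hidden layer parameters: 3*10 weights + 10 biases = 40; it outputs 10 values

# Output layer: 10*1 weights + 1 bias = 11 parameters; it outputs 1 value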

model.summary()

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense (Dense) (None, 10) 40

_________________________________________________________________

dense_1 (Dense) (None, 1) 11

=================================================================

Total params: 51

Trainable params: 51

Non-trainable params: 0

_________________________________________________________________
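The parameter counts reported by `model.summary()` can be reproduced by hand: a fully connected layer has one weight per input-unit pair plus one bias per unit. The helper name `dense_params` below is hypothetical and exists only for this check.

```python
def dense_params(n_inputs, n_units):
    """Parameters of a fully connected layer: weights plus one bias per unit."""
    return n_inputs * n_units + n_units


hidden = dense_params(3, 10)   # 3 input features -> 10 hidden units
output = dense_params(10, 1)   # 10 hidden values -> 1 output value

print("hidden layer:", hidden)
print("output layer:", output)
print("total:", hidden + output)
```

The three printed numbers match the 40, 11 and 51 in the summary.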

model.compile(optimizer='adam', loss='mse')

model.fit(x,y,epochs = 100)

WARNING:tensorflow:Falling back from v2 loop because of error: Failed to find data adapter that can handle input: <class 'pandas.core.frame.DataFrame'>, <class 'NoneType'>

Train on 200 samples

Epoch 1/100

200/200 [==============================] - 2s 8ms/sample - loss: 552.0370

Epoch 2/100

200/200 [==============================] - 0s 274us/sample - loss: 416.8464

Epoch 3/100

200/200 [==============================] - 0s 303us/sample - loss: 317.0524

Epoch 4/100

200/200 [==============================] - 0s 214us/sample - loss: 238.6739

Epoch 5/100

200/200 [==============================] - 0s 319us/sample - loss: 192.6979

Epoch 6/100

200/200 [==============================] - 0s 304us/sample - loss: 156.6224

Epoch 7/100

200/200 [==============================] - 0s 279us/sample - loss: 137.7957

Epoch 8/100

200/200 [==============================] - 0s 249us/sample - loss: 123.7041

Epoch 9/100

200/200 [==============================] - 0s 230us/sample - loss: 116.2142

Epoch 10/100

200/200 [==============================] - 0s 304us/sample - loss: 109.5697

Epoch 11/100

200/200 [==============================] - 0s 299us/sample - loss: 104.0680

Epoch 12/100

200/200 [==============================] - 0s 329us/sample - loss: 98.7045

Epoch 13/100

200/200 [==============================] - 0s 299us/sample - loss: 93.6163

Epoch 14/100

200/200 [==============================] - 0s 255us/sample - loss: 88.7300

Epoch 15/100

200/200 [==============================] - 0s 274us/sample - loss: 84.1461

Epoch 16/100

200/200 [==============================] - 0s 449us/sample - loss: 79.4312

Epoch 17/100

200/200 [==============================] - 0s 261us/sample - loss: 75.0235

Epoch 18/100

200/200 [==============================] - 0s 274us/sample - loss: 71.0549

Epoch 19/100

200/200 [==============================] - 0s 239us/sample - loss: 66.6472

Epoch 20/100

200/200 [==============================] - 0s 304us/sample - loss: 62.6407

Epoch 21/100

200/200 [==============================] - 0s 304us/sample - loss: 58.9893

Epoch 22/100

200/200 [==============================] - 0s 284us/sample - loss: 55.3490

Epoch 23/100

200/200 [==============================] - 0s 280us/sample - loss: 51.8215

Epoch 24/100

200/200 [==============================] - 0s 319us/sample - loss: 48.5518

Epoch 25/100

200/200 [==============================] - 0s 434us/sample - loss: 45.5142

Epoch 26/100

200/200 [==============================] - 0s 300us/sample - loss: 42.7557

Epoch 27/100

200/200 [==============================] - 0s 249us/sample - loss: 40.1837

Epoch 28/100

200/200 [==============================] - 0s 262us/sample - loss: 37.6466

Epoch 29/100

200/200 [==============================] - 0s 299us/sample - loss: 35.3706

Epoch 30/100

200/200 [==============================] - 0s 349us/sample - loss: 33.1281

Epoch 31/100

200/200 [==============================] - 0s 244us/sample - loss: 31.0810

Epoch 32/100

200/200 [==============================] - 0s 244us/sample - loss: 28.9801

Epoch 33/100

200/200 [==============================] - 0s 240us/sample - loss: 27.1998

Epoch 34/100

200/200 [==============================] - 0s 304us/sample - loss: 25.4241

Epoch 35/100

200/200 [==============================] - 0s 249us/sample - loss: 23.3864

Epoch 36/100

200/200 [==============================] - 0s 284us/sample - loss: 21.3694

Epoch 37/100

200/200 [==============================] - 0s 324us/sample - loss: 19.3851

Epoch 38/100

200/200 [==============================] - 0s 254us/sample - loss: 17.2471

Epoch 39/100

200/200 [==============================] - 0s 200us/sample - loss: 15.2541

Epoch 40/100

200/200 [==============================] - 0s 254us/sample - loss: 13.8191

Epoch 41/100

200/200 [==============================] - 0s 273us/sample - loss: 12.3512

Epoch 42/100

200/200 [==============================] - 0s 259us/sample - loss: 11.1168

Epoch 43/100

200/200 [==============================] - 0s 269us/sample - loss: 10.2072

Epoch 44/100

200/200 [==============================] - 0s 269us/sample - loss: 9.4599

Epoch 45/100

200/200 [==============================] - 0s 319us/sample - loss: 8.9538

Epoch 46/100

200/200 [==============================] - 0s 319us/sample - loss: 8.4765

Epoch 47/100

200/200 [==============================] - 0s 238us/sample - loss: 8.0536

Epoch 48/100

200/200 [==============================] - 0s 249us/sample - loss: 7.7974

Epoch 49/100

200/200 [==============================] - 0s 284us/sample - loss: 7.4901

Epoch 50/100

200/200 [==============================] - 0s 394us/sample - loss: 7.2274

Epoch 51/100

200/200 [==============================] - 0s 299us/sample - loss: 7.0059

Epoch 52/100

200/200 [==============================] - 0s 244us/sample - loss: 6.8145

Epoch 53/100

200/200 [==============================] - 0s 269us/sample - loss: 6.5581

Epoch 54/100

200/200 [==============================] - 0s 309us/sample - loss: 6.3564

Epoch 55/100

200/200 [==============================] - 0s 295us/sample - loss: 6.1689

Epoch 56/100

200/200 [==============================] - 0s 234us/sample - loss: 6.0046

Epoch 57/100

200/200 [==============================] - 0s 289us/sample - loss: 5.8701

Epoch 58/100

200/200 [==============================] - 0s 264us/sample - loss: 5.7062

Epoch 59/100

200/200 [==============================] - 0s 304us/sample - loss: 5.5620

Epoch 60/100

200/200 [==============================] - 0s 284us/sample - loss: 5.4335

Epoch 61/100

200/200 [==============================] - 0s 234us/sample - loss: 5.3382

Epoch 62/100

200/200 [==============================] - 0s 224us/sample - loss: 5.2391

Epoch 63/100

200/200 [==============================] - 0s 249us/sample - loss: 5.1235

Epoch 64/100

200/200 [==============================] - 0s 194us/sample - loss: 5.0437

Epoch 65/100

200/200 [==============================] - 0s 219us/sample - loss: 4.9524

Epoch 66/100

200/200 [==============================] - 0s 225us/sample - loss: 4.8507

Epoch 67/100

200/200 [==============================] - 0s 219us/sample - loss: 4.7549

Epoch 68/100

200/200 [==============================] - 0s 189us/sample - loss: 4.6943

Epoch 69/100

200/200 [==============================] - 0s 284us/sample - loss: 4.5864

Epoch 70/100

200/200 [==============================] - 0s 265us/sample - loss: 4.5284

Epoch 71/100

200/200 [==============================] - 0s 294us/sample - loss: 4.4632

Epoch 72/100

200/200 [==============================] - 0s 315us/sample - loss: 4.3811

Epoch 73/100

200/200 [==============================] - 0s 314us/sample - loss: 4.3129

Epoch 74/100

200/200 [==============================] - 0s 249us/sample - loss: 4.2554

Epoch 75/100

200/200 [==============================] - 0s 254us/sample - loss: 4.2137

Epoch 76/100

200/200 [==============================] - 0s 224us/sample - loss: 4.1420

Epoch 77/100

200/200 [==============================] - 0s 209us/sample - loss: 4.0912

Epoch 78/100

200/200 [==============================] - 0s 214us/sample - loss: 4.0383

Epoch 79/100

200/200 [==============================] - 0s 259us/sample - loss: 3.9995

Epoch 80/100

200/200 [==============================] - 0s 219us/sample - loss: 3.9455

Epoch 81/100

200/200 [==============================] - 0s 224us/sample - loss: 3.9012

Epoch 82/100

200/200 [==============================] - 0s 194us/sample - loss: 3.8656

Epoch 83/100

200/200 [==============================] - 0s 234us/sample - loss: 3.8233

Epoch 84/100

200/200 [==============================] - 0s 184us/sample - loss: 3.7835

Epoch 85/100

200/200 [==============================] - 0s 180us/sample - loss: 3.7444

Epoch 86/100

200/200 [==============================] - 0s 189us/sample - loss: 3.7232

Epoch 87/100

200/200 [==============================] - 0s 209us/sample - loss: 3.6805

Epoch 88/100

200/200 [==============================] - 0s 224us/sample - loss: 3.6476

Epoch 89/100

200/200 [==============================] - 0s 159us/sample - loss: 3.6209

Epoch 90/100

200/200 [==============================] - 0s 234us/sample - loss: 3.5872

Epoch 91/100

200/200 [==============================] - 0s 254us/sample - loss: 3.5596

Epoch 92/100

200/200 [==============================] - 0s 219us/sample - loss: 3.5362

Epoch 93/100

200/200 [==============================] - 0s 185us/sample - loss: 3.5044

Epoch 94/100

200/200 [==============================] - 0s 434us/sample - loss: 3.5039

Epoch 95/100

200/200 [==============================] - 0s 344us/sample - loss: 3.4594

Epoch 96/100

200/200 [==============================] - 0s 279us/sample - loss: 3.4415

Epoch 97/100

200/200 [==============================] - 0s 299us/sample - loss: 3.4206

Epoch 98/100

200/200 [==============================] - 0s 224us/sample - loss: 3.3926

Epoch 99/100

200/200 [==============================] - 0s 345us/sample - loss: 3.3714

Epoch 100/100

200/200 [==============================] - 0s 319us/sample - loss: 3.3478

<tensorflow.python.keras.callbacks.History at 0x23c87e55080>

test = data.iloc[:10,0:-1]

model.predict(test)

WARNING:tensorflow:Falling back from v2 loop because of error: Failed to find data adapter that can handle input: <class 'pandas.core.frame.DataFrame'>, <class 'NoneType'>

array([[22.526861 ],

[ 6.9270716],

[ 8.889206 ],

[19.793991 ],

[12.460696 ],

[ 9.313395 ],

[11.779992 ],

[11.498346 ],

[ 1.3081465],

[10.4266 ]], dtype=float32)

test = data.iloc[:10,-1]

print(test)

0 22.1

1 10.4

2 9.3

3 18.5

4 12.9

5 7.2

6 11.8

7 13.2

8 4.8

9 10.6

Name: sales, dtype: float64
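To make the comparison between predictions and true sales concrete, the sketch below copies the ten predicted values and the ten actual values printed above into plain lists and computes the per-row error and the mean squared error. The variable names are made up for the example. These ten rows were also part of the training data, so this is a sanity check rather than an evaluation on unseen data.

```python
# Values copied from the model.predict output and the sales column printed above.
predicted = [22.526861, 6.9270716, 8.889206, 19.793991, 12.460696,
             9.313395, 11.779992, 11.498346, 1.3081465, 10.4266]
actual = [22.1, 10.4, 9.3, 18.5, 12.9, 7.2, 11.8, 13.2, 4.8, 10.6]

# Signed error per row, mean squared error, and the row with the largest miss.
errors = [p - a for p, a in zip(predicted, actual)]
mse = sum(e * e for e in errors) / len(errors)
worst = max(range(len(errors)), key=lambda i: abs(errors[i]))

for i, (p, a) in enumerate(zip(predicted, actual)):
    print(f"row {i}: predicted {p:6.2f}  actual {a:5.1f}  error {p - a:+.2f}")
print(f"MSE on these rows: {mse:.4f}")
print(f"largest absolute error at row {worst}")
```

The MSE on these rows comes out at about 3.39, in line with the final training loss of 3.3478 reported by `model.fit`.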

Source: CSDN

Author: Z pz

Link: https://blog.csdn.net/wenniewennie/article/details/104413635