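Series contents:

- Rookie Notes - DuReader Reading Comprehension Baseline Model Code Reading Notes (1): Data

- Rookie Notes - DuReader Reading Comprehension Baseline Model Code Reading Notes (2): Introduction and Word Segmentation

- Rookie Notes - DuReader Reading Comprehension Baseline Model Code Reading Notes (3): Preprocessing

To be continued...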

The baseline system adds a new paragraph extraction strategy to improve model performance.

Paragraph extraction approach

The new strategy is implemented in paddle/para_extraction.py in the DuReader repository. A similar strategy was used by the top-1 system (Liu et al. 2018) in the 2018 Machine Reading Challenge.

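The original DuReader baseline (He et al. 2018) used a simple strategy to select paragraphs for training and testing, so the paragraph that actually contains the answer might not be selected at all. The new strategy therefore keeps as much information as possible for answer extraction.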

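The new strategy is applied to each document separately. For a single document:

- Remove duplicated paragraphs.

- If the document (title plus all paragraphs, with one splitter per paragraph) is no longer than the predefined maximum length, concatenate the title and all paragraphs with a predefined splitter. Otherwise:

- Compute the F1 score of each paragraph against the question.

- Concatenate the title and the top-K paragraphs by F1 score with the predefined splitter, building a paragraph meant to stay within the predefined maximum length.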
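The four steps can be condensed into a short sketch for one already-tokenized document. This is a simplified illustration, not the DuReader code: the function name extract_paragraph and its arguments are hypothetical, the per-paragraph scores are assumed to be precomputed (for example, F1 against the question), and answer-span bookkeeping is left out.

```python
from typing import List

SPLITTER = "<splitter>"


def extract_paragraph(title: List[str], paras: List[List[str]],
                      scores: List[float], max_len: int = 500,
                      top_k: int = 3) -> List[str]:
    """Hypothetical sketch of the paragraph-extraction strategy for one document."""
    # Step 1: drop duplicated paragraphs, keeping the first occurrence and its score
    seen = set()
    uniq = []
    for tokens, score in zip(paras, scores):
        key = "".join(tokens)
        if key not in seen:
            seen.add(key)
            uniq.append((tokens, score))

    # Step 2: title + every paragraph + one splitter per paragraph fits -> keep all
    total = len(title) + sum(len(tokens) + 1 for tokens, _ in uniq)
    if total <= max_len:
        chosen = list(range(len(uniq)))
    else:
        # Steps 3-4: rank by score (descending), then by length (ascending)
        ranked = sorted(range(len(uniq)),
                        key=lambda i: (-uniq[i][1], len(uniq[i][0])))
        chosen = []
        total = len(title)
        for i in ranked[:top_k]:
            # the check happens before adding, so the last paragraph may overshoot
            if total > max_len:
                break
            total += 1 + len(uniq[i][0])
            chosen.append(i)
        chosen.sort()  # restore the original paragraph order

    # Concatenate the title and the chosen paragraphs with the splitter
    content = list(title)
    for i in chosen:
        content += [SPLITTER] + uniq[i][0]
    return content
```

For example, with title ["t"] and paragraphs [["a", "b"], ["c"], ["a", "b"]], the duplicate is dropped and, because everything fits, the result is ["t", "<splitter>", "a", "b", "<splitter>", "c"]. As in the original loop, the length is checked before each paragraph is added, so the output can exceed max_len by up to one paragraph.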

Code

The code consists mainly of two functions, compute_paragraph_score and paragraph_selection, shown below. The full code is in paddle/para_extraction.py of the DuReader baseline system.

compute_paragraph_score

Computes the F1 score between each paragraph and the question and adds the scores to the sample as the segmented_paragraphs_scores field.

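```python
# metric_max_over_ground_truths and f1_score are helpers from the DuReader baseline code


def compute_paragraph_score(sample):
    """
    Compute the F1 score between each paragraph and the question,
    and add the field segmented_paragraphs_scores to each document.
    Args:
        sample: one sample from the dataset.
    Returns:
        None
    Raises:
        None
    """
    question = sample["segmented_question"]
    # iterate over all documents
    for doc in sample['documents']:
        doc['segmented_paragraphs_scores'] = []
        # iterate over all paragraphs
        for p_idx, para_tokens in enumerate(doc['segmented_paragraphs']):
            if len(question) > 0:
                # F1 score between the paragraph tokens and the question tokens
                related_score = metric_max_over_ground_truths(f1_score,
                                                              para_tokens,
                                                              [question])
            else:
                related_score = 0.0
            doc['segmented_paragraphs_scores'].append(related_score)
```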

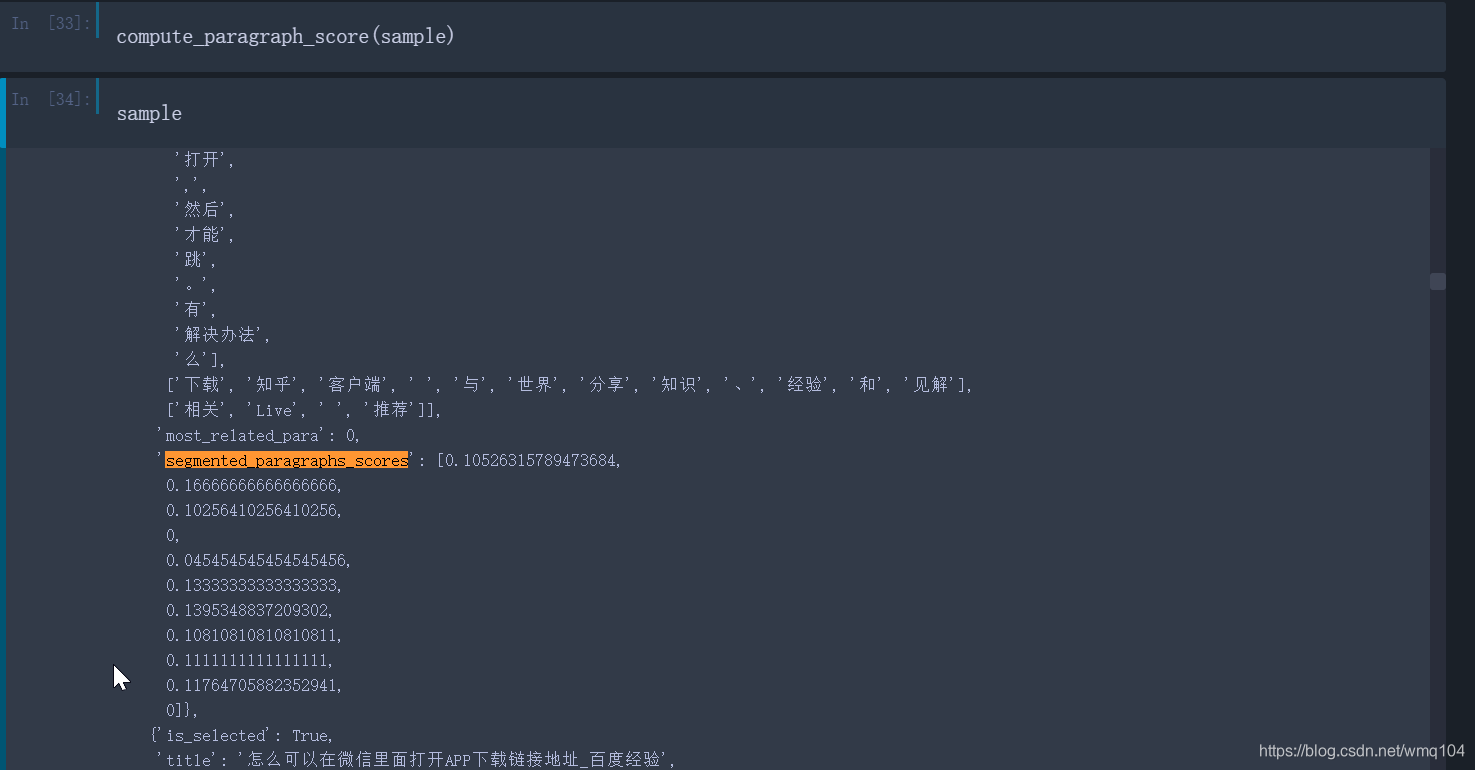

在jupyter notebook中调用结果如下图所示:

由图可见在样例的每个文档中增加了segmented_paragraphs_scores字段,它是一个列表,列表元素为该文档每个段落与问题的f1_score。
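compute_paragraph_score relies on f1_score and metric_max_over_ground_truths, which this post does not list. A minimal re-implementation for illustration, assuming the usual token-overlap definition (precision and recall over the multiset of shared tokens, as in SQuAD-style evaluation); the actual DuReader helpers may differ in details such as handling non-list inputs.

```python
from collections import Counter
from typing import Callable, List


def f1_score(prediction: List[str], ground_truth: List[str]) -> float:
    """Token-level F1 between two token lists (illustrative sketch)."""
    # Counter & Counter keeps the minimum count of each shared token
    common = Counter(prediction) & Counter(ground_truth)
    num_same = sum(common.values())
    if num_same == 0:
        return 0.0
    precision = num_same / len(prediction)
    recall = num_same / len(ground_truth)
    return 2 * precision * recall / (precision + recall)


def metric_max_over_ground_truths(metric_fn: Callable[[List[str], List[str]], float],
                                  prediction: List[str],
                                  ground_truths: List[List[str]]) -> float:
    """Best score of the prediction against any reference (illustrative sketch)."""
    return max(metric_fn(prediction, gt) for gt in ground_truths)
```

Because compute_paragraph_score passes the paragraph as the "prediction" and the question as the only reference, precision is divided by the paragraph length: a 10-token paragraph containing one question word scores about 0.18 against a one-word question, while a paragraph consisting of exactly that word scores 1.0.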

paragraph_selection

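For each document, paragraph_selection removes duplicated paragraphs and cuts the document down toward the predefined maximum length, keeping the paragraphs that carry as much information as possible.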
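The first step, removing duplicates, is delegated to dup_remove, which this post does not list. Judging from how paragraph_selection uses its output, it has to drop repeated paragraphs, keep segmented_paragraphs_scores aligned, store paragraphs_length, and remap most_related_para when paragraphs before it disappear. The function below, dedup_doc, is a hypothetical stand-in that does those four things; it is not the DuReader implementation.

```python
from typing import Any, Dict


def dedup_doc(doc: Dict[str, Any]) -> bool:
    """Hypothetical sketch: drop repeated paragraphs in place; return True if any were removed."""
    para_id = doc.get("most_related_para")
    first_seen = {}  # paragraph text -> index in the deduplicated list
    keep_paras, keep_scores = [], []
    new_para_id = para_id
    for idx, (tokens, score) in enumerate(zip(doc["segmented_paragraphs"],
                                              doc["segmented_paragraphs_scores"])):
        text = "".join(tokens)
        if text in first_seen:
            # the answer paragraph is a repeat: point at its first copy instead
            if idx == para_id:
                new_para_id = first_seen[text]
            continue
        first_seen[text] = len(keep_paras)
        if idx == para_id:
            new_para_id = len(keep_paras)
        keep_paras.append(tokens)
        keep_scores.append(score)
    removed = len(keep_paras) != len(doc["segmented_paragraphs"])
    doc["segmented_paragraphs"] = keep_paras
    doc["segmented_paragraphs_scores"] = keep_scores
    # paragraph_selection reads these lengths right after deduplication
    doc["paragraphs_length"] = [len(p) for p in keep_paras]
    if para_id is not None:
        doc["most_related_para"] = new_para_id
    return removed
```

Remapping the index matters because paragraph_selection later uses most_related_para to look up doc['paragraphs_length'][para_id] and to decide which paragraphs precede the answer.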

```python
import copy

# dup_remove (not shown in this post) is another function from the same DuReader source file


def paragraph_selection(sample, mode):
    """
    For each document, select the paragraphs that carry as much information as possible.
    Args:
        sample: one sample.
        mode: string of ("train", "dev", "test"), the dataset being processed.
    Returns:
        None
    Raises:
        None
    """
    # maximum paragraph length
    MAX_P_LEN = 500
    # splitter token
    splitter = u'<splitter>'
    # number of most relevant paragraphs to keep
    topN = 3
    doc_id = None
    # if the sample records an answer document, remember its id
    if 'answer_docs' in sample and len(sample['answer_docs']) > 0:
        doc_id = sample['answer_docs'][0]
        if doc_id >= len(sample['documents']):
            # data error: answer doc id >= number of documents; dataset.py filters this sample out
            return
    # iterate over documents
    for d_idx, doc in enumerate(sample['documents']):
        if 'segmented_paragraphs_scores' not in doc:
            continue
        # remove duplicated paragraphs; also stores each paragraph's length in doc['paragraphs_length']
        status = dup_remove(doc)
        segmented_title = doc["segmented_title"]
        title_len = len(segmented_title)
        para_id = None
        if doc_id is not None:
            para_id = sample['documents'][doc_id]['most_related_para']
        total_len = title_len + sum(doc['paragraphs_length'])
        # one splitter in front of every paragraph
        para_num = len(doc["segmented_paragraphs"])
        total_len += para_num
        # the whole document fits: drop nothing, just insert splitters and shift the answer span
        if total_len <= MAX_P_LEN:
            incre_len = title_len
            total_segmented_content = copy.deepcopy(segmented_title)
            # offset by which the answer span moves
            for p_idx, segmented_para in enumerate(doc["segmented_paragraphs"]):
                if doc_id == d_idx and para_id > p_idx:
                    incre_len += len([splitter] + segmented_para)
                if doc_id == d_idx and para_id == p_idx:
                    incre_len += 1
                total_segmented_content += [splitter] + segmented_para
            # new answer span
            if doc_id == d_idx:
                answer_start = incre_len + sample['answer_spans'][0][0]
                answer_end = incre_len + sample['answer_spans'][0][1]
                sample['answer_spans'][0][0] = answer_start
                sample['answer_spans'][0][1] = answer_end
            # update the document fields
            doc["segmented_paragraphs"] = [total_segmented_content]
            doc["segmented_paragraphs_scores"] = [1.0]
            doc['paragraphs_length'] = [total_len]
            doc['paragraphs'] = [''.join(total_segmented_content)]
            doc['most_related_para'] = 0
            continue
        # the document is too long: find the ids of the top-N paragraphs
        para_infos = []
        for p_idx, (para_tokens, para_scores) in \
                enumerate(zip(doc['segmented_paragraphs'], doc['segmented_paragraphs_scores'])):
            para_infos.append((para_tokens, para_scores, len(para_tokens), p_idx))
        # highest score first; among equal scores, shorter paragraphs first
        para_infos.sort(key=lambda x: (-x[1], x[2]))
        topN_idx = []
        for para_info in para_infos[:topN]:
            topN_idx.append(para_info[-1])
        final_idx = []
        total_len = title_len
        # in train mode, keep the paragraph that contains the answer first
        if doc_id == d_idx:
            if mode == "train":
                final_idx.append(para_id)
                total_len = title_len + 1 + doc['paragraphs_length'][para_id]
        # add top-N paragraphs until the running length exceeds MAX_P_LEN
        for id in topN_idx:
            if total_len > MAX_P_LEN:
                break
            if doc_id == d_idx and id == para_id and mode == "train":
                continue
            total_len += 1 + doc['paragraphs_length'][id]
            final_idx.append(id)
        total_segmented_content = copy.deepcopy(segmented_title)
        # restore the original paragraph order
        final_idx.sort()
        incre_len = title_len
        # offset by which the answer span moves
        for id in final_idx:
            if doc_id == d_idx and id < para_id:
                incre_len += 1 + doc['paragraphs_length'][id]
            if doc_id == d_idx and id == para_id:
                incre_len += 1
            total_segmented_content += [splitter] + doc['segmented_paragraphs'][id]
        # new answer span
        if doc_id == d_idx:
            answer_start = incre_len + sample['answer_spans'][0][0]
            answer_end = incre_len + sample['answer_spans'][0][1]
            sample['answer_spans'][0][0] = answer_start
            sample['answer_spans'][0][1] = answer_end
        # update the document fields
        doc["segmented_paragraphs"] = [total_segmented_content]
        doc["segmented_paragraphs_scores"] = [1.0]
        doc['paragraphs_length'] = [total_len]
        doc['paragraphs'] = [''.join(total_segmented_content)]
        doc['most_related_para'] = 0
```

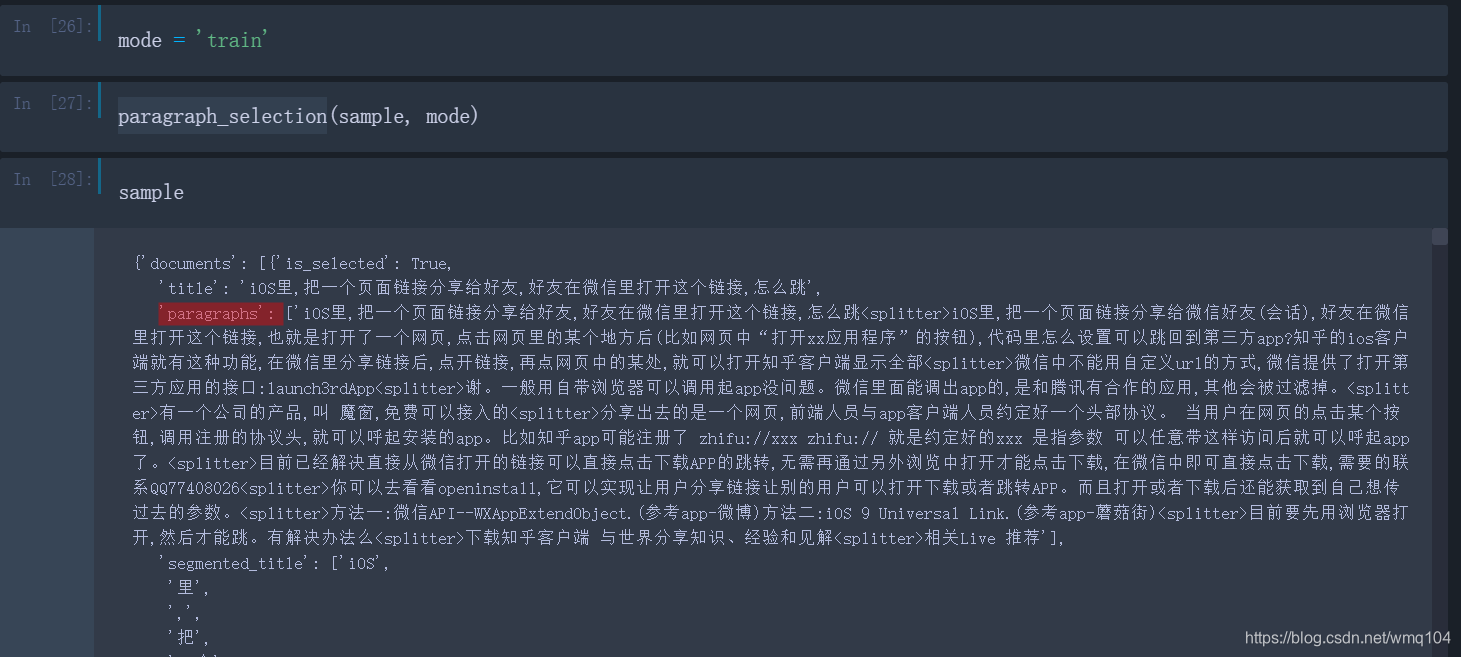

在jupyter notebook中调用结果如下图所示:

由图可见,每个文档的长度都被大大缩短至预定义最大长度之下,去除了文档中重复的段落,所有段落都通过分隔符拼接了起来形成一个整的段落,为后续数据处理提供了方便。
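The least obvious part of paragraph_selection is the incre_len bookkeeping. The code treats answer_spans as token positions inside the answer paragraph (that is why it simply adds an offset), and the offset is the title length, plus one splitter and the full length of every kept paragraph before the answer paragraph, plus one for the splitter directly in front of it. The hypothetical helper shift_span reproduces that arithmetic on toy data so it can be checked in isolation:

```python
from typing import List, Tuple


def shift_span(title: List[str], paras: List[List[str]], kept: List[int],
               answer_para: int, span: Tuple[int, int]) -> Tuple[int, int]:
    """Map a span inside paras[answer_para] to the concatenated title + splitter + kept paragraphs."""
    offset = len(title)
    for idx in sorted(kept):
        if idx < answer_para:
            offset += 1 + len(paras[idx])  # splitter + whole paragraph before the answer
        elif idx == answer_para:
            offset += 1                    # splitter right before the answer paragraph
    return span[0] + offset, span[1] + offset
```

With title ["T1", "T2"], paragraphs [["a", "b", "c"], ["x"], ["p", "q", "r", "s"]], kept paragraphs 0 and 2, and span (1, 2) inside paragraph 2, the offset is 2 + (1 + 3) + 1 = 7, giving (8, 9), which still points at "q" and "r" in the joined token list. The shift is only meaningful when the answer paragraph is among those kept; in train mode paragraph_selection guarantees this by adding para_id first.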

Source: CSDN

Author: 青萍之默

Link: https://blog.csdn.net/wmq104/article/details/104241479