I. Set up the cluster nodes

Prepare five nodes:

192.168.154.150

192.168.154.151

192.168.154.152

192.168.154.153

192.168.154.155

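For convenience, only the first three nodes are actually set up here.

Building a RabbitMQ cluster in mirrored mode

1) Stop the service on every node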

rabbitmqctl stop

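2) Synchronize the cookie file

Pick any one of nodes 150, 151, and 152 as the Master (150 is chosen here). Its cookie file then has to be copied to 151 and 152. On 150, go to /var/lib/rabbitmq and change the permissions of /var/lib/rabbitmq/.erlang.cookie to 777, copy .erlang.cookie to each of the other nodes, and finally restore the permissions of every cookie file to 400.

scp .erlang.cookie 192.168.154.151:/var/lib/rabbitmq/

scp .erlang.cookie 192.168.154.152:/var/lib/rabbitmq/

Note: scp is a Linux command that copies files between hosts securely over an SSH login.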
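The copy-and-restore steps can be scripted. The following is a minimal sketch, assuming SSH access from the first node to the other two. The `sync_cookie_cmds` helper and the `NODES` list are hypothetical names introduced for illustration, and the function only prints the commands so they can be reviewed before anything runs:

```shell
#!/bin/bash
# Hypothetical helper: print the commands that distribute the Erlang cookie.
# NODES lists the nodes that receive the cookie (assumed from the IPs above).
COOKIE=/var/lib/rabbitmq/.erlang.cookie
NODES="192.168.154.151 192.168.154.152"

sync_cookie_cmds() {
    local node
    for node in $NODES; do
        # Copy the cookie, then restrict it to owner read-only on the target.
        echo "scp $COOKIE $node:$COOKIE"
        echo "ssh $node chmod 400 $COOKIE"
    done
}

sync_cookie_cmds
```

Piping the output to a shell (`sync_cookie_cmds | sh`) would execute the printed commands.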

3) Start the cluster nodes

rabbitmq-server -detached

lsof -i:5672

4) Join the slave nodes to the cluster

Node 151 (dongge02)

rabbitmqctl stop_app

rabbitmqctl join_cluster [--ram] rabbit@dongge01

rabbitmqctl start_app

lsof -i:5672

Node 152 (dongge03)

rabbitmqctl stop_app

rabbitmqctl join_cluster rabbit@dongge01

rabbitmqctl start_app

lsof -i:5672

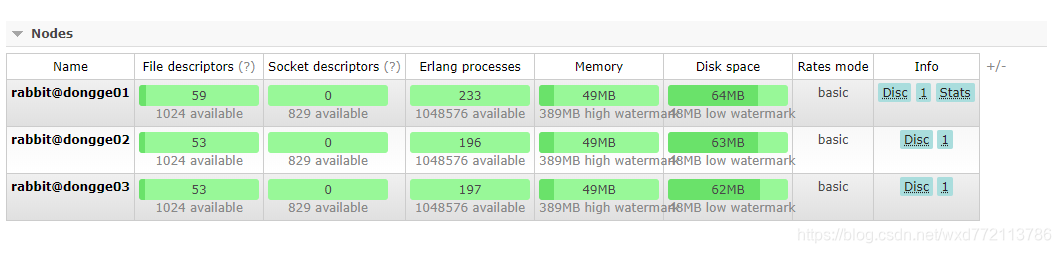
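The same three commands run on every joining node, so they can be wrapped in one function. This is a rough sketch rather than a tested deployment script: `run`, `join_node`, and the `DRY_RUN` switch are hypothetical names, and the seed node name `rabbit@dongge01` is assumed from the hostnames used in this article. With `DRY_RUN=1` the commands are only printed.

```shell
#!/bin/bash
# Hypothetical wrapper: with DRY_RUN=1 (the default here) commands are only printed.
DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# join_node SEED_NODE: stop the app, join the seed node's cluster, restart the app.
join_node() {
    local seed=$1
    run rabbitmqctl stop_app &&
    run rabbitmqctl join_cluster "$seed" &&
    run rabbitmqctl start_app
}

join_node rabbit@dongge01
```

Chaining with `&&` stops the sequence at the first failing step, so the app is not restarted after a failed join.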

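5) Open the management console of any node to view the cluster information

URL: http://192.168.154.150:15672/

6) Configure mirrored queues

Set a mirrored-queue policy on any one node so that queues are synchronized across all nodes.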

rabbitmqctl set_policy ha-all "^" '{"ha-mode":"all"}'
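The policy definition is a small JSON document. Besides `all`, classic queue mirroring also accepts `"ha-mode":"exactly"` with a replica count in `ha-params`. The sketch below only builds that JSON; `ha_definition` and the policy name `ha-two` are hypothetical names used for illustration.

```shell
#!/bin/bash
# ha_definition MODE [COUNT]: print a mirroring policy definition as JSON.
# MODE is "all" or "exactly"; COUNT is required for "exactly".
ha_definition() {
    local mode=$1 count=$2
    case $mode in
        all)     printf '{"ha-mode":"all"}\n' ;;
        exactly) printf '{"ha-mode":"exactly","ha-params":%d}\n' "$count" ;;
        *)       echo "unsupported ha-mode: $mode" >&2; return 1 ;;
    esac
}

ha_definition all
ha_definition exactly 2
# Example use (needs a running node):
#   rabbitmqctl set_policy ha-two "^" "$(ha_definition exactly 2)"
```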

Other commands

To remove a node from the cluster: rabbitmqctl forget_cluster_node rabbit@dongge01

To change the cluster name (it defaults to the host name of the first node): rabbitmqctl set_cluster_name newname

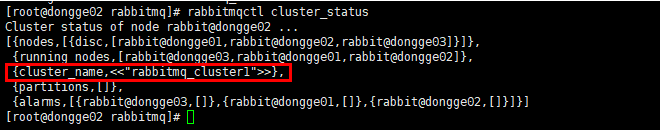

To view the cluster status: rabbitmqctl cluster_status

Possible problems

rabbitmqctl join_cluster rabbit@dongge01

Clustering node rabbit@dongge02 with rabbit@dongge01 ...

Error: unable to connect to nodes [rabbit@dongge01]: nodedown

DIAGNOSTICS

===========

attempted to contact: [rabbit@dongge01]

rabbit@dongge01:

* unable to connect to epmd (port 4369) on dongge01: nxdomain (non-existing domain)

current node details:

- node name: 'rabbitmq-cli-83@dongge02'

- home dir: /var/lib/rabbitmq

- cookie hash: aC0Gq/wYO5LTAlBUZWA/CQ==

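Solution

Cluster nodes must be able to reach one another, so the hosts file on every node should contain an entry for each node in the cluster so that every host name resolves. The entries map an IP address to a host name, without the rabbit@ prefix.

vim /etc/hosts

192.168.154.150 dongge01

192.168.154.151 dongge02

192.168.154.152 dongge03

Then restart RabbitMQ on every node.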
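Before restarting, it can help to confirm that every node name really has an entry. The following is a small sketch, not part of the original procedure; `missing_hosts` is a hypothetical helper that prints each name it cannot find in the given hosts file.

```shell
#!/bin/bash
# missing_hosts FILE NAME...: print every NAME that has no entry in FILE.
missing_hosts() {
    local file=$1 name
    shift
    for name in "$@"; do
        # A name counts as present when it appears after the address
        # on a line that is not a comment.
        awk -v n="$name" '
            /^[[:space:]]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }
        ' "$file" || echo "$name"
    done
}

missing_hosts /etc/hosts dongge01 dongge02 dongge03
```

An empty result means all three names are present; any name that is printed would trigger the nxdomain error shown above.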

II. Set up the load balancer: HAProxy

Install the dependencies

yum install gcc vim wget

Download HAProxy

wget http://www.haproxy.org/download/1.6/src/haproxy-1.6.5.tar.gz

Extract it to the target directory

tar -zxvf haproxy-1.6.5.tar.gz -C /usr/local

Enter the directory, then compile and install

cd /usr/local/haproxy-1.6.5

make TARGET=linux2628 PREFIX=/usr/local/haproxy

make install PREFIX=/usr/local/haproxy

mkdir /etc/haproxy

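Create the haproxy group and user (note that the configuration below runs HAProxy as uid/gid 99, not as this user)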

groupadd -r -g 149 haproxy

useradd -g haproxy -r -s /sbin/nologin -u 149 haproxy

Create the configuration file

touch /etc/haproxy/haproxy.cfg

HAProxy configuration on node 151

global

log 127.0.0.1 local0 info

maxconn 5120

chroot /usr/local/haproxy

uid 99

gid 99

daemon

quiet

nbproc 20

pidfile /var/run/haproxy.pid

defaults

log global

# mode

mode tcp

# if you set mode to http, you must change tcplog to httplog

option tcplog

option dontlognull

retries 3

option redispatch

maxconn 2000

contimeout 5s

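## client-side idle timeout of 60 s, after which HAProxy drops the connection and the client has to reconnect

clitimeout 60s

## server-side timeout of 15 s, after which HAProxy drops the connection and it has to be re-established

srvtimeout 15s

# front-end IP for consumers and producers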

listen rabbitmq_cluster

bind 0.0.0.0:5672

# TCP mode

mode tcp

#balance url_param userid

#balance url_param session_id check_post 64

#balance hdr(User-Agent)

#balance hdr(host)

#balance hdr(Host) use_domain_only

#balance rdp-cookie

#balance leastconn

#balance source //ip

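# simple round robin

balance roundrobin

# RabbitMQ cluster nodes: inter 5000 runs a health check every five seconds; 2 consecutive successes (rise) mark a server as available, 2 consecutive failures (fall) mark it as unavailable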

server dongge01 192.168.154.150:5672 check inter 5000 rise 2 fall 2

server dongge02 192.168.154.151:5672 check inter 5000 rise 2 fall 2

server dongge03 192.168.154.152:5672 check inter 5000 rise 2 fall 2

# HAProxy web monitoring page for viewing statistics

listen stats

bind 192.168.154.151:8100

mode http

option httplog

stats enable

# the HAProxy monitoring page is served at http://192.168.154.151:8100/rabbitmq-stats

stats uri /rabbitmq-stats

stats refresh 5s
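As a rough worked example of the `check inter 5000 rise 2 fall 2` settings: a server changes state only after the configured number of consecutive checks, so the delay is approximately the check interval multiplied by that count. The helper name `check_window_ms` is hypothetical, and the figure ignores the time each individual check takes.

```shell
#!/bin/bash
# check_window_ms INTER_MS COUNT: time spanned by COUNT consecutive checks.
check_window_ms() {
    echo $(( $1 * $2 ))
}

echo "mark down after about $(check_window_ms 5000 2) ms"   # inter 5000, fall 2
echo "mark up after about $(check_window_ms 5000 2) ms"     # inter 5000, rise 2
```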

HAProxy configuration on node 152

global

log 127.0.0.1 local0 info

maxconn 5120

chroot /usr/local/haproxy

uid 99

gid 99

daemon

quiet

nbproc 20

pidfile /var/run/haproxy.pid

defaults

log global

# mode

mode tcp

# if you set mode to http, you must change tcplog to httplog

option tcplog

option dontlognull

retries 3

option redispatch

maxconn 2000

contimeout 5s

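## client-side idle timeout of 60 s, after which HAProxy drops the connection and the client has to reconnect

clitimeout 60s

## server-side timeout of 15 s, after which HAProxy drops the connection and it has to be re-established

srvtimeout 15s

# front-end IP for consumers and producers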

listen rabbitmq_cluster

bind 0.0.0.0:5672

# TCP mode

mode tcp

#balance url_param userid

#balance url_param session_id check_post 64

#balance hdr(User-Agent)

#balance hdr(host)

#balance hdr(Host) use_domain_only

#balance rdp-cookie

#balance leastconn

#balance source //ip

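# simple round robin

balance roundrobin

# RabbitMQ cluster nodes: inter 5000 runs a health check every five seconds; 2 consecutive successes (rise) mark a server as available, 2 consecutive failures (fall) mark it as unavailable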

server dongge01 192.168.154.150:5672 check inter 5000 rise 2 fall 2

server dongge02 192.168.154.151:5672 check inter 5000 rise 2 fall 2

server dongge03 192.168.154.152:5672 check inter 5000 rise 2 fall 2

# HAProxy web monitoring page for viewing statistics

listen stats

bind 192.168.154.152:8100

mode http

option httplog

stats enable

# the HAProxy monitoring page is served at http://192.168.154.152:8100/rabbitmq-stats

stats uri /rabbitmq-stats

stats refresh 5s

Start HAProxy

/usr/local/haproxy/sbin/haproxy -f /etc/haproxy/haproxy.cfg

Note: -f specifies the configuration file, that is, the file at the given path.
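HAProxy also has a check mode, `-c`, which parses the configuration and exits without starting the proxy. A minimal sketch that validates the file before starting is shown below; `start_haproxy`, `HAPROXY_BIN`, and `HAPROXY_CFG` are hypothetical names, and the default paths are assumed from the installation steps above.

```shell
#!/bin/bash
# Paths assumed from the installation steps above; override them if yours differ.
HAPROXY_BIN=${HAPROXY_BIN:-/usr/local/haproxy/sbin/haproxy}
HAPROXY_CFG=${HAPROXY_CFG:-/etc/haproxy/haproxy.cfg}

# start_haproxy: validate the configuration with -c, start only if it passes.
start_haproxy() {
    if ! "$HAPROXY_BIN" -c -f "$HAPROXY_CFG"; then
        echo "configuration check failed: $HAPROXY_CFG" >&2
        return 1
    fi
    "$HAPROXY_BIN" -f "$HAPROXY_CFG"
}

# Usage: start_haproxy
```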

Check the HAProxy process

ps -ef | grep haproxy

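Note: HAProxy is best deployed on separate servers. It can also run on the RabbitMQ node servers, but it then has to bind a different port, such as 5671, because the node service already occupies port 5672. Edit the bind line with vim /etc/haproxy/haproxy.cfg.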

https://blog.csdn.net/hanzhuang12345/article/details/94779142

Access HAProxy

Monitor the RabbitMQ nodes at: http://192.168.154.151:8100/rabbitmq-stats
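The statistics page can also be read by a script: appending `;csv` to the stats URI returns the same data as CSV. The sketch below lists the servers reported as DOWN; `down_servers` is a hypothetical helper, and it locates the status column by name from the header line instead of assuming a fixed position.

```shell
#!/bin/bash
# down_servers: read HAProxy stats CSV on stdin and print "proxy/server" for
# every row whose status column starts with DOWN. The column is located by
# name from the header line, which starts with "# pxname,svname,...".
down_servers() {
    awk -F, '
        NR == 1 {
            sub(/^# /, "", $1)
            for (i = 1; i <= NF; i++) if ($i == "status") col = i
            next
        }
        col && $col ~ /^DOWN/ { print $1 "/" $2 }
    '
}

# Usage (URL assumed from the stats settings above):
#   curl -s 'http://192.168.154.151:8100/rabbitmq-stats;csv' | down_servers
```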

III. Set up the high-availability component: Keepalived

Install the required packages

yum install -y openssl openssl-devel

Download

wget http://www.keepalived.org/software/keepalived-1.2.18.tar.gz

Extract, compile, and install

tar -zxvf keepalived-1.2.18.tar.gz -C /usr/local/

cd /usr/local/keepalived-1.2.18/ && ./configure --prefix=/usr/local/keepalived

make && make install

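// Install keepalived as a Linux system service. Because the default installation path (/usr/local) was not used, a few adjustments are needed after installation.

// First create the directory and copy the keepalived configuration file into it: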

mkdir /etc/keepalived

cp /usr/local/keepalived/etc/keepalived/keepalived.conf /etc/keepalived/

// Then copy the keepalived script files:

cp /usr/local/keepalived/etc/rc.d/init.d/keepalived /etc/init.d/

cp /usr/local/keepalived/etc/sysconfig/keepalived /etc/sysconfig/

// Remove any existing symlinks and recreate them

rm -f /usr/sbin/keepalived

ln -s /usr/local/keepalived/sbin/keepalived /usr/sbin/

rm -f /sbin/keepalived

ln -s /usr/local/keepalived/sbin/keepalived /sbin/

// Optionally enable start on boot with chkconfig keepalived on. This completes the installation.

chkconfig keepalived on

Keepalived configuration

Edit the configuration file in the newly created keepalived directory

vim /etc/keepalived/keepalived.conf

Node 151

! Configuration File for keepalived

global_defs {

router_id dongge02 ## string identifying the node, usually the hostname

}

vrrp_script chk_haproxy {

script "/etc/keepalived/haproxy_check.sh" ## location of the check script

interval 2 ## check interval in seconds

weight -20 ## lower the priority by 20 when the script fails

}

vrrp_instance VI_1 {

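state MASTER ## MASTER on the primary node, BACKUP on the backup node

interface ens33 ## network interface (NIC) the virtual IP binds to; the same interface that holds this machine's IP address

virtual_router_id 151 ## virtual router ID (must be identical on the primary and backup nodes)

mcast_src_ip 192.168.154.151 ## this machine's IP address

priority 100 ## priority (a value from 1 to 254)

nopreempt ## only honored when the initial state is BACKUP

advert_int 1 ## advertisement interval in seconds; must be identical on both nodes, default 1 s

authentication { ## authentication settings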

auth_type PASS

auth_pass wxd

}

track_script {

chk_haproxy

}

virtual_ipaddress {

192.168.154.70 ## virtual IP; more than one can be listed

}

}

Node 152

! Configuration File for keepalived

global_defs {

router_id dongge03 ## string identifying the node, usually the hostname

}

vrrp_script chk_haproxy {

script "/etc/keepalived/haproxy_check.sh" ## location of the check script

interval 2 ## check interval in seconds

weight -20 ## lower the priority by 20 when the script fails

}

vrrp_instance VI_1 {

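state BACKUP ## MASTER on the primary node, BACKUP on the backup node

interface ens33 ## network interface (NIC) the virtual IP binds to; the same interface that holds this machine's IP address

virtual_router_id 151 ## virtual router ID (must be identical on the primary and backup nodes)

mcast_src_ip 192.168.154.152 ## this machine's IP address

priority 90 ## priority (a value from 1 to 254)

nopreempt

advert_int 1 ## advertisement interval in seconds; must be identical on both nodes, default 1 s

authentication { ## authentication settings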

auth_type PASS

auth_pass wxd

}

track_script {

chk_haproxy

}

virtual_ipaddress {

192.168.154.70 ## virtual IP; more than one can be listed

}

}

Write the haproxy_check.sh script

Create the file at /etc/keepalived/haproxy_check.sh (the content is identical on nodes 151 and 152)

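#!/bin/bash

# Count the running haproxy processes.

COUNT=$(ps -C haproxy --no-header | wc -l)

if [ "$COUNT" -eq 0 ]; then

# HAProxy is down: try to start it again.

/usr/local/haproxy/sbin/haproxy -f /etc/haproxy/haproxy.cfg

sleep 2

# Still down: stop keepalived so that the virtual IP moves to the other node.

if [ "$(ps -C haproxy --no-header | wc -l)" -eq 0 ]; then

killall keepalived

fi

fi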

Make haproxy_check.sh executable

chmod +x /etc/keepalived/haproxy_check.sh

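Start keepalived

Once HAProxy is running on both nodes, the keepalived service can be started.

// check the HAProxy status

ps -ef | grep haproxy

// start keepalived on both machines (the service script also accepts stop, status, and restart)

service keepalived start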

ps -ef | grep keepalived

Copy several files to a target directory

cp haproxy_check.sh keepalived.conf /etc/keepalived

Run ip a to list all IP addresses, including the virtual IP configured through keepalived (at this point the virtual IP is held by the master node, 151).
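To check for the virtual IP from a script instead of reading the listing by eye, the one-line output format of `ip` can be filtered. This is a sketch under the assumption that the address appears in the fourth field of `ip -o -4 addr show`; `holds_vip` is a hypothetical helper name.

```shell
#!/bin/bash
# holds_vip VIP: read `ip -o -4 addr show` output on stdin and succeed
# when VIP is assigned to some interface.
holds_vip() {
    local vip=$1
    # Field 4 of the one-line format is ADDRESS/PREFIX.
    awk -v vip="$vip" '
        { split($4, a, "/"); if (a[1] == vip) found = 1 }
        END { exit !found }
    '
}

# Usage: ip -o -4 addr show | holds_vip 192.168.154.70 && echo "VIP is here"
```

Running it on both nodes after stopping keepalived on 151 is a simple way to confirm that the address has moved to 152.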

Source: CSDN

Author: 人生需要一次说走就走的旅行

Link: https://blog.csdn.net/wxd772113786/article/details/104133163