For the underlying theory, see:

A discussion of AdaBoost principles (Part 1)

https://blog.csdn.net/sinat_29957455/article/details/79810188

A discussion of AdaBoost principles (Part 2)

https://blog.csdn.net/baiduforum/article/details/6721749

A discussion of AdaBoost principles (Part 3)

https://blog.csdn.net/qq_38150441/article/details/80476242

import numpy as np

from sklearn.ensemble import AdaBoostClassifier

from sklearn import tree

import matplotlib.pyplot as plt

%matplotlib inline

X = np.arange(10).reshape(-1,1)

y = np.array([1,1,1,-1,-1,-1,1,1,1,-1])

display(X,y)

#array([[0],[1],[2],[3],[4],[5],[6],[7],[8],[9]])

#array([ 1, 1, 1, -1, -1, -1, 1, 1, 1, -1])

ada = AdaBoostClassifier(n_estimators=3,algorithm='SAMME')

ada.fit(X,y)

#AdaBoostClassifier(algorithm='SAMME', base_estimator=None, learning_rate=1.0,n_estimators=3, random_state=None)

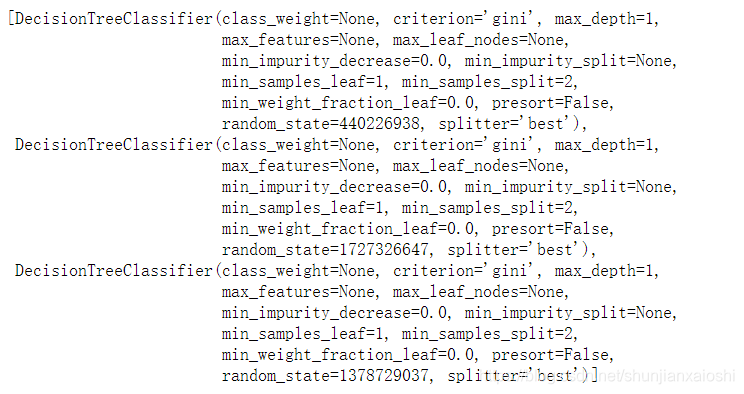

ada.estimators_

plt.figure(figsize=(9,6))

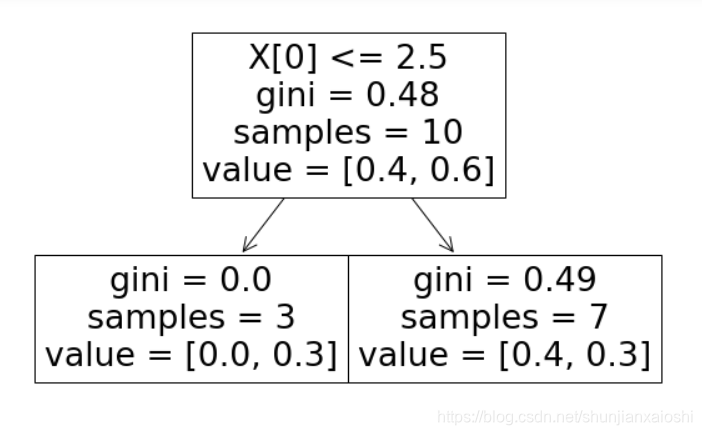

_ = tree.plot_tree(ada[0])

y_ = ada[0].predict(X)

y_

#array([ 1, 1, 1, -1, -1, -1, -1, -1, -1, -1])

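# Compared with the true labels, the first tree misclassifies three samples (x = 6, 7, 8)

# Error rate: every sample starts with weight 0.1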

e1 = np.round(0.1*(y != y_).sum(),4)

e1

#0.3
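The error rate above is a special case of the weighted error, with all ten samples weighted 0.1. A minimal sketch of the general form follows; the helper name `weighted_error` is hypothetical, and the prediction array is copied from the output of the first tree shown earlier.

```python
import numpy as np

# Labels and first-tree predictions, copied from the outputs shown above.
y_true = np.array([1, 1, 1, -1, -1, -1, 1, 1, 1, -1])
y_pred = np.array([1, 1, 1, -1, -1, -1, -1, -1, -1, -1])

def weighted_error(weights, y_true, y_pred):
    """Sum of the weights of the misclassified samples (hypothetical helper)."""
    return float(np.sum(weights * (y_true != y_pred)))

# Round 1: uniform weights, 1/N each.
w1 = np.full(len(y_true), 1.0 / len(y_true))
e1 = weighted_error(w1, y_true, y_pred)
print(round(e1, 4))  # 0.3
```

Writing the error this way means the same function can be reused in later rounds, once the weights are no longer uniform.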

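# Compute the weight of the first tree

# In a random forest, every tree has the same weight

# In AdaBoost, each tree has a different weight, determined by its error rate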

a1 = np.round(1/2*np.log((1-e1)/e1),4)

a1

#0.4236
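The tree weight depends only on the error rate, so it can be wrapped in a small function. This is a sketch; the name `estimator_weight` is an assumption for illustration and is not part of scikit-learn.

```python
import math

def estimator_weight(error):
    """Return 0.5 * ln((1 - e) / e) for a weighted error rate e in (0, 1)."""
    if not 0.0 < error < 1.0:
        raise ValueError("error must lie strictly between 0 and 1")
    return 0.5 * math.log((1.0 - error) / error)

a1 = estimator_weight(0.3)
print(round(a1, 4))  # 0.4236

# A learner no better than chance (error 0.5) gets weight 0,
# and the weight grows as the error shrinks.
print(estimator_weight(0.5))  # 0.0
```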

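# Update the sample weights: correctly predicted samples (y*y_ = 1) are scaled down, misclassified samples (y*y_ = -1) are scaled up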

w2 = 0.1*np.e**(-a1*y*y_)

w2 = w2/w2.sum()

w2 = w2.round(4)

w2

#array([0.0714, 0.0714, 0.0714, 0.0714, 0.0714, 0.0714, 0.1667, 0.1667,0.1667, 0.0714])
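The update and normalization steps can be combined into one reusable function. A minimal sketch, with `update_weights` as a hypothetical helper name and the arrays copied from the outputs above:

```python
import numpy as np

def update_weights(weights, alpha, y_true, y_pred):
    """Scale each weight by exp(-alpha * y * G(x)), then renormalize to sum to 1."""
    new_w = weights * np.exp(-alpha * y_true * y_pred)
    return new_w / new_w.sum()

y_true = np.array([1, 1, 1, -1, -1, -1, 1, 1, 1, -1])
y_pred = np.array([1, 1, 1, -1, -1, -1, -1, -1, -1, -1])  # first tree

w1 = np.full(10, 0.1)
w2 = update_weights(w1, 0.4236, y_true, y_pred)
print(w2.round(4))
```

After this step the three misclassified samples (x = 6, 7, 8) together carry half of the total weight, which is what forces the next tree to pay attention to them.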

# Classification function after the first round: f1(x) = a1*G1(x) = 0.4236*G1(x)
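For reference, the quantities computed so far can be written in a compact form for labels in {-1, +1}. The index notation ($w_{m,i}$ for the weight of sample $i$ in round $m$, $Z_m$ for the normalizer) is introduced here for illustration and does not appear in the code.

```latex
\begin{aligned}
e_m &= \sum_{i=1}^{N} w_{m,i}\, \mathbb{1}\!\left[G_m(x_i) \neq y_i\right], \\
\alpha_m &= \tfrac{1}{2} \ln \frac{1 - e_m}{e_m}, \\
w_{m+1,i} &= \frac{w_{m,i} \exp\!\left(-\alpha_m y_i G_m(x_i)\right)}{Z_m},
\qquad Z_m = \sum_{i=1}^{N} w_{m,i} \exp\!\left(-\alpha_m y_i G_m(x_i)\right), \\
f_m(x) &= f_{m-1}(x) + \alpha_m G_m(x), \qquad f_0(x) = 0.
\end{aligned}
```

Here `e1`, `a1`, and `w2` in the code correspond to $e_1$, $\alpha_1$, and $w_{2,i}$.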

X

#array([[0],[1],[2],[3],[4],[5],[6],[7],[8],[9]])

y

#array([ 1, 1, 1, -1, -1, -1, 1, 1, 1, -1])

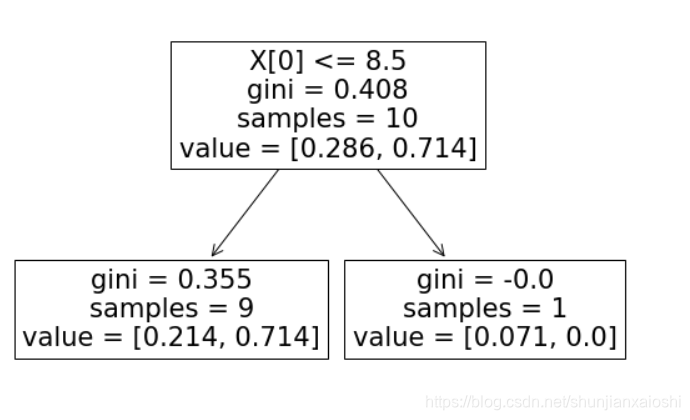

plt.figure(figsize=(9,6))

_ = tree.plot_tree(ada[1])

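# Compute the error rate: the sum of the w2 weights of the three samples the second tree misclassifies (each about 0.0714 in w2, hardcoded here as 0.0715)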

e2 = 0.0715*3

e2

#0.21449999999999997

a2 = np.round(1/2*np.log((1 - e2)/e2),4)

a2

#0.649
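Instead of hardcoding the error, it can be derived from the weights and the predictions. The sketch below assumes the second tree predicts +1 for x = 0 through 8 and -1 for x = 9; that array is inferred from the `y_predict` values printed near the end of the post, not from a printed output of `ada[1].predict(X)`.

```python
import numpy as np

y = np.array([1, 1, 1, -1, -1, -1, 1, 1, 1, -1])

# Rounded weights after the first round, copied from the w2 output above.
w2 = np.array([0.0714] * 6 + [0.1667] * 3 + [0.0714])

# Assumed predictions of the second tree (inferred, see the note above).
g2_pred = np.array([1] * 9 + [-1])

# Weighted error: only x = 3, 4, 5 are misclassified.
e2 = float(np.sum(w2 * (y != g2_pred)))
a2 = 0.5 * np.log((1 - e2) / e2)
print(e2, a2)
```

The result is close to, but not identical to, the hardcoded `0.0715*3`, so the fourth decimal of `a2` depends on how the weights were rounded.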

y_ = ada[1].predict(X)

w3 = w2*np.e**(-a2*y*y_)

w3 = w3/w3.sum()

w3 = w3.round(4)

w3

#array([0.0455, 0.0455, 0.0455, 0.1665, 0.1665, 0.1665, 0.1062, 0.1062,0.1062, 0.0455])

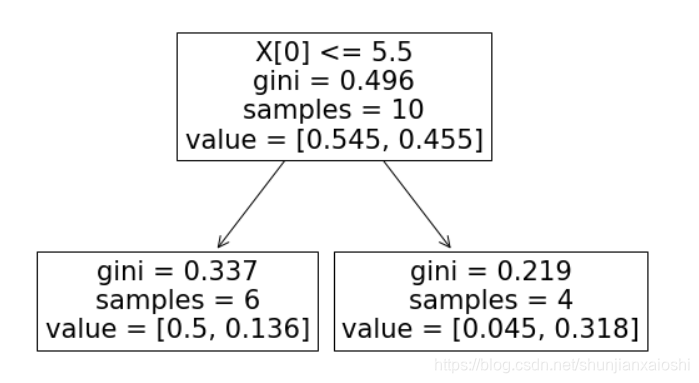

plt.figure(figsize=(9,6))

_ = tree.plot_tree(ada[2])

y_ = ada[2].predict(X)

y_

#array([-1, -1, -1, -1, -1, -1, 1, 1, 1, 1])

y

#array([ 1, 1, 1, -1, -1, -1, 1, 1, 1, -1])

e3 = (w3*(y_ != y)).sum()

a3 = 1/2*np.log((1 - e3)/e3)

a3

#0.7514278247629759

display(a1,a2,a3) # show the weights of the three trees

#0.4236

#0.649

#0.7514278247629759

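# Combine the weak classifiers into a strong classifier

# Weighted sum

In summary, substituting the values of a1, a2, and a3 computed above into G(x),

G(x) = sign[f3(x)] = sign[ a1 * G1(x) + a2 * G2(x) + a3 * G3(x) ],

where sign is the sign function (values greater than or equal to 0 map to 1, values below 0 map to -1), the final classifier is:

G(x) = sign[f3(x)] = sign[ 0.4236 G1(x) + 0.6490 G2(x) + 0.7514 G3(x) ].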
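One detail worth checking is the treatment of a score of exactly 0: `np.sign` returns 0 there, while the convention stated above maps it to 1. A minimal sketch of a helper that follows the stated convention (the name `sign_pm1` is hypothetical):

```python
import numpy as np

def sign_pm1(scores):
    """Map scores >= 0 to 1 and scores < 0 to -1."""
    return np.where(np.asarray(scores) >= 0, 1, -1)

scores = np.array([0.32, -0.53, 0.0])
print(np.sign(scores))   # the last entry stays 0
print(sign_pm1(scores))  # the last entry becomes 1
```

For the ten samples in this example no score is exactly 0, so both versions give the same labels.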

# Prediction of the full ensemble

ada.predict(X)

#array([ 1, 1, 1, -1, -1, -1, 1, 1, 1, -1])

y_predict = a1*ada[0].predict(X) + a2*ada[1].predict(X) + a3*ada[2].predict(X)

y_predict

#array([ 0.32117218, 0.32117218, 0.32117218, -0.52602782, -0.52602782,-0.52602782, 0.97682782, 0.97682782, 0.97682782, -0.32117218])

np.sign(y_predict).astype(int)  # np.int was removed in NumPy 1.24; use the built-in int

#array([ 1, 1, 1, -1, -1, -1, 1, 1, 1, -1])
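The whole walkthrough can be reproduced without scikit-learn. The sketch below is not how `AdaBoostClassifier` is implemented; it is a from-scratch illustration that assumes each weak learner is a one-split decision stump chosen by exhaustive search, and the function names (`fit_stump`, `adaboost_stumps`, `predict`) are hypothetical. It does not guard against a stump with zero error.

```python
import numpy as np

def fit_stump(x, y, w):
    """Return (threshold, polarity, error) of the stump with the lowest weighted error.

    The stump predicts `polarity` for x < threshold and `-polarity` otherwise.
    """
    best = None
    for thr in np.arange(x.min() - 0.5, x.max() + 1.0, 1.0):
        for polarity in (1, -1):
            pred = np.where(x < thr, polarity, -polarity)
            err = np.sum(w * (pred != y))
            if best is None or err < best[2] - 1e-12:
                best = (thr, polarity, err)
    return best

def adaboost_stumps(x, y, n_rounds=3):
    """Run n_rounds of AdaBoost and return a list of (threshold, polarity, alpha)."""
    w = np.full(len(x), 1.0 / len(x))
    model = []
    for _ in range(n_rounds):
        thr, polarity, err = fit_stump(x, y, w)
        alpha = 0.5 * np.log((1 - err) / err)
        pred = np.where(x < thr, polarity, -polarity)
        w = w * np.exp(-alpha * y * pred)  # up-weight the mistakes
        w = w / w.sum()                    # renormalize
        model.append((thr, polarity, alpha))
    return model

def predict(model, x):
    """Sign of the alpha-weighted vote, with a score of 0 mapped to 1."""
    score = sum(a * np.where(x < t, p, -p) for t, p, a in model)
    return np.where(score >= 0, 1, -1)

x = np.arange(10)
y = np.array([1, 1, 1, -1, -1, -1, 1, 1, 1, -1])
model = adaboost_stumps(x, y)
for thr, polarity, alpha in model:
    print(thr, polarity, round(float(alpha), 4))
print(predict(model, x))
```

Because this version never rounds intermediate values, the second and third tree weights differ slightly in the later decimals from the `a2` and `a3` computed above, but the final labels match `y`.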

Source: CSDN

Author: W流沙W

Link: https://blog.csdn.net/shunjianxaioshi/article/details/104116784