Paper reading - [First-generation GCN] Spectral Networks and Deep Locally Connected Networks on Graphs

Some background on graph convolution:

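Why CNNs perform so well on image and audio recognition:

ability to exploit the local translational invariance of signal classes over their domain.

That is, the “local translational invariance” of each class of signals over its domain.

As I understand it, the key point is local translational invariance; moreover, this property means that when signals are mapped from one domain to another, the classes remain separable.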

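Structural characteristics of CNNs:

- multiscale: (???)

- hierarchical: organized in successive layers

- local receptive fields: each unit is connected only to a local region (local connectivity)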

Because of the properties of the grid, CNNs have the following features:

- The translation structure ==> filters instead of generic linear maps ==> weight sharing

- The metric on the grid ==> compactly supported filters ==> filters much smaller than the input signal

- The multiscale dyadic clustering of the grid ==> stride convolutions and pooling ==> subsampling
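To make the first two grid properties concrete, here is a minimal sketch, assuming a 1-D ring of `n` samples and a hypothetical 3-tap filter (neither comes from the paper). It writes the filtering as an `n x n` matrix, compares the parameter count with a generic linear map, and checks that the operator commutes with a cyclic shift.

```python
import numpy as np

def circular_filter_matrix(kernel, n):
    """n x n matrix that applies a small filter at every position of a ring of n samples."""
    half = len(kernel) // 2
    M = np.zeros((n, n))
    for row in range(n):
        for offset, weight in enumerate(kernel):
            M[row, (row + offset - half) % n] = weight   # same weights in every row
    return M

n = 8
kernel = np.array([0.25, 0.5, 0.25])     # compact support: 3 taps (illustrative values)
M = circular_filter_matrix(kernel, n)

generic_params = n * n                    # a generic linear map stores every entry
shared_params = len(kernel)               # weight sharing stores the filter once
nonzeros_per_row = int(np.count_nonzero(M[0]))

x = np.arange(n, dtype=float)
# Filtering a shifted signal equals shifting the filtered signal.
shift_commutes = np.allclose(M @ np.roll(x, 1), np.roll(M @ x, 1))
print(generic_params, shared_params, nonzeros_per_row, shift_commutes)
```

Each row of `M` holds the same three weights at shifted positions, which is exactly what a general graph does not offer for free.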

Limitations of CNNs

Although the spatial convolutional structure can be exploited at several layers, typical CNN architectures do not assume any geometry in the “feature” dimension, resulting in 4-D tensors which are only convolutional along their spatial coordinates.

In other words, a 4-D tensor has the four dimensions X, Y, Z and feature; a typical CNN convolves only along the three spatial dimensions X, Y, Z (via 3D convolution) and not along the feature dimension.

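Scope of CNNs, and the relationship between GCN and CNN:

Scope of CNNs:

- Data on a Euclidean domain, which the paper calls a grid, i.e. data with a regular geometric structure.

- Translational equivariance/invariance with respect to this grid, i.e. the translation invariance that the grid structure provides.

Relationship between GCN and CNN:

generalization of CNNs to signals defined on more general domains.

That is, GCN generalizes CNN with respect to the domain, and it does so by extending the notion of convolution: from the Euclidean domain of CNNs (regular geometric structure) to more general domains (irregular geometric structure, i.e. graphs).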

two constructions of GCN

spatial construction: hierarchical clustering of the domain

Principle:

… extend properties (2) and (3) to general graphs, and use them to define “locally” connected and pooling layers, which require $O(n)$ parameters instead of $O(n^2)$.

Properties (2) and (3) are the grid metric and the multiscale clustering listed above. That is: build locally connected layers.

Implementing local connectivity:

neighborhoods ==> sparse “filters” (with receptive fields given by these neighborhoods, i.e. nonzero only inside the neighborhoods) ==> locally connected networks
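The chain from neighborhoods to sparse filters can be sketched as follows. This is only an illustration under stated assumptions: the neighborhood of a node is taken to be itself plus its `k` most strongly connected nodes, and the weight matrix `W` is a Gaussian kernel on random 2-D points; the helper `neighborhood_mask` is hypothetical and not taken from the paper.

```python
import numpy as np

def neighborhood_mask(W, k):
    """Boolean n x n mask: row i marks node i and the k nodes most strongly connected to it."""
    n = W.shape[0]
    mask = np.eye(n, dtype=bool)
    for i in range(n):
        nearest = np.argsort(-W[i])[:k]   # largest weights first
        mask[i, nearest] = True
    return mask

rng = np.random.default_rng(0)
n, k = 6, 2
pts = rng.random((n, 2))                                  # toy node positions (assumption)
dist = np.linalg.norm(pts[:, None] - pts[None, :], axis=-1)
W = np.exp(-dist ** 2)                                    # similarity weights
np.fill_diagonal(W, 0.0)

mask = neighborhood_mask(W, k)
F = rng.standard_normal((n, n)) * mask                    # sparse "filter": zero outside neighborhoods
x = rng.standard_normal(n)
y = F @ x                                                 # locally connected linear map
print(int(mask.sum()), "free parameters instead of", n * n)
```

With neighborhoods of bounded size the number of free parameters grows linearly in `n`, which is the $O(n)$ versus $O(n^2)$ saving quoted above.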

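Implementing subsampling:

The essence of subsampling:

input all the feature maps over a cluster, and output a single feature for that cluster.

That is: feature maps --=cluster==> a single feature, which is really a dimensionality reduction.

Subsampling in CNNs:

natural multiscale clustering of the grid ==> pooling and subsampling layers.

Subsampling in GCNs:

Subsampling in a GCN works on the same principle as in a CNN; both follow the essence described above.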

feature maps --=cluster==> a single feature

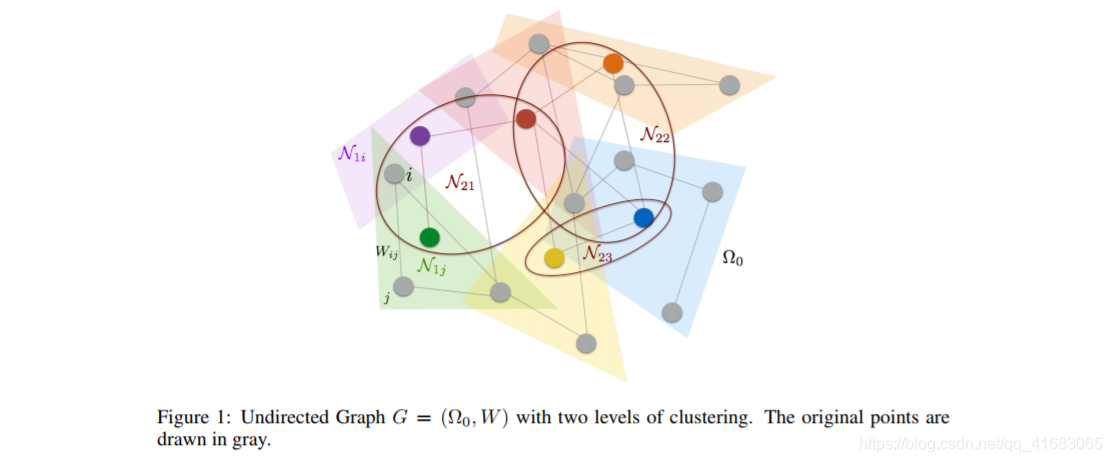

Note: the figure above illustrates subsampling.
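As a small illustration of "one output feature per cluster", the sketch below max-pools a signal over a hand-written partition of six nodes. The partition and the helper `pool_clusters` are hypothetical; the paper obtains its clusters from a multiscale clustering of the graph.

```python
def pool_clusters(x, clusters, reduce=max):
    """Return one value per cluster by reducing the node values inside it."""
    return [reduce(x[i] for i in members) for members in clusters]

x = [0.2, 1.5, -0.3, 0.9, 2.0, 0.1]        # signal on 6 nodes
clusters = [[0, 1], [2, 3], [4, 5]]         # hypothetical partition into 3 clusters
pooled = pool_clusters(x, clusters)
print(pooled)
```

The output has one entry per cluster, so the spatial size drops from 6 to 3.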

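Implementing deep locally connected networks:

Meaning of the variables:

- $\Omega_k$: the domain on which the input signal of layer $k$ is defined; $\Omega_0$ is the domain of the original input signal.

- $K$ scales: the number of layers of the network.

- $d_k$ clusters: the number of clusters produced by subsampling at layer $k$, which sets the spatial size of the next layer's input.

- $\mathcal{N}_{k,i}$: the $i$-th neighborhood at layer $k$, i.e. the receptive field of the local connection (see Figure 1).

- $f_k$: the number of filters at layer $k$, i.e. the number of features per node of the graph.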

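Core formula:

$$x_{k+1,j} = L_k\, h\Big(\sum_{i=1}^{f_{k-1}} F_{k,i,j}\, x_{k,i}\Big), \quad j = 1, \dots, f_k$$

Notation:

- $x_{k,i}$: the $i$-th feature of the layer-$k$ signal.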

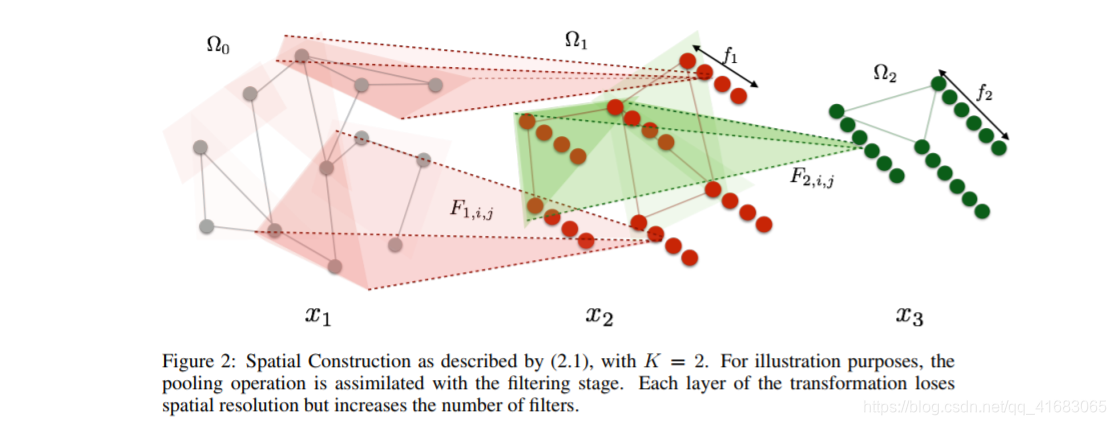

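- $F_{k,i,j}$: the filter at layer $k$ that connects input feature $i$ to output feature $j$. $F_{k,i,j}$ is a $d_{k-1} \times d_{k-1}$ sparse matrix with nonzero entries in the locations given by $\mathcal{N}_k$.

- $h$: the nonlinear activation function.

- $L_k$: the pooling operation at layer $k$. $L_k$ outputs the result of a pooling operation over each cluster in $\Omega_k$.

The term $\sum_{i=1}^{f_{k-1}} F_{k,i,j}\, x_{k,i}$ applies the filters $F_{k,i,j}$ to the signal $x_{k,i}$ and sums over the input features $i$, so the result depends only on $k$ (the current layer) and $j$ (the filter index). Passing it through the nonlinearity $h$ and the pooling (subsampling) $L_k$ gives the next layer's signal $x_{k+1,j}$.

Overall, the computation is similar to that of a CNN.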
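One layer of the spatial construction, i.e. filter, nonlinearity, then pooling, might look like the sketch below. It is an assumption-laden toy: $h$ is taken to be ReLU, $L_k$ is max pooling, the neighborhoods are the ring neighbors of each node, and the function name `spatial_layer` is made up for illustration.

```python
import numpy as np

def spatial_layer(x, F, clusters):
    """x: (d_prev, f_prev) input features; F: (f_prev, f_out, d_prev, d_prev) sparse filters;
    clusters: index lists partitioning the d_prev nodes. Returns (len(clusters), f_out)."""
    f_prev, f_out = F.shape[:2]
    out = np.empty((len(clusters), f_out))
    for j in range(f_out):
        z = sum(F[i, j] @ x[:, i] for i in range(f_prev))   # sum over input features i
        a = np.maximum(z, 0.0)                               # h = ReLU (assumption)
        out[:, j] = [a[c].max() for c in clusters]           # L_k = max pooling per cluster
    return out

rng = np.random.default_rng(0)
d_prev, f_prev, f_out = 6, 2, 3
idx = np.arange(d_prev)
mask = np.zeros((d_prev, d_prev), dtype=bool)
for s in (-1, 0, 1):                                         # hypothetical neighborhoods on a ring
    mask[idx, (idx + s) % d_prev] = True
F = rng.standard_normal((f_prev, f_out, d_prev, d_prev)) * mask
x = rng.standard_normal((d_prev, f_prev))
clusters = [[0, 1], [2, 3], [4, 5]]
x_next = spatial_layer(x, F, clusters)
print(x.shape, "->", x_next.shape)
```

The shape goes from `(6, 2)` to `(3, 3)`: fewer spatial points, more features per point.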

Output of each layer (see Figure 2):

Each layer of the transformation loses spatial resolution but increases the number of filters.

So each layer has two effects:

- loses spatial resolution: the number of spatial points decreases.

- increases the number of filters: the number of features per point increases.

Evaluation of the model:

Advantages:

it requires relatively weak regularity assumptions on the graph. Graphs having low intrinsic dimension have localized neighborhoods, even if no nice global embedding exists.

That is: it still applies to graphs with only weak regularity, including graphs of low intrinsic dimension that have no good global embedding (because such graphs still have localized neighborhoods).

Disadvantages:

no easy way to induce weight sharing across different locations of the graph.

That is: different locations of the graph need different filters, so weight sharing is not available.

A single CNN convolution slides one filter over the whole grid (i.e. stride convolutions), so every position of the data uses the same filter; this cannot be done in the spatial construction of GCN.

spectral construction: spectrum of the graph Laplacian

Principle

draws on the properties of convolutions in the Fourier domain.

In $\mathbb{R}^d$, convolutions are linear operators diagonalised by the Fourier basis $\exp(i\omega \cdot t)$, $\omega, t \in \mathbb{R}^d$.

One may then extend convolutions to general graphs by finding the corresponding “Fourier” basis.

This equivalence is given through the graph Laplacian, an operator which provides an harmonic analysis on the graphs.

That is: a convolution is a linear operator that is diagonalized by the Fourier basis.

Generalizing this idea means finding the “Fourier” basis of a graph, which is given by the eigenvectors of the graph Laplacian. The equivalence is obtained through the graph Laplacian.

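Two kinds of Laplacian

combinatorial Laplacian

$$L = D - W$$

graph Laplacian

$$\mathcal{L} = I - D^{-1/2} W D^{-1/2}$$

Notation:

- $D$: the degree matrix of the graph.

- $W$: the weight matrix.

- $I$: the identity matrix (a diagonal matrix of ones).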
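Both Laplacians are easy to compute from a weight matrix. The sketch below does so for a small path graph chosen purely for illustration, assuming $W$ is symmetric and every node has positive degree; the helper name `laplacians` is hypothetical.

```python
import numpy as np

def laplacians(W):
    """Return (combinatorial, normalized) Laplacians of a symmetric weight matrix W."""
    deg = W.sum(axis=1)
    L = np.diag(deg) - W                                   # L = D - W
    d_inv_sqrt = 1.0 / np.sqrt(deg)                        # assumes every degree is positive
    L_norm = np.eye(len(W)) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    return L, L_norm

# Path graph on 4 nodes: 0 - 1 - 2 - 3
W = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
L, L_norm = laplacians(W)
print(L)
print(np.round(L_norm, 3))
```

Both matrices are symmetric, so each has real eigenvalues and an orthonormal set of eigenvectors.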

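Core formula:

$$x_{k+1,j} = h\Big(V \sum_{i=1}^{f_{k-1}} F_{k,i,j}\, V^T x_{k,i}\Big), \quad j = 1, \dots, f_k$$

Notation:

- $x_{k,i}$: the $i$-th feature of the layer-$k$ signal.

- $F_{k,i,j}$: the filter at layer $k$ that connects input feature $i$ to output feature $j$. $F_{k,i,j}$ is a diagonal matrix.

- $h$: a real-valued nonlinearity.

- $V$: the eigenvectors of the graph Laplacian $L$, ordered by eigenvalue.

Note that at this point the features are not yet both shared across locations and well localized in the original domain; this is achieved with smooth spectral multipliers.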

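Explanation of the formula:

The spectral decomposition of the graph Laplacian (also called the eigendecomposition: $L$ is written as a product of the diagonal eigenvalue matrix $\Lambda$ and the eigenvector matrix $V$) is

$$L = V \Lambda V^T$$

Note: writing $V^{-1}$ as $V^T$ requires the eigenvectors to be orthonormal, which holds here because $L$ is symmetric.

The Fourier transform on the graph is

$$\hat{x} = V^T x$$

and the inverse Fourier transform is

$$x = V \hat{x}$$

The Fourier transform of the filter (convolution kernel) is $F_{k,i,j}$, i.e. $F_{k,i,j}$ is a filter defined directly on the spectrum.

The whole convolution rests on the convolution theorem; the convolution is simply computed by way of the frequency domain:

$$g * x = V\big((V^T g) \odot (V^T x)\big) = V\, \mathrm{diag}(\hat{g})\, V^T x$$

where $g$ is the filter in the original domain, $\hat{g} = V^T g$, and $\odot$ is the elementwise product, so $F_{k,i,j}$ plays the role of $\mathrm{diag}(\hat{g})$.

In summary: $V^T x_{k,i}$ takes the Fourier transform of the signal. $F_{k,i,j} V^T x_{k,i}$ multiplies the signal by the filter in the frequency domain, which corresponds to a convolution in the original domain, and the leading $V$ in $V F_{k,i,j} V^T x_{k,i}$ transforms the result back, completing the convolution.

Note that $V\sum_{i=1}^{f_{k-1}} F_{k,i,j}V^T$ is a linear operator; the nonlinearity is introduced by $h$.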
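The spectral layer can be sketched in a few lines of NumPy. This is a toy under stated assumptions: the graph is an 8-node ring, the combinatorial Laplacian is used, $h$ is ReLU, and each diagonal filter is stored as a length-`n` vector; `spectral_layer` is a hypothetical name, not the paper's code.

```python
import numpy as np

def spectral_layer(x, V, F):
    """x: (n, f_prev) signals; V: (n, n) Laplacian eigenvectors as columns;
    F: (f_prev, f_out, n) diagonals of the spectral filters. Returns (n, f_out)."""
    x_hat = V.T @ x                               # graph Fourier transform of each feature map
    y_hat = np.einsum('ijn,ni->nj', F, x_hat)     # pointwise multiply, then sum over inputs i
    return np.maximum(V @ y_hat, 0.0)             # inverse transform, then h = ReLU (assumption)

n = 8
idx = np.arange(n)
W = np.zeros((n, n))
W[idx, (idx + 1) % n] = 1.0                       # ring graph (illustrative choice)
W[(idx + 1) % n, idx] = 1.0
L = np.diag(W.sum(axis=1)) - W
lam, V = np.linalg.eigh(L)                        # eigenvalues ascending, orthonormal eigenvectors

rng = np.random.default_rng(0)
x = rng.standard_normal((n, 2))                   # 2 input feature maps
F = rng.standard_normal((2, 3, n))                # 3 output feature maps
y = spectral_layer(x, V, F)
print(y.shape)
```

Each filter costs `n` numbers here, compared with `n * n` for a dense linear map on the same signal.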

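In addition, other papers write the same operation as

$$g_\theta \star x = U\, g_\theta(\Lambda)\, U^T x$$

where $U$ corresponds to $V$ and $g_\theta(\Lambda)$ to the diagonal filter $F_{k,i,j}$.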

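smooth spectral:

Often, only the first $d$ eigenvectors of the Laplacian are useful in practice, which carry the smooth geometry of the graph. The cutoff frequency $d$ depends upon the intrinsic regularity of the graph and also the sample size. In that case, we can replace in (5) $V$ by $V_d$, obtained by keeping the first $d$ columns of $V$.

From a signal-processing point of view, the high-frequency components of a signal carry its fine detail; removing them smooths the signal.

Smoothing is therefore implemented by keeping only the first $d$ eigenvectors in the eigenvector matrix $V$, which works because the eigenvectors are ordered by eigenvalue.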
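Keeping the first $d$ eigenvectors amounts to a low-pass projection. The sketch below illustrates this on a 16-node path graph with a noisy ramp signal; both are assumptions made for the example, and the roughness measure $s^T L s$ is used only to check that the projected signal is smoother.

```python
import numpy as np

def lowpass(x, V, d):
    """Project x onto the span of the first d eigenvectors (lowest frequencies)."""
    V_d = V[:, :d]
    return V_d @ (V_d.T @ x)

n = 16
idx = np.arange(n - 1)
W = np.zeros((n, n))
W[idx, idx + 1] = 1.0                      # path graph (illustrative choice)
W[idx + 1, idx] = 1.0
L = np.diag(W.sum(axis=1)) - W
lam, V = np.linalg.eigh(L)                 # columns ordered by ascending eigenvalue

rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, n) + 0.3 * rng.standard_normal(n)   # smooth trend plus noise
x_smooth = lowpass(x, V, d=4)

def roughness(s):
    """Quadratic form s^T L s: sum of squared differences across edges."""
    return float(s @ L @ s)

print(roughness(x), roughness(x_smooth))
```

Because every discarded component has a nonnegative eigenvalue, the projection can only lower $s^T L s$.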

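Benefits and drawbacks of diagonal operators in the frequency domain:

Benefits:

The number of parameters of a filter drops from $O(n^2)$ to $O(n)$.

Drawbacks

most graphs have meaningful eigenvectors only for the very top of the spectrum.

Even when the individual high frequency eigenvectors are not meaningful, a cohort of high frequency eigenvectors may contain meaningful information.

However this construction may not be able to access this information because it is nearly diagonal at the highest frequencies.

Put simply, for most graphs only the eigenvectors at the very top of the spectrum are meaningful, although a group of high-frequency eigenvectors taken together may still carry information.

A diagonal operator, however, acts on each eigenvector separately, so it cannot extract the information carried by a cohort of high frequency eigenvectors.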

Implementing translation invariance:

linear operators in the Fourier basis ==> translation invariant ==> “classic” convolutions
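On a regular grid this can be checked numerically. The sketch below uses a ring graph as a stand-in for a 1-D grid and a hypothetical multiplier $e^{-\lambda}$ that depends only on the eigenvalue. That restriction is an assumption that matters: the ring Laplacian has repeated eigenvalues, and giving different multipliers to eigenvectors that share an eigenvalue would generally break the property.

```python
import numpy as np

n = 8
idx = np.arange(n)
W = np.zeros((n, n))
W[idx, (idx + 1) % n] = 1.0                # ring graph: a 1-D grid with wrap-around
W[(idx + 1) % n, idx] = 1.0
L = np.diag(W.sum(axis=1)) - W
lam, V = np.linalg.eigh(L)

multipliers = np.exp(-lam)                 # hypothetical filter, a function of the eigenvalue
C = V @ np.diag(multipliers) @ V.T         # linear operator that is diagonal in the Fourier basis

x = np.random.default_rng(0).standard_normal(n)
shifted_then_filtered = C @ np.roll(x, 1)
filtered_then_shifted = np.roll(C @ x, 1)
print(np.allclose(shifted_then_filtered, filtered_then_shifted))
```

The operator `C` is a circulant matrix, i.e. an ordinary circular convolution on the ring.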

Implementing spatial subsampling:

spatial subsampling can also be obtained via dropping the last part of the spectrum of the Laplacian, leading to max-pooling, and ultimately to deep convolutional networks.

Implementing local connectivity and sharing across locations:

in order to learn a layer in which features will be not only shared across locations but also well localized in the original domain, one can learn spectral multipliers which are smooth.

Smoothness can be prescribed by learning only a subsampled set of frequency multipliers and using an interpolation kernel to obtain the rest, such as cubic splines.

That is: for the features of each layer to be shared across locations and locally connected, the spectral multipliers should be smooth, which is achieved by learning only a subsampled set of frequency multipliers and interpolating the rest.
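The idea of learning a few multipliers and interpolating the rest can be sketched as below. Note the substitution: the paper mentions cubic splines, while this example uses plain linear interpolation via `np.interp` to stay short. The values in `theta` and the helper `interpolate_multipliers` are hypothetical.

```python
import numpy as np

def interpolate_multipliers(theta, n):
    """Expand q learned multipliers to n frequencies by linear interpolation."""
    q = len(theta)
    knots = np.linspace(0, n - 1, q)               # positions of the learned values on the spectrum
    return np.interp(np.arange(n), knots, theta)

n, q = 32, 5
theta = np.array([1.0, 0.8, 0.3, 0.1, 0.0])        # hypothetical learned values
multipliers = interpolate_multipliers(theta, n)    # diagonal of a smooth spectral filter
print(q, "learned parameters expand to", len(multipliers), "multipliers")
```

Only `q` numbers are learned per filter, and the resulting diagonal varies smoothly along the spectrum.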

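This completes the description of convolution and pooling in the spectral construction of GCN, together with local connectivity and sharing across locations, which is everything needed to build deep convolutional networks.

experiments

To be added.

Source: CSDN

Author: qq_41683065

Link: https://blog.csdn.net/qq_41683065/article/details/104117593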