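# The idea behind kNN: look at which classes the few nearest samples (tuples) belong to, and assign the new sample to the most common of those classes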
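The post relies on scikit-learn for the voting step. To make the idea concrete, here is a minimal from-scratch sketch of the majority vote. It assumes Euclidean distance and unweighted voting. The function name `knn_predict` and the toy data are hypothetical and are not part of the original post.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, x, k=5):
    """Hypothetical helper: predict the class of x by majority vote among its k nearest training samples."""
    # Euclidean distance from x to every training sample, paired with that sample's label
    dists = [(math.dist(p, x), label) for p, label in zip(train_X, train_y)]
    # Keep only the k closest samples
    nearest = sorted(dists, key=lambda d: d[0])[:k]
    # Majority vote; Counter.most_common orders equal counts by first occurrence,
    # so a tie goes to the class whose member appears nearest
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical toy data: two well-separated groups in 2-D
train_X = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (5.0, 5.0), (5.1, 4.9), (4.8, 5.2)]
train_y = ["a", "a", "a", "b", "b", "b"]

print(knn_predict(train_X, train_y, (0.3, 0.3), k=3))  # a
print(knn_predict(train_X, train_y, (4.9, 5.0), k=3))  # b
```

`KNeighborsClassifier` performs the same vote. It also supports faster neighbour search structures and distance weighting.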

from sklearn import datasets

from sklearn.model_selection import train_test_split

from sklearn.neighbors import KNeighborsClassifier

from sklearn.model_selection import cross_val_score

import matplotlib.pyplot as plt

iris = datasets.load_iris()

iris_X = iris.data

iris_y = iris.target
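For reference, the Iris data loaded above contains 150 samples. Each sample has 4 numeric features, and there are 3 classes with 50 samples each. That balance is why stratified cross-validation folds come out evenly sized. The short check below only inspects the dataset and assumes nothing beyond `load_iris`.

```python
import numpy as np
from sklearn import datasets

iris = datasets.load_iris()
iris_X = iris.data      # feature matrix, one row per flower
iris_y = iris.target    # integer class labels 0, 1, 2

print(iris_X.shape)                # (150, 4)
print(np.bincount(iris_y))         # [50 50 50]
print(iris.target_names.tolist())  # ['setosa', 'versicolor', 'virginica']
```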

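# Method 1: a single random train/test split

# train_d, test_d, train_tar, test_tar = train_test_split(iris_X, iris_y, test_size=0.2, random_state=5)

# # Randomly split the data into a training set and a test set (20% held out for testing)

# knn = KNeighborsClassifier(n_neighbors=5)

# # Prediction looks at the 5 nearest samples and the proportion of each class among them

# knn.fit(train_d, train_tar)

# # Evaluate the trained model on the test set; for a classifier, score() returns mean accuracy, not R^2

# print('accuracy:', knn.score(test_d, test_tar))

# print(knn.predict_proba(test_d))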
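The commented-out code above says the classifier looks at the 5 nearest samples and the share of each class among them. With the default `weights='uniform'`, `predict_proba` returns exactly those shares, so every probability is a multiple of 1/5. The sketch below reuses the same split (`test_size=0.2`, `random_state=5`). It recomputes the shares by hand from `kneighbors` and compares them with `predict_proba`.

```python
import numpy as np
from sklearn import datasets
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

iris = datasets.load_iris()
train_d, test_d, train_tar, test_tar = train_test_split(
    iris.data, iris.target, test_size=0.2, random_state=5)

knn = KNeighborsClassifier(n_neighbors=5)  # default weights='uniform'
knn.fit(train_d, train_tar)

proba = knn.predict_proba(test_d)

# Indices of the 5 nearest training samples for each test sample
_, idx = knn.kneighbors(test_d)
neighbour_labels = train_tar[idx]  # shape (n_test, 5)
# Fraction of neighbours belonging to each class, one column per class
manual = np.stack([(neighbour_labels == c).mean(axis=1) for c in knn.classes_], axis=1)

print(np.allclose(proba, manual))   # True
print(knn.score(test_d, test_tar))  # mean accuracy on the test set
```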

# Method 2: k-fold cross-validation

knn = KNeighborsClassifier(n_neighbors=5)

scores = cross_val_score(knn, iris_X, iris_y, cv=5, scoring='accuracy')

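# Common scoring values: 'r2' for regression, 'accuracy' for classification

# Split the data into 5 folds; each fold is used once as the test set while the other 4 train the model, giving 5 scores (here, accuracy)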

print(scores)
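You can reproduce what `cross_val_score` does here by hand. For a classifier with an integer `cv`, scikit-learn uses `StratifiedKFold` without shuffling. On each split it fits a fresh copy of the model on 4 folds and scores accuracy on the held-out fold. The loop below is a sketch of that procedure, and its scores should match the library's.

```python
import numpy as np
from sklearn import datasets
from sklearn.base import clone
from sklearn.metrics import accuracy_score
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

iris = datasets.load_iris()
X, y = iris.data, iris.target
knn = KNeighborsClassifier(n_neighbors=5)

# Library version
lib_scores = cross_val_score(knn, X, y, cv=5, scoring='accuracy')

# Manual version: 5 stratified folds, no shuffling (what cv=5 means for a classifier)
manual_scores = []
for train_idx, test_idx in StratifiedKFold(n_splits=5).split(X, y):
    model = clone(knn).fit(X[train_idx], y[train_idx])  # fresh, unfitted copy per fold
    manual_scores.append(accuracy_score(y[test_idx], model.predict(X[test_idx])))

print(np.allclose(lib_scores, manual_scores))  # True
print(np.mean(manual_scores))                 # average accuracy over the 5 folds
```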

# Choosing the parameter k

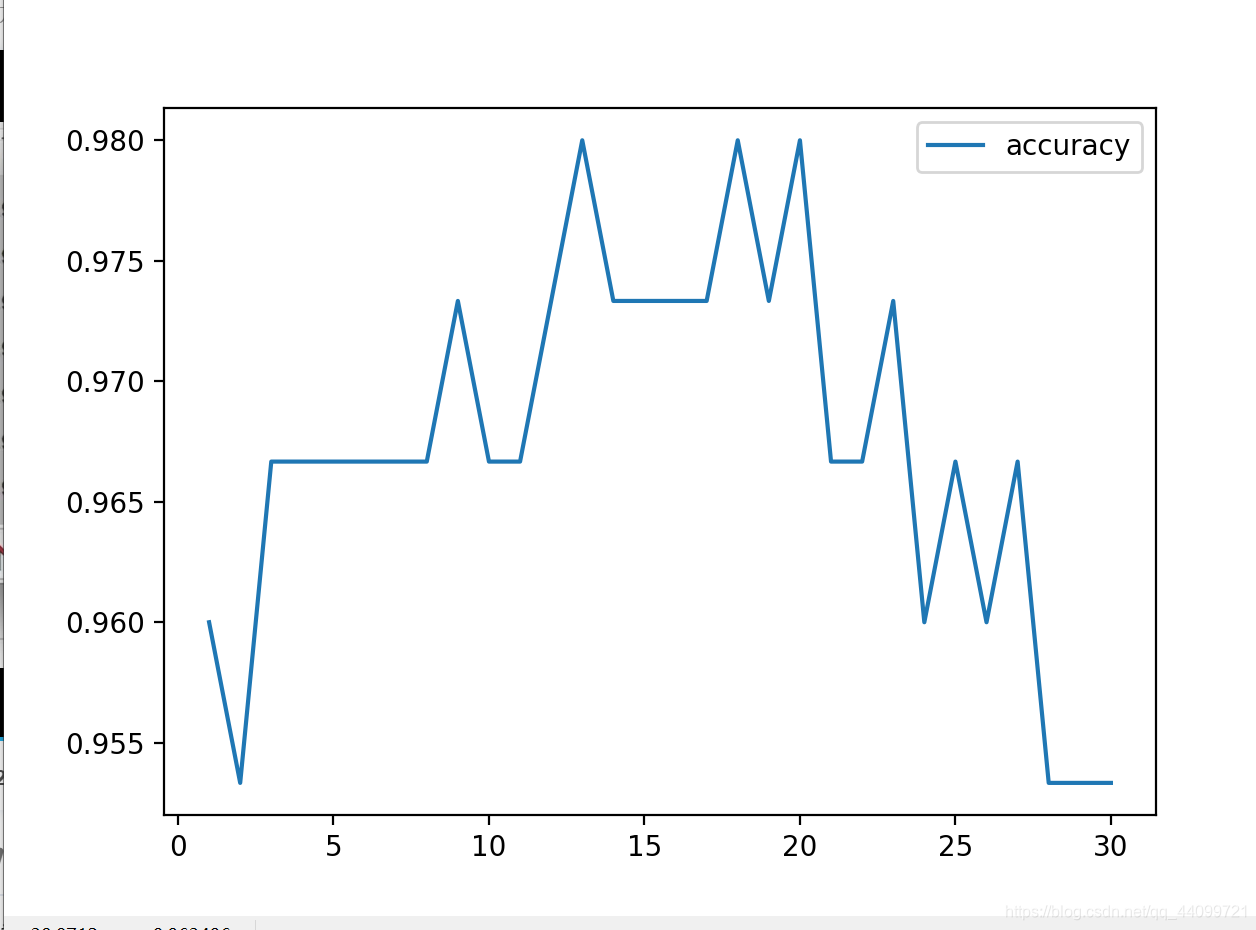

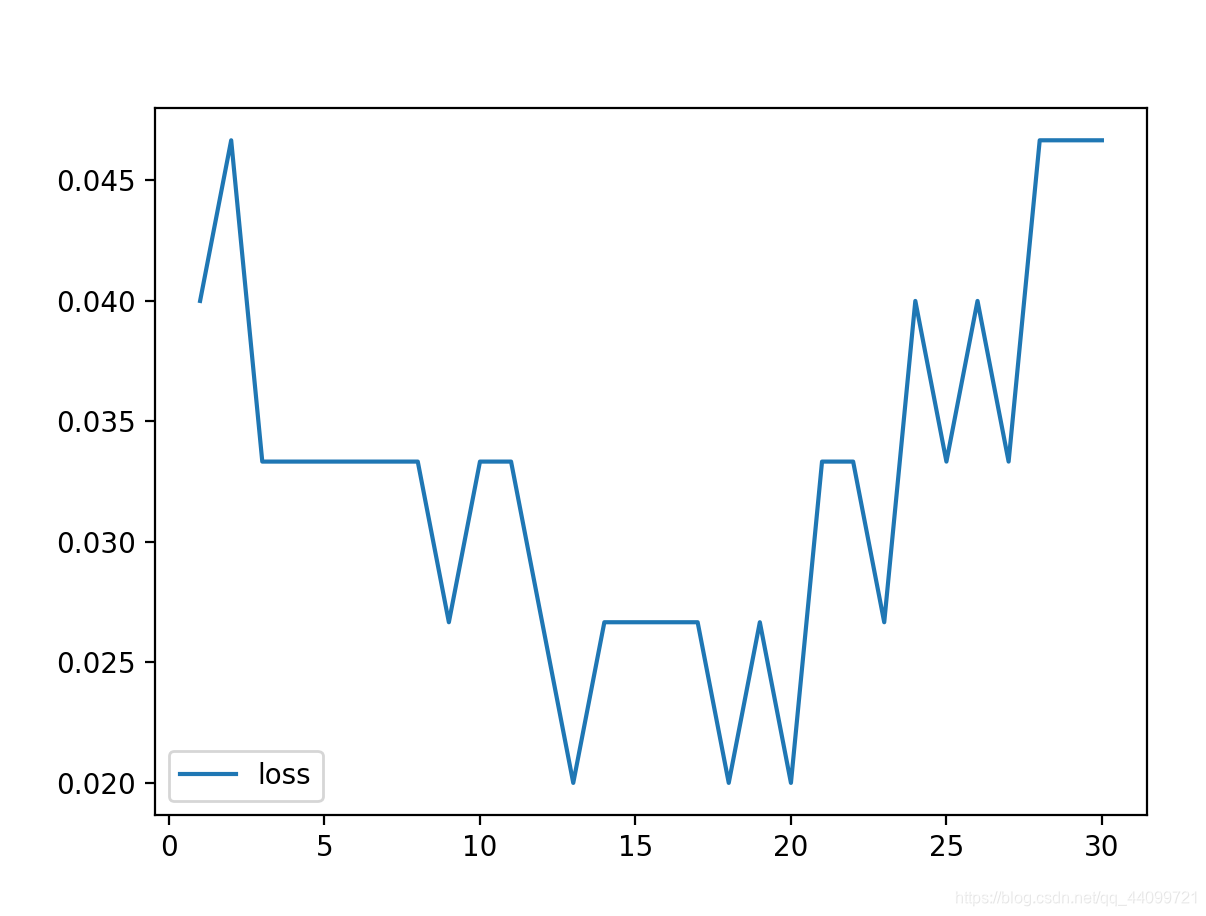

k_scores = []

k_loss = []

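for k in range(1, 31):

    knn = KNeighborsClassifier(n_neighbors=k)

    scores = cross_val_score(knn, iris_X, iris_y, cv=10, scoring='accuracy')

    # MSE is a regression metric; here it is computed on the integer class labels as a rough loss.
    # sklearn scorers follow "higher is better", so MSE comes back negated and the minus sign flips it.

    loss = -cross_val_score(knn, iris_X, iris_y, cv=10, scoring='neg_mean_squared_error')

    k_scores.append(scores.mean())

    k_loss.append(loss.mean())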
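The `neg_mean_squared_error` scorer follows scikit-learn's convention that a larger score is better. It therefore returns the mean squared error with its sign flipped, which is why the loop negates it. On a classifier, the error is computed on the integer labels (0, 1, 2). Mistaking class 0 for class 2 therefore costs 4, while confusing two adjacent labels costs 1. The sketch below checks the sign convention for one value of k (k=5, chosen only for illustration) against a manual per-fold MSE.

```python
import numpy as np
from sklearn import datasets
from sklearn.base import clone
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

iris = datasets.load_iris()
X, y = iris.data, iris.target
knn = KNeighborsClassifier(n_neighbors=5)

# The scorer returns -MSE per fold, so negate it to get the loss
loss = -cross_val_score(knn, X, y, cv=10, scoring='neg_mean_squared_error')

# Same quantity by hand: MSE between true and predicted integer labels on each held-out fold
manual = []
for train_idx, test_idx in StratifiedKFold(n_splits=10).split(X, y):
    model = clone(knn).fit(X[train_idx], y[train_idx])
    manual.append(mean_squared_error(y[test_idx], model.predict(X[test_idx])))

print(np.allclose(loss, manual))  # True
print((loss >= 0).all())          # True: after negation the loss is non-negative
```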

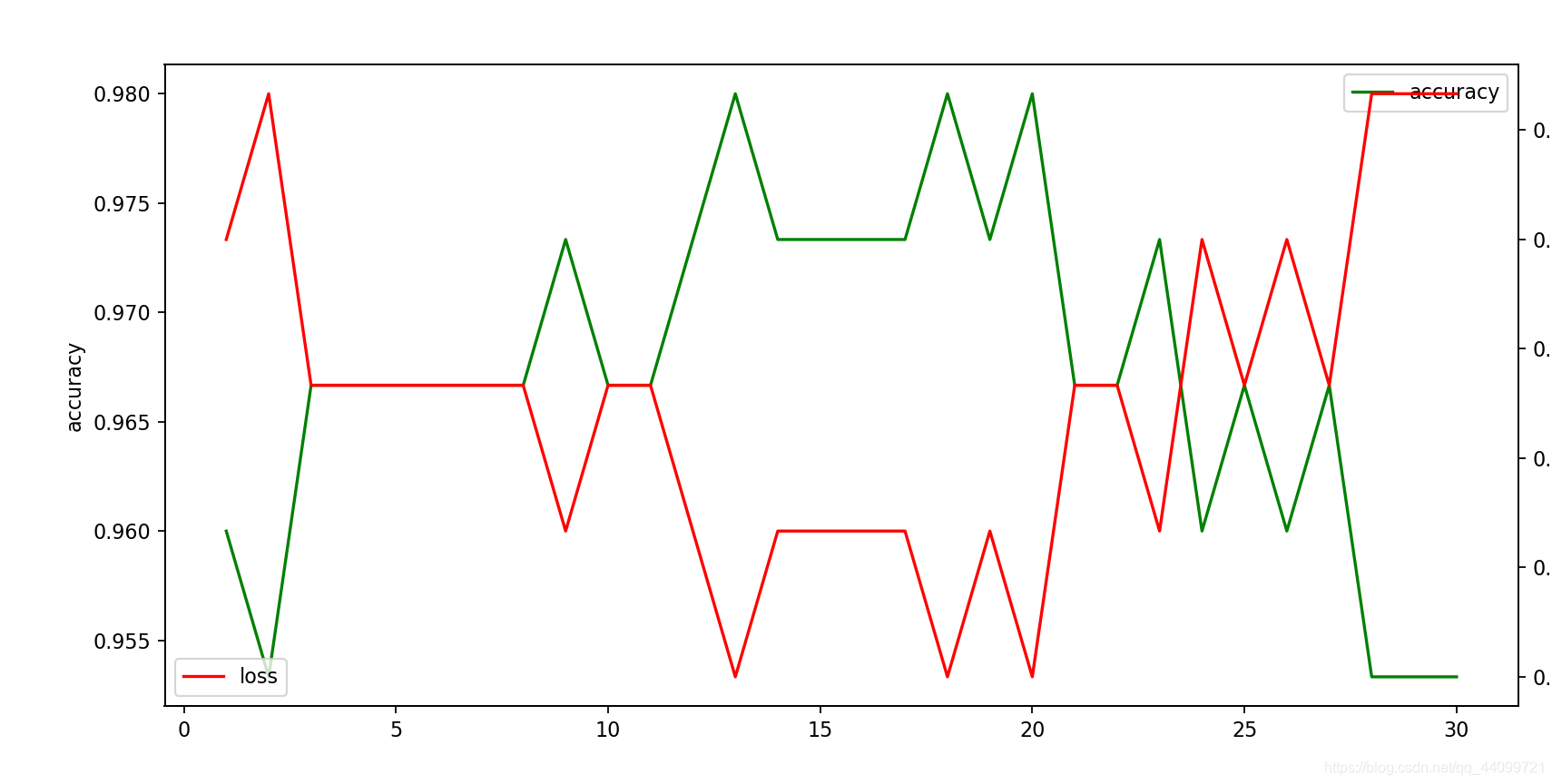

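fig = plt.figure(figsize=(10, 5), dpi=80)  # figsize is in inches

ax1 = fig.add_subplot(111)

ax1.plot(list(range(1, 31)), k_scores, label='accuracy', color='green')

ax2 = ax1.twinx()  # second Y axis sharing the same x axis

ax2.plot(list(range(1, 31)), k_loss, label='loss', color='red')

ax1.legend(loc='upper left')  # separate positions so the two legends do not overlap

ax2.legend(loc='upper right')

ax1.set_xlabel('k (n_neighbors)')

ax1.set_ylabel('accuracy')  # label of the left Y axis

ax2.set_ylabel('loss')  # label of the right Y axis

plt.show()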
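The two curves live on separate axes, so each `legend()` call draws its own independent legend. One common alternative is to collect the line handles from both axes and draw a single legend. The sketch below fills `k_scores` and `k_loss` with dummy placeholder values (not real results) so it can run on its own. It also selects the non-interactive Agg backend in place of `plt.show()`.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the sketch runs without a display
import matplotlib.pyplot as plt
import numpy as np

ks = list(range(1, 31))
# Dummy placeholder curves, NOT real cross-validation results
k_scores = np.linspace(0.90, 0.97, len(ks))
k_loss = np.linspace(0.10, 0.03, len(ks))

fig, ax1 = plt.subplots(figsize=(10, 5))
line1, = ax1.plot(ks, k_scores, color='green', label='accuracy')
ax1.set_xlabel('k (n_neighbors)')
ax1.set_ylabel('accuracy')  # left Y axis

ax2 = ax1.twinx()           # second Y axis sharing the same x axis
line2, = ax2.plot(ks, k_loss, color='red', label='loss')
ax2.set_ylabel('loss')      # right Y axis

# One legend listing the lines from both axes; drawn on ax2 so it sits above both curves
legend = ax2.legend(handles=[line1, line2], loc='center right')
# In an interactive session, plt.show() would display the figure
```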

# From the plot, k = 13 appears to give the best result
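You can also pick k programmatically instead of reading it off the plot. The sketch below compares two options. The first repeats the loop above and takes `np.argmax` over the mean accuracies. The second uses `GridSearchCV`, which runs the same 10-fold cross-validated accuracy for every k from 1 to 30. Both should reach the same best mean accuracy. The sketch does not assert a specific best k, because several values of k can tie.

```python
import numpy as np
from sklearn import datasets
from sklearn.model_selection import GridSearchCV, cross_val_score
from sklearn.neighbors import KNeighborsClassifier

iris = datasets.load_iris()
X, y = iris.data, iris.target
k_range = list(range(1, 31))

# Option 1: the same loop as above, then take the k with the highest mean accuracy
k_scores = [cross_val_score(KNeighborsClassifier(n_neighbors=k), X, y,
                            cv=10, scoring='accuracy').mean() for k in k_range]
best_k_loop = k_range[int(np.argmax(k_scores))]  # argmax returns the first (smallest) k among ties

# Option 2: let GridSearchCV run the search over n_neighbors
search = GridSearchCV(KNeighborsClassifier(), {'n_neighbors': k_range},
                      cv=10, scoring='accuracy')
search.fit(X, y)

print(best_k_loop, max(k_scores))
print(search.best_params_['n_neighbors'], search.best_score_)
```

By default, `GridSearchCV` also refits the best model on the full dataset and exposes it as `search.best_estimator_`.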

Source: CSDN

Author: 小宅520

Link: https://blog.csdn.net/qq_44099721/article/details/104045043