Datawhale Data Competition, Day 02: Data Cleaning

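Data cleaning mainly means removing irrelevant and duplicate data from the raw dataset, smoothing noisy data, filtering out data unrelated to the mining objective, and handling missing values, outliers, and similar problems.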

In this step, the training set and the test set can be processed together.

```python
import pandas as pd

# Read the data
data_train = pd.read_csv('train_data.csv')
data_test = pd.read_csv('test_a.csv')
# print(data_train)
# print(data_test)

# Tag training and test rows (same column name in both) so they can be processed together
data_train['Type'] = 'Train'
data_test['Type'] = 'Test'

# Combine the two datasets
data_all = pd.concat([data_train, data_test], ignore_index=True)
print(data_all)
```
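Because every row carries the Type tag, the combined frame can be split back into training and test parts once the shared processing is done. A minimal sketch on made-up toy frames standing in for the two CSV files:

```python
import pandas as pd

# Toy stand-ins for train_data.csv and test_a.csv (made-up values)
train = pd.DataFrame({'area': [50.0, 80.0], 'tradeMoney': [3000, 5000]})
test = pd.DataFrame({'area': [60.0]})  # the test set has no tradeMoney target

# Tag each row with its origin, then stack the two frames
train['Type'] = 'Train'
test['Type'] = 'Test'
data_all = pd.concat([train, test], ignore_index=True)

# ... shared cleaning and encoding steps would run on data_all here ...

# Split back by the tag and drop the helper column; the test part also drops
# tradeMoney, which concat filled with NaN (and upcast to float) for test rows
train_back = data_all[data_all['Type'] == 'Train'].drop(columns='Type')
test_back = data_all[data_all['Type'] == 'Test'].drop(columns=['Type', 'tradeMoney'])
print(train_back)
print(test_back)
```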

Missing-value analysis and handling

1. Why values are missing

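A dataset can have missing values for many reasons, for example because some observations were not recorded during a survey. Knowing exactly what is missing is essential for deciding how to handle those values next.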

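- According to the Day 1 EDA, uv and pv each have 18 missing records. Both describe tenants: pv is the number of page views by tenants in the plate during that month, and uv is the total number of tenants who browsed listings in that plate during that month. These values are most likely missing for external reasons, such as browsing records having been deleted.

- rentType contains a "--" category, houseToward contains "暂无数据" (no data), and buildYear contains the option "暂无信息" (no information). Both "--" and "暂无信息" can be regarded as missing values and treated as a separate "other" category.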
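Counts such as the 18 missing pv/uv records, and placeholder strings such as "--" or "暂无信息", can both be found with a few pandas calls. A minimal sketch on a hypothetical toy frame; the placeholder list is an assumption built from the values mentioned above:

```python
import numpy as np
import pandas as pd

# Hypothetical toy frame mimicking the columns discussed above
df = pd.DataFrame({
    'pv': [120.0, np.nan, 95.0],
    'uv': [30.0, np.nan, 22.0],
    'rentType': ['整租', '--', '合租'],
    'buildYear': ['1994', '暂无信息', '2005'],
})

# Real NaN values per column
nan_counts = df.isnull().sum()

# Placeholder strings that also mean "missing" in this dataset (assumed list)
placeholders = ['--', '暂无信息', '暂无数据']
placeholder_counts = df.isin(placeholders).sum()

print(nan_counts)
print(placeholder_counts)
```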

2. Filling missing values in a suitable way

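Methods for handling missing values fall into three categories: deleting the records, imputing the values, and leaving them untouched.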
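The three strategies can be compared on a toy column. A minimal sketch; mean imputation is only one possible imputation (median or mode work the same way):

```python
import numpy as np
import pandas as pd

s = pd.Series([10.0, np.nan, 30.0, np.nan, 20.0], name='uv')

# 1) Delete records: drop the entries whose value is missing
deleted = s.dropna()

# 2) Impute: replace missing entries with a statistic of the observed values (here the mean)
imputed = s.fillna(s.mean())

# 3) Leave as is: keep NaN for a downstream model that can handle missing values itself
untouched = s.copy()

print(deleted.tolist())              # [10.0, 30.0, 20.0]
print(imputed.tolist())              # [10.0, 20.0, 30.0, 20.0, 20.0]
print(int(untouched.isnull().sum())) # 2
```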

- Fill missing pv and uv values with the mean

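```python
# Fill missing pv and uv values with each column's own mean, then cast back to int
data_all['uv'] = data_all['uv'].fillna(data_all['uv'].mean())
data_all['pv'] = data_all['pv'].fillna(data_all['pv'].mean())
data_all['uv'] = data_all['uv'].astype('int')
data_all['pv'] = data_all['pv'].astype('int')
```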

- Treat the "--" in rentType as an unknown rent type ("未知方式")

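```python
# Treat rentType "--" as an unknown rent type ("未知方式").
# Select rows and column in a single .loc call. Row-first chained indexing such as
# data_all[data_all['rentType'] == '--']['rentType'] = "未知方式" assigns to a copy
# and leaves data_all unchanged.
data_all.loc[data_all['rentType'] == '--', 'rentType'] = '未知方式'
```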
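Why the row-first form fails: selecting rows with a boolean mask builds a new DataFrame, so assigning into it never reaches the original. The column-first chained form happened to work in older pandas, but it also stops working under Copy-on-Write, which pandas 3.0 makes the default, so a single .loc call is the safe choice. A minimal demonstration on a toy frame:

```python
import warnings

import pandas as pd

df = pd.DataFrame({'rentType': ['整租', '--', '合租', '--']})

# Row-first chained indexing: df[mask] is a temporary copy, so the assignment is lost.
# pandas warns about this (SettingWithCopyWarning, or ChainedAssignmentError under
# Copy-on-Write); the warning is silenced here only to keep the demo quiet.
with warnings.catch_warnings():
    warnings.simplefilter('ignore')
    df[df['rentType'] == '--']['rentType'] = '未知方式'
after_chained = df['rentType'].tolist()  # still contains '--'

# A single .loc call with the row mask and the column label updates df itself
df.loc[df['rentType'] == '--', 'rentType'] = '未知方式'
after_loc = df['rentType'].tolist()

print(after_chained)
print(after_loc)
```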

- Fill the "暂无信息" entries in buildYear with the mode

```python
# Fill buildYear's "暂无信息" (no information) with the mode of the known years.
# When indexing by row position, prefer iloc; when indexing by label, use loc;
# avoid ix (it was removed in pandas 1.0).
unknown_year = data_all['buildYear'] == '暂无信息'
build_year_mode = data_all.loc[~unknown_year, 'buildYear'].mode().iloc[0]
data_all.loc[unknown_year, 'buildYear'] = build_year_mode
data_all['buildYear'] = data_all['buildYear'].astype('int')
```
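The advice to use iloc for positions and loc for labels matters as soon as the index stops being 0..n-1, for example after rows have been dropped during cleaning. A small demonstration on a toy frame:

```python
import pandas as pd

df = pd.DataFrame({'buildYear': [1994, 2005, 2010, 1988]})

# After dropping a row the labels are 0, 2, 3, no longer equal to positions 0, 1, 2
df = df.drop(index=1)

by_position = df.iloc[1, 0]        # second row by position
by_label = df.loc[2, 'buildYear']  # row whose index label is 2
print(by_position, by_label)       # both are 2010
# df.loc[1, 'buildYear'] would raise KeyError, because label 1 no longer exists

# reset_index(drop=True) makes labels match positions again
df_reset = df.reset_index(drop=True)
print(df_reset.loc[1, 'buildYear'])  # 2010
```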

3. Basic data processing

- Convert object-type columns to integer codes

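```python
from sklearn.preprocessing import LabelEncoder

# Convert object (string) columns to integer codes
columns = ['rentType', 'communityName', 'houseType', 'houseFloor', 'houseToward',
           'houseDecoration', 'region', 'plate']
for col in columns:
    data_all[col] = LabelEncoder().fit_transform(data_all[col])
```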
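Encoding the combined frame rather than train and test separately keeps the codes consistent: the same category gets the same integer in both parts. The sketch below shows the idea with pandas' own `pd.factorize`, swapped in for LabelEncoder so the example needs only pandas; with `sort=True` the codes follow the sorted order of the unique values, which is also how LabelEncoder orders its classes.

```python
import pandas as pd

train = pd.DataFrame({'rentType': ['整租', '合租', '整租']})
test = pd.DataFrame({'rentType': ['未知方式', '合租']})

# Encode the combined column so train and test share one mapping
combined = pd.concat([train['rentType'], test['rentType']], ignore_index=True)
codes, uniques = pd.factorize(combined, sort=True)

mapping = dict(zip(uniques, range(len(uniques))))
train_codes = codes[:len(train)]
test_codes = codes[len(train):]
print(mapping)
print(train_codes, test_codes)
```

Encoding the test column on its own would map 合租 to 0 and 未知方式 to 1, so 未知方式 would collide with the training set's code for 整租.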

- Processing the time field

```python
# Split the transaction date (tradeTime, in year/month/day form) into month and day
def month(x):
    return int(x.split('/')[1])

def day(x):
    return int(x.split('/')[2])

data_all['month'] = data_all['tradeTime'].apply(month)
data_all['day'] = data_all['tradeTime'].apply(day)
```
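The same split can also be done by parsing the strings as dates instead of slicing them, which additionally rejects malformed values. A sketch assuming tradeTime strings in year/month/day form, as the split('/') code implies; the sample dates are made up:

```python
import pandas as pd

df = pd.DataFrame({'tradeTime': ['2018/11/28', '2018/12/16', '2018/1/5']})

# Parse once with an explicit format; an invalid string raises instead of being mis-split
trade_time = pd.to_datetime(df['tradeTime'], format='%Y/%m/%d')
df['month'] = trade_time.dt.month
df['day'] = trade_time.dt.day
print(df)
```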

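- Drop irrelevant fields
  - city is always SH, so it can be dropped
  - tradeTime has already been split, so the original column is no longer needed
  - ID only identifies each record and carries no useful information, so it can be dropped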

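```python
# Drop some features
# city is always SH, so it can be dropped
data_all.drop('city', axis=1, inplace=True)
# tradeTime has already been split into month and day; the original column is no longer needed
data_all.drop('tradeTime', axis=1, inplace=True)
# ID only identifies each record, so it can be dropped
data_all.drop('ID', axis=1, inplace=True)
```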
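Whether a column such as city or ID carries information can be screened automatically: a column with a single distinct value is constant, and an ID-like column has as many distinct values as rows. A minimal sketch on a toy frame with made-up values; note that a continuous feature can also be all-distinct, so the second check is only a hint to inspect the column, not proof that it is useless:

```python
import pandas as pd

df = pd.DataFrame({
    'ID': [100301, 100302, 100303],
    'city': ['SH', 'SH', 'SH'],
    'area': [50.0, 80.0, 50.0],
})

n_unique = df.nunique()
constant_cols = n_unique[n_unique == 1].index.tolist()        # e.g. city
id_like_cols = n_unique[n_unique == len(df)].index.tolist()   # distinct per row, e.g. ID
print(constant_cols, id_like_cols)
```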

Outlier analysis and handling

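Outlier analysis checks whether the data contain entry errors or implausible values. An abnormal value is an individual value in a sample that deviates markedly from the rest of the observations; such values are also called outliers.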
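A common rule of thumb for spotting outliers, and the one a box plot's whiskers visualize by default, is the interquartile-range (IQR) rule: values below Q1 - 1.5 * IQR or above Q3 + 1.5 * IQR are flagged. This is a different technique from the IsolationForest used further on; it is shown here only as a simple baseline on made-up rents:

```python
import pandas as pd

trade_money = pd.Series([2500, 3000, 3200, 3500, 4000, 4200, 50000], name='tradeMoney')

q1 = trade_money.quantile(0.25)
q3 = trade_money.quantile(0.75)
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr

# Flag values outside the whiskers
is_outlier = (trade_money < lower) | (trade_money > upper)
print(lower, upper)                       # 1600.0 5600.0
print(trade_money[is_outlier].tolist())   # [50000]
```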

1. Adjust the distribution of the training data according to the distribution of the test data

2. Find outliers with suitable methods

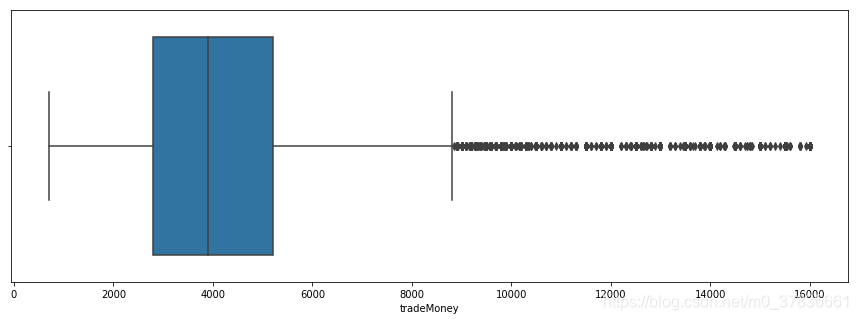

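Here we mainly work on two dimensions, area and tradeMoney. Outliers in tradeMoney are detected automatically with an IsolationForest model, while the thresholds for area and totalFloor were set by subjective judgment aided by data visualization.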

3. Handle the outliers

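```python
from sklearn.ensemble import IsolationForest

# Outlier analysis: let IsolationForest flag roughly the most anomalous 1% of
# tradeMoney values (contamination=0.01) and drop those rows
def IF_drop(train):
    IForest = IsolationForest(contamination=0.01)
    IForest.fit(train["tradeMoney"].values.reshape(-1, 1))
    # predict returns -1 for outliers and 1 for inliers
    y_pred = IForest.predict(train["tradeMoney"].values.reshape(-1, 1))
    drop_index = train.loc[y_pred == -1].index
    print(drop_index)
    train.drop(drop_index, inplace=True)
    return train

data_train = IF_drop(data_train)
```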

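```python
# Handle outliers with hand-picked thresholds
def dropData(train):
    # Drop some outliers: keep area <= 200, 700 <= tradeMoney <= 16000, totalFloor != 0
    train = train[train.area <= 200]
    train = train[(train.tradeMoney <= 16000) & (train.tradeMoney >= 700)]
    # Filter rather than drop(..., inplace=True), which would modify a slice of the frame
    train = train[train.totalFloor != 0]
    return train

# Outlier handling on the training set
data_train = dropData(data_train)
```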

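```python
import matplotlib.pyplot as plt
import seaborn as sns

# After outlier handling, look at the area and rent distributions again
plt.figure(figsize=(15, 5))
sns.boxplot(x=data_train['area'])
plt.show()

plt.figure(figsize=(15, 5))
sns.boxplot(x=data_train['tradeMoney'])
plt.show()
```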

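Deep cleaning

Analyze the data distribution of each communityName, city, region, and plate, and clean the data accordingly.

Main idea: for the data of each region, clean the area and tradeMoney dimensions in depth, again combining subjective judgment with data visualization.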
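The per-region thresholds in cleanData are chosen by hand. A data-driven complement is to compute a unit price (tradeMoney / area) and apply the IQR rule within each region via `groupby().transform`. This is an alternative sketch, not what cleanData does; the unitPrice feature, the helper name `iqr_mask_by_group`, and the 1.5 multiplier are assumptions, and the toy data is made up:

```python
import pandas as pd

def iqr_mask_by_group(df, group_col, value_col, k=1.5):
    """Hypothetical helper: True for rows inside their group's IQR fences."""
    grouped = df.groupby(group_col)[value_col]
    q1 = grouped.transform(lambda s: s.quantile(0.25))
    q3 = grouped.transform(lambda s: s.quantile(0.75))
    iqr = q3 - q1
    return df[value_col].between(q1 - k * iqr, q3 + k * iqr)

data = pd.DataFrame({
    'region': ['RG00001'] * 5 + ['RG00002'] * 5,
    'area': [50, 60, 70, 80, 50, 40, 45, 50, 55, 60],
    'tradeMoney': [5000, 6600, 7700, 7200, 50000, 2000, 2340, 2500, 2640, 300],
})
# Assumed helper feature: rent per unit of area
data['unitPrice'] = data['tradeMoney'] / data['area']

keep = iqr_mask_by_group(data, 'region', 'unitPrice')
cleaned = data[keep].reset_index(drop=True)
print(cleaned)
```

Regions with few rows or nearly identical prices produce a tiny IQR and therefore very tight fences, which is one reason to keep checking the result visually, as the hand-tuned approach does.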

```python
def cleanData(data):
    # Work on a copy so the caller's frame (possibly a filtered slice) is not modified in place
    data = data.copy()

    # Global thresholds
    data.drop(data[(data['tradeMoney']>16000)].index,inplace=True)
    data.drop(data[(data['area']>160)].index,inplace=True)
    data.drop(data[(data['tradeMoney']<100)].index,inplace=True)
    data.drop(data[(data['totalFloor']==0)].index,inplace=True)

    # Deep cleaning: per-region thresholds on area and tradeMoney
    data.drop(data[(data['region']=='RG00001') & (data['tradeMoney']<1000) & (data['area']>50)].index,inplace=True)
    data.drop(data[(data['region']=='RG00001') & (data['tradeMoney']>25000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00001') & (data['area']>250)&(data['tradeMoney']<20000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00001') & (data['area']>400)&(data['tradeMoney']>50000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00001') & (data['area']>100)&(data['tradeMoney']<2000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00002') & (data['area']<100)&(data['tradeMoney']>60000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00003') & (data['area']<300)&(data['tradeMoney']>30000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00003') & (data['tradeMoney']<500)&(data['area']<50)].index,inplace=True)
    data.drop(data[(data['region']=='RG00003') & (data['tradeMoney']<1500)&(data['area']>100)].index,inplace=True)
    data.drop(data[(data['region']=='RG00003') & (data['tradeMoney']<2000)&(data['area']>300)].index,inplace=True)
    data.drop(data[(data['region']=='RG00003') & (data['tradeMoney']>5000)&(data['area']<20)].index,inplace=True)
    data.drop(data[(data['region']=='RG00003') & (data['area']>600)&(data['tradeMoney']>40000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00004') & (data['tradeMoney']<1000)&(data['area']>80)].index,inplace=True)
    data.drop(data[(data['region']=='RG00006') & (data['tradeMoney']<200)].index,inplace=True)
    data.drop(data[(data['region']=='RG00005') & (data['tradeMoney']<2000)&(data['area']>180)].index,inplace=True)
    data.drop(data[(data['region']=='RG00005') & (data['tradeMoney']>50000)&(data['area']<200)].index,inplace=True)
    data.drop(data[(data['region']=='RG00006') & (data['area']>200)&(data['tradeMoney']<2000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00007') & (data['area']>100)&(data['tradeMoney']<2500)].index,inplace=True)
    data.drop(data[(data['region']=='RG00010') & (data['area']>200)&(data['tradeMoney']>25000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00010') & (data['area']>400)&(data['tradeMoney']<15000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00010') & (data['tradeMoney']<3000)&(data['area']>200)].index,inplace=True)
    data.drop(data[(data['region']=='RG00010') & (data['tradeMoney']>7000)&(data['area']<75)].index,inplace=True)
    data.drop(data[(data['region']=='RG00010') & (data['tradeMoney']>12500)&(data['area']<100)].index,inplace=True)
    data.drop(data[(data['region']=='RG00004') & (data['area']>400)&(data['tradeMoney']>20000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00008') & (data['tradeMoney']<2000)&(data['area']>80)].index,inplace=True)
    data.drop(data[(data['region']=='RG00009') & (data['tradeMoney']>40000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00009') & (data['area']>300)].index,inplace=True)
    data.drop(data[(data['region']=='RG00009') & (data['area']>100)&(data['tradeMoney']<2000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00011') & (data['tradeMoney']<10000)&(data['area']>390)].index,inplace=True)
    data.drop(data[(data['region']=='RG00012') & (data['area']>120)&(data['tradeMoney']<5000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00013') & (data['area']<100)&(data['tradeMoney']>40000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00013') & (data['area']>400)&(data['tradeMoney']>50000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00013') & (data['area']>80)&(data['tradeMoney']<2000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00014') & (data['area']>300)&(data['tradeMoney']>40000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00014') & (data['tradeMoney']<1300)&(data['area']>80)].index,inplace=True)
    data.drop(data[(data['region']=='RG00014') & (data['tradeMoney']<8000)&(data['area']>200)].index,inplace=True)
    data.drop(data[(data['region']=='RG00014') & (data['tradeMoney']<1000)&(data['area']>20)].index,inplace=True)
    data.drop(data[(data['region']=='RG00014') & (data['tradeMoney']>25000)&(data['area']>200)].index,inplace=True)
    data.drop(data[(data['region']=='RG00014') & (data['tradeMoney']<20000)&(data['area']>250)].index,inplace=True)
    data.drop(data[(data['region']=='RG00005') & (data['tradeMoney']>30000)&(data['area']<100)].index,inplace=True)
    data.drop(data[(data['region']=='RG00005') & (data['tradeMoney']<50000)&(data['area']>600)].index,inplace=True)
    data.drop(data[(data['region']=='RG00005') & (data['tradeMoney']>50000)&(data['area']>350)].index,inplace=True)
    data.drop(data[(data['region']=='RG00006') & (data['tradeMoney']>4000)&(data['area']<100)].index,inplace=True)
    data.drop(data[(data['region']=='RG00006') & (data['tradeMoney']<600)&(data['area']>100)].index,inplace=True)
    data.drop(data[(data['region']=='RG00006') & (data['area']>165)].index,inplace=True)
    data.drop(data[(data['region']=='RG00012') & (data['tradeMoney']<800)&(data['area']<30)].index,inplace=True)
    data.drop(data[(data['region']=='RG00007') & (data['tradeMoney']<1100)&(data['area']>50)].index,inplace=True)
    data.drop(data[(data['region']=='RG00004') & (data['tradeMoney']>8000)&(data['area']<80)].index,inplace=True)

    # Relabel large shared (合租) listings as whole-unit (整租) rentals in RG00002 and RG00014
    data.loc[(data['region']=='RG00002')&(data['area']>50)&(data['rentType']=='合租'),'rentType']='整租'
    data.loc[(data['region']=='RG00014')&(data['rentType']=='合租')&(data['area']>60),'rentType']='整租'

    data.drop(data[(data['region']=='RG00008')&(data['tradeMoney']>15000)&(data['area']<110)].index,inplace=True)
    data.drop(data[(data['region']=='RG00008')&(data['tradeMoney']>20000)&(data['area']>110)].index,inplace=True)
    data.drop(data[(data['region']=='RG00008')&(data['tradeMoney']<1500)&(data['area']<50)].index,inplace=True)
    data.drop(data[(data['region']=='RG00008')&(data['rentType']=='合租')&(data['area']>50)].index,inplace=True)

    # Drop region RG00015 entirely
    data.drop(data[(data['region']=='RG00015') ].index,inplace=True)

    data.reset_index(drop=True, inplace=True)
    return data

data_train = cleanData(data_train)
```
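The long list of drop statements in cleanData can also be kept as data: each rule becomes a (region, condition) pair applied in a loop, which makes the thresholds easier to review and adjust. A sketch with two rules copied from cleanData and a made-up toy frame; the `RULES` list, the `apply_drop_rules` helper, and the use of `DataFrame.query` strings are illustrative choices, not part of the original code:

```python
import pandas as pd

# Each hypothetical rule: (region, condition written as a pandas query expression)
RULES = [
    ('RG00001', 'tradeMoney < 1000 and area > 50'),
    ('RG00009', 'area > 300'),
]

def apply_drop_rules(data, rules):
    """Drop rows matching any (region, condition) rule and return a new frame."""
    mask = pd.Series(False, index=data.index)
    for region, condition in rules:
        matched = data[data['region'] == region].query(condition)
        mask.loc[matched.index] = True
    return data[~mask].reset_index(drop=True)

toy = pd.DataFrame({
    'region': ['RG00001', 'RG00001', 'RG00009', 'RG00009'],
    'area': [60, 40, 350, 90],
    'tradeMoney': [800, 800, 9000, 4000],
})
cleaned = apply_drop_rules(toy, RULES)
print(cleaned)
```

The two rentType relabeling steps in cleanData modify rows instead of dropping them, so they would need a separate rule list of their own.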
