Download the following packages:

Elasticsearch: https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.5.1-linux-x86_64.tar.gz

Logstash: https://artifacts.elastic.co/downloads/logstash/logstash-7.5.1.tar.gz

Filebeat: https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.5.1-linux-x86_64.tar.gz

Kibana: https://artifacts.elastic.co/downloads/kibana/kibana-7.5.1-linux-x86_64.tar.gz

Node.js: https://nodejs.org/dist/v10.15.3/node-v10.15.3-linux-x64.tar.gz

PhantomJS: https://github.com/Medium/phantomjs/releases/download/v2.1.1/phantomjs-2.1.1-linux-x86_64.tar.bz2

elasticsearch-head: https://github.com/mobz/elasticsearch-head
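All four Elastic tarballs follow one URL layout, so the version can be factored out if the downloads are scripted. This is a minimal sketch, not part of the original setup: `elk_urls` and `download_all` are hypothetical helper names, and the layout is assumed to hold only for the 7.x linux-x86_64 packages listed above.

```shell
#!/usr/bin/env bash
# Hypothetical helpers: print and fetch the Elastic tarballs for one version.
# Assumes the artifacts.elastic.co layout of the 7.x linux-x86_64 packages above.

elk_urls() {
  local v="$1"
  local base="https://artifacts.elastic.co/downloads"
  echo "$base/elasticsearch/elasticsearch-$v-linux-x86_64.tar.gz"
  echo "$base/logstash/logstash-$v.tar.gz"
  echo "$base/beats/filebeat/filebeat-$v-linux-x86_64.tar.gz"
  echo "$base/kibana/kibana-$v-linux-x86_64.tar.gz"
}

# Download every URL into a directory (default: ./updates).
download_all() {
  local v="$1" dest="${2:-updates}" url
  mkdir -p "$dest"
  elk_urls "$v" | while read -r url; do
    wget -q -P "$dest" "$url" || echo "failed: $url" >&2
  done
}
```

For example, `download_all 7.5.1 /usr/local/elk/updates` would put the tarballs where the `tar -xf updates/...` commands below expect them.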

1、安装elasticsearch

Edit the hosts file (/etc/hosts) on both hosts and add:

10.0.2.111 ip-10-0-2-111

10.0.2.153 ip-10-0-2-153
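Adding the two lines by hand is enough; if the step is scripted, a guard keeps re-runs from duplicating entries. A minimal sketch: `add_host_entry` is a hypothetical helper, and it has to run as root when it targets /etc/hosts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP HOSTNAME" to a hosts file unless that exact line exists.
add_host_entry() {
  local ip="$1" name="$2" file="${3:-/etc/hosts}"
  grep -qxF "$ip $name" "$file" 2>/dev/null || printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Run on both hosts (as root):
# add_host_entry 10.0.2.111 ip-10-0-2-111
# add_host_entry 10.0.2.153 ip-10-0-2-153
```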

First, deploy on the master host

1) Extract the package

[root@ip-10-0-2-153 elk]# tar -xf updates/elasticsearch-7.5.1-linux-x86_64.tar.gz

[root@ip-10-0-2-153 elk]# mv elasticsearch-7.5.1/ elasticsearch

2) Edit the configuration file

[root@ip-10-0-2-153 elk]# cd elasticsearch/

[root@ip-10-0-2-153 elasticsearch]# cd config/

[root@ip-10-0-2-153 config]# mv elasticsearch.yml elasticsearch.yml_bak

[root@ip-10-0-2-153 config]# vim elasticsearch.yml

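The contents of elasticsearch.yml are as follows:

# Cluster name

cluster.name: cpct

# Node name

node.name: ip-10-0-2-153

# Data directory; create it beforehand

path.data: /usr/local/elk/elasticsearch/data

# Log directory; create it beforehand

path.logs: /usr/local/elk/elasticsearch/logs

# Node IP address

network.host: 10.0.2.153

# Transport (TCP) port

transport.tcp.port: 9300

# HTTP port

http.port: 9200

# Seed hosts; the master node's address must be in this list

discovery.seed_hosts: ["10.0.2.153:9300","10.0.2.111:9300"]

# Master-eligible nodes used to bootstrap the cluster; if there are several master nodes, list each of them here

cluster.initial_master_nodes: ["10.0.2.153:9300"]

# Node role settings

# Whether this node may be elected master

node.master: true

# Whether this node stores data

node.data: true

node.ingest: false

node.ml: false

cluster.remote.connect: false

# Cross-origin (CORS) settings, required by elasticsearch-head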

http.cors.enabled: true

http.cors.allow-origin: "*"

http.cors.allow-methods: OPTIONS, HEAD, GET, POST, PUT, DELETE

http.cors.allow-headers: "X-Requested-With, Content-Type, Content-Length, X-User"
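The comments above ask for the path.data and path.logs directories to exist in advance, and the next step hands the tree to a non-root user. A minimal sketch of both, assuming the /usr/local/elk/elasticsearch layout used here; `prepare_es_dirs` is a hypothetical helper, and giving ownership to another user requires root.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create path.data and path.logs under an Elasticsearch
# home directory and give the whole tree to OWNER (user:group).
prepare_es_dirs() {
  local es_home="$1" owner="$2"
  mkdir -p "$es_home/data" "$es_home/logs"
  chown -R "$owner" "$es_home"
}

# As root on each node:
# prepare_es_dirs /usr/local/elk/elasticsearch centos:centos
```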

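3) Create a regular user and make it the owner and group of the elasticsearch directory (centos in my case), then start Elasticsearch as centos. Elasticsearch refuses to start as root.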

[root@ip-10-0-2-153 elk]# chown -R centos.centos elasticsearch/

[root@ip-10-0-2-153 elk]# su - centos

Last login: Tue Jan 7 19:12:26 CST 2020 from 10.0.1.85 on pts/0

[centos@ip-10-0-2-153 ~]$ cd /usr/local/elk/elasticsearch/bin/

[centos@ip-10-0-2-153 bin]$ ./elasticsearch -d

4) At this point the following errors appear:

[centos@ip-10-0-2-153 bin]$ ./elasticsearch -d

future versions of Elasticsearch will require Java 11; your Java version from [/home/deploy/java8/jre] does not meet this requirement

[centos@ip-10-0-2-153 bin]$ ERROR: [1] bootstrap checks failed

[1]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]

Solutions:

A. "future versions of Elasticsearch will require Java 11; your Java version from [/home/deploy/java8/jre] does not meet this requirement" is only a warning. Elasticsearch 7.5 still runs on Java 8, so it can be ignored for now.

B. For "[1]: max virtual memory areas vm.max_map_count [65530] is too low, increase to at least [262144]", switch to root and raise the limit:

[root@ip-10-0-2-153 elk]# vi /etc/sysctl.conf

[root@ip-10-0-2-153 elk]# tail -1 /etc/sysctl.conf

vm.max_map_count=655360

[root@ip-10-0-2-153 elk]# sysctl -p

vm.max_map_count = 655360
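To confirm the new value before restarting, the live setting can be compared with the minimum named in the bootstrap check (262144). A minimal sketch assuming the Linux /proc interface; `check_max_map_count` is a hypothetical helper. `sysctl -w vm.max_map_count=262144` would also apply a value immediately, but only the /etc/sysctl.conf entry survives a reboot.

```shell
#!/usr/bin/env bash
# Minimum value demanded by the Elasticsearch bootstrap check shown above.
REQUIRED_MAP_COUNT=262144

# Hypothetical helper: report whether vm.max_map_count is high enough.
# Reads /proc/sys/vm/max_map_count unless another file is given.
check_max_map_count() {
  local file="${1:-/proc/sys/vm/max_map_count}" current
  current=$(cat "$file") || return 2
  if [ "$current" -ge "$REQUIRED_MAP_COUNT" ]; then
    echo "ok ($current)"
  else
    echo "too low ($current < $REQUIRED_MAP_COUNT)"
    return 1
  fi
}
```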

Then start Elasticsearch again as centos:

[centos@ip-10-0-2-153 ~]$ cd /usr/local/elk/elasticsearch/bin/

[centos@ip-10-0-2-153 bin]$ ./elasticsearch -d

future versions of Elasticsearch will require Java 11; your Java version from [/home/deploy/java8/jre] does not meet this requirement

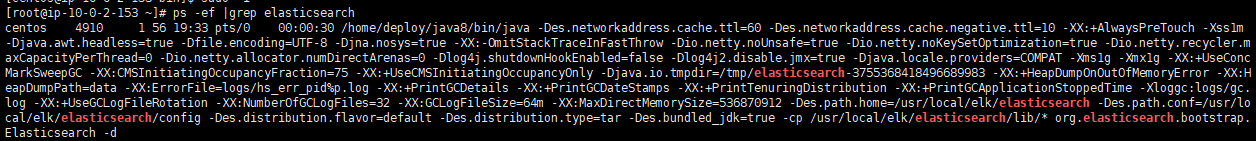

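Check that the process has started.

Second, deploy on the secondary host. The procedure is the same as on the master.

Its elasticsearch.yml is shown below; only node.name, network.host and node.master differ from the master's file.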

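# Cluster name

cluster.name: cpct

# Node name

node.name: ip-10-0-2-111

# Data directory; create it beforehand

path.data: /usr/local/elk/elasticsearch/data

# Log directory; create it beforehand

path.logs: /usr/local/elk/elasticsearch/logs

# Node IP address

network.host: 10.0.2.111

# Transport (TCP) port

transport.tcp.port: 9300

# HTTP port

http.port: 9200

# Seed hosts; the master node's address must be in this list

discovery.seed_hosts: ["10.0.2.153:9300","10.0.2.111:9300"]

# Master-eligible nodes used to bootstrap the cluster; if there are several master nodes, list each of them here

cluster.initial_master_nodes: ["10.0.2.153:9300"]

# Node role settings

# Whether this node may be elected master

node.master: false

# Whether this node stores data

node.data: true

node.ingest: false

node.ml: false

cluster.remote.connect: false

# Cross-origin (CORS) settings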

http.cors.enabled: true

http.cors.allow-origin: "*"

http.cors.allow-methods: OPTIONS, HEAD, GET, POST, PUT, DELETE

http.cors.allow-headers: "X-Requested-With, Content-Type, Content-Length, X-User"
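Since the two files differ only in node.name, network.host and node.master, they could be generated from one template. A minimal sketch assuming bash; `render_es_yml` is a hypothetical helper, and every other line is copied from the listings in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an elasticsearch.yml for one node of the cpct cluster.
# Arguments: node name, node IP, "true"/"false" for node.master.
render_es_yml() {
  local name="$1" ip="$2" master="$3"
  cat <<EOF
cluster.name: cpct
node.name: $name
path.data: /usr/local/elk/elasticsearch/data
path.logs: /usr/local/elk/elasticsearch/logs
network.host: $ip
transport.tcp.port: 9300
http.port: 9200
discovery.seed_hosts: ["10.0.2.153:9300","10.0.2.111:9300"]
cluster.initial_master_nodes: ["10.0.2.153:9300"]
node.master: $master
node.data: true
node.ingest: false
node.ml: false
cluster.remote.connect: false
http.cors.enabled: true
http.cors.allow-origin: "*"
http.cors.allow-methods: OPTIONS, HEAD, GET, POST, PUT, DELETE
http.cors.allow-headers: "X-Requested-With, Content-Type, Content-Length, X-User"
EOF
}

# On the secondary host:
# render_es_yml ip-10-0-2-111 10.0.2.111 false > /usr/local/elk/elasticsearch/config/elasticsearch.yml
```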

Third, set up elasticsearch-head on the master host

1) Install Node.js

[root@ip-10-0-2-153 updates]# tar -xf node-v10.15.3-linux-x64.tar.gz

[root@ip-10-0-2-153 updates]# mv node-v10.15.3-linux-x64 ../

[root@ip-10-0-2-153 updates]# cd ../

[root@ip-10-0-2-153 elk]# mv node-v10.15.3-linux-x64/ node

[root@ip-10-0-2-153 elk]# source /etc/profile

[root@ip-10-0-2-153 elk]# node -v

v10.15.3
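`source /etc/profile` only makes `node` available if /etc/profile already puts the Node.js bin directory on PATH, and that line is not shown above. A minimal sketch of adding it, assuming Node.js was moved to /usr/local/elk/node as above; `add_node_to_path` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a PATH export for Node.js to a profile file, once.
add_node_to_path() {
  local node_home="$1" profile="${2:-/etc/profile}"
  local line="export PATH=$node_home/bin:\$PATH"
  grep -qxF "$line" "$profile" 2>/dev/null || echo "$line" >> "$profile"
}

# As root, then reload the profile:
# add_node_to_path /usr/local/elk/node && source /etc/profile && node -v
```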

2) Download elasticsearch-head from GitHub (bzip2 is needed later to unpack the PhantomJS archive)

[root@ip-10-0-2-153 elasticsearch-head]# yum -y install bzip2

[root@ip-10-0-2-153 elk]# git clone https://github.com/mobz/elasticsearch-head

Cloning into 'elasticsearch-head'...

[root@ip-10-0-2-153 elk]# cd elasticsearch-head

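[root@ip-10-0-2-153 elasticsearch-head]# wget https://github.com/Medium/phantomjs/releases/download/v2.1.1/phantomjs-2.1.1-linux-x86_64.tar.bz2

As the npm output below shows, the installer reuses an archive it finds in /tmp/phantomjs/ instead of downloading it again, so the file has to end up in that directory (for example by adding -P /tmp/phantomjs/ to the wget command).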

[root@ip-10-0-2-153 elasticsearch-head]# npm install

npm WARN deprecated phantomjs-prebuilt@2.1.16: this package is now deprecated

> phantomjs-prebuilt@2.1.16 install /usr/local/elk/elasticsearch-head/node_modules/phantomjs-prebuilt

> node install.js

PhantomJS not found on PATH

Download already available at /tmp/phantomjs/phantomjs-2.1.1-linux-x86_64.tar.bz2

Verified checksum of previously downloaded file

Extracting tar contents (via spawned process)

Removing /usr/local/elk/elasticsearch-head/node_modules/phantomjs-prebuilt/lib/phantom

Copying extracted folder /tmp/phantomjs/phantomjs-2.1.1-linux-x86_64.tar.bz2-extract-1578400904569/phantomjs-2.1.1-linux-x86_64 -> /usr/local/elk/elasticsearch-head/node_modules/phantomjs-prebuilt/lib/phantom

Writing location.js file

Done. Phantomjs binary available at /usr/local/elk/elasticsearch-head/node_modules/phantomjs-prebuilt/lib/phantom/bin/phantomjs

npm notice created a lockfile as package-lock.json. You should commit this file.

npm WARN elasticsearch-head@0.0.0 license should be a valid SPDX license expression

npm WARN optional SKIPPING OPTIONAL DEPENDENCY: fsevents@1.2.11 (node_modules/fsevents):

npm WARN notsup SKIPPING OPTIONAL DEPENDENCY: Unsupported platform for fsevents@1.2.11: wanted {"os":"darwin","arch":"any"} (current: {"os":"linux","arch":"x64"})

added 67 packages from 69 contributors and audited 1771 packages in 7.116s

found 40 vulnerabilities (19 low, 2 moderate, 19 high)

run `npm audit fix` to fix them, or `npm audit` for details

[root@ip-10-0-2-153 elasticsearch-head]# npm run start &

[1] 5731

[root@ip-10-0-2-153 elasticsearch-head]#

> elasticsearch-head@0.0.0 start /usr/local/elk/elasticsearch-head

> grunt server

Running "connect:server" (connect) task

Waiting forever...

Started connect web server on http://localhost:9100
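elasticsearch-head runs in the browser on port 9100 and calls Elasticsearch on port 9200, which is why the http.cors.* settings were added earlier. One way to check that they took effect is to send a request with an Origin header and look for Access-Control-Allow-Origin in the response headers. A minimal sketch; `has_cors_header` is a hypothetical helper that reads response headers on stdin.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the HTTP headers on stdin contain
# an Access-Control-Allow-Origin header (header names are case-insensitive).
has_cors_header() {
  grep -qi '^access-control-allow-origin:'
}

# Usage against the master node:
# curl -s -I -H 'Origin: http://10.0.2.153:9100' http://10.0.2.153:9200/ | has_cors_header \
#   && echo "CORS enabled" || echo "CORS header missing"
```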

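Fourth, test the deployment

1) Open http://10.0.2.153:9200/ to check that Elasticsearch is running.

2) Open http://10.0.2.153:9200/_cluster/health?pretty=true to check the health of the whole cluster.

3) Finally, open http://10.0.2.153:9100 to view the cluster in elasticsearch-head (if it tries to connect to localhost, enter http://10.0.2.153:9200/ in its connection box).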
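The health check can also be scripted with curl. This minimal sketch extracts the status field (green, yellow or red) from the _cluster/health response; `health_status` is a hypothetical helper that parses with sed rather than a JSON tool, so it assumes "status" appears once, as it does in that response.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "status" value from a _cluster/health JSON body on stdin.
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Usage against the master node; with both nodes joined, number_of_nodes should be 2:
# curl -s 'http://10.0.2.153:9200/_cluster/health?pretty=true' | health_status
```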

2. Install Kibana

1) Extract the package and edit the configuration file

[root@ip-10-0-2-211 elk]# tar -xf updates/kibana-7.5.1-linux-x86_64.tar.gz

[root@ip-10-0-2-211 elk]# mv kibana-7.5.1-linux-x86_64/ kibana

[root@ip-10-0-2-211 elk]# cd kibana/

[root@ip-10-0-2-211 kibana]# vim config/kibana.yml

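Only kibana.yml needs to be changed: set server.host to this machine's address (10.0.2.211) and elasticsearch.hosts to the Elasticsearch address (http://10.0.2.153:9200).

2) Start Kibana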
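The two kibana.yml edits can also be made without an editor. A minimal sketch, assuming GNU sed (for -i and the 0,/regex/ address) and that kibana.yml ships with these keys commented out; `set_yml_key` is a hypothetical helper that replaces the first line defining the key, commented or not, or appends the key if none exists.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set "KEY: VALUE" in a YAML file, replacing the first
# line that defines KEY (commented out or not), or appending it if none does.
# Requires GNU sed. KEY is used as a regex, so its dots match any character.
# VALUE must not contain "|" or "&", which are special in the sed replacement.
set_yml_key() {
  local file="$1" key="$2" value="$3"
  local re="^#?[[:space:]]*${key}:"
  if grep -qE "$re" "$file"; then
    sed -i -E "0,/${re}/s|${re}.*|${key}: ${value}|" "$file"
  else
    printf '%s: %s\n' "$key" "$value" >> "$file"
  fi
}

# On the Kibana host (10.0.2.211), from the kibana directory:
# set_yml_key config/kibana.yml server.host '"10.0.2.211"'
# set_yml_key config/kibana.yml elasticsearch.hosts '["http://10.0.2.153:9200"]'
```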

[root@ip-10-0-2-211 kibana]# cd bin/

[root@ip-10-0-2-211 bin]# ./kibana

Kibana should not be run as root. Use --allow-root to continue.

[root@ip-10-0-2-211 bin]# ./kibana --allow-root &

[1] 30371

3) Check that the process is running

[root@ip-10-0-2-211 bin]# ps -ef |grep node

root 30371 30179 32 10:01 pts/0 00:00:38 ./../node/bin/node ./../src/cli --allow-root

[root@ip-10-0-2-211 bin]# netstat -antpu |grep 5601

tcp 0 0 10.0.2.211:5601 0.0.0.0:* LISTEN 30371/./../node/bin

4) Open http://10.0.2.211:5601

3. Deploy log collection on the client

1) Deploy Logstash

Extract the package and create the pipeline configuration file:

[root@ip-10-0-2-95 elk]# tar -xf updates/logstash-7.5.1.tar.gz

[root@ip-10-0-2-95 elk]# mv logstash-7.5.1/ logstash

[root@ip-10-0-2-95 elk]# cd logstash/

[root@ip-10-0-2-95 logstash]# cd bin/

[root@ip-10-0-2-95 bin]# vim log_manage.conf

Start Logstash:

[root@ip-10-0-2-95 bin]# ./logstash -f log_manage.conf &

[1] 1920

log_manage.conf contains the following:

input {

file{

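path => "/var/log/messages" # log file to collect

type => "system" # event type; any name works as long as it matches the type tested in output { }

start_position => "beginning" # read from the start of the file (applies only to files Logstash has not seen before)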

}

file{

path => "/home/deploy/activity_service/logs/gxzx-act-web.log"

type => "activity"

start_position => "beginning"

}

file{

path => "/home/deploy/tomcat8_manage/logs/catalina-*out"

type => "manage"

start_position => "beginning"

}

file{

path => "/home/deploy/tomcat8_coin/logs/catalina-*out"

type => "coin"

start_position => "beginning"

}

}

output {

if [type] == "system" { # if the type is system,

elasticsearch { # send the event to Elasticsearch

hosts => ["10.0.2.153:9200"] # Elasticsearch listen address and port

index => "system-%{+YYYY.MM.dd}" # index name pattern, one index per day

}

}

if [type] == "activity" {

elasticsearch {

hosts => ["10.0.2.153:9200"]

index => "activity-%{+YYYY.MM.dd}"

}

}

if [type] == "coin" {

elasticsearch {

hosts => ["10.0.2.153:9200"]

index => "coin-%{+YYYY.MM.dd}"

}

}

if [type] == "manage" {

elasticsearch {

hosts => ["10.0.2.153:9200"]

index => "manage-%{+YYYY.MM.dd}"

}

}

}
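Each output writes to a daily index named after the event type, so the index to look for on a given day can be derived from the type. A minimal sketch; `index_for_type` is a hypothetical helper. It defaults to the current UTC date because, as far as I know, Logstash fills %{+YYYY.MM.dd} from the event's @timestamp, which is UTC; on these CST (UTC+8) servers, logs written shortly after local midnight may still land in the previous day's index.

```shell
#!/usr/bin/env bash
# Hypothetical helper: name of the daily index a given type is written to,
# matching index => "<type>-%{+YYYY.MM.dd}" in log_manage.conf.
# DAY is YYYY-MM-DD; it defaults to today's date in UTC.
index_for_type() {
  local type="$1" day="${2:-$(date -u +%Y-%m-%d)}"
  echo "${type}-${day//-/.}"
}

# List the indices Logstash has created, then count today's activity documents:
# curl -s 'http://10.0.2.153:9200/_cat/indices?v'
# curl -s "http://10.0.2.153:9200/$(index_for_type activity)/_count?pretty"
```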

Check that the service has started:

[root@ip-10-0-2-95 bin]# ps -ef |grep logstash

root 1920 1848 99 10:34 pts/0 00:00:33 /home/deploy/java8/bin/java -Xms1g -Xmx1g -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFraction=75 -XX:+UseCMSInitiatingOccupancyOnly -Djava.awt.headless=true -Dfile.encoding=UTF-8 -Djruby.compile.invokedynamic=true -Djruby.jit.threshold=0 -Djruby.regexp.interruptible=true -XX:+HeapDumpOnOutOfMemoryError -Djava.security.egd=file:/dev/urandom -Dlog4j2.isThreadContextMapInheritable=true -cp /usr/local/elk/logstash/logstash-core/lib/jars/animal-sniffer-annotations-1.14.jar:/usr/local/elk/logstash/logstash-core/lib/jars/commons-codec-1.11.jar:/usr/local/elk/logstash/logstash-core/lib/jars/commons-compiler-3.0.11.jar:/usr/local/elk/logstash/logstash-core/lib/jars/error_prone_annotations-2.0.18.jar:/usr/local/elk/logstash/logstash-core/lib/jars/google-java-format-1.1.jar:/usr/local/elk/logstash/logstash-core/lib/jars/gradle-license-report-0.7.1.jar:/usr/local/elk/logstash/logstash-core/lib/jars/guava-22.0.jar:/usr/local/elk/logstash/logstash-core/lib/jars/j2objc-annotations-1.1.jar:/usr/local/elk/logstash/logstash-core/lib/jars/jackson-annotations-2.9.9.jar:/usr/local/elk/logstash/logstash-core/lib/jars/jackson-core-2.9.9.jar:/usr/local/elk/logstash/logstash-core/lib/jars/jackson-databind-2.9.9.3.jar:/usr/local/elk/logstash/logstash-core/lib/jars/jackson-dataformat-cbor-2.9.9.jar:/usr/local/elk/logstash/logstash-core/lib/jars/janino-3.0.11.jar:/usr/local/elk/logstash/logstash-core/lib/jars/javassist-3.24.0-GA.jar:/usr/local/elk/logstash/logstash-core/lib/jars/jruby-complete-9.2.8.0.jar:/usr/local/elk/logstash/logstash-core/lib/jars/jsr305-1.3.9.jar:/usr/local/elk/logstash/logstash-core/lib/jars/log4j-api-2.11.1.jar:/usr/local/elk/logstash/logstash-core/lib/jars/log4j-core-2.11.1.jar:/usr/local/elk/logstash/logstash-core/lib/jars/log4j-slf4j-impl-2.11.1.jar:/usr/local/elk/logstash/logstash-core/lib/jars/logstash-core.jar:/usr/local/elk/logstash/logstash-core/lib/jars/org.eclipse.core.commands-3.6.0.jar:/usr/local/elk/logstash/logstash-core/lib/jars/org.eclipse.core.contenttype-3.4.100.jar:/usr/local/elk/logstash/logstash-core/lib/jars/org.eclipse.core.expressions-3.4.300.jar:/usr/local/elk/logstash/logstash-core/lib/jars/org.eclipse.core.filesystem-1.3.100.jar:/usr/local/elk/logstash/logstash-core/lib/jars/org.eclipse.core.jobs-3.5.100.jar:/usr/local/elk/logstash/logstash-core/lib/jars/org.eclipse.core.resources-3.7.100.jar:/usr/local/elk/logstash/logstash-core/lib/jars/org.eclipse.core.runtime-3.7.0.jar:/usr/local/elk/logstash/logstash-core/lib/jars/org.eclipse.equinox.app-1.3.100.jar:/usr/local/elk/logstash/logstash-core/lib/jars/org.eclipse.equinox.common-3.6.0.jar:/usr/local/elk/logstash/logstash-core/lib/jars/org.eclipse.equinox.preferences-3.4.1.jar:/usr/local/elk/logstash/logstash-core/lib/jars/org.eclipse.equinox.registry-3.5.101.jar:/usr/local/elk/logstash/logstash-core/lib/jars/org.eclipse.jdt.core-3.10.0.jar:/usr/local/elk/logstash/logstash-core/lib/jars/org.eclipse.osgi-3.7.1.jar:/usr/local/elk/logstash/logstash-core/lib/jars/org.eclipse.text-3.5.101.jar:/usr/local/elk/logstash/logstash-core/lib/jars/reflections-0.9.11.jar:/usr/local/elk/logstash/logstash-core/lib/jars/slf4j-api-1.7.25.jar org.logstash.Logstash -f log_manage.conf

2) Install and start Filebeat

[root@ip-10-0-2-95 elk]# tar -xf updates/filebeat-7.5.1-linux-x86_64.tar.gz

[root@ip-10-0-2-95 elk]# mv filebeat-7.5.1-linux-x86_64/ filebeat

[root@ip-10-0-2-95 elk]# cd filebeat/

[root@ip-10-0-2-95 filebeat]# ./filebeat &

[1] 2027

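4. Configure the log index patterns in the Kibana web UI

1) Open the Create index pattern page (under Management)

2) Create the index pattern

3) Open the Discover page and query the index pattern just created

4) Create a second index pattern

5) When searching logs, switch between indices with the index pattern selector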

In the search bar, filter with a field name, a colon and the value to match, for example type:activity.
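The same field:value filtering can be tried directly against Elasticsearch through its URI search, the q parameter of _search. That parameter takes Lucene query syntax rather than Kibana's KQL, though simple field:value terms look the same in both. A minimal sketch; `es_search_url` is a hypothetical helper that only percent-encodes spaces, so it assumes simple queries.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a URI-search URL for an index pattern and a
# field:value query. Only spaces are percent-encoded; keep queries simple.
es_search_url() {
  local host="$1" index="$2" query="$3"
  echo "http://${host}/${index}/_search?q=${query// /%20}"
}

# Example: up to five system-log events whose message field contains "error":
# curl -s "$(es_search_url 10.0.2.153:9200 'system-*' 'message:error')&size=5&pretty"
```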

That completes the ELK deployment. Beyond this, the logs collected by Logstash can also be shipped to services such as Kafka or Redis to build a clustered setup.

Source: CSDN

Author: yunson_Liu

Link: https://blog.csdn.net/baidu_38432732/article/details/103878589