Node and instance planning:

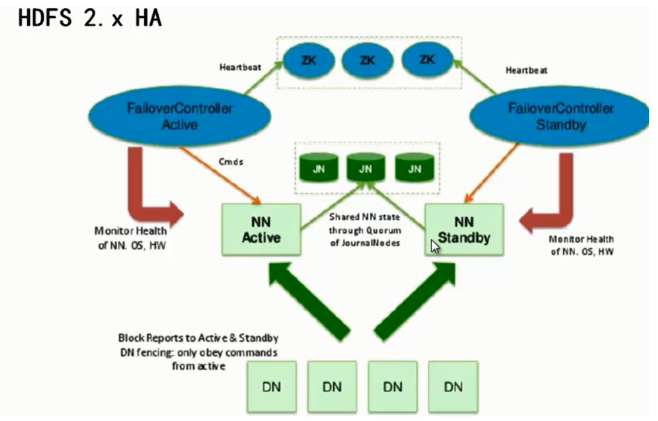

For the key points and caveats of deploying High Availability With QJM, see the HA deployment section of https://my.oschina.net/u/3862440/blog/2208568.

Edit "hdfs-site.xml"

dfs.nameservices

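--Configure the nameservice. Each cluster has one service name; the NNs and other nodes sit behind it, and the cluster is exposed to clients as a single service under that name.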

<property>
  <name>dfs.nameservices</name>
  <value>mycluster</value>
</property>

dfs.ha.namenodes.[nameservice ID]

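--Configure the IDs of all NNs in the nameservice. A nameservice contains several NNs, and for NN high availability the cluster must know each node's ID so it can tell them apart. I planned 2 NNs, so there are two NN IDs.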
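The [nameservice ID] and [name node ID] placeholders in the key names below are filled in mechanically, one key per NN. A minimal Python sketch of that expansion, assuming the hosts and ports planned in this post; the helper name ha_namenode_props is hypothetical, not a Hadoop API:

```python
# Hypothetical helper (not part of Hadoop): expands the per-NameNode HA keys
# for one nameservice, using the hosts and ports planned in this post.


def ha_namenode_props(nameservice, namenodes, rpc_port=8020, http_port=50070):
    """Return hdfs-site.xml properties for one HA nameservice.

    namenodes maps each NameNode ID (e.g. "nn1") to its host name.
    """
    props = {
        "dfs.nameservices": nameservice,
        # Comma-separated list of every NameNode ID in the nameservice.
        f"dfs.ha.namenodes.{nameservice}": ",".join(namenodes),
    }
    for nn_id, host in namenodes.items():
        # Key pattern: <prefix>.[nameservice ID].[name node ID]
        props[f"dfs.namenode.rpc-address.{nameservice}.{nn_id}"] = f"{host}:{rpc_port}"
        props[f"dfs.namenode.http-address.{nameservice}.{nn_id}"] = f"{host}:{http_port}"
    return props


if __name__ == "__main__":
    plan = ha_namenode_props("mycluster", {"nn1": "hd1", "nn2": "hd2"})
    for key, value in plan.items():
        print(f"{key} = {value}")
```

The printed keys and values line up with the properties configured in the rest of this section.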

<property>
  <name>dfs.ha.namenodes.mycluster</name>
  <value>nn1,nn2</value>
</property>

dfs.namenode.rpc-address.[nameservice ID].[name node ID]

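--Configure the RPC address of every NN. Clients and DNs use it to talk to the NN (block data itself is transferred directly between clients and DNs, not through the NN). I have 2 NNs and 3 DNs here, so an RPC address is configured for each of the two NNs.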

<property>
  <name>dfs.namenode.rpc-address.mycluster.nn1</name>
  <value>hd1:8020</value>
</property>
<property>
  <name>dfs.namenode.rpc-address.mycluster.nn2</name>
  <value>hd2:8020</value>
</property>

dfs.namenode.http-address.[nameservice ID].[name node ID]

--Configure the HTTP address of every NN, i.e. the address of the web management UI. The UI has to reach each NN, so both NNs get an HTTP address.

<property>
  <name>dfs.namenode.http-address.mycluster.nn1</name>
  <value>hd1:50070</value>
</property>
<property>
  <name>dfs.namenode.http-address.mycluster.nn2</name>
  <value>hd2:50070</value>
</property>

dfs.namenode.shared.edits.dir

-- the URI which identifies the group of JNs where the NameNodes will write/read edits.
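The shared edits URI follows the pattern qjournal://host1:port1;host2:port2;host3:port3/journalId. A small sketch that assembles it, assuming the JN hosts hd2, hd3 and hd4 and the port 8485 used here; qjournal_uri is a hypothetical helper:

```python
# Hypothetical helper: builds the qjournal:// URI for dfs.namenode.shared.edits.dir.
# 8485 is the JournalNode RPC port used in this post.


def qjournal_uri(journal_nodes, journal_id, port=8485):
    """Return qjournal://host1:port;host2:port;.../journalId."""
    if not journal_nodes:
        raise ValueError("at least one JournalNode is required")
    # JournalNode addresses are separated by semicolons, not commas.
    hosts = ";".join(f"{host}:{port}" for host in journal_nodes)
    # The trailing path is the journal ID; this post reuses the nameservice ID.
    return f"qjournal://{hosts}/{journal_id}"


if __name__ == "__main__":
    print(qjournal_uri(["hd2", "hd3", "hd4"], "mycluster"))
```

Running it prints the same value that is configured in the property below.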

<property>
  <name>dfs.namenode.shared.edits.dir</name>
  <value>qjournal://hd2:8485;hd3:8485;hd4:8485/mycluster</value>
</property>

dfs.journalnode.edits.dir (create it if it does not exist)

-- the path where the JournalNode daemon will store its local state.

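It is worth being clear about what the JNs do here. The official guide describes the shared edits as "Using the Quorum Journal Manager or Conventional Shared Storage", which shows that the JNs provide the shared storage for the NN edit log: the active NN writes every edit to the JNs, and the standby NN reads the edits back from them to keep its namespace in sync. The DNs do not use the JNs. An edit only counts as written once a majority of JNs has accepted it, which is why an odd number of JNs is run; the 3 JNs here (hd2, hd3, hd4) keep working if one of them fails.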
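The majority rule is what makes 3 JNs the usual minimum. A sketch of the arithmetic, written for illustration only (the function names are hypothetical):

```python
# Majority-quorum arithmetic for the JournalNodes (a sketch of the rule, not
# Hadoop code): an edit is committed once a majority of JNs has accepted it.


def jn_write_quorum(n):
    """Smallest number of JournalNodes that forms a majority of n."""
    return n // 2 + 1


def jn_tolerated_failures(n):
    """How many JournalNodes can fail while a majority is still reachable."""
    return n - jn_write_quorum(n)  # equals (n - 1) // 2


if __name__ == "__main__":
    for n in range(1, 6):
        print(f"{n} JNs: majority {jn_write_quorum(n)}, "
              f"tolerates {jn_tolerated_failures(n)} failure(s)")
```

With 4 JNs the cluster still tolerates only 1 failure, the same as with 3, which is why an odd number of JNs is recommended.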

<property>
  <name>dfs.journalnode.edits.dir</name>
  <value>/home/hadoop/journal/node/local/data</value>
</property>

dfs.client.failover.proxy.provider.[nameservice ID]

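--Fixed setting for client connections: clients use this class to locate and connect to the active NN of the nameservice. It is required.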
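As a rough illustration of the client side, the following toy model (plain Python, not Hadoop's implementation, which wraps this in its own retry policy) walks the configured NN addresses until one of them answers as active. StandbyError, call_with_failover and fake_rpc are hypothetical names:

```python
# Toy model, not Hadoop's code: ConfiguredFailoverProxyProvider gives the client
# every NameNode address of the nameservice, and the client moves on to the next
# one when the current NameNode is in standby or cannot be reached.


class StandbyError(Exception):
    """Hypothetical stand-in for the error a standby NameNode returns."""


def call_with_failover(addresses, call):
    """Try call(address) on each NameNode address in order; return the first success."""
    last_error = None
    for address in addresses:
        try:
            return call(address)
        except (StandbyError, ConnectionError) as exc:
            last_error = exc  # this NameNode cannot serve the request; try the next
    raise RuntimeError(f"no active NameNode among {addresses}") from last_error


def fake_rpc(address):
    """Pretend hd1 is standby and hd2 is active."""
    if address == "hd1:8020":
        raise StandbyError(address)
    return f"served by {address}"


if __name__ == "__main__":
    print(call_with_failover(["hd1:8020", "hd2:8020"], fake_rpc))
```

The point of the sketch is that the client never needs to know in advance which NN is active; it only needs the full list from hdfs-site.xml.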

<property>
  <name>dfs.client.failover.proxy.provider.mycluster</name>
  <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
</property>

dfs.ha.fencing.methods

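--Configure sshfence. Fencing guarantees that, during a failover, the previously active NN is shut out before the other NN becomes active: with sshfence, the ZKFC taking over SSHes into the old active NN's host and kills the NameNode process there. This resembles the SSH trust (passwordless login) configured earlier, but it serves a different purpose: so far only NN-to-DN passwordless login has been set up, whereas sshfence also needs passwordless SSH between the NN hosts themselves, using the private key configured below.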
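The ordering rule for fencing methods can be shown with a toy model. This is a sketch under the assumption that each method simply reports success or failure (real sshfence works over SSH); parse_fencing_methods and fence are hypothetical names:

```python
# Toy model, not Hadoop's implementation: the methods in dfs.ha.fencing.methods
# (one per line) are tried in order until one reports success; if every method
# fails, the failover is abandoned rather than risking two active NameNodes.


def parse_fencing_methods(value):
    """Split the property value into one fencing method per non-empty line."""
    return [line.strip() for line in value.splitlines() if line.strip()]


def fence(methods, runners):
    """runners maps a method name (e.g. "sshfence") to a callable returning bool."""
    for method in methods:
        name = method.split("(", 1)[0]  # "sshfence(hadoop:22)" -> "sshfence"
        runner = runners.get(name)
        if runner is not None and runner():
            return method  # the old active NameNode is known to be fenced
    raise RuntimeError("all fencing methods failed; failover aborted")


if __name__ == "__main__":
    # Simulate sshfence failing, e.g. because passwordless SSH between the NN hosts is missing.
    runners = {"sshfence": lambda: False}
    try:
        fence(parse_fencing_methods("sshfence"), runners)
    except RuntimeError as exc:
        print(exc)
```

The simulated run ends in the failure message, which is exactly the situation the NN-to-NN passwordless SSH is meant to prevent.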

<property>
  <name>dfs.ha.fencing.methods</name>
  <value>sshfence</value>
</property>
<property>
  <name>dfs.ha.fencing.ssh.private-key-files</name>
  <value>/home/hadoop/.ssh/id_rsa</value>
</property>

Configuring automatic failover 1:

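--Configure automatic failover. Automatic failover needs ZK: a ZKFC process runs alongside each NN, monitors that NN's health and uses ZK to elect which NN is active; if the active NN fails, the ZKFC on the other NN makes its own NN active. Clients do not query ZK; they locate the active NN through the failover proxy provider configured above.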
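The election can be pictured with a toy in-memory model: the ZKFC whose NN is healthy and that creates the lock znode first becomes active, and the znode disappears together with its owner's ZK session. FakeZooKeeper and run_election are hypothetical stand-ins, not the ZooKeeper or Hadoop APIs:

```python
# Toy in-memory model (no real ZooKeeper) of the ZKFC election: whoever creates
# the ephemeral lock znode first becomes active, and the znode disappears when
# its owner's ZooKeeper session ends, letting the other ZKFC take over.


class FakeZooKeeper:
    def __init__(self):
        self.lock_owner = None  # session that currently holds the lock znode

    def try_create_ephemeral_lock(self, session):
        """Succeeds only if nobody holds the lock yet."""
        if self.lock_owner is None:
            self.lock_owner = session
            return True
        return False

    def expire_session(self, session):
        """An ephemeral znode is deleted when its owning session expires."""
        if self.lock_owner == session:
            self.lock_owner = None


def run_election(zk, healthy):
    """Every ZKFC whose local NameNode is healthy tries to take the lock."""
    for nn_id, is_healthy in healthy.items():
        if is_healthy:
            zk.try_create_ephemeral_lock(nn_id)
    return zk.lock_owner  # the NameNode that should be active


if __name__ == "__main__":
    zk = FakeZooKeeper()
    print(run_election(zk, {"nn1": True, "nn2": True}))   # nn1 wins the first round
    zk.expire_session("nn1")                              # e.g. hd1 crashes
    print(run_election(zk, {"nn1": False, "nn2": True}))  # nn2 takes over
```

In the real system the winning ZKFC also fences the old active NN before promoting its own, which is where the sshfence setting comes in.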

<property>
  <name>dfs.ha.automatic-failover.enabled</name>
  <value>true</value>
</property>

Edit core-site.xml

fs.defaultFS

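--Configure the default file system URI. With HA it points to the nameservice ID rather than to a single NN host.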
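Because fs.defaultFS names the nameservice rather than a host, the client has to map hdfs://mycluster to the NN RPC addresses configured in hdfs-site.xml. A simplified sketch of that mapping, assuming a flat dict of the properties above; namenode_rpc_addresses and EXAMPLE_CONF are hypothetical:

```python
from urllib.parse import urlparse

# Example properties taken from the hdfs-site.xml settings in this post.
EXAMPLE_CONF = {
    "dfs.ha.namenodes.mycluster": "nn1,nn2",
    "dfs.namenode.rpc-address.mycluster.nn1": "hd1:8020",
    "dfs.namenode.rpc-address.mycluster.nn2": "hd2:8020",
}


def namenode_rpc_addresses(default_fs, conf):
    """Map an hdfs:// URI to the NameNode RPC addresses a client would try."""
    authority = urlparse(default_fs).netloc  # "mycluster": a logical name, no port
    nn_ids = conf.get(f"dfs.ha.namenodes.{authority}")
    if nn_ids is None:
        # Not a configured nameservice: treat the authority as a plain host:port.
        return [authority]
    return [conf[f"dfs.namenode.rpc-address.{authority}.{nn_id.strip()}"]
            for nn_id in nn_ids.split(",")]


if __name__ == "__main__":
    print(namenode_rpc_addresses("hdfs://mycluster", EXAMPLE_CONF))
```

This is also why the logical URI carries no port: the ports come from the per-NN rpc-address keys.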

<property>
  <name>fs.defaultFS</name>
  <value>hdfs://mycluster</value>
</property>

Configuring automatic failover 2: --configure automatic failover (the ZK ensemble the ZKFCs connect to)

<property>
  <name>ha.zookeeper.quorum</name>
  <value>hd1:2181,hd2:2181,hd3:2181</value>
</property>

Configure the Hadoop temporary directory (create it if it does not exist)

<property>
  <name>hadoop.tmp.dir</name>
  <value>/usr/hadoop/hadoop-2.7.1</value>
</property>

Configure the DataNodes

[hadoop@hd1 hadoop]$ more slaves

hd2

hd3

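hd4

Note: masters (SNN), YARN and MR do not need to be configured. In an HA cluster the standby NN performs the checkpoints, so no SNN is run, and this setup covers HDFS only.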

Copy the configuration files to hd2, hd3 and hd4.

Configure ZooKeeper:

Unpack zookeeper-3.4.6.tar.gz

Configure conf/zoo.cfg:

dataDir=/usr/hadoop/zookeeper-3.4.6/tmp
server.1=hd1:2888:3888
server.2=hd2:2888:3888
server.3=hd3:2888:3888

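server.1, .2 and .3 are the ZooKeeper IDs. In each entry, 2888 is the port followers use to connect to the leader and 3888 is the leader-election port.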
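The server.N lines follow a fixed pattern, server.<id>=<host>:<peer port>:<election port>. A sketch that renders the lines shown above for the three ZK hosts, assuming the rest of zoo.cfg (tickTime, clientPort and so on) is already in place; zoo_cfg is a hypothetical helper:

```python
# Hypothetical helper: renders the dataDir and server.N lines of zoo.cfg.
# 2888 (follower-to-leader traffic) and 3888 (leader election) are the ports used in this post.


def zoo_cfg(data_dir, hosts, peer_port=2888, election_port=3888):
    """Return zoo.cfg lines; each host's ZooKeeper id is its 1-based position in hosts."""
    lines = [f"dataDir={data_dir}"]
    for zk_id, host in enumerate(hosts, start=1):
        lines.append(f"server.{zk_id}={host}:{peer_port}:{election_port}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(zoo_cfg("/usr/hadoop/zookeeper-3.4.6/tmp", ["hd1", "hd2", "hd3"]), end="")
```

Generating the list from one host order keeps the IDs consistent across the three copies of the file.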

mkdir -p /usr/hadoop/zookeeper-3.4.6/tmp

mkdir -p /home/hadoop/journal/node/local/data

vi /usr/hadoop/zookeeper-3.4.6/tmp/myid

[hadoop@hd1 tmp]$ more myid

1

Write 1 into /usr/hadoop/zookeeper-3.4.6/tmp/myid on hd1, 2 on hd2, 3 on hd3, and so on; each number must match that host's server.N entry in zoo.cfg.
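Since each host's myid must equal the N of its own server.N line, the value can be read back from zoo.cfg instead of being typed by hand. A sketch, assuming the zoo.cfg content shown above; myid_for_host and CFG are hypothetical:

```python
import re

CFG = """dataDir=/usr/hadoop/zookeeper-3.4.6/tmp
server.1=hd1:2888:3888
server.2=hd2:2888:3888
server.3=hd3:2888:3888
"""


def myid_for_host(zoo_cfg_text, host):
    """Return the id N from the server.N=host:... line that names this host."""
    for line in zoo_cfg_text.splitlines():
        match = re.fullmatch(r"\s*server\.(\d+)\s*=\s*([^:\s]+):.*", line)
        if match and match.group(2) == host:
            return int(match.group(1))
    raise KeyError(f"{host} has no server.N entry in zoo.cfg")


if __name__ == "__main__":
    for host in ("hd1", "hd2", "hd3"):
        # This number is what goes into <dataDir>/myid on that host.
        print(host, myid_for_host(CFG, host))
```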

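Copy ZooKeeper to nodes 2 and 3, then set myid on each of them as described above:

scp -r zookeeper-3.4.6/ hadoop@hd2:/usr/hadoop/

scp -r zookeeper-3.4.6/ hadoop@hd3:/usr/hadoop/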

Configure the JN PATH

Start ZK (it must be started on hd1, hd2 and hd3):

sh zkServer.sh start

JMX enabled by default

Using config: /usr/hadoop/zookeeper-3.4.6/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

[hadoop@hd1 bin]$ jps

1777 QuorumPeerMain

1795 Jps

Start the JNs (hd2, hd3, hd4):

[hadoop@hd4 ~]$ hadoop-daemon.sh start journalnode

starting journalnode, logging to /usr/hadoop/hadoop-2.7.1/logs/hadoop-hadoop-journalnode-hd4.out

[hadoop@hd4 ~]$ jps

1843 JournalNode

1879 Jps

Format the NN (run this on only one of the NNs, hd1 or hd2; the JNs started above need to be running):

[hadoop@hd1 sbin]$ hdfs namenode -format

18/10/07 05:54:30 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = hd1/192.168.83.11

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 2.7.1

........

18/10/07 05:54:34 INFO namenode.FSImage: Allocated new BlockPoolId: BP-841723191-192.168.83.11-1538862874971

18/10/07 05:54:34 INFO common.Storage: Storage directory /usr/hadoop/hadoop-2.7.1/dfs/name has been successfully formatted.

18/10/07 05:54:35 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

18/10/07 05:54:35 INFO util.ExitUtil: Exiting with status 0

18/10/07 05:54:35 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at hd1/192.168.83.11

************************************************************/
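The storage directory in the format log sits under hadoop.tmp.dir because this post does not set dfs.namenode.name.dir, whose default in hdfs-default.xml is file://${hadoop.tmp.dir}/dfs/name. A simplified sketch of that ${...} substitution (Hadoop's Configuration class also resolves system properties, which this ignores); expand and DEFAULTS are hypothetical names:

```python
import re

# hdfs-default.xml defaults (Hadoop 2.x) for the NameNode and DataNode storage
# directories; this post does not override them.
DEFAULTS = {
    "dfs.namenode.name.dir": "file://${hadoop.tmp.dir}/dfs/name",
    "dfs.datanode.data.dir": "file://${hadoop.tmp.dir}/dfs/data",
}


def expand(value, conf):
    """Replace each ${name} with conf[name]; unknown names are left untouched."""
    return re.sub(r"\$\{([^}]+)\}", lambda m: conf.get(m.group(1), m.group(0)), value)


if __name__ == "__main__":
    conf = {"hadoop.tmp.dir": "/usr/hadoop/hadoop-2.7.1"}
    for key, raw in DEFAULTS.items():
        print(f"{key} -> {expand(raw, conf)}")
```

Setting dfs.namenode.name.dir explicitly would move the metadata out of the Hadoop installation directory.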

The metadata files have been generated, but so far only on the node where the format was run, so they have to be copied to the other NN:
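Once the metadata has been copied, both NNs should carry the same namespace, cluster and block pool IDs, the values that hdfs namenode -bootstrapStandby prints later in this post. A hypothetical check that compares two current/VERSION files; the field names namespaceID, clusterID and blockpoolID are assumed to match the Hadoop 2.x VERSION layout, and SAMPLE reuses the IDs from that output:

```python
# Hypothetical check, not a Hadoop tool: compare the identity fields of two
# NameNode VERSION files (Java properties format, key=value).

IDENTITY_KEYS = ("namespaceID", "clusterID", "blockpoolID")


def read_version(text):
    """Parse the key=value lines of a current/VERSION file, skipping # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if line and not line.startswith("#") and "=" in line:
            key, value = line.split("=", 1)
            props[key] = value
    return props


def same_namespace(version_a, version_b):
    """True if both files carry the same, non-empty namespace, cluster and block pool IDs."""
    a, b = read_version(version_a), read_version(version_b)
    return all(a.get(key) and a.get(key) == b.get(key) for key in IDENTITY_KEYS)


SAMPLE = """namespaceID=1626081692
clusterID=CID-230e9e54-e6d1-4baf-a66a-39cc69368ed8
blockpoolID=BP-841723191-192.168.83.11-1538862874971
layoutVersion=-63
"""

if __name__ == "__main__":
    print(same_namespace(SAMPLE, SAMPLE))
```

To use it, read /usr/hadoop/hadoop-2.7.1/dfs/name/current/VERSION from hd1 and hd2 and pass the two texts in.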

ls /usr/hadoop/hadoop-2.7.1/dfs/name/current

total 16

-rw-rw-r-- 1 hadoop hadoop 353 Oct 7 05:54 fsimage_0000000000000000000

-rw-rw-r-- 1 hadoop hadoop 62 Oct 7 05:54 fsimage_0000000000000000000.md5

-rw-rw-r-- 1 hadoop hadoop 2 Oct 7 05:54 seen_txid

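-rw-rw-r-- 1 hadoop hadoop 205 Oct 7 05:54 VERSION

Copy the NN metadata (note: before copying, start the NN that was formatted, and only that one NN, because hdfs namenode -bootstrapStandby downloads the fsimage from it over HTTP):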


Start the formatted NN

[hadoop@hd1 current]$ hadoop-daemon.sh start namenode

starting namenode, logging to /usr/hadoop/hadoop-2.7.1/logs/hadoop-hadoop-namenode-hd1.out

[hadoop@hd1 current]$ jps

1777 QuorumPeerMain

2177 Jps

On the NN that was not formatted (hd2), run the metadata copy command:

[hadoop@hd2 ~]$ hdfs namenode -bootstrapStandby

18/10/07 06:07:15 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = localhost.localdomain/127.0.0.1

STARTUP_MSG: args = [-bootstrapStandby]

STARTUP_MSG: version = 2.7.1

......

************************************************************/

18/10/07 06:07:15 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

18/10/07 06:07:15 INFO namenode.NameNode: createNameNode [-bootstrapStandby]

18/10/07 06:07:16 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

=====================================================

About to bootstrap Standby ID nn2 from:

Nameservice ID: mycluster

Other Namenode ID: nn1

Other NN's HTTP address: http://hd1:50070

Other NN's IPC address: hd1/192.168.83.11:8020

Namespace ID: 1626081692

Block pool ID: BP-841723191-192.168.83.11-1538862874971

Cluster ID: CID-230e9e54-e6d1-4baf-a66a-39cc69368ed8

Layout version: -63

isUpgradeFinalized: true

=====================================================

18/10/07 06:07:17 INFO common.Storage: Storage directory /usr/hadoop/hadoop-2.7.1/dfs/name has been successfully formatted.

18/10/07 06:07:18 INFO namenode.TransferFsImage: Opening connection to http://hd1:50070/imagetransfer?getimage=1&txid=0&storageInfo=-63:1626081692:0:CID-230e9e54-e6d1-4baf-a66a-39cc69368ed8

18/10/07 06:07:18 INFO namenode.TransferFsImage: Image Transfer timeout configured to 60000 milliseconds

18/10/07 06:07:18 INFO namenode.TransferFsImage: Transfer took 0.01s at 0.00 KB/s

18/10/07 06:07:18 INFO namenode.TransferFsImage: Downloaded file fsimage.ckpt_0000000000000000000 size 353 bytes.

18/10/07 06:07:18 INFO util.ExitUtil: Exiting with status 0

18/10/07 06:07:18 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at localhost.localdomain/127.0.0.1

************************************************************/

Check whether the metadata now exists on hd2:

[hadoop@hd2 current]$ ls -l /usr/hadoop/hadoop-2.7.1/dfs/name/current

total 16

-rw-rw-r-- 1 hadoop hadoop 353 Oct 7 06:17 fsimage_0000000000000000000

-rw-rw-r-- 1 hadoop hadoop 62 Oct 7 06:17 fsimage_0000000000000000000.md5

-rw-rw-r-- 1 hadoop hadoop 2 Oct 7 06:17 seen_txid

-rw-rw-r-- 1 hadoop hadoop 205 Oct 7 06:17 VERSION

Start all services:

[hadoop@hd1 current]$ start-dfs.sh

18/10/07 06:30:07 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Starting namenodes on [hd1 hd2]

hd2: starting namenode, logging to /usr/hadoop/hadoop-2.7.1/logs/hadoop-hadoop-namenode-hd2.out

hd1: starting namenode, logging to /usr/hadoop/hadoop-2.7.1/logs/hadoop-hadoop-namenode-hd1.out

hd3: starting datanode, logging to /usr/hadoop/hadoop-2.7.1/logs/hadoop-hadoop-datanode-hd3.out

hd4: starting datanode, logging to /usr/hadoop/hadoop-2.7.1/logs/hadoop-hadoop-datanode-hd4.out

hd2: starting datanode, logging to /usr/hadoop/hadoop-2.7.1/logs/hadoop-hadoop-datanode-hd2.out

Starting journal nodes [hd2 hd3 hd4]

hd4: starting journalnode, logging to /usr/hadoop/hadoop-2.7.1/logs/hadoop-hadoop-journalnode-hd4.out

hd2: starting journalnode, logging to /usr/hadoop/hadoop-2.7.1/logs/hadoop-hadoop-journalnode-hd2.out

hd3: starting journalnode, logging to /usr/hadoop/hadoop-2.7.1/logs/hadoop-hadoop-journalnode-hd3.out

18/10/07 06:30:27 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Starting ZK Failover Controllers on NN hosts [hd1 hd2]

hd2: starting zkfc, logging to /usr/hadoop/hadoop-2.7.1/logs/hadoop-hadoop-zkfc-hd2.out

hd1: starting zkfc, logging to /usr/hadoop/hadoop-2.7.1/logs/hadoop-hadoop-zkfc-hd1.out

[hadoop@hd1 current]$ jps

1777 QuorumPeerMain

3202 NameNode

3511 DFSZKFailoverController

3548 Jps

hd2:

[hadoop@hd2 ~]$ jps

3216 NameNode

3617 Jps

3364 JournalNode

3285 DataNode

1915 QuorumPeerMain

On hd2 the zkfc did not start (there is no DFSZKFailoverController process), so check its log:

2018-10-07 06:30:38,441 ERROR org.apache.hadoop.ha.ActiveStandbyElector: Connection timed out: couldn't connect to ZooKeeper in 5000 milliseconds

2018-10-07 06:30:39,318 INFO org.apache.zookeeper.ZooKeeper: Session: 0x0 closed

2018-10-07 06:30:39,320 INFO org.apache.zookeeper.ClientCnxn: EventThread shut down

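2018-10-07 06:30:39,330 FATAL org.apache.hadoop.ha.ZKFailoverController: Unable to start failover controller. Unable to connect to ZooKeeper quorum at hd1:2181,hd2:2181,hd3:2181. Please check the configured value for ha.zookeeper.quorum and ensure that ZooKeeper is running.

The cause was that ZK had not been formatted. Format ZK from one of the NNs and restart (the official HA guide runs hdfs zkfc -formatZK once from an NN host, before zkfc is started for the first time).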

Go back to hd1, run stop-dfs.sh, then format ZK:

hdfs zkfc -formatZK
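Because the zkfc log reports a connection timeout, it can also be worth confirming from each NN host that every ZK endpoint in ha.zookeeper.quorum accepts TCP connections before restarting. A small standard-library sketch; parse_quorum and reachable are hypothetical helpers, and the quorum string is passed on the command line:

```python
import socket
import sys


def parse_quorum(value, default_port=2181):
    """Split ha.zookeeper.quorum ("host:port,host:port,...") into (host, port) pairs."""
    endpoints = []
    for item in value.split(","):
        item = item.strip()
        if not item:
            continue
        host, _, port = item.partition(":")
        endpoints.append((host, int(port) if port else default_port))
    return endpoints


def reachable(host, port, timeout=5.0):
    """True if a TCP connection to host:port opens within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts and unresolvable names
        return False


if __name__ == "__main__":
    # Pass the ha.zookeeper.quorum value, e.g. hd1:2181,hd2:2181,hd3:2181
    quorum = sys.argv[1] if len(sys.argv) > 1 else ""
    for host, port in parse_quorum(quorum):
        status = "ok" if reachable(host, port) else "UNREACHABLE"
        print(f"{host}:{port} {status}")
```

For example, save it as zk_probe.py (an arbitrary name) and run python3 zk_probe.py hd1:2181,hd2:2181,hd3:2181 on hd1 and on hd2.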

Source: oschina

Link: https://my.oschina.net/u/3862440/blog/2223203