Scheduling methods:

- Node selector: nodeSelector, nodeName

- Node affinity scheduling: nodeAffinity

- Pod affinity scheduling: podAffinity

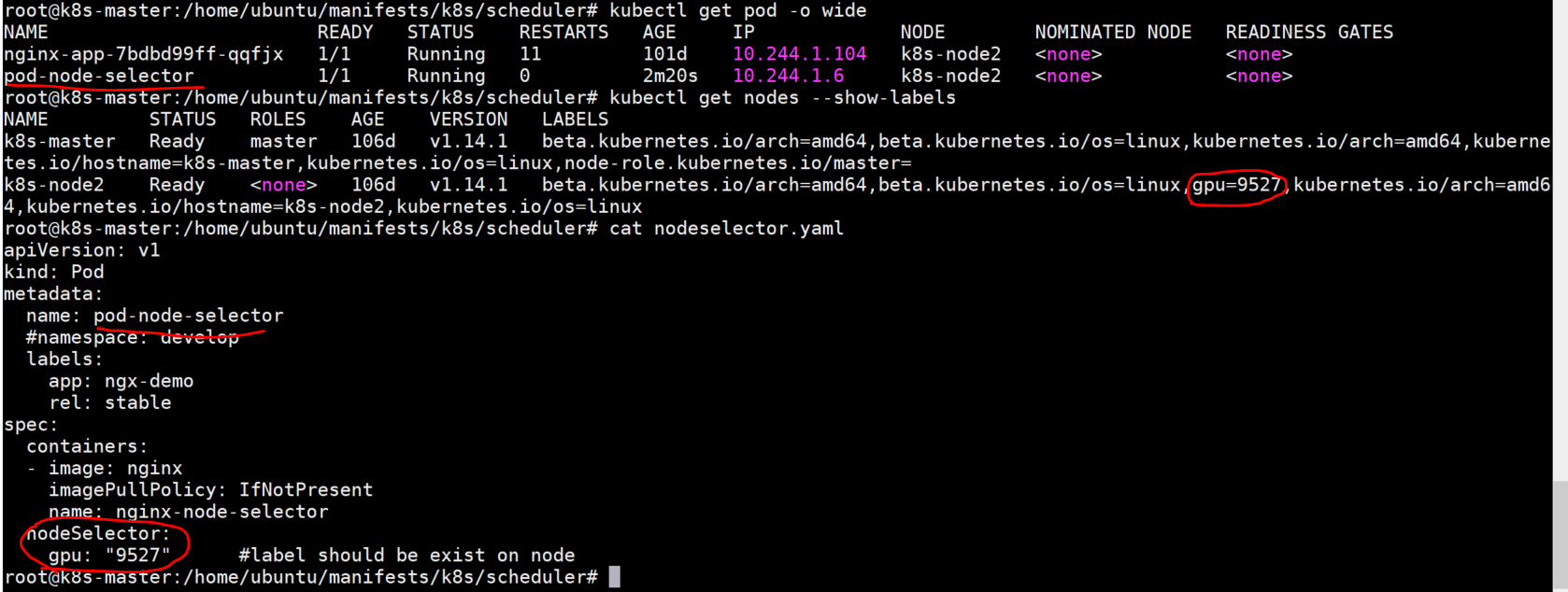

♦ kubectl get nodes --show-labels lists the labels present on each node

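I. Node selector

♦ The labels named in nodeSelector must exist on a node; only then is that node chosen to host the pod

In this example, the pod uses nodeSelector to filter for nodes that carry the label gup=9527, so the pod was created on ks-node2.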
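As a minimal sketch of the two node-selector fields, the manifest below pins one pod by label and another by node name. The pod and container names are hypothetical; the label gup=9527 and the node name ks-node2 are taken from the text above, and a real cluster would need that label applied to a node first.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-nodeselector-demo   # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
  nodeSelector:                 # every label listed here must be present on the node
    gup: "9527"                 # label values are strings, so the number is quoted
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-nodename-demo       # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
  nodeName: ks-node2            # binds the pod directly to this node, bypassing the scheduler
```

If no node carries the label, the first pod stays Pending; nodeName gives no such filtering and simply targets the named node.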

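II. Node affinity scheduling

♦ pod.spec.affinity.nodeAffinity.preferredDuringSchedulingIgnoredDuringExecution: soft affinity; the scheduler tries to find a node that meets the requirement, but the pod is still scheduled if none does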

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-node-preferaffinity
      #namespace: develop
      labels:
        app: ngx-demo
        rel: stable
    spec:
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: nginx-node-preferaffinity
      affinity:
        nodeAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - preference:
              matchExpressions:
              - key: gpu
                operator: In
                values:
                - "952"
            weight: 10

♦ pod.spec.affinity.nodeAffinity.requiredDuringSchedulingIgnoredDuringExecution: hard affinity; the requirement must be met

    root@k8s-master:/home/ubuntu/manifests/k8s/scheduler# cat nodeaffinity_require.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-node-requiredaffinity
      #namespace: develop
      labels:
        app: ngx-demo
        rel: stable
    spec:
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: nginx-node-requiredaffinity
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: gpu
                operator: In
                values:
                - "952"

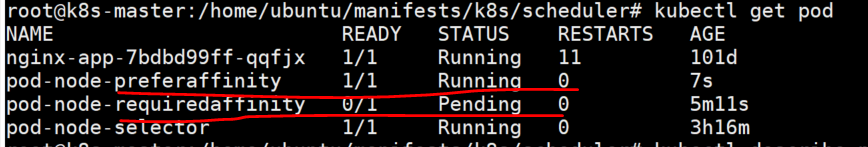

The required form uses the same matchExpressions to match labels, but the label requirement must be met; otherwise the pod stays in the Pending state.
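Both forms can also be combined on one pod. The sketch below is illustrative only: the pod name and the disktype label are hypothetical and do not come from the original notes. It requires a node that has a gpu label with any value (operator Exists) and, among those nodes, prefers ones labeled disktype=ssd.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-node-mixedaffinity      # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
  affinity:
    nodeAffinity:
      # Hard rule: nodes without a gpu label are filtered out entirely.
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: gpu
            operator: Exists        # Exists takes no values list
      # Soft rule: among the remaining nodes, score disktype=ssd higher.
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 50                  # valid range is 1-100
        preference:
          matchExpressions:
          - key: disktype           # hypothetical label
            operator: In
            values:
            - ssd
```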

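III. Pod affinity scheduling

♦ podAffinity needs a criterion for deciding which nodes count as affine; topologyKey determines whether two nodes are in the same location

♦ Like node affinity, pod affinity comes in two types, preferred and required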

♦ pod.spec.affinity.podAffinity.requiredDuringSchedulingIgnoredDuringExecution

    root@k8s-master:/home/ubuntu/manifests/k8s/scheduler# cat pod_affinity_require.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-first
      #namespace: develop
      labels:
        app: ngx-demo
        rel: stable
    spec:
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: nginx-1
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-podrequiredaffinity-second  # placed on the affine node, found via the labels of the first pod
      #namespace: develop
      labels:
        app: ngx-demo
        rel: stable
    spec:
      containers:
      - image: tomcat
        imagePullPolicy: IfNotPresent
        name: tomcat-podrequiredaffinity
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:  # matches the first pod's labels, so key/values are the label of the pods to co-locate with
              - key: app
                operator: In
                values:
                - "ngx-demo"
            topologyKey: kubernetes.io/hostname  # hostname decides "same location"; another custom label also works
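The topologyKey does not have to be the hostname. As a sketch, assuming the nodes carry the well-known topology.kubernetes.io/zone label (cloud providers usually set it; a self-managed cluster may not have it), the hypothetical pod below only has to land in the same zone as a pod labeled app=ngx-demo, not on the same node.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-zone-affinity-demo      # hypothetical name
spec:
  containers:
  - name: tomcat
    image: tomcat
    imagePullPolicy: IfNotPresent
  affinity:
    podAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: app
            operator: In
            values:
            - ngx-demo
        # Nodes sharing the same value for this label count as one "location".
        topologyKey: topology.kubernetes.io/zone
```

A coarser topologyKey widens the set of acceptable nodes: every node in the matching zone qualifies, which tends to make a required rule easier to satisfy.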

♦ pod.spec.affinity.podAffinity.preferredDuringSchedulingIgnoredDuringExecution

    root@k8s-master:/home/ubuntu/manifests/k8s/scheduler# cat pod_affinity_prefer.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-first
      #namespace: develop
      labels:
        app: ngx-demo
        rel: stable
    spec:
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: nginx-1
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-podrequiredaffinity-second
      #namespace: develop
      labels:
        app: ngx-demo
        rel: stable
    spec:
      containers:
      - image: tomcat
        imagePullPolicy: IfNotPresent
        name: tomcat-podrequiredaffinity
      affinity:
        podAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - podAffinityTerm:
              labelSelector:
                matchExpressions:
                - key: app
                  operator: In
                  values:
                  - "ngx-demo"
              topologyKey: kubernetes.io/hostname  # a custom label can be used instead
            weight: 60

♦ pod.spec.affinity.podAntiAffinity: anti-affinity; the matching pods are not placed together. Anti-affinity also comes in preferred and required types

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-first
      #namespace: develop
      labels:
        app: ngx-demo
        rel: stable
    spec:
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: nginx-1
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-podrequired-anti-affinity-second  # underscores are not allowed in pod names
      #namespace: develop
      labels:
        app: ngx-demo
        rel: stable
    spec:
      containers:
      - image: tomcat
        imagePullPolicy: IfNotPresent
        name: tomcat-podrequired-anti-affinity  # container names follow the same rule
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - "ngx-demo"
            topologyKey: kubernetes.io/hostname
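The preferred form of anti-affinity has the same shape as preferred pod affinity: each entry wraps the term in podAffinityTerm and adds a weight. The sketch below is illustrative, with hypothetical pod and container names; it asks the scheduler to avoid nodes that already run an app=ngx-demo pod, but still allows co-location when no other node is available.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-podpreferred-anti-affinity-second   # hypothetical name
spec:
  containers:
  - name: tomcat-podpreferred-anti-affinity     # hypothetical name
    image: tomcat
    imagePullPolicy: IfNotPresent
  affinity:
    podAntiAffinity:
      preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 60                              # valid range is 1-100
        podAffinityTerm:
          labelSelector:
            matchExpressions:
            - key: app
              operator: In
              values:
              - ngx-demo
          topologyKey: kubernetes.io/hostname
```

With the required form, the pod stays Pending when every node already hosts a matching pod; the preferred form trades that guarantee for schedulability.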

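IV. Taint-based scheduling

taint: a taint is a key/value attribute defined on a node

taintToleration: a toleration is a key/value attribute defined on a pod, given as a list (pod.spec.tolerations)

- Labels: can be defined on all resources

- Annotations: can be defined on all resources

- Taints: can only be defined on nodes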

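The effect of a taint defines how strongly it repels pods:

- NoSchedule: affects only scheduling; pods already on the node are not affected

- NoExecute: affects both scheduling and existing pods; pods that do not tolerate the taint are evicted

- PreferNoSchedule: the scheduler tries to avoid the node, but still uses it if the pod has nowhere else to run

♦ When a node carries taints, whether a pod can be scheduled onto it is decided as follows: first check which taints are matched by the pod's tolerations, then check the effect of each remaining untolerated taint:

If it is PreferNoSchedule, the pod can still be scheduled;

If it is NoSchedule, pods that are not yet scheduled cannot be scheduled onto the node, while pods already running there are unaffected;

If it is NoExecute, the pod cannot be scheduled onto the node at all, and pods that were already running before the taint was added are evicted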

♦ kubectl taint --help shows how to apply taints

    kubectl taint node k8s-node2 node-type=prod:NoSchedule   # taint k8s-node2 as a prod-environment node with effect NoSchedule
    kubectl taint node k8s-node2 node-type-                  # remove all taints whose key is node-type
    kubectl taint node k8s-node2 node-type:NoSchedule-       # remove the taints with key node-type and effect NoSchedule
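To run on a node tainted with node-type=prod:NoSchedule as above, a pod needs a matching toleration. The following is a sketch with a hypothetical pod name. The second toleration assumes a node-type=prod:NoExecute taint, which the commands above do not create; it is included only to show tolerationSeconds.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-toleration-demo     # hypothetical name
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
  tolerations:
  # Matches the taint node-type=prod:NoSchedule exactly (key, value and effect).
  - key: node-type
    operator: Equal
    value: prod
    effect: NoSchedule
  # Assumed taint node-type=prod:NoExecute: the pod may keep running for
  # 300 seconds after such a taint appears, then it is evicted.
  - key: node-type
    operator: Equal
    value: prod
    effect: NoExecute
    tolerationSeconds: 300
```

A toleration only permits scheduling onto the tainted node; it does not attract the pod there. Pinning the pod to that node would additionally need nodeSelector or node affinity.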

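Example: we first use anti-affinity so that the nginx and tomcat pods cannot be created on the same node, then give the tomcat pod a toleration that matches the taint on the k8s-master node (by default, ordinary pods cannot be scheduled onto the master node)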

    root@k8s-master:/home/ubuntu/manifests/k8s/scheduler# cat pod_toleration_master_taint.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-first
      #namespace: develop
      labels:
        app: ngx-demo
        rel: stable
    spec:
      containers:
      - image: nginx
        imagePullPolicy: IfNotPresent
        name: nginx-1
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-toleration-master-taint-second
      #namespace: develop
      labels:
        app: ngx-demo
        rel: stable
    spec:
      containers:
      - image: tomcat
        imagePullPolicy: IfNotPresent
        name: tomcat-toleration-master-taint
      tolerations:  # tolerate the NoSchedule taint on the master node
      - effect: NoSchedule  # an empty effect would tolerate every effect
        key: node-role.kubernetes.io/master  # newer clusters use node-role.kubernetes.io/control-plane instead
        operator: Exists
      affinity:
        podAntiAffinity:  # anti-affinity keeps the tomcat pod off the node that runs nginx
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - "ngx-demo"
            topologyKey: kubernetes.io/hostname

As a result, the tomcat pod can be scheduled onto the master node.