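Alertmanager receives the alerts that Prometheus sends. It supports a wide range of notification channels and makes it easy to deduplicate alerts, reduce noise, group them, and route them by policy, which makes it a capable, modern alert notification system.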

Installing Alertmanager

Install Go 1.11 (the tarball unpacks into a directory named go):

    $ wget https://studygolang.com/dl/golang/go1.11.linux-amd64.tar.gz
    $ tar zxvf go1.11.linux-amd64.tar.gz && mv go /opt/go

Add the following lines to /etc/profile:

    export GOROOT=/opt/go
    export PATH=$GOROOT/bin:$PATH
    export GOPATH=/opt/go-project
    export PATH=$PATH:$GOPATH/bin

Reload the profile and check the Go version:

    $ source /etc/profile
    $ go version

Install Alertmanager (alternatively, install it from a release tarball):

    $ git clone https://github.com/prometheus/alertmanager.git
    $ cd alertmanager/
    $ make build

Once the build succeeds, you can edit the alerting configuration file.

The configuration file is alertmanager.yml. The default looks like this:

    global:
      resolve_timeout: 2h

    route:
      group_by: ['alertname']
      group_wait: 5s
      group_interval: 10s
      repeat_interval: 1h
      receiver: 'webhook'

    receivers:
    - name: 'webhook'
      webhook_configs:   # send alerts through a webhook
      - url: 'http://example.com/xxxx'
        send_resolved: true
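The timing fields in this default configuration are easy to mix up, so here is the same global and route section again with a comment on each field. It is an annotated sketch of the values shown above, not a recommendation for production settings.

```yaml
global:
  # If an alert carries no end time and is not sent again within this
  # window, Alertmanager treats it as resolved.
  resolve_timeout: 2h

route:
  group_by: ['alertname']   # alerts sharing an alertname are batched into one notification
  group_wait: 5s            # how long to wait before the first notification for a new group
  group_interval: 10s       # how long to wait before notifying about alerts newly added to that group
  repeat_interval: 1h       # how long to wait before re-sending a notification that was already sent
  receiver: 'webhook'       # must match a name under receivers
```

Short values such as these are convenient while testing; longer group_wait and repeat_interval values usually mean fewer notifications.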

Change the configuration to send alerts by email:

    $ cat alertmanager.yml
    global:
      resolve_timeout: 5m
      smtp_smarthost: 'smtp.263.net:25'
      smtp_from: 'xxx@xxx.com'
      smtp_auth_username: 'xxx@xxx.com'
      smtp_auth_password: 'xxx'
      smtp_require_tls: false

    route:
      group_by: ['alertname']
      group_wait: 5m
      group_interval: 10s
      repeat_interval: 1h
      receiver: 'manager'
      routes:
      - match:
          severity: critical
        receiver: manager

    templates:
    - 'templates/wechat.tmpl'

    receivers:
    - name: 'manager'
      email_configs:
      - to: 'xxx@xxx.com'
        send_resolved: true

Notes:

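- global holds the sender (SMTP) settings, where:
  - smtp_require_tls: false turns off the TLS requirement for the SMTP connection
- route holds the alert routing settings, where:
  - repeat_interval: 1h sets how often a notification is re-sent while an alert keeps firing
  - receiver: 'manager' names the mail receiver; it must match a name under receivers below
- templates points to the notification template file, wechat.tmpl:

        {{ define "wechat.default.message" }}
        {{ range .Alerts }}
        Alert status: {{ .Status }}
        Alert type: {{ .Labels.alertname }}
        Affected host: {{ .Labels.instance }}
        Alert details: {{ .Annotations.description }}
        Triggered at: {{ .StartsAt.Format "2006-01-02 15:04:05" }}
        {{ end }}
        {{ end }}

  - the templates directory is one you create yourself
- receivers defines who gets notified, where:
  - name must match the receiver value set under route above
  - email_configs: the mail recipient settings
  - send_resolved: also send a mail once the problem is resolved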
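One thing the configuration above does not do is refer to the template by name: the file defines "wechat.default.message", while email_configs keeps its built-in default body. A minimal sketch of wiring the two together, assuming you want the mail body rendered from that template, could look like this. The html and headers fields are standard email_configs options; the subject line is only an illustrative choice.

```yaml
receivers:
- name: 'manager'
  email_configs:
  - to: 'xxx@xxx.com'
    send_resolved: true
    # Assumption: use the template defined in templates/wechat.tmpl as the mail body.
    html: '{{ template "wechat.default.message" . }}'
    headers:
      # Hypothetical subject line; alertname is available because route.group_by includes it.
      Subject: '[{{ .Status }}] {{ .GroupLabels.alertname }}'
```

Because this field is rendered as HTML, the template may need `<br>` tags or similar markup to keep its line breaks.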

For more detailed configuration options, see the project on GitHub.

Starting Alertmanager

    ./alertmanager --config.file=/opt/prometheus-2.5.0.linux-amd64/conf/alertmanager.yml   # this is our own config file; it can live anywhere

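Configuring Prometheus

For installing Prometheus, see the previous section.

Once Alertmanager is configured as above and starts without errors, move on to configuring Prometheus.

Edit the prometheus.yml configuration file. The two parts that matter are: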

    alerting:
      alertmanagers:
      - static_configs:
        - targets:
          - 10.10.10.12:9093

    # Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
    rule_files:
      - "rules/*"

Notes:

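- alerting: sets the Alertmanager address; more than one can be configured
- rule_files: points to the alerting rules to load. Here rules is a directory we created ourselves, at the same level as prometheus.yml, and it can hold multiple rule files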
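Since more than one Alertmanager address can be configured, here is a sketch of what that looks like. The second address is hypothetical and only stands in for another instance.

```yaml
alerting:
  alertmanagers:
  - static_configs:
    - targets:
      - 10.10.10.12:9093
      - 10.10.10.13:9093   # hypothetical second Alertmanager instance
```

Prometheus sends each alert to every target listed, so the instances would normally be run as a cluster if you want notifications deduplicated across them.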

An example rule file under rules looks like this:

    $ cat rules/node.yml
    groups:
    - name: node.rules
      rules:
      - alert: NodeDataDiskUsage
        expr: ceil((1 - (node_filesystem_avail_bytes / node_filesystem_size_bytes)) * 100) > 80
        for: 5m
        labels:
          severity: critical
        annotations:
          description: "{{$labels.instance}} data disk usage is above 80% current {{$value}}%"
      - alert: NodeMemoryUsage
        expr: ceil(((node_memory_MemTotal_bytes - node_memory_MemAvailable_bytes) / (node_memory_MemTotal_bytes) * 100)) > 80
        for: 5m
        labels:
          severity: critical
        annotations:
          summary: "{{$labels.instance}}: High memory usage detected"
          description: "{{$labels.instance}}: Memory usage is above 80% for 5m (current value is: {{ $value }} %)"
      - alert: NodeCpuLoad
        expr: node:cpu_load15
        for: 15s
        labels:
          severity: critical
        annotations:
          description: "{{$labels.instance}}: cpu load is {{ $value }}"
      - alert: NodeCpuUsage
        expr: ceil((avg(irate(node_cpu_seconds_total[5m])) * 100) / 5) > 80
        for: 5m
        labels:
          severity: critical
        annotations:
          description: "{{$labels.instance}}: High cpu usage is above 80% for 5m (current value is: {{$value}} %)"

Notes:

- Each expr value above can be pasted into the Prometheus expression browser and run directly to see the values it returns
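Two of the rules above deserve a closer look when you try their expressions. NodeCpuLoad uses node:cpu_load15, which looks like a recording rule that is not defined in this file, and it has no comparison, so it fires for every series the expression returns. NodeCpuUsage averages across all series without a `by` clause, so the instance label its description refers to is dropped. The following is a sketch of possible replacements, not the original author's rules: the group name, the recording rule definition, and the load threshold of 4 are all assumptions.

```yaml
groups:
- name: node.extra.rules   # hypothetical group name
  rules:
  # Assumption: node:cpu_load15 is meant to record node_exporter's node_load15.
  - record: node:cpu_load15
    expr: node_load15

  # NodeCpuLoad with an explicit threshold; 4 is a placeholder to tune per host.
  - alert: NodeCpuLoad
    expr: node:cpu_load15 > 4
    for: 15s
    labels:
      severity: critical
    annotations:
      description: "{{$labels.instance}}: cpu load is {{ $value }}"

  # NodeCpuUsage computed per instance as 100 minus the idle percentage,
  # so the instance label survives the aggregation.
  - alert: NodeCpuUsage
    expr: ceil(100 - avg by (instance) (irate(node_cpu_seconds_total{mode="idle"}[5m])) * 100) > 80
    for: 5m
    labels:
      severity: critical
    annotations:
      description: "{{$labels.instance}}: High cpu usage is above 80% for 5m (current value is: {{$value}} %)"
```

If you adopt these variants, remove the alerts with the same names from rules/node.yml so each alert is evaluated only once.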

修改完prometheus.yml启动并执行一条rule

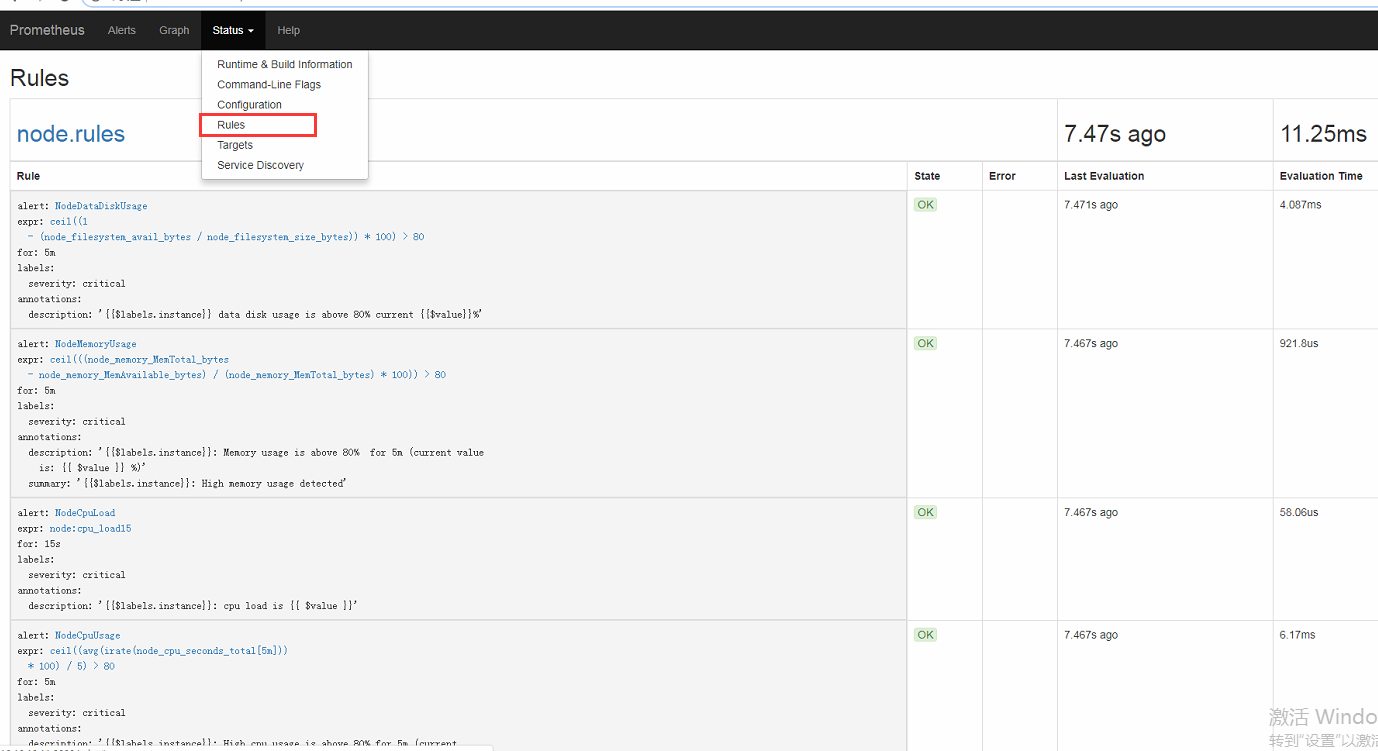

查看定义的规则

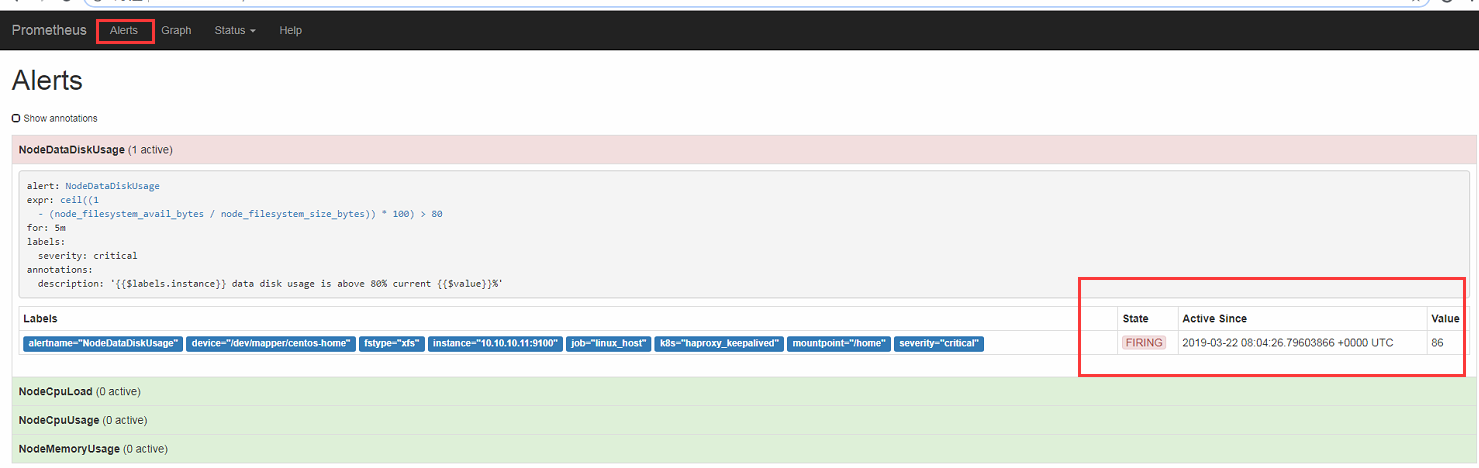

查看报警

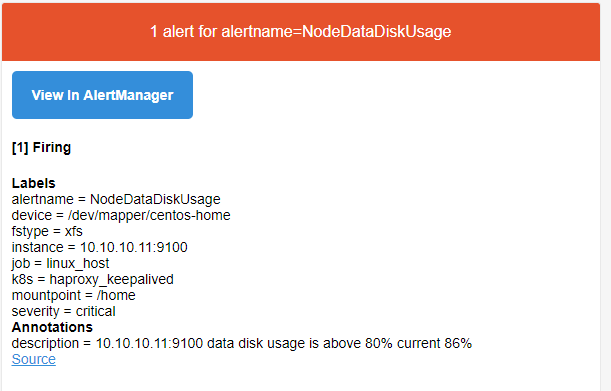

报警邮件

Source: oschina

Link: https://my.oschina.net/u/4353832/blog/3602097