Kubernetes Binary Installation

Environment preparation:

Host environment: map each hostname to its IP in the hosts file on every machine

Hardware: 2 CPUs, 2 GB RAM

192.168.30.21 k8s-master

192.168.30.22 k8s-node1

192.168.30.23 k8s-node2
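
The mapping above belongs in /etc/hosts on all three machines. A minimal sketch of adding it idempotently follows; the helper name add_host and the HOSTS_FILE variable are my own, not part of the original steps, and the default is a scratch file so nothing is modified until you point it at /etc/hosts as root.

```shell
# Hypothetical helper: append "IP NAME" to a hosts file unless NAME is already mapped.
# Assumption: HOSTS_FILE defaults to a scratch file; set HOSTS_FILE=/etc/hosts for real use.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host() {
  # $1 = IP address, $2 = hostname
  # grep -w matches whole words, so k8s-node1 does not match k8s-node10
  if ! grep -qw -- "$2" "$HOSTS_FILE" 2>/dev/null; then
    printf '%s %s\n' "$1" "$2" >> "$HOSTS_FILE"
  fi
}

add_host 192.168.30.21 k8s-master
add_host 192.168.30.22 k8s-node1
add_host 192.168.30.23 k8s-node2
cat "$HOSTS_FILE"
```

Running the three add_host calls a second time leaves the file unchanged, which makes the step safe to repeat on each machine.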

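Disable the firewall and SELinux

Disable the firewall: systemctl stop firewalld

systemctl disable firewalld

iptables -F

Disable SELinux permanently (takes effect after a reboot): $ sed -i 's/enforcing/disabled/' /etc/selinux/config

Temporarily, until the next reboot: $ setenforce 0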
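
The sed expression above replaces the first "enforcing" on every line of the file, comment lines included. The sketch below tries an anchored variant on a scratch file so that only the SELINUX= setting changes; the sample content is an abbreviated imitation of /etc/selinux/config, not a verbatim copy.

```shell
# Work on a scratch file instead of /etc/selinux/config.
cfg="$(mktemp)"
cat > "$cfg" <<'EOF'
# enforcing - SELinux security policy is enforced.
SELINUX=enforcing
SELINUXTYPE=targeted
EOF

# Anchor the pattern at the start of the line so comments stay untouched.
sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' "$cfg"
cat "$cfg"
```

Either form disables SELinux after a reboot; the anchored one just keeps the explanatory comments in the file intact.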

1. Install docker-ce on every machine

This is the installation procedure for CentOS 7

Install the dependencies

$ sudo yum install -y yum-utils \

device-mapper-persistent-data \

lvm2

Add the Docker package repository

$ sudo yum-config-manager \

--add-repo \

https://download.docker.com/linux/centos/docker-ce.repo

Install docker-ce

$ sudo yum install docker-ce

Start Docker

$ sudo systemctl start docker

The default registry is hosted overseas and pulls are slow, so configuring a domestic registry mirror is recommended

# vim /etc/docker/daemon.json

{

"registry-mirrors": [ "https://registry.docker-cn.com" ]

}

$ systemctl enable docker
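
Docker was already started before daemon.json was edited, so the mirror is not picked up until the daemon is restarted. A small sketch of writing the file and checking its syntax; the DAEMON_JSON variable and the scratch-file default are my assumptions, and the python3 check is optional.

```shell
# Assumption: written to a scratch path; the real location is /etc/docker/daemon.json.
DAEMON_JSON="${DAEMON_JSON:-$(mktemp)}"
cat > "$DAEMON_JSON" <<'EOF'
{
  "registry-mirrors": ["https://registry.docker-cn.com"]
}
EOF

# Optional syntax check, only if python3 happens to be installed.
if command -v python3 >/dev/null 2>&1; then
  python3 -m json.tool "$DAEMON_JSON" >/dev/null && echo "daemon.json: valid JSON"
fi

# Once the file is in place, restart Docker so the mirror takes effect:
#   sudo systemctl restart docker
```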

2. Self-signed TLS certificates

Run on the master

[root@k8s-master ~]# mkdir ssl

[root@k8s-master ~]# cd ssl/

[root@k8s-master ssl]# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

[root@k8s-master ssl]# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

[root@k8s-master ssl]# wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

[root@k8s-master ssl]# chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

[root@k8s-master ssl]# mv cfssl_linux-amd64 /usr/local/bin/cfssl

[root@k8s-master ssl]# mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

[root@k8s-master ssl]# mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

[root@k8s-master ssl]# cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"expiry": "87600h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

EOF

[root@k8s-master ssl]# cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "Beijing",

"ST": "Beijing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

[root@k8s-master ssl]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca -

[root@k8s-master ssl]# ls ca*

ca-config.json ca.csr ca-csr.json ca-key.pem ca.pem

[root@k8s-master ssl]# cat > server-csr.json <<EOF

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"192.168.30.21",

"192.168.30.22",

"192.168.30.23",

"10.10.10.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

[root@k8s-master ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes server-csr.json | cfssljson -bare server

[root@k8s-master ssl]# cat > admin-csr.json <<EOF

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "system:masters",

"OU": "System"

}

]

}

EOF

[root@k8s-master ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

[root@k8s-master ssl]# cat > kube-proxy-csr.json <<EOF

{

"CN": "system:kube-proxy",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "BeiJing",

"ST": "BeiJing",

"O": "k8s",

"OU": "System"

}

]

}

EOF

[root@k8s-master ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

[root@k8s-master ssl]# ls |grep -v pem |xargs -i rm {}

[root@k8s-master ssl]# ls

admin-key.pem ca-key.pem kube-proxy-key.pem server-key.pem

admin.pem ca.pem kube-proxy.pem server.pem
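
Before distributing the certificates it can be worth checking that each one chains to ca.pem. The sketch below uses plain openssl; the helper name check_cert is mine, and the loop only runs where ca.pem exists, that is, inside the ssl directory on the master.

```shell
# Hypothetical helper: verify one certificate against the CA, then print subject and expiry.
check_cert() {
  openssl verify -CAfile ca.pem "$1" &&
    openssl x509 -in "$1" -noout -subject -enddate
}

# Only runs where the files exist (the ssl directory on the master).
if [ -f ca.pem ]; then
  for cert in server.pem admin.pem kube-proxy.pem; do
    check_cert "$cert"
  done
else
  echo "ca.pem not found; run this from the ssl directory"
fi
```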

3. Deploy the etcd cluster

Binary package download: https://github.com/coreos/etcd/releases/tag/v3.2.12

[root@k8s-master ~]# ls

etcd.sh etcd-v3.2.12-linux-amd64.tar.gz

[root@k8s-master ~]# tar zxvf etcd-v3.2.12-linux-amd64.tar.gz

[root@k8s-master ~]# mkdir /opt/kubernetes

[root@k8s-master ~]# mkdir /opt/kubernetes/{bin,cfg,ssl}

[root@k8s-master ~]# mv etcd-v3.2.12-linux-amd64/etcd /opt/kubernetes/bin

[root@k8s-master ~]# mv etcd-v3.2.12-linux-amd64/etcdctl /opt/kubernetes/bin

[root@k8s-master ~]# vim /opt/kubernetes/cfg/etcd

#[Member]

ETCD_NAME="etcd01"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.30.21:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.30.21:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.30.21:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.30.21:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.30.21:2380,etcd02=https://192.168.30.22:2380,etcd03=https://192.168.30.23:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

[root@k8s-master ~]# vim /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=-/opt/kubernetes/cfg/etcd

ExecStart=/opt/kubernetes/bin/etcd \

--name=${ETCD_NAME} \

--data-dir=${ETCD_DATA_DIR} \

--listen-peer-urls=${ETCD_LISTEN_PEER_URLS} \

--listen-client-urls=${ETCD_LISTEN_CLIENT_URLS},http://127.0.0.1:2379 \

--advertise-client-urls=${ETCD_ADVERTISE_CLIENT_URLS} \

--initial-advertise-peer-urls=${ETCD_INITIAL_ADVERTISE_PEER_URLS} \

--initial-cluster=${ETCD_INITIAL_CLUSTER} \

--initial-cluster-token=${ETCD_INITIAL_CLUSTER_TOKEN} \

--initial-cluster-state=new \

--cert-file=/opt/kubernetes/ssl/server.pem \

--key-file=/opt/kubernetes/ssl/server-key.pem \

--peer-cert-file=/opt/kubernetes/ssl/server.pem \

--peer-key-file=/opt/kubernetes/ssl/server-key.pem \

--trusted-ca-file=/opt/kubernetes/ssl/ca.pem \

--peer-trusted-ca-file=/opt/kubernetes/ssl/ca.pem

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

[root@k8s-master ~]# cp ssl/server*pem ssl/ca*.pem /opt/kubernetes/ssl/

A problem shows up at startup whose cause I have not tracked down yet, but it does not affect usage

[root@k8s-master ~]# systemctl start etcd

[root@k8s-master ~]# systemctl enable etcd

[root@k8s-master ~]# ps -ef |grep etcd

Set up SSH key trust

[root@k8s-master ~]# ssh-keygen

[root@k8s-master ~]# ls /root/.ssh

id_rsa id_rsa.pub

[root@k8s-master ~]# ssh-copy-id root@192.168.30.22

[root@k8s-master ~]# ssh-copy-id root@192.168.30.23

Run on the nodes

[root@k8s-node1 ~]# mkdir /opt/kubernetes

[root@k8s-node1 ~]# mkdir /opt/kubernetes/{bin,cfg,ssl}

[root@k8s-node2 ~]# mkdir /opt/kubernetes

[root@k8s-node2 ~]# mkdir /opt/kubernetes/{bin,cfg,ssl}

On the master, copy the bin, ssl, and cfg directories and the etcd unit file to the other nodes

[root@k8s-master ~]# scp -r /opt/kubernetes/bin/ root@192.168.30.22:/opt/kubernetes/

[root@k8s-master ~]# scp -r /opt/kubernetes/bin/ root@192.168.30.23:/opt/kubernetes/

[root@k8s-master ~]# scp -r /opt/kubernetes/ssl/ root@192.168.30.22:/opt/kubernetes/

[root@k8s-master ~]# scp -r /opt/kubernetes/ssl/ root@192.168.30.23:/opt/kubernetes/

[root@k8s-master ~]# scp -r /opt/kubernetes/cfg/ root@192.168.30.22:/opt/kubernetes/

[root@k8s-master ~]# scp -r /opt/kubernetes/cfg/ root@192.168.30.23:/opt/kubernetes/

[root@k8s-master ~]# scp /usr/lib/systemd/system/etcd.service root@192.168.30.22:/usr/lib/systemd/system/etcd.service

[root@k8s-master ~]# scp /usr/lib/systemd/system/etcd.service root@192.168.30.23:/usr/lib/systemd/system/etcd.service

On node1, edit the configuration file and start etcd

[root@k8s-node1 ~]# vim /opt/kubernetes/cfg/etcd

#[Member]

ETCD_NAME="etcd02"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.30.22:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.30.22:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.30.22:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.30.22:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.30.21:2380,etcd02=https://192.168.30.22:2380,etcd03=https://192.168.30.23:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

[root@k8s-node1 kubernetes]# systemctl start etcd

[root@k8s-node1 kubernetes]# systemctl enable etcd

On node2, edit the configuration file and start etcd as well

[root@k8s-node2 ~]# vim /opt/kubernetes/cfg/etcd

#[Member]

ETCD_NAME="etcd03"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://192.168.30.23:2380"

ETCD_LISTEN_CLIENT_URLS="https://192.168.30.23:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://192.168.30.23:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://192.168.30.23:2379"

ETCD_INITIAL_CLUSTER="etcd01=https://192.168.30.21:2380,etcd02=https://192.168.30.22:2380,etcd03=https://192.168.30.23:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

[root@k8s-node2 ~]# systemctl start etcd

[root@k8s-node2 ~]# systemctl enable etcd
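
The three etcd configuration files differ only in ETCD_NAME and the member's own IP. As a sketch, they can be rendered from one template instead of being edited by hand on each node; the function name render_etcd_conf is hypothetical, and the values are the ones used in this guide.

```shell
# Cluster membership is identical on every member.
CLUSTER="etcd01=https://192.168.30.21:2380,etcd02=https://192.168.30.22:2380,etcd03=https://192.168.30.23:2380"

# Hypothetical helper: print the etcd config for one member.
# $1 = member name, $2 = member IP
render_etcd_conf() {
  cat <<EOF
#[Member]
ETCD_NAME="$1"
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
ETCD_LISTEN_PEER_URLS="https://$2:2380"
ETCD_LISTEN_CLIENT_URLS="https://$2:2379"

#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://$2:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://$2:2379"
ETCD_INITIAL_CLUSTER="${CLUSTER}"
ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"
ETCD_INITIAL_CLUSTER_STATE="new"
EOF
}

# Print the file for node1; redirect to /opt/kubernetes/cfg/etcd on that node.
render_etcd_conf etcd02 192.168.30.22
```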

On the master, add the binaries to PATH so they are easier to run

[root@k8s-master ~]# vim /etc/profile

PATH=$PATH:/opt/kubernetes/bin

[root@k8s-master ~]# source /etc/profile

[root@k8s-master ssl]# etcd

etcd etcdctl

[root@k8s-master ssl]# etcdctl \

--ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem \

--endpoints="https://192.168.30.21:2379,https://192.168.30.22:2379,https://192.168.30.23:2379" \

cluster-health

member 4bbac693a1c7e1c is healthy: got healthy result from https://192.168.30.22:2379

member ea6022d7dbd6646 is healthy: got healthy result from https://192.168.30.21:2379

member e3bbc087ad4ec1b5 is healthy: got healthy result from https://192.168.30.23:2379

4. Deploy the Flannel container cluster network

[root@k8s-master ~]# ls

flannel-v0.9.1-linux-amd64.tar.gz

[root@k8s-master ~]# tar zxvf flannel-v0.9.1-linux-amd64.tar.gz

[root@k8s-master ~]# scp flanneld mk-docker-opts.sh root@192.168.30.22:/opt/kubernetes/bin

[root@k8s-master ~]# scp flanneld mk-docker-opts.sh root@192.168.30.23:/opt/kubernetes/bin

Configure the certificates on the nodes

[root@k8s-node1 ~]# cd /opt/kubernetes/cfg

[root@k8s-node1 cfg]# vim flanneld.sh

FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.30.21:2379,https://192.168.30.22:2379,https://192.168.30.23:2379 -etcd-cafile=/opt/kubernetes/ssl/ca.pem -etcd-certfile=/opt/kubernetes/ssl/server.pem -etcd-keyfile=/opt/kubernetes/ssl/server-key.pem"

[root@k8s-node1 kubernetes]# cat <<EOF >/usr/lib/systemd/system/docker.service

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

After=network-online.target firewalld.service

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/run/flannel/subnet.env

ExecStart=/usr/bin/dockerd \$DOCKER_NETWORK_OPTIONS

ExecReload=/bin/kill -s HUP \$MAINPID

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

TimeoutStartSec=0

Delegate=yes

KillMode=process

Restart=on-failure

StartLimitBurst=3

StartLimitInterval=60s

[Install]

WantedBy=multi-user.target

EOF

Generate the /usr/lib/systemd/system/flanneld.service unit file to manage flanneld; the content has been adjusted with my own settings

[root@k8s-node1 ~]# cat <<EOF >/usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld.sh

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq \$FLANNEL_OPTIONS

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

The generated file can be inspected as follows

[root@k8s-node1 ~]# vim /usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld.sh

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq $FLANNEL_OPTIONS

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

[root@k8s-master ~]# cd ssl

[root@k8s-master ssl]# /opt/kubernetes/bin/etcdctl \

--ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem \

--endpoints="https://192.168.30.21:2379,https://192.168.30.22:2379,https://192.168.30.23:2379" \

set /coreos.com/network/config '{ "Network": "172.17.0.0/16", "Backend": {"Type": "vxlan"}}'
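
flanneld reads its network settings from this etcd key, so the value has to be valid JSON and has to be written before flanneld starts. The sketch below wraps the TLS flags used throughout this guide and builds the value from two arguments; etcdctl_tls and flannel_config are hypothetical helper names, and the etcdctl calls are left as comments because they need the running cluster.

```shell
ENDPOINTS="https://192.168.30.21:2379,https://192.168.30.22:2379,https://192.168.30.23:2379"

# Wrap the TLS flags used in this guide (run from the ssl directory on the master).
etcdctl_tls() {
  /opt/kubernetes/bin/etcdctl \
    --ca-file=ca.pem --cert-file=server.pem --key-file=server-key.pem \
    --endpoints="$ENDPOINTS" "$@"
}

# Hypothetical helper: build the flannel network config.
# $1 = overlay network CIDR, $2 = backend type
flannel_config() {
  printf '{ "Network": "%s", "Backend": {"Type": "%s"}}' "$1" "$2"
}

# Show the value that would be stored.
flannel_config 172.17.0.0/16 vxlan; echo

# On the master, with etcd running:
#   etcdctl_tls set /coreos.com/network/config "$(flannel_config 172.17.0.0/16 vxlan)"
#   etcdctl_tls get /coreos.com/network/config
```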

[root@k8s-node1 ~]# systemctl start flanneld

[root@k8s-node1 ~]# ip a

6: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether 8e:8e:2d:30:e6:65 brd ff:ff:ff:ff:ff:ff

inet 172.17.50.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::8c8e:2dff:fe30:e665/64 scope link

valid_lft forever preferred_lft forever

Check whether flanneld has been assigned a subnet

[root@k8s-node1 ~]# cat /run/flannel/subnet.env

DOCKER_OPT_BIP="--bip=172.17.37.1/24"

DOCKER_OPT_IPMASQ="--ip-masq=false"

DOCKER_OPT_MTU="--mtu=1450"

DOCKER_NETWORK_OPTIONS=" --bip=172.17.37.1/24 --ip-masq=false --mtu=1450"
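
Docker only joins the flannel network if docker0 ends up with the address that flanneld wrote into subnet.env. A sketch for comparing the two; the helper name bip_from_env and the SUBNET_ENV variable are my own additions.

```shell
# Hypothetical helper: extract the --bip value that flanneld wrote for Docker.
# The line looks like: DOCKER_OPT_BIP="--bip=172.17.37.1/24"
bip_from_env() {
  sed -n 's/^DOCKER_OPT_BIP="--bip=\(.*\)"$/\1/p' "$1"
}

SUBNET_ENV="${SUBNET_ENV:-/run/flannel/subnet.env}"
if [ -r "$SUBNET_ENV" ]; then
  echo "flannel expects docker0 at: $(bip_from_env "$SUBNET_ENV")"
  # Show the address actually configured on docker0 for comparison.
  ip -4 addr show docker0 2>/dev/null | grep inet || true
fi
```

If the two addresses differ, Docker was most likely not restarted after flanneld came up.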

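Restart Docker so that docker0 and flanneld end up on the same network (reload systemd first, because docker.service was rewritten)

[root@k8s-node1 ~]# systemctl daemon-reload

[root@k8s-node1 ~]# systemctl restart docker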

[root@k8s-node1 ~]# ip a

5: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:30:4b:3b:a5 brd ff:ff:ff:ff:ff:ff

inet 172.17.50.1/24 brd 172.17.50.255 scope global docker0

valid_lft forever preferred_lft forever

6: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether 8e:8e:2d:30:e6:65 brd ff:ff:ff:ff:ff:ff

inet 172.17.50.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::8c8e:2dff:fe30:e665/64 scope link

valid_lft forever preferred_lft forever

Repeat the steps on the other node, node2

[root@k8s-node2 ~]# vim /usr/lib/systemd/system/flanneld.service

[Unit]

Description=Flanneld overlay address etcd agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=/opt/kubernetes/cfg/flanneld.sh

ExecStart=/opt/kubernetes/bin/flanneld --ip-masq $FLANNEL_OPTIONS

ExecStartPost=/opt/kubernetes/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

[root@k8s-node2 ~]# vim /opt/kubernetes/cfg/flanneld.sh

FLANNEL_OPTIONS="--etcd-endpoints=https://192.168.30.21:2379,https://192.168.30.22:2379,https://192.168.30.23:2379 -etcd-cafile=/opt/kubernetes/ssl/ca.pem -etcd-certfile=/opt/kubernetes/ssl/server.pem -etcd-keyfile=/opt/kubernetes/ssl/server-key.pem"

[root@k8s-node2 ~]# systemctl start flanneld

Add the flannel-related lines (EnvironmentFile and $DOCKER_NETWORK_OPTIONS) to the [Service] section

[root@k8s-node2 ~]# vim /usr/lib/systemd/system/docker.service

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

EnvironmentFile=/run/flannel/subnet.env

ExecStart=/usr/bin/dockerd $DOCKER_NETWORK_OPTIONS

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

[root@k8s-node2 ~]# systemctl restart docker

[root@k8s-node2 ~]# ip a

5: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:1d:bb:ae:ea brd ff:ff:ff:ff:ff:ff

inet 172.17.62.1/24 brd 172.17.62.255 scope global docker0

valid_lft forever preferred_lft forever

6: flannel.1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1450 qdisc noqueue state UNKNOWN group default

link/ether 1e:c0:d4:c4:8f:94 brd ff:ff:ff:ff:ff:ff

inet 172.17.62.0/32 scope global flannel.1

valid_lft forever preferred_lft forever

inet6 fe80::1cc0:d4ff:fec4:8f94/64 scope link

valid_lft forever preferred_lft forever

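5. Create the kubeconfig files for the nodes

The kubeconfig files are what kube-proxy and kubelet on the nodes use to authenticate when they communicate with the cluster

Create the TLS bootstrapping token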

[root@k8s-master ssl]# export BOOTSTRAP_TOKEN=$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')

[root@k8s-master ssl]# cat > token.csv <<EOF

${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF

[root@k8s-master ssl]# ls

token.csv

[root@k8s-master ssl]# cat token.csv

ebc5356f3dad6811915e725cdec16b39,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
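
Each line of token.csv has four columns: token, user name, user ID, and a quoted group list. A short sketch that generates a token with the same recipe as above and checks that the resulting line has that shape; the function name token_line_ok is hypothetical.

```shell
# Same recipe as above: 16 random bytes rendered as 32 hex characters.
BOOTSTRAP_TOKEN="$(head -c 16 /dev/urandom | od -An -t x | tr -d ' ')"

# Hypothetical check: the line must look like token,user,uid,"group".
token_line_ok() {
  echo "$1" | grep -Eq '^[0-9a-f]{32},[^,]+,[0-9]+,"[^"]+"$'
}

line="${BOOTSTRAP_TOKEN},kubelet-bootstrap,10001,\"system:kubelet-bootstrap\""
if token_line_ok "$line"; then
  echo "token.csv line looks well-formed"
else
  echo "token.csv line is malformed: $line"
fi
```

The same token must appear in bootstrap.kubeconfig, so BOOTSTRAP_TOKEN has to stay exported in the shell that later runs kubectl config set-credentials.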

Create the kubelet bootstrapping kubeconfig

[root@k8s-master ~]# export KUBE_APISERVER="https://192.168.30.21:6443"

[root@k8s-master ~]# cd /opt/kubernetes/bin

[root@k8s-master bin]# rz -E

rz waiting to receive.

[root@k8s-master bin]# ls

etcd etcdctl kubectl

[root@k8s-master bin]# chmod +x kubectl

[root@k8s-master bin]# ls

etcd etcdctl kubectl

[root@k8s-master ~]# cd ssl

Set the cluster parameters

[root@k8s-master ssl]# kubectl config set-cluster kubernetes \

--certificate-authority=./ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=bootstrap.kubeconfig

Set the client credentials

[root@k8s-master ssl]# kubectl config set-credentials kubelet-bootstrap \

--token=${BOOTSTRAP_TOKEN} \

--kubeconfig=bootstrap.kubeconfig

Inspect the kubeconfig: the token and the API server address have been added

[root@k8s-master ssl]# cat bootstrap.kubeconfig

server: https://192.168.30.21:6443

name: kubernetes

contexts: []

current-context: ""

kind: Config

preferences: {}

users:

- name: kubelet-bootstrap

user:

as-user-extra: {}

token: 02d9dadadf07946c59811771b41a2796

Set the context parameters

[root@k8s-master ssl]# kubectl config set-context default \

--cluster=kubernetes \

--user=kubelet-bootstrap \

--kubeconfig=bootstrap.kubeconfig

Set the default context

[root@k8s-master ssl]# kubectl config use-context default --kubeconfig=bootstrap.kubeconfig

Create the kube-proxy kubeconfig file

[root@k8s-master ssl]# kubectl config set-cluster kubernetes \

--certificate-authority=./ca.pem \

--embed-certs=true \

--server=${KUBE_APISERVER} \

--kubeconfig=kube-proxy.kubeconfig

Configure the client certificate

[root@k8s-master ssl]# kubectl config set-credentials kube-proxy \

--client-certificate=./kube-proxy.pem \

--client-key=./kube-proxy-key.pem \

--embed-certs=true \

--kubeconfig=kube-proxy.kubeconfig

Set the context

[root@k8s-master ssl]# kubectl config set-context default \

--cluster=kubernetes \

--user=kube-proxy \

--kubeconfig=kube-proxy.kubeconfig

Set the default context

[root@k8s-master ssl]# kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

Inspect the kubeconfig

[root@k8s-master ssl]# cat kube-proxy.kubeconfig

server: https://192.168.30.21:6443

name: kubernetes

contexts:

- context:

cluster: kubernetes

user: kube-proxy

name: default

current-context: default

kind: Config

preferences: {}

users:

- name: kube-proxy

user:

as-user-extra: {}
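
Both kubeconfig files are built with the same four kubectl config steps; only the user name and the credential flags differ. As a sketch, the steps can be folded into one function. The name make_kubeconfig is hypothetical, and it assumes KUBE_APISERVER is exported and the current directory is the ssl directory.

```shell
# Hypothetical wrapper around the four kubectl config steps shown above.
# $1 = kubeconfig file, $2 = user name, remaining arguments = credential flags
make_kubeconfig() {
  file="$1"; user="$2"; shift 2
  kubectl config set-cluster kubernetes \
    --certificate-authority=./ca.pem --embed-certs=true \
    --server="${KUBE_APISERVER}" --kubeconfig="$file" &&
  kubectl config set-credentials "$user" "$@" --kubeconfig="$file" &&
  kubectl config set-context default \
    --cluster=kubernetes --user="$user" --kubeconfig="$file" &&
  kubectl config use-context default --kubeconfig="$file"
}

# Usage on the master, inside the ssl directory:
#   make_kubeconfig bootstrap.kubeconfig kubelet-bootstrap --token="${BOOTSTRAP_TOKEN}"
#   make_kubeconfig kube-proxy.kubeconfig kube-proxy \
#     --client-certificate=./kube-proxy.pem --client-key=./kube-proxy-key.pem --embed-certs=true
```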

6. Deploy the master components

Download the Kubernetes binary package

https://github.com/kubernetes/kubernetes/blob/master/CHANGELOG-1.15.md#v1150

[root@k8s-master ~]# mkdir master_pkg

Upload the zip archive

[root@k8s-master ~]# mv master.zip master_pkg/

[root@k8s-master ~]# cd master_pkg/

[root@k8s-master master_pkg]# ls

master.zip

[root@k8s-master master_pkg]# unzip master.zip

[root@k8s-master master_pkg]# ls

apiserver.sh kube-apiserver kubectl master.zip

controller-manager.sh kube-controller-manager kube-scheduler scheduler.sh

[root@k8s-master master_pkg]# mv kube-controller-manager kube-scheduler kube-apiserver /opt/kubernetes/bin

[root@k8s-master master_pkg]# chmod +x /opt/kubernetes/bin/*

[root@k8s-master master_pkg]# chmod +x *.sh

[root@k8s-master master_pkg]# ./apiserver.sh 192.168.30.21 https://192.168.30.21:2379,https://192.168.30.22:2379,https://192.168.30.23:2379

[root@k8s-master master_pkg]# cp /root/ssl/token.csv /opt/kubernetes/cfg

[root@k8s-master cfg]# systemctl restart kube-apiserver.service

[root@k8s-master master_pkg]# ./apiserver.sh 192.168.30.21 https://192.168.30.21:2379,https://192.168.30.22:2379,https://192.168.30.23:2379

[root@k8s-master master_pkg]# ./controller-manager.sh 127.0.0.1

[root@k8s-master master_pkg]# ./scheduler.sh 127.0.0.1

[root@k8s-master ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

etcd-0 Healthy {"health": "true"}

etcd-1 Healthy {"health": "true"}

etcd-2 Healthy {"health": "true"}

scheduler Healthy ok

7. Deploy the node components

[root@k8s-master ssl]# scp *kubeconfig root@192.168.30.22:/opt/kubernetes/cfg

[root@k8s-master ssl]# scp *kubeconfig root@192.168.30.23:/opt/kubernetes/cfg

Switch to the node and upload the package

[root@k8s-node1 ~]# unzip node.zip

[root@k8s-node1 ~]# mv kubelet kube-proxy /opt/kubernetes/bin/

[root@k8s-node1 ~]# chmod +x /opt/kubernetes/bin/*

[root@k8s-node1 ~]# chmod +x *.sh

[root@k8s-node1 ~]# ./kubelet.sh 192.168.30.22 10.10.10.2

Confirm that the node IP and DNS address have been set

[root@k8s-node1 ~]# cat /opt/kubernetes/cfg/kubelet

[root@k8s-node1 ~]# ./proxy.sh 192.168.30.22

Confirm that kube-proxy has been configured

[root@k8s-node1 ~]# cat /opt/kubernetes/cfg/kube-proxy

Start kubelet and make sure the kubelet process is running

[root@k8s-node1 ~]# systemctl start kubelet

[root@k8s-node1 ~]# ps -ef |grep kube

root 15953 1 0 22:28 ? 00:00:00 /opt/kubernetes/bin/kubelet --logtostderr=true --v=4 --address=192.168.30.22 --hostname-override=192.168.30.22 --kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig --experimental-bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig --cert-dir=/opt/kubernetes/ssl --allow-privileged=true --cluster-dns=8.8.8.8 --cluster-domain=cluster.local --fail-swap-on=false --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0

root 15959 3614 0 22:28 pts/1 00:00:00 grep --color=auto kube

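On startup, kubelet sends a TLS bootstrapping request to kube-apiserver. The kubelet-bootstrap user from the bootstrap token file must first be bound to the system:node-bootstrapper role; only then does kubelet have permission to create certificate signing requests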

[root@k8s-master ~]# kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

[root@k8s-node1 ~]# systemctl restart kubelet.service

[root@k8s-master ~]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-txkD8-4zDyVBKYyYG5d0htf7fBOZkrSRMn4bBqLtPM8 14s kubelet-bootstrap Pending

Approve the certificate signing request created by the node

[root@k8s-master ssl]# kubectl certificate approve node-csr-txkD8-4zDyVBKYyYG5d0htf7fBOZkrSRMn4bBqLtPM8

Check that the request has been approved

[root@k8s-master ssl]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-txkD8-4zDyVBKYyYG5d0htf7fBOZkrSRMn4bBqLtPM8 10m kubelet-bootstrap Approved,Issued

Check that the node is Ready

[root@k8s-master ssl]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.30.22 Ready <none> 5m v1.9.0

The node now holds the automatically generated certificates

[root@k8s-node1 ~]# ls /opt/kubernetes/ssl/

ca-key.pem kubelet-client.crt kubelet.crt server-key.pem

ca.pem kubelet-client.key kubelet.key server.pem

Do the same on the other node by copying the directories and unit files over directly

[root@k8s-node1 ~]# scp -r /opt/kubernetes/bin/ root@192.168.30.23:/opt/kubernetes/

[root@k8s-node1 ~]# scp -r /opt/kubernetes/cfg/ root@192.168.30.23:/opt/kubernetes/

[root@k8s-node1 ~]# scp /usr/lib/systemd/system/kubelet.service root@192.168.30.23:/usr/lib/systemd/system

[root@k8s-node1 ~]# scp /usr/lib/systemd/system/kube-proxy.service root@192.168.30.23:/usr/lib/systemd/system

Change the kubelet IP to this machine's IP

[root@k8s-node2 ~]# vim /opt/kubernetes/cfg/kubelet

KUBELET_OPTS="--logtostderr=true \

--v=4 \

--address=192.168.30.23 \

--hostname-override=192.168.30.23 \

--kubeconfig=/opt/kubernetes/cfg/kubelet.kubeconfig \

--experimental-bootstrap-kubeconfig=/opt/kubernetes/cfg/bootstrap.kubeconfig \

--cert-dir=/opt/kubernetes/ssl \

--allow-privileged=true \

--cluster-dns=8.8.8.8 \

--cluster-domain=cluster.local \

--fail-swap-on=false \

--pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google-containers/pause-amd64:3.0"

Set the kube-proxy IP to this machine's IP as well

[root@k8s-node2 ~]# vim /opt/kubernetes/cfg/kube-proxy

KUBE_PROXY_OPTS="--logtostderr=true --v=4 --hostname-override=192.168.30.23 --kubeconfig=/opt/kubernetes/cfg/kube-proxy.kubeconfig"

Start the services

[root@k8s-node2 ~]# systemctl start kubelet

[root@k8s-node2 ~]# systemctl start kube-proxy

List the certificate signing requests

[root@k8s-master ssl]# kubectl get csr

NAME AGE REQUESTOR CONDITION

node-csr-txkD8-4zDyVBKYyYG5d0htf7fBOZkrSRMn4bBqLtPM8 33m kubelet-bootstrap Approved,Issued

node-csr-uxtAiGucJJJv_SzObVgfqm6sZ9GdvepQxVfkUcGCE3I 1m kubelet-bootstrap Pending

Approve the request so that the node can join

[root@k8s-master ssl]# kubectl certificate approve node-csr-uxtAiGucJJJv_SzObVgfqm6sZ9GdvepQxVfkUcGCE3I
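
Copying the generated CSR name by hand gets tedious when several nodes join. The sketch below filters kubectl get csr output for Pending requests; the helper name pending_csrs is mine, and the demo input is the sample output shown above, so no cluster is needed to try it.

```shell
# Hypothetical helper: read `kubectl get csr` output on stdin,
# print the names of requests whose last column is Pending.
pending_csrs() {
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Demo on the sample output from this guide.
pending_csrs <<'EOF'
NAME                                                   AGE  REQUESTOR          CONDITION
node-csr-txkD8-4zDyVBKYyYG5d0htf7fBOZkrSRMn4bBqLtPM8   33m  kubelet-bootstrap  Approved,Issued
node-csr-uxtAiGucJJJv_SzObVgfqm6sZ9GdvepQxVfkUcGCE3I   1m   kubelet-bootstrap  Pending
EOF

# On the master, review the list first, then approve each request:
#   kubectl get csr | pending_csrs | xargs -r -n 1 kubectl certificate approve
```

Approving blindly defeats the purpose of the manual review step, so it is worth looking at the printed names before piping them into the approve command.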

List the cluster nodes

[root@k8s-master ssl]# kubectl get node

NAME STATUS ROLES AGE VERSION

192.168.30.22 Ready <none> 30m v1.9.0

192.168.30.23 Ready <none> 1m v1.9.0

8. Run a test workload to verify that the cluster works

Run the nginx image with 3 replicas

[root@k8s-master ~]# kubectl run nginx --image=nginx --replicas=3

deployment "nginx" created
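
The kubectl run command above works on the v1.9.0 cluster used in this guide. On newer kubectl releases (1.18 and later, to my knowledge) kubectl run only creates a single pod and no longer accepts --replicas. A rough equivalent for those versions, with run_nginx as a hypothetical wrapper name:

```shell
# Assumption: a kubectl release where `kubectl run` no longer accepts --replicas.
# $1 = replica count (defaults to 3)
run_nginx() {
  kubectl create deployment nginx --image=nginx &&
    kubectl scale deployment nginx --replicas="${1:-3}"
}

# On the master:
#   run_nginx 3
```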

List the pods

[root@k8s-master ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nginx-8586cf59-62jsg 0/1 ContainerCreating 0 1m

nginx-8586cf59-92pc7 0/1 ImagePullBackOff 0 1m

nginx-8586cf59-vdm48 0/1 ContainerCreating 0 1m

Wait a while for the pods to come up

[root@k8s-master ~]# kubectl get pod    # or: kubectl get all

NAME READY STATUS RESTARTS AGE

nginx-8586cf59-62jsg 1/1 Running 0 23m

nginx-8586cf59-92pc7 1/1 Running 0 23m

nginx-8586cf59-vdm48 1/1 Running 0 23m

See which nodes are running the containers

[root@k8s-master ~]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

nginx-8586cf59-62jsg 1/1 Running 0 35m 172.17.50.2 192.168.30.22

nginx-8586cf59-92pc7 1/1 Running 0 35m 172.17.62.2 192.168.30.23

nginx-8586cf59-vdm48 1/1 Running 0 35m 172.17.62.3 192.168.30.23

Expose a port so the service can be reached

[root@k8s-master ~]# kubectl expose deployment nginx --port=88 --target-port=80 --type=NodePort

[root@k8s-master ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.10.10.1 <none> 443/TCP 17h

nginx NodePort 10.10.10.158 <none> 88:46438/TCP 5m

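Because flanneld is deployed only on the nodes, the cluster IP 10.10.10.158:88 can only be reached from a node

Alternatively, use the NodePort on either node: http://192.168.30.22:46438 or http://192.168.30.23:46438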

[root@k8s-node1 ~]# curl 10.10.10.158:88

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

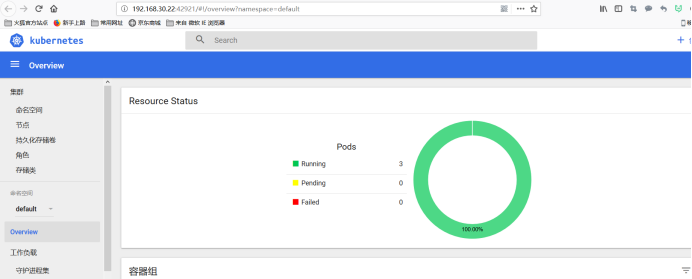
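
A NodePort service listens on the same port on every node, so both node addresses should answer. A small sketch that builds the URLs for a given port; NODES and node_urls are names I made up, and the curl loop is left as a comment because it needs the running cluster.

```shell
# Node addresses used in this guide.
NODES="192.168.30.22 192.168.30.23"

# Hypothetical helper: print the NodePort URL on each node.
# $1 = node port
node_urls() {
  for ip in $NODES; do
    echo "http://${ip}:$1"
  done
}

node_urls 46438

# From a machine that can reach the nodes, print the HTTP status per URL:
#   for url in $(node_urls 46438); do
#     curl -s -o /dev/null -w "%{http_code} $url\n" "$url"
#   done
```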

9. Deploy the web UI (Dashboard)

[root@k8s-master ~]# mkdir UI

[root@k8s-master ~]# cd UI/

[root@k8s-master UI]# ls

dashboard-deployment.yaml dashboard-rbac.yaml dashboard-service.yaml

[root@k8s-master UI]# kubectl create -f dashboard-rbac.yaml

[root@k8s-master UI]# kubectl create -f dashboard-service.yaml

[root@k8s-master UI]# kubectl create -f dashboard-deployment.yaml

List the namespaces

[root@k8s-master UI]# kubectl get ns

NAME STATUS AGE

default Active 18h

kube-public Active 18h

kube-system Active 18h

[root@k8s-master UI]# kubectl get all -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

svc/kubernetes-dashboard NodePort 10.10.10.229 <none> 80:42921/TCP 7m

The Dashboard is reachable on either node: http://192.168.30.22:42921/ or http://192.168.30.23:42921/

Source: oschina

Link: https://my.oschina.net/u/4412009/blog/3475384