Contents

8-2 Polynomial regression and Pipeline in scikit-learn

8-3 Overfitting and underfitting

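8-1 What is polynomial regression

If x is treated as one feature and x^2 as another, the model is still a linear regression in those features, even though the fitted curve is non-linear in x.

Linear regression?

Solution: add one feature

x is unordered, so it has to be sorted before the fitted curve is plotted.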
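The idea above can be sketched as follows. This is a minimal illustration, not the original notebook: the seed and the variable names (`X2`, `lin_reg2`, `order`) are assumptions chosen for the example, and the data follow the same quadratic used later in these notes.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

np.random.seed(666)  # assumed seed, only to make the sketch reproducible
x = np.random.uniform(-3, 3, size=100)
X = x.reshape(-1, 1)
y = 0.5 * x**2 + x + 2 + np.random.normal(0, 1, size=100)

# Plain linear regression on x alone: a straight line through curved data.
lin_reg = LinearRegression().fit(X, y)
r2_linear = lin_reg.score(X, y)

# Add x^2 as a second feature. The model is still linear in its coefficients.
X2 = np.hstack([X, X**2])
lin_reg2 = LinearRegression().fit(X2, y)
r2_poly = lin_reg2.score(X2, y)

# x is unordered, so sort it before drawing the fitted curve as a line.
order = np.argsort(x)
x_sorted = x[order]
y_curve = lin_reg2.predict(X2)[order]

print("R^2 linear:", r2_linear)
print("R^2 with x^2 feature:", r2_poly)
print("coefficients:", lin_reg2.coef_, "intercept:", lin_reg2.intercept_)
```

The fitted coefficients should land near 1 (for x) and 0.5 (for x^2), with an intercept near 2, since that is how the data were generated.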

8-2 Polynomial regression and Pipeline in scikit-learn

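In the transformed matrix, the first column is x to the zeroth power (all 1s), the second column is x, and the third column is x squared.

About PolynomialFeatures

If a sample has two features a and b, degree 2 produces the columns 1, a, b, a*a, a*b, b*b.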
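A small check of the column layout described above. The input values are made up for illustration; `PolynomialFeatures` and `fit_transform` are the scikit-learn calls these notes refer to.

```python
import numpy as np
from sklearn.preprocessing import PolynomialFeatures

# One feature: the columns are x^0, x^1, x^2.
X1 = np.array([[2.0], [3.0]])
X1_poly = PolynomialFeatures(degree=2).fit_transform(X1)
print(X1_poly)  # rows: [1, 2, 4] and [1, 3, 9]

# Two features a=2, b=3: the columns are 1, a, b, a*a, a*b, b*b.
Xab = np.array([[2.0, 3.0]])
Xab_poly = PolynomialFeatures(degree=2).fit_transform(Xab)
print(Xab_poly)  # row: [1, 2, 3, 4, 6, 9]
```

The number of columns grows quickly with both the degree and the number of input features, which is one reason high degrees get expensive.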

Pipeline

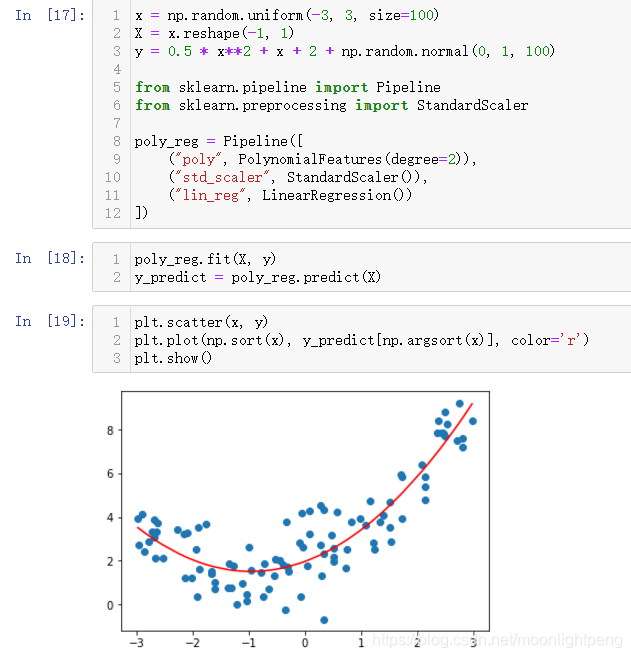

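import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PolynomialFeatures
from sklearn.preprocessing import StandardScaler

# Noisy quadratic data: y = 0.5x^2 + x + 2 + noise
x = np.random.uniform(-3, 3, size=100)
X = x.reshape(-1, 1)
y = 0.5 * x**2 + x + 2 + np.random.normal(0, 1, 100)

# Chain feature expansion, scaling and linear regression into one estimator
poly_reg = Pipeline([
    ("poly", PolynomialFeatures(degree=2)),
    ("std_scaler", StandardScaler()),
    ("lin_reg", LinearRegression())
])

8-3 Overfitting and underfitting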

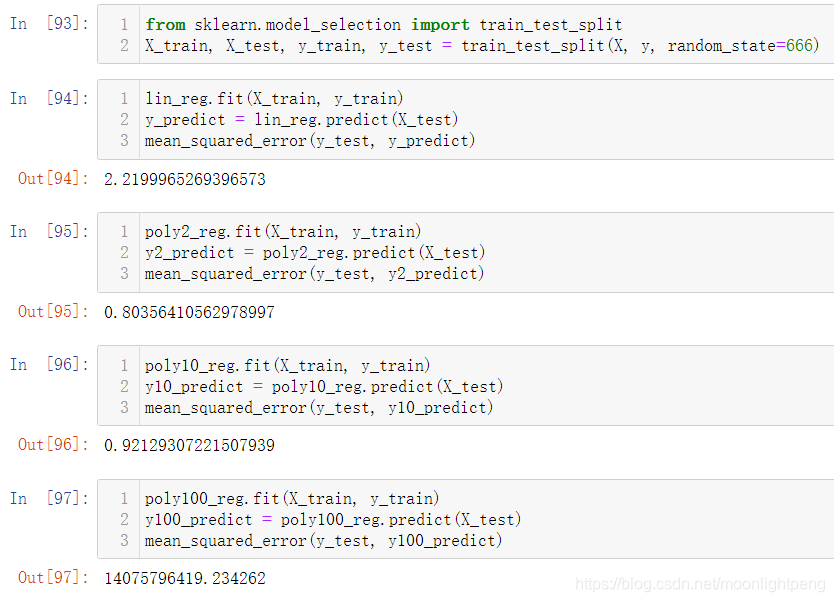

Compare the models by mean squared error

Using linear regression

Using polynomial regression

from sklearn.linear_model import LinearRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PolynomialFeatures
from sklearn.preprocessing import StandardScaler

def PolynomialRegression(degree):
    # Pipeline: polynomial features -> standardization -> linear regression
    return Pipeline([
        ("poly", PolynomialFeatures(degree=degree)),
        ("std_scaler", StandardScaler()),
        ("lin_reg", LinearRegression())
    ])
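The comparison by mean squared error might look like the sketch below. The seed, the list of degrees, and the `train_mse` dictionary are assumptions for illustration; degree 1 stands in for plain linear regression. No specific error values are claimed here, since they depend on the random data.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

def PolynomialRegression(degree):
    # Same pipeline as in the notes: expand, scale, then fit linearly.
    return Pipeline([
        ("poly", PolynomialFeatures(degree=degree)),
        ("std_scaler", StandardScaler()),
        ("lin_reg", LinearRegression()),
    ])

np.random.seed(666)  # assumed seed, only for reproducibility
x = np.random.uniform(-3, 3, size=100)
X = x.reshape(-1, 1)
y = 0.5 * x**2 + x + 2 + np.random.normal(0, 1, size=100)

# Fit each model on all the data and measure the error on that same data.
train_mse = {}
for degree in (1, 2, 10, 100):
    model = PolynomialRegression(degree).fit(X, y)
    train_mse[degree] = mean_squared_error(y, model.predict(X))
    print("degree", degree, "training MSE", train_mse[degree])
```

Degree 1 underfits, so its error is clearly the largest. Raising the degree keeps pushing the training error down because the model starts fitting the noise; a lower error measured on the data used for fitting does not mean a better model.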

The purpose of train test split
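Error measured on the data used for fitting cannot reveal overfitting, so the usual approach is to hold out part of the data and measure error there. The sketch below assumes the same synthetic quadratic data as earlier in these notes. The seed, `random_state`, and the `results` dictionary are illustrative choices, not taken from the original notebook.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

def PolynomialRegression(degree):
    return Pipeline([
        ("poly", PolynomialFeatures(degree=degree)),
        ("std_scaler", StandardScaler()),
        ("lin_reg", LinearRegression()),
    ])

np.random.seed(666)  # assumed seed, only for reproducibility
x = np.random.uniform(-3, 3, size=100)
X = x.reshape(-1, 1)
y = 0.5 * x**2 + x + 2 + np.random.normal(0, 1, size=100)

# Hold out 25% of the samples (the default) as unseen test data.
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=666)

results = {}
for degree in (1, 2, 10):
    model = PolynomialRegression(degree).fit(X_train, y_train)
    mse_train = mean_squared_error(y_train, model.predict(X_train))
    mse_test = mean_squared_error(y_test, model.predict(X_test))
    results[degree] = (mse_train, mse_test)
    print("degree", degree, "train MSE", mse_train, "test MSE", mse_test)
```

The test error is the number to watch: a model that keeps lowering its training error while its test error stops improving, or gets worse, is overfitting.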

Source: oschina

Link: https://my.oschina.net/u/4416268/blog/4302888