Author: xidianwangtao@gmail.com Kubernetes 1.13

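Abstract: Kubelet dynamic configuration makes it very convenient to update Kubelet configuration across a large cluster. We can configure the Kubelet through a ConfigMap, just like any other application in the cluster, and the Kubelet detects configuration changes, exits automatically, and reloads the latest configuration on restart. Kubelet Dynamic Config also offers useful features such as local checkpoint data and rollback to the last-known-good configuration on failure. This article covers the components of Kubelet configuration, a brief workflow, the implementation of the core mechanisms (Bootstrap and Sync), and the areas that still need improvement.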

Kubelet Configuration

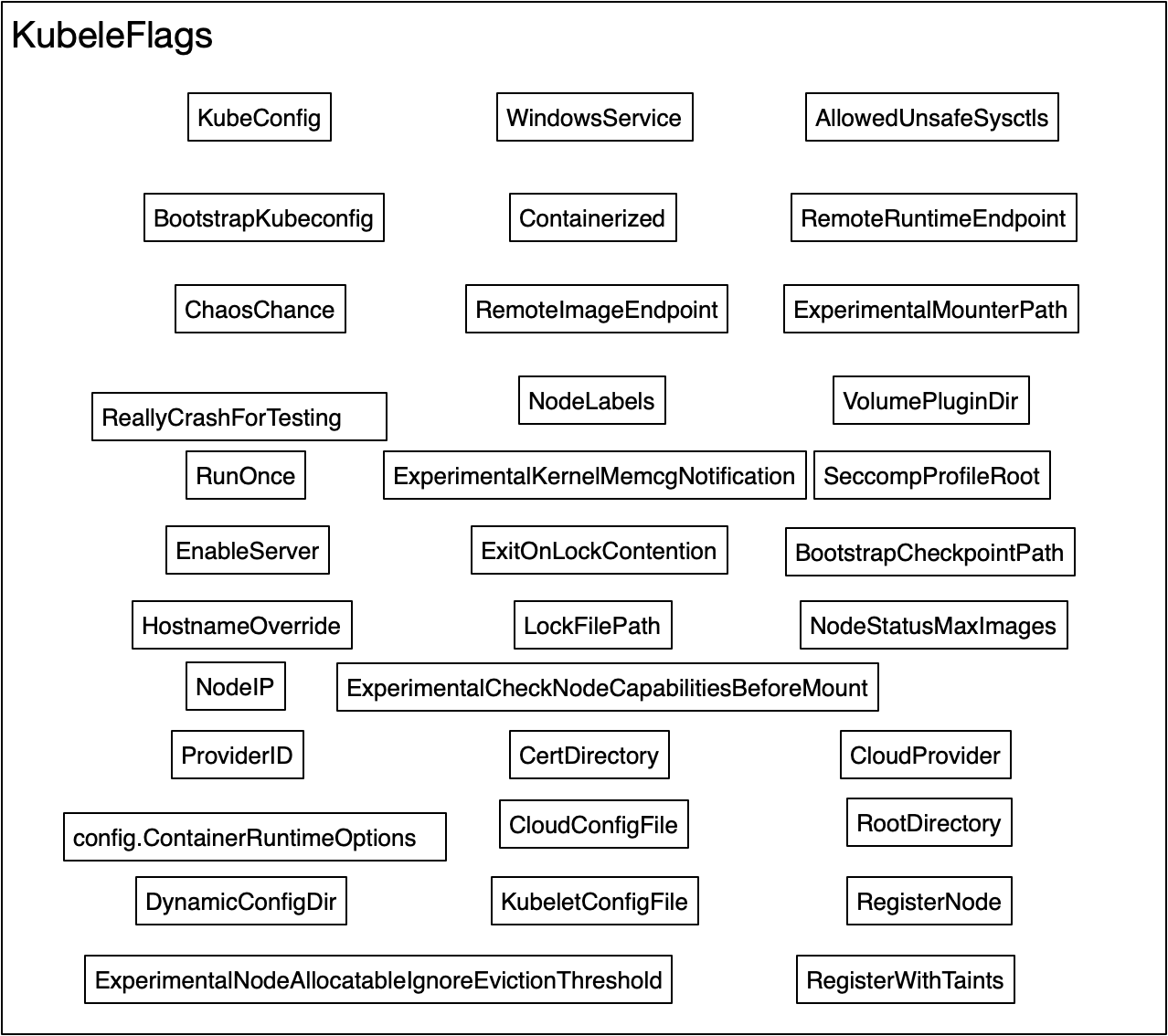

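Kubelet configuration has two parts:

- KubeletFlag: settings that must not be modified while the kubelet is running, or that cannot be shared across the Nodes in a cluster.

- KubeletConfiguration: settings that can be shared across the Nodes in a cluster.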

Dynamic Kubelet Config

Core Features

- Kubelet attempts to use the dynamically assigned configuration.
- Kubelet “checkpoints” configuration to local disk, enabling restarts without API server access.
- Kubelet reports assigned, active, and last-known-good configuration sources in the Node status.
- When invalid configuration is dynamically assigned, Kubelet automatically falls back to a last-known-good configuration and reports errors in the Node status.

Workflow

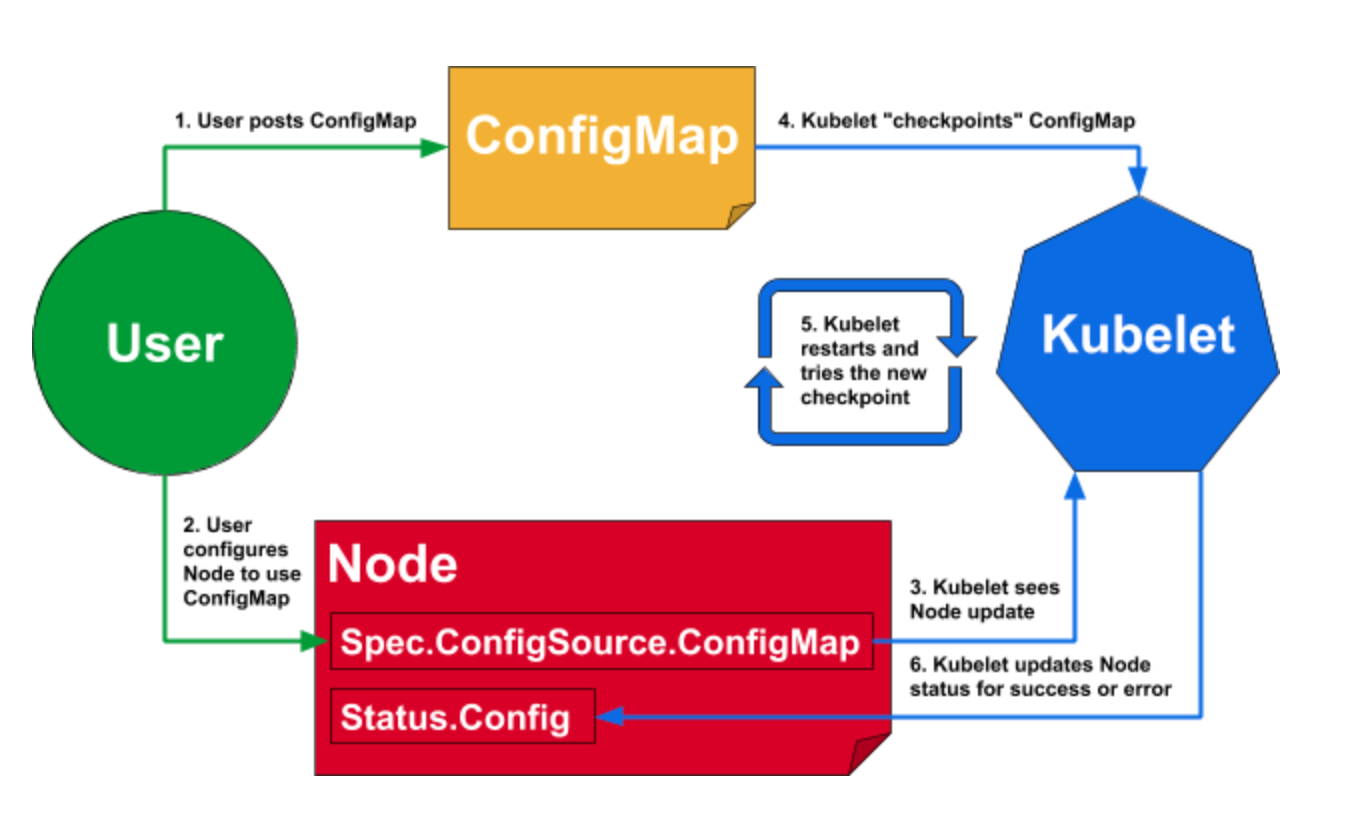

The basic workflow for configuring a Kubelet in a live cluster is as follows:

- Each Kubelet watches its associated Node object for changes.
- When the Node.Spec.ConfigSource.ConfigMap reference is updated, the Kubelet will “checkpoint” the new ConfigMap by writing the files it contains to local disk.
- The Kubelet will then exit, and the OS-level process manager will restart it. Note that if the Node.Spec.ConfigSource.ConfigMap reference is not set, the Kubelet uses the set of flags and config files local to the machine it is running on.
- Once restarted, the Kubelet will attempt to use the configuration from the new checkpoint.
- If the new configuration passes the Kubelet’s internal validation, the Kubelet will update Node.Status.Config to reflect that it is using the new configuration.
- If the new configuration is invalid, the Kubelet will fall back to its last-known-good configuration and report an error in Node.Status.Config.

Note that the default last-known-good configuration is the combination of Kubelet command-line flags with the Kubelet’s local configuration file. Command-line flags that overlap with the config file always take precedence over both the local configuration file and dynamic configurations, for backwards-compatibility.

The status of the Node’s Kubelet configuration is reported via Node.Status.Config. Once you have updated a Node to use the new ConfigMap, you can observe this status to confirm that the Node is using the intended configuration.
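As a rough illustration of the check described above, the following sketch models the reported status and tests whether a Node is running the intended configuration. The ConfigRef and ConfigStatus types and the usingIntended helper are hypothetical simplifications for this article, not the real Kubernetes API types.

```go
package main

import "fmt"

// ConfigRef is a hypothetical, simplified stand-in for a ConfigMap reference.
type ConfigRef struct {
	Name            string
	UID             string
	ResourceVersion string
}

// ConfigStatus is a hypothetical, simplified model of Node.Status.Config.
type ConfigStatus struct {
	Assigned      *ConfigRef
	Active        *ConfigRef
	LastKnownGood *ConfigRef
	Error         string
}

// usingIntended reports whether the Kubelet is actively running the named
// ConfigMap and has not reported an error.
func usingIntended(s ConfigStatus, name string) bool {
	return s.Error == "" && s.Active != nil && s.Active.Name == name
}

func main() {
	healthy := ConfigStatus{
		Assigned: &ConfigRef{Name: "kubelet-config-v2"},
		Active:   &ConfigRef{Name: "kubelet-config-v2"},
	}
	// The assigned config was rejected, so the Kubelet fell back to v1.
	rolledBack := ConfigStatus{
		Assigned: &ConfigRef{Name: "kubelet-config-v2"},
		Active:   &ConfigRef{Name: "kubelet-config-v1"},
		Error:    "failed to validate config",
	}
	fmt.Println(usingIntended(healthy, "kubelet-config-v2"))
	fmt.Println(usingIntended(rolledBack, "kubelet-config-v2"))
}
```

Comparing Active against the intended reference, rather than Assigned, matters because Assigned only records what was requested, while Active records what the Kubelet is actually running.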

For more usage details, see: https://kubernetes.io/docs/tasks/administer-cluster/reconfigure-kubelet/

Enabling and Creating the Dynamic Kubelet Config Controller

When the Kubelet builds its command (NewKubeletCommand), it parses and loads the kubelet configuration.

func NewKubeletCommand(stopCh <-chan struct{}) *cobra.Command {

...

// load kubelet config file, if provided

if configFile := kubeletFlags.KubeletConfigFile; len(configFile) > 0 {

kubeletConfig, err = loadConfigFile(configFile)

...

if err := kubeletConfigFlagPrecedence(kubeletConfig, args); err != nil {

klog.Fatal(err)

}

// update feature gates based on new config

if err := utilfeature.DefaultFeatureGate.SetFromMap(kubeletConfig.FeatureGates); err != nil {

klog.Fatal(err)

}

}

// We always validate the local configuration (command line + config file).

// This is the default "last-known-good" config for dynamic config, and must always remain valid.

if err := kubeletconfigvalidation.ValidateKubeletConfiguration(kubeletConfig); err != nil {

klog.Fatal(err)

}

// use dynamic kubelet config, if enabled

var kubeletConfigController *dynamickubeletconfig.Controller

if dynamicConfigDir := kubeletFlags.DynamicConfigDir.Value(); len(dynamicConfigDir) > 0 {

var dynamicKubeletConfig *kubeletconfiginternal.KubeletConfiguration

dynamicKubeletConfig, kubeletConfigController, err = BootstrapKubeletConfigController(dynamicConfigDir,

func(kc *kubeletconfiginternal.KubeletConfiguration) error {

return kubeletConfigFlagPrecedence(kc, args)

})

if err != nil {

klog.Fatal(err)

}

// If we should just use our existing, local config, the controller will return a nil config

if dynamicKubeletConfig != nil {

kubeletConfig = dynamicKubeletConfig

// Note: flag precedence was already enforced in the controller, prior to validation,

// by our above transform function. Now we simply update feature gates from the new config.

if err := utilfeature.DefaultFeatureGate.SetFromMap(kubeletConfig.FeatureGates); err != nil {

klog.Fatal(err)

}

}

}

// construct a KubeletServer from kubeletFlags and kubeletConfig

kubeletServer := &options.KubeletServer{

KubeletFlags: *kubeletFlags,

KubeletConfiguration: *kubeletConfig,

}

// use kubeletServer to construct the default KubeletDeps

kubeletDeps, err := UnsecuredDependencies(kubeletServer)

if err != nil {

klog.Fatal(err)

}

// add the kubelet config controller to kubeletDeps

kubeletDeps.KubeletConfigController = kubeletConfigController

// start the experimental docker shim, if enabled

if kubeletServer.KubeletFlags.ExperimentalDockershim {

if err := RunDockershim(&kubeletServer.KubeletFlags, kubeletConfig, stopCh); err != nil {

klog.Fatal(err)

}

return

}

// run the kubelet

klog.V(5).Infof("KubeletConfiguration: %#v", kubeletServer.KubeletConfiguration)

if err := Run(kubeletServer, kubeletDeps, stopCh); err != nil {

klog.Fatal(err)

}

...

}

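- First, it checks whether --config is set. If so, it reads the file, converts its content into a KubeletConfiguration, and calls kubeletConfigFlagPrecedence to merge it with the global flags, command-line flags, and so on. kubeletConfigFlagPrecedence is described in detail in the Dynamic Kubelet Config Controller Bootstrap analysis below.
- It then validates every parameter in the merged KubeletConfig.
- If the kubelet is configured with --dynamic-config-dir and the DynamicKubeletConfig feature gate is enabled (the gate is beta and on by default in 1.13), it creates the Dynamic Kubelet Config Controller and calls its Bootstrap method to initialize the configuration. Note that the TransformFunc set on the Controller here is kubeletConfigFlagPrecedence, which is analyzed later. The Controller is defined as follows: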

type Controller struct {

transform TransformFunc

// pendingConfigSource; write to this channel to indicate that the config source needs to be synced from the API server

pendingConfigSource chan bool

// configStatus manages the status we report on the Node object

configStatus status.NodeConfigStatus

// nodeInformer is the informer that watches the Node object

nodeInformer cache.SharedInformer

// remoteConfigSourceInformer is the informer that watches the assigned config source

remoteConfigSourceInformer cache.SharedInformer

// checkpointStore persists config source checkpoints to a storage layer

checkpointStore store.Store

}

- If the DynamicKubeletConfig returned by the Controller's Bootstrap is non-nil, the KubeletConfig loaded from --config is discarded and the DynamicKubeletConfig is used instead.
- The Dynamic Kubelet Config Controller is added to the Kubelet Dependencies. Kubelet Dependencies are the interfaces the Kubelet relies on at runtime, 20 component interfaces in total, including the cAdvisor, Dockershim ClientConfig, VolumePlugin, DynamicPluginProber, and OOMAdjuster interfaces.
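The TransformFunc hook can be pictured with a small sketch. It assumes, following the workflow notes earlier in this article, that explicitly set command-line flags override values from the loaded configuration. The Config and Flags types and the flagPrecedence helper are hypothetical and much simpler than the real kubeletConfigFlagPrecedence, which re-parses the command line against the loaded configuration.

```go
package main

import "fmt"

// Config is a hypothetical, trimmed-down stand-in for KubeletConfiguration.
type Config struct {
	MaxPods      int
	FeatureGates map[string]bool
}

// TransformFunc mutates a loaded config before it is validated.
type TransformFunc func(c *Config) error

// Flags holds values explicitly set on the command line (hypothetical shape).
type Flags struct {
	MaxPods      *int // nil means the flag was not passed
	FeatureGates map[string]bool
}

// flagPrecedence returns a TransformFunc that lets explicitly set flags
// override config values, while keeping gates that only the config sets.
func flagPrecedence(f Flags) TransformFunc {
	return func(c *Config) error {
		if f.MaxPods != nil {
			c.MaxPods = *f.MaxPods
		}
		if c.FeatureGates == nil {
			c.FeatureGates = map[string]bool{}
		}
		for gate, enabled := range f.FeatureGates {
			c.FeatureGates[gate] = enabled
		}
		return nil
	}
}

func main() {
	pods := 50
	transform := flagPrecedence(Flags{
		MaxPods:      &pods,
		FeatureGates: map[string]bool{"GateA": false},
	})
	cfg := &Config{MaxPods: 110, FeatureGates: map[string]bool{"GateA": true, "GateB": true}}
	if err := transform(cfg); err != nil {
		fmt.Println("transform failed:", err)
		return
	}
	fmt.Println(cfg.MaxPods, cfg.FeatureGates["GateA"], cfg.FeatureGates["GateB"])
}
```

Passing the precedence logic in as a function lets the controller apply it to every configuration it loads (assigned or last-known-good) before validation, without knowing anything about command-line parsing.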

Dynamic Kubelet Config Controller Bootstrap

In NewKubeletCommand, the Dynamic Kubelet Config Controller is created and its Bootstrap method is called to load the Kubelet Dynamic Config checkpoints and update the Node ConfigStatus. The following sections analyze how Bootstrap works in detail.

// Bootstrap attempts to return a valid KubeletConfiguration based on the configuration of the Controller,

// or returns an error if no valid configuration could be produced. Bootstrap should be called synchronously before StartSync.

// If the pre-existing local configuration should be used, Bootstrap returns a nil config.

func (cc *Controller) Bootstrap() (*kubeletconfig.KubeletConfiguration, error) {

utillog.Infof("starting controller")

// ensure the filesystem is initialized

if err := cc.initializeDynamicConfigDir(); err != nil {

return nil, err

}

// determine assigned source and set status

assignedSource, err := cc.checkpointStore.Assigned()

if err != nil {

return nil, err

}

if assignedSource != nil {

cc.configStatus.SetAssigned(assignedSource.NodeConfigSource())

}

// determine last-known-good source and set status

lastKnownGoodSource, err := cc.checkpointStore.LastKnownGood()

if err != nil {

return nil, err

}

if lastKnownGoodSource != nil {

cc.configStatus.SetLastKnownGood(lastKnownGoodSource.NodeConfigSource())

}

// if the assigned source is nil, return nil to indicate local config

if assignedSource == nil {

return nil, nil

}

// attempt to load assigned config

assignedConfig, reason, err := cc.loadConfig(assignedSource)

if err == nil {

// update the active source to the non-nil assigned source

cc.configStatus.SetActive(assignedSource.NodeConfigSource())

go wait.Forever(func() { cc.checkTrial(configTrialDuration) }, 10*time.Second)

return assignedConfig, nil

}

// Assert: the assigned config failed to load or validate

utillog.Errorf(fmt.Sprintf("%s, error: %v", reason, err))

// set status to indicate the failure with the assigned config

cc.configStatus.SetError(reason)

// if the last-known-good source is nil, return nil to indicate local config

if lastKnownGoodSource == nil {

return nil, nil

}

// attempt to load the last-known-good config

lastKnownGoodConfig, _, err := cc.loadConfig(lastKnownGoodSource)

if err != nil {

// we failed to load the last-known-good, so something is really messed up and we just return the error

return nil, err

}

// set status to indicate the active source is the non-nil last-known-good source

cc.configStatus.SetActive(lastKnownGoodSource.NodeConfigSource())

return lastKnownGoodConfig, nil

}

The key steps in Bootstrap are:

- Initialize Dynamic Config Dir
- Get Assigned NodeConfigSource From Checkpoints
- Get LastKnownGood (LKG) NodeConfigSource From Checkpoints
- Load Assigned Config
- Load LastKnownGood Config If Necessary

Initialize Dynamic Config Dir

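Bootstrap makes sure the following paths exist and creates them if they do not:

- {dynamic-config-dir}/store/: the root directory of the Dynamic Config Dir.
- {dynamic-config-dir}/store/meta/: the meta directory, which holds the assigned and last-known-good entries.
- {dynamic-config-dir}/store/meta/assigned: the checkpointed NodeConfigSource that the Kubelet will try to load and use. The file content is a kubeletconfigv1beta1.SerializedNodeConfigSource in YAML format.
- {dynamic-config-dir}/store/meta/last-known-good: the NodeConfigSource that the Kubelet rolls back to when using the Assigned NodeConfigSource fails. The file content is a kubeletconfigv1beta1.SerializedNodeConfigSource in YAML format.
- {dynamic-config-dir}/store/checkpoints/: holds the Kubelet configuration taken from the ConfigMap of each checkpoint. Beneath it are two levels of subdirectories, {ReferencedConfigMap-UID} and then {ReferencedConfigMap-ResourceVersion}. At the innermost level is a file named after the key in the referenced ConfigMap's data (usually kubelet), and its content is the value stored under that key, that is, the plain kubelet configuration.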
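The layout above can be expressed as a few path helpers. This is only a sketch of the directory structure as described in this article: the function names are hypothetical, and /var/lib/kubelet/dynamic-config is an assumed example value for --dynamic-config-dir.

```go
package main

import (
	"fmt"
	"path/filepath"
)

const (
	storeDir       = "store"
	metaDir        = "meta"
	checkpointsDir = "checkpoints"
)

// assignedPath returns the file that records the assigned NodeConfigSource.
func assignedPath(dynamicConfigDir string) string {
	return filepath.Join(dynamicConfigDir, storeDir, metaDir, "assigned")
}

// lastKnownGoodPath returns the file that records the last-known-good source.
func lastKnownGoodPath(dynamicConfigDir string) string {
	return filepath.Join(dynamicConfigDir, storeDir, metaDir, "last-known-good")
}

// checkpointFile returns the file holding the value stored under key in the
// ConfigMap identified by uid and resourceVersion.
func checkpointFile(dynamicConfigDir, uid, resourceVersion, key string) string {
	return filepath.Join(dynamicConfigDir, storeDir, checkpointsDir, uid, resourceVersion, key)
}

func main() {
	dir := "/var/lib/kubelet/dynamic-config" // assumed example directory
	fmt.Println(assignedPath(dir))
	fmt.Println(lastKnownGoodPath(dir))
	fmt.Println(checkpointFile(dir, "example-uid", "12345", "kubelet"))
}
```

Keying checkpoints by both UID and ResourceVersion means each revision of a ConfigMap gets its own directory, so an older last-known-good revision stays on disk after a newer one is assigned.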

Get Assigned NodeConfigSource From Checkpoints

- Read the Assigned NodeConfigSource from the {dynamic-config-dir}/store/meta/assigned file on the Node and set it in nodeConfigStatus.Assigned.
- If the Assigned NodeConfigSource that was read is empty, Bootstrap returns nil, which means the local config should be used.

Get LastKnownGood (LKG) NodeConfigSource From Checkpoints

- Read the Last-Known-Good NodeConfigSource from {dynamic-config-dir}/store/meta/last-known-good on the Node and set it in nodeConfigStatus.LastKnownGood.

Load Assigned Config

- If the Assigned NodeConfigSource that was read is non-empty, load the corresponding config from the checkpoints and make sure it converts successfully to the KubeletConfiguration type:
  - Check that the UID and ResourceVersion of the ConfigMap referenced by the Assigned NodeConfigSource are not empty.
  - Check that the directory {dynamic-config-dir}/store/checkpoints/{ReferencedConfigMap-UID}/{ReferencedConfigMap-ResourceVersion}/ exists.
  - When both conditions hold, load {dynamic-config-dir}/store/checkpoints/{ReferencedConfigMap-UID}/{ReferencedConfigMap-ResourceVersion}/{ReferencedConfigMap-KubeletKey}, convert it to kubeletconfig.KubeletConfiguration, and return it. {ReferencedConfigMap-KubeletKey} is usually kubelet.
- Pass the loaded KubeletConfiguration through transform (kubeletConfigFlagPrecedence) to merge it with the other flags and form the complete configuration the kubelet starts with. The merge works as follows:
  - Add the global flags (logtostderr, v, log_dir, log_file, and so on).
  - Add the credential provider flags (azure-container-registry-config).
  - Add the version flag (version).
  - Add the log flush frequency flag (log-flush-frequency).
  - Merge the KubeletConfiguration with the Kubelet config flags (cmd/kubelet/app/options/options.go:459 enumerates all of them). Note that the KubeletConfiguration's FeatureGates are merged with DefaultFeatureGate here.
  - Then apply the kubelet arguments given on the command line.
  - Finally, add the KubeletConfiguration's FeatureGates back into the Kubelet config flags. In other words, when the KubeletConfiguration's FeatureGates conflict with DefaultFeatureGate, the former wins.
- Validate the transformed KubeletConfiguration, for example:
  - Each key in --enforce-node-allocatable must be one of pods, system-reserved, kube-reserved, or none.
  - The value of --hairpin-mode must be one of hairpin-veth, promiscuous-bridge, or none.
- If everything in the load process above succeeds:
  - The valid Assigned NodeConfigSource is written to the Active field of the Node ConfigStatus, which marks it as in use.
  - A goroutine is started that calls checkTrial every 10s. checkTrial checks whether more than 10 minutes (hard-coded, not configurable for now) have passed since the Assigned path ({dynamic-config-dir}/store/meta/assigned) was last modified. If not, checkTrial does nothing and waits for the next 10s tick. The purpose of checkTrial is to give the Node some tolerance for restarts, or time to coordinate with the master. Once the 10 minutes have passed:
    - It checks whether the Assigned NodeConfigSource matches the LKG NodeConfigSource recorded in the checkpoints ({dynamic-config-dir}/store/meta/last-known-good).
    - If they differ, it writes the Assigned NodeConfigSource to the checkpointed LKG NodeConfigSource ({dynamic-config-dir}/store/meta/last-known-good) and updates the LastKnownGood field of the Node ConfigStatus.
    - If they match, checkTrial does nothing.
  - Bootstrap finishes and returns the KubeletConfiguration loaded from the Assigned NodeConfigSource.
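The trial-period rule can be sketched as a pure function. This is a simplified model under the assumptions stated in the text (a 10-minute trial measured from the last modification of the assigned record); the promote function, the string source names, and the epoch reference time are hypothetical and only serve the illustration.

```go
package main

import (
	"fmt"
	"time"
)

// trialDuration mirrors the hard-coded 10-minute trial period described above.
const trialDuration = 10 * time.Minute

// epoch is an arbitrary fixed reference time used by the example.
var epoch = time.Unix(0, 0)

// promote decides whether the assigned source should become last-known-good.
// It returns the resulting last-known-good source and whether it changed.
func promote(assigned, lastKnownGood string, assignedModified, now time.Time) (string, bool) {
	if now.Sub(assignedModified) <= trialDuration {
		// Still inside the trial period: leave last-known-good alone.
		return lastKnownGood, false
	}
	if assigned == lastKnownGood {
		// Already promoted earlier: nothing to do.
		return lastKnownGood, false
	}
	return assigned, true
}

func main() {
	// Five minutes after assignment the trial is still running.
	lkg, changed := promote("configmap-v2", "configmap-v1", epoch, epoch.Add(5*time.Minute))
	fmt.Println(lkg, changed)

	// Eleven minutes after assignment the assigned source is promoted.
	lkg, changed = promote("configmap-v2", "configmap-v1", epoch, epoch.Add(11*time.Minute))
	fmt.Println(lkg, changed)
}
```

Because the decision depends on the modification time of the assigned record rather than on process uptime, a Kubelet that crash-loops on a new configuration never accumulates enough trial time to overwrite the previous last-known-good record.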

Load LastKnownGood Config If Necessary

If loading the Assigned NodeConfigSource fails, the assigned configuration is faulty and the Kubelet needs to roll back to the LastKnownGood config.

- When loading the Assigned NodeConfigSource fails, the failure reason is written to Node ConfigStatus.Error, and Bootstrap then tries to load the Last-Known-Good NodeConfigSource that was read earlier. If that Last-Known-Good NodeConfigSource is empty, Bootstrap returns nil, which means the local config should be loaded.
- Loading the LKG NodeConfigSource follows exactly the same logic as loading the Assigned NodeConfigSource: both call Controller.loadConfig(source checkpoint.RemoteConfigSource) (*kubeletconfig.KubeletConfiguration, string, error).
- If loading the LKG NodeConfigSource succeeds, the LKG NodeConfigSource is written to the Node ConfigStatus.Active field, and Bootstrap returns the KubeletConfiguration loaded from it.
- If loading the LKG NodeConfigSource fails, Bootstrap returns nil and an error.
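The fallback order of Bootstrap (assigned first, then last-known-good, otherwise local) can be condensed into a short sketch. The types, the string-based sources, and the demoLoad loader are hypothetical stand-ins; the real method works on checkpointed sources and also updates the Node ConfigStatus along the way.

```go
package main

import (
	"errors"
	"fmt"
)

// Config is a hypothetical placeholder for a loaded KubeletConfiguration.
type Config struct{ Source string }

// loadFunc loads and validates the checkpoint for a source.
type loadFunc func(source string) (*Config, error)

// Result summarizes what a bootstrap attempt decided.
type Result struct {
	Config *Config // nil means "use the local config"
	Active string  // "" means the local config is active
	Error  string  // reason the assigned config was rejected, if any
}

// bootstrap tries the assigned source, then falls back to last-known-good.
// An empty source string models "no source recorded".
func bootstrap(assigned, lastKnownGood string, load loadFunc) (Result, error) {
	if assigned == "" {
		return Result{}, nil
	}
	cfg, err := load(assigned)
	if err == nil {
		return Result{Config: cfg, Active: assigned}, nil
	}
	res := Result{Error: err.Error()}
	if lastKnownGood == "" {
		return res, nil // fall back to the local config
	}
	cfg, err = load(lastKnownGood)
	if err != nil {
		return res, err // even the last-known-good config is unusable
	}
	res.Config, res.Active = cfg, lastKnownGood
	return res, nil
}

// demoLoad is a stand-in loader: the source named "bad" fails validation.
func demoLoad(source string) (*Config, error) {
	if source == "bad" {
		return nil, errors.New("failed to validate config")
	}
	return &Config{Source: source}, nil
}

func main() {
	res, err := bootstrap("bad", "good", demoLoad)
	fmt.Println(res.Active, res.Error, err)
}
```

Note that only a failure to load the last-known-good configuration is returned as an error. A rejected assigned configuration is recorded in the status instead, so the Kubelet can keep running on an older configuration.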

StartSync

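Bootstrap only handles and loads the checkpointed dynamic config when the Kubelet starts. As a controller, it must also keep syncing with the API server: when the referenced ConfigMap changes, it downloads the new ConfigMap, checkpoints it, and carries out the related control steps.

When the Kubelet runs, it calls KubeletConfigController StartSync to start the main control logic only if all of the following conditions hold:

- The DynamicKubeletConfig feature gate is enabled.
- --dynamic-config-dir is set.
- The KubeletConfigController was successfully added to the Kubelet Dependencies.
- The Kubelet is not running in standalone mode (that is, a valid KubeConfig is configured).
- The Kubelet is not running in runonce mode (that is, --runonce is false or not set).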
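The five conditions combine into a single predicate. The sketch below only restates the list in code; the syncPreconditions struct and the shouldStartSync function are hypothetical names, not identifiers from the kubelet source.

```go
package main

import "fmt"

// syncPreconditions is a hypothetical grouping of the checks listed above.
type syncPreconditions struct {
	FeatureGateEnabled bool   // DynamicKubeletConfig feature gate
	DynamicConfigDir   string // value of --dynamic-config-dir
	HasController      bool   // controller present in the Kubelet Dependencies
	Standalone         bool   // no KubeConfig, so no API server to sync with
	RunOnce            bool   // --runonce
}

// shouldStartSync reports whether the controller's sync loops should start.
func shouldStartSync(p syncPreconditions) bool {
	return p.FeatureGateEnabled &&
		p.DynamicConfigDir != "" &&
		p.HasController &&
		!p.Standalone &&
		!p.RunOnce
}

func main() {
	p := syncPreconditions{
		FeatureGateEnabled: true,
		DynamicConfigDir:   "/var/lib/kubelet/dynamic-config", // assumed example path
		HasController:      true,
	}
	fmt.Println(shouldStartSync(p))

	// A standalone Kubelet has no API server to sync against.
	p.Standalone = true
	fmt.Println(shouldStartSync(p))
}
```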

// StartSync tells the controller to start the goroutines that sync status/config to/from the API server.

// The clients must be non-nil, and the nodeName must be non-empty.

func (cc *Controller) StartSync(client clientset.Interface, eventClient v1core.EventsGetter, nodeName string) error {

const errFmt = "cannot start Kubelet config sync: %s"

if client == nil {

return fmt.Errorf(errFmt, "nil client")

}

if eventClient == nil {

return fmt.Errorf(errFmt, "nil event client")

}

if nodeName == "" {

return fmt.Errorf(errFmt, "empty nodeName")

}

// status sync worker

statusSyncLoopFunc := utilpanic.HandlePanic(func() {

utillog.Infof("starting status sync loop")

wait.JitterUntil(func() {

cc.configStatus.Sync(client, nodeName)

}, 10*time.Second, 0.2, true, wait.NeverStop)

})

// remote config source informer, if we have a remote source to watch

assignedSource, err := cc.checkpointStore.Assigned()

if err != nil {

return fmt.Errorf(errFmt, err)

} else if assignedSource == nil {

utillog.Infof("local source is assigned, will not start remote config source informer")

} else {

cc.remoteConfigSourceInformer = assignedSource.Informer(client, cache.ResourceEventHandlerFuncs{

AddFunc: cc.onAddRemoteConfigSourceEvent,

UpdateFunc: cc.onUpdateRemoteConfigSourceEvent,

DeleteFunc: cc.onDeleteRemoteConfigSourceEvent,

},

)

}

remoteConfigSourceInformerFunc := utilpanic.HandlePanic(func() {

if cc.remoteConfigSourceInformer != nil {

utillog.Infof("starting remote config source informer")

cc.remoteConfigSourceInformer.Run(wait.NeverStop)

}

})

// node informer

cc.nodeInformer = newSharedNodeInformer(client, nodeName,

cc.onAddNodeEvent, cc.onUpdateNodeEvent, cc.onDeleteNodeEvent)

nodeInformerFunc := utilpanic.HandlePanic(func() {

utillog.Infof("starting Node informer")

cc.nodeInformer.Run(wait.NeverStop)

})

// config sync worker

configSyncLoopFunc := utilpanic.HandlePanic(func() {

utillog.Infof("starting Kubelet config sync loop")

wait.JitterUntil(func() {

cc.syncConfigSource(client, eventClient, nodeName)

}, 10*time.Second, 0.2, true, wait.NeverStop)

})

go statusSyncLoopFunc()

go remoteConfigSourceInformerFunc()

go nodeInformerFunc()

go configSyncLoopFunc()

return nil

}

Start Node ConfigStatus Sync Worker

When the KubeletConfigController is created it invokes NewNodeConfigStatus, which at that point sends true into nodeConfigStatus.syncCh so that the first ConfigStatus sync can run.

// Sync attempts to sync the status with the Node object for this Kubelet,

// if syncing fails, an error is logged, and work is queued for retry.

func (s *nodeConfigStatus) Sync(client clientset.Interface, nodeName string) {

select {

case <-s.syncCh:

default:

// no work to be done, return

return

}

utillog.Infof("updating Node.Status.Config")

// grab the lock

s.mux.Lock()

defer s.mux.Unlock()

// if the sync fails, we want to retry

var err error

defer func() {

if err != nil {

utillog.Errorf(err.Error())

s.sync()

}

}()

// get the Node so we can check the current status

oldNode, err := client.CoreV1().Nodes().Get(nodeName, metav1.GetOptions{})

if err != nil {

err = fmt.Errorf("could not get Node %q, will not sync status, error: %v", nodeName, err)

return

}

status := &s.status

// override error, if necessary

if len(s.errorOverride) > 0 {

// copy the status, so we don't overwrite the prior error

// with the override

status = status.DeepCopy()

status.Error = s.errorOverride

}

...

// apply the status to a copy of the node so we don't modify the object in the informer's store

newNode := oldNode.DeepCopy()

newNode.Status.Config = status

// patch the node with the new status

if _, _, err := nodeutil.PatchNodeStatus(client.CoreV1(), types.NodeName(nodeName), oldNode, newNode); err != nil {

utillog.Errorf("failed to patch node status, error: %v", err)

}

}

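- Once started, the ConfigStatus Sync Worker runs in a loop, firing after a random interval in [10s, 12s] each time.
- The worker fetches this node's Node object from the API server and calls the Patch API to write the ConfigStatus to Node.Status.Config.
- If the sync fails, a retry is triggered.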
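The [10s, 12s] interval follows from the 10s period and the 0.2 jitter factor passed to wait.JitterUntil in StartSync. The sketch below reproduces that arithmetic with the standard library only; the jitter function is a hypothetical re-implementation for illustration and is not imported from the Kubernetes wait package.

```go
package main

import (
	"fmt"
	"math/rand"
	"time"
)

// syncPeriod and jitterFactor mirror the arguments used in StartSync.
const (
	syncPeriod   = 10 * time.Second
	jitterFactor = 0.2
)

// jitter returns a duration between d and d + maxFactor*d.
// A non-positive maxFactor is treated as 1.0 in this sketch.
func jitter(d time.Duration, maxFactor float64) time.Duration {
	if maxFactor <= 0 {
		maxFactor = 1.0
	}
	return d + time.Duration(rand.Float64()*maxFactor*float64(d))
}

func main() {
	// Each iteration waits somewhere between 10s and 12s.
	for i := 0; i < 3; i++ {
		fmt.Println(jitter(syncPeriod, jitterFactor))
	}
}
```

Randomizing the interval keeps the Kubelets of a large cluster from hitting the API server in lockstep.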

Start Assigned NodeConfigSource Informer

- The Assigned NodeConfigSource Informer uses a FieldSelector to list the ConfigMap object with the given name. Its resyncPeriod is a random value in [15m, 30m].
- The Add/Update/Delete event handlers it registers are onAddRemoteConfigSourceEvent, onUpdateRemoteConfigSourceEvent, and onDeleteRemoteConfigSourceEvent respectively.
- On Add/Update/Delete, pokeConfigSourceWorker is invoked to send true into the KubeletConfigController.pendingConfigSource channel. This triggers the Config Sync Worker to download the latest ConfigMap from the API server, save it to the checkpoints, and stop the kubelet so that systemd restarts it.
  - Delete is slightly different: both the in-memory check and the download from the API server fail, so only ConfigStatus.Error is updated. Nothing is saved to the checkpoints, meta/assigned is not updated, and the kubelet is not stopped.

Start Node Informer

- The Node Informer uses a FieldSelector to list the Node object whose name matches this node. Its resyncPeriod is a random value in [15m, 30m].
- The Add/Update/Delete event handlers it registers are onAddNodeEvent, onUpdateNodeEvent, and onDeleteNodeEvent respectively.
- On Add/Update, pokeConfigSourceWorker is invoked to send true into the KubeletConfigController.pendingConfigSource channel. This triggers the Config Sync Worker to download the latest ConfigMap from the API server, save it to the checkpoints, and stop the kubelet so that systemd restarts it.
- On Delete, no real action is triggered. For example, none of the subdirectories under {dynamic-config-dir}/store/ are cleared, including checkpoints. So when you run kubectl delete node $nodeName and the Node object is later re-created, onAddNodeEvent triggers pokeConfigSourceWorker to sync the referenced ConfigMap.
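Both informers hand work to the Config Sync Worker through the pendingConfigSource channel. A minimal sketch of that hand-off, assuming a bool channel with a buffer of one, looks like this. The worker type and its methods are hypothetical; only the non-blocking send and the receive-or-return select mirror what syncConfigSource does.

```go
package main

import "fmt"

// worker is a hypothetical stand-in for the controller's sync worker.
type worker struct {
	pending chan bool // buffered with capacity 1
	synced  int       // number of sync iterations that did real work
}

func newWorker() *worker {
	return &worker{pending: make(chan bool, 1)}
}

// poke marks work as pending without blocking. Repeated pokes coalesce
// into a single pending signal.
func (w *worker) poke() {
	select {
	case w.pending <- true:
	default: // a sync is already pending, nothing to add
	}
}

// syncOnce runs one loop iteration and reports whether it did any work.
func (w *worker) syncOnce() bool {
	select {
	case <-w.pending:
	default:
		return false // no work to be done
	}
	w.synced++
	return true
}

func main() {
	w := newWorker()
	// Three events arrive before the worker wakes up.
	w.poke()
	w.poke()
	w.poke()
	fmt.Println(w.syncOnce(), w.syncOnce(), w.synced)
}
```

Event handlers never block on the channel, and a burst of events leads to one sync pass, which is enough because the worker always reads the latest state from the informer store.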

Start Config Sync Worker

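The Config Sync Worker is the core worker of the Dynamic Kubelet Config Controller. It checks whether the configuration has been updated and, if so, downloads the latest configuration, updates the checkpoint store, and exits the kubelet process.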

// syncConfigSource checks if work needs to be done to use a new configuration, and does that work if necessary

func (cc *Controller) syncConfigSource(client clientset.Interface, eventClient v1core.EventsGetter, nodeName string) {

select {

case <-cc.pendingConfigSource:

default:

// no work to be done, return

return

}

// if the sync fails, we want to retry

var syncerr error

defer func() {

if syncerr != nil {

utillog.Errorf(syncerr.Error())

cc.pokeConfigSourceWorker()

}

}()

// get the latest Node.Spec.ConfigSource from the informer

source, err := latestNodeConfigSource(cc.nodeInformer.GetStore(), nodeName)

if err != nil {

cc.configStatus.SetErrorOverride(fmt.Sprintf(status.SyncErrorFmt, status.InternalError))

syncerr = fmt.Errorf("%s, error: %v", status.InternalError, err)

return

}

// a nil source simply means we reset to local defaults

if source == nil {

utillog.Infof("Node.Spec.ConfigSource is empty, will reset assigned and last-known-good to defaults")

if updated, reason, err := cc.resetConfig(); err != nil {

reason = fmt.Sprintf(status.SyncErrorFmt, reason)

cc.configStatus.SetErrorOverride(reason)

syncerr = fmt.Errorf("%s, error: %v", reason, err)

return

} else if updated {

restartForNewConfig(eventClient, nodeName, nil)

}

return

}

// construct the interface that can dynamically dispatch the correct Download, etc. methods for the given source type

remote, reason, err := checkpoint.NewRemoteConfigSource(source)

if err != nil {

reason = fmt.Sprintf(status.SyncErrorFmt, reason)

cc.configStatus.SetErrorOverride(reason)

syncerr = fmt.Errorf("%s, error: %v", reason, err)

return

}

// "download" source, either from informer's in-memory store or directly from the API server, if the informer doesn't have a copy

payload, reason, err := cc.downloadConfigPayload(client, remote)

if err != nil {

reason = fmt.Sprintf(status.SyncErrorFmt, reason)

cc.configStatus.SetErrorOverride(reason)

syncerr = fmt.Errorf("%s, error: %v", reason, err)

return

}

// save a checkpoint for the payload, if one does not already exist

if reason, err := cc.saveConfigCheckpoint(remote, payload); err != nil {

reason = fmt.Sprintf(status.SyncErrorFmt, reason)

cc.configStatus.SetErrorOverride(reason)

syncerr = fmt.Errorf("%s, error: %v", reason, err)

return

}

// update the local, persistent record of assigned config

if updated, reason, err := cc.setAssignedConfig(remote); err != nil {

reason = fmt.Sprintf(status.SyncErrorFmt, reason)

cc.configStatus.SetErrorOverride(reason)

syncerr = fmt.Errorf("%s, error: %v", reason, err)

return

} else if updated {

restartForNewConfig(eventClient, nodeName, remote)

}

cc.configStatus.SetErrorOverride("")

}

- Once started, the Config Sync Worker runs in a loop, firing after a random interval in [10s, 12s] each time.
- It gets the Node's latest NodeConfigSource (.Spec.ConfigSource) from the NodeInformer. If an error occurs, the error is written to ConfigStatus.Error and the worker returns. No configuration rollback is triggered.
- If the latest NodeConfigSource is empty, resetConfig is invoked to set assigned and last-known-good in the local checkpoint store to empty. If assigned was non-empty before that, restartForNewConfig is invoked to exit the kubelet process and report an Event.
  - The event is built with makeEvent(nodeName, apiv1.EventTypeNormal, KubeletConfigChangedEventReason, message), where message is "Kubelet restarting to use local config".
  - When systemd restarts the kubelet, KubeletConfigController.Bootstrap returns an empty KubeletConfiguration, so the KubeletConfig is determined by --config.
- If the latest NodeConfigSource is non-empty, downloadConfigPayload is invoked to fetch the corresponding ConfigMap from the local in-memory store or the API server, wrap it in a checkpoint.configMapPayload object, and update the UID and ResourceVersion of the NodeConfigSource object.
- Then saveConfigCheckpoint is invoked:
  - It first checks whether the {dynamic-config-dir}/store/checkpoints/{ReferencedConfigMap-UID}/{ReferencedConfigMap-ResourceVersion}/ directory for this NodeConfigSource exists. If it does, the checkpoint already exists and saveConfigCheckpoint returns.
  - Otherwise, checkpointStore.Save is invoked to create the {dynamic-config-dir}/store/checkpoints/{ReferencedConfigMap-UID}/{ReferencedConfigMap-ResourceVersion}/ directory and save each value string in ConfigMap.data (map[string]string) to a file named after its key. We usually use kubelet as the key, so the content is saved to {dynamic-config-dir}/store/checkpoints/{ReferencedConfigMap-UID}/{ReferencedConfigMap-ResourceVersion}/kubelet.
- After saveConfigCheckpoint succeeds, setAssignedConfig is invoked to update the checkpointStore Assigned record (the {dynamic-config-dir}/store/meta/assigned file). If the content of assigned actually changed, the kubelet process exits.
- If an error occurs in any of the steps above, the sync has failed and a retry is triggered.
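The restart decision in the steps above can be modeled with an in-memory store. This is a sketch under simplifying assumptions: the Source and store types and the syncSource method are hypothetical, the payload is a plain string, and download errors and event reporting are left out.

```go
package main

import "fmt"

// Source identifies one revision of a ConfigMap (hypothetical, simplified).
type Source struct {
	UID             string
	ResourceVersion string
}

// store is an in-memory stand-in for the on-disk checkpoint store.
type store struct {
	checkpoints   map[Source]string // payload saved per checkpoint
	assigned      *Source
	lastKnownGood *Source
}

func newStore() *store {
	return &store{checkpoints: map[Source]string{}}
}

// syncSource applies the latest source from the Node object and reports
// whether the Kubelet should exit so that it restarts with the new config.
func (s *store) syncSource(src *Source, payload string) (restart bool) {
	if src == nil {
		// Reset to local config: clear both records, restart only if
		// something was assigned before.
		changed := s.assigned != nil
		s.assigned, s.lastKnownGood = nil, nil
		return changed
	}
	// Save a checkpoint only if one does not already exist.
	if _, ok := s.checkpoints[*src]; !ok {
		s.checkpoints[*src] = payload
	}
	// Restart only if the assigned record actually changes.
	if s.assigned != nil && *s.assigned == *src {
		return false
	}
	copied := *src
	s.assigned = &copied
	return true
}

func main() {
	s := newStore()
	v1 := &Source{UID: "example-uid", ResourceVersion: "100"}
	fmt.Println(s.syncSource(v1, "maxPods: 110")) // new assignment
	fmt.Println(s.syncSource(v1, "maxPods: 110")) // same assignment again
	fmt.Println(s.syncSource(nil, ""))            // reset to local config
}
```

Tying the restart to a change of the assigned record, not to every event, is what keeps resyncs and duplicate informer events from restarting the Kubelet needlessly.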

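Current Limitations

Kubelet Dynamic Config has not yet reached GA in Kubernetes 1.13 (the feature is beta), and several key issues remain unsolved:

- There is no native ability to roll configuration out gradually across the cluster. Users must build their own automation for canarying node configuration. If all Nodes reference the same Kubelet ConfigMap, a bad change to that ConfigMap may make the cluster unavailable for a short time. What is missing is:
  - the ability to roll out to all Nodes in batches, or
  - the ability to roll out to all Nodes in a rolling fashion.
- There is not yet a clearly defined set of settings that can be changed safely and reliably through Kubelet Dynamic Config. In general, we can consult the annotations on the KubeletConfiguration definition at staging/src/k8s.io/kubelet/config/v1beta1/types.go:62 to learn about the dynamic config fields, but it is still advisable to test changes in a test cluster before rolling them out to production through Dynamic Config.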
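Since batched rollout is left to the user, one possible starting point is to split the node list into fixed-size batches and point each batch at the new ConfigMap in turn, checking Node.Status.Config before moving on. The sketch below covers only the batching step. The batches helper and the node names are hypothetical and not part of Kubernetes.

```go
package main

import "fmt"

// batches splits nodes into consecutive groups of at most size elements.
// It returns nil when size is not positive.
func batches(nodes []string, size int) [][]string {
	if size <= 0 {
		return nil
	}
	var out [][]string
	for start := 0; start < len(nodes); start += size {
		end := start + size
		if end > len(nodes) {
			end = len(nodes)
		}
		out = append(out, nodes[start:end])
	}
	return out
}

func main() {
	nodes := []string{"node-1", "node-2", "node-3", "node-4", "node-5"}
	for i, batch := range batches(nodes, 2) {
		// In a real rollout, each batch would be updated and verified
		// before the next one is touched.
		fmt.Println("batch", i+1, batch)
	}
}
```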

Source: oschina

Link: https://my.oschina.net/u/104700/blog/2995653