Intro

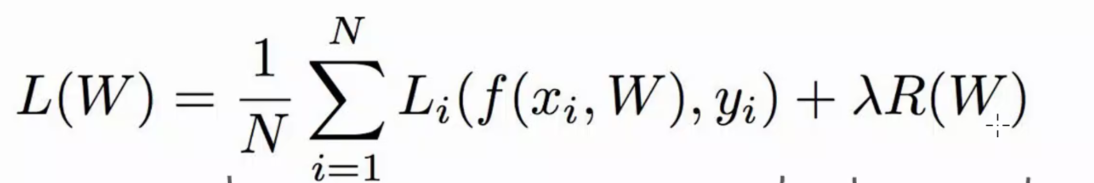

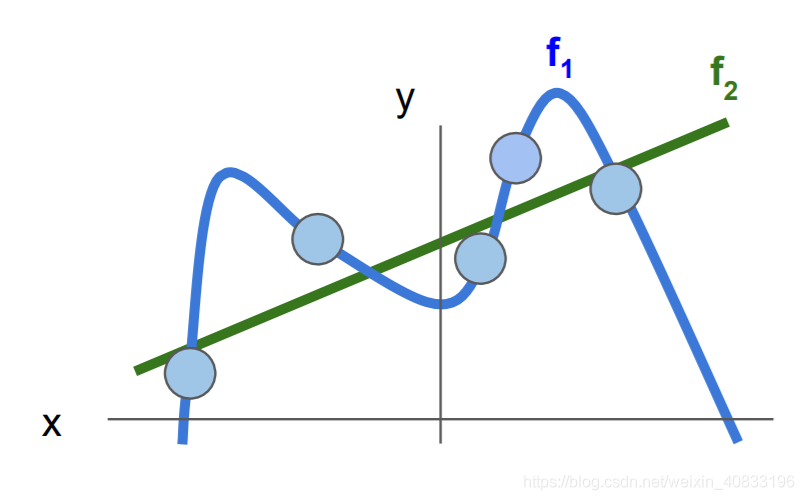

Regularization is an additional term in the loss function (the optimization objective) that prevents the model from fitting the training data too well, i.e. from overfitting.
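Written out, the regularized loss is usually split into a data term and a penalty term. The form below follows the common cs231n convention. It is assumed here, since the figures are not reproduced: $N$ training examples, per-example loss $L_i$, score function $f(x_i, W)$, and regularization strength $\lambda$.

$$
L(W) = \underbrace{\frac{1}{N}\sum_{i=1}^{N} L_i\big(f(x_i, W), y_i\big)}_{\text{data loss}} + \underbrace{\lambda\, R(W)}_{\text{regularization loss}},
\qquad
R_{L2}(W) = \sum_{k}\sum_{l} W_{k,l}^2,
\qquad
R_{L1}(W) = \sum_{k}\sum_{l} \lvert W_{k,l} \rvert
$$

A larger $\lambda$ puts more weight on keeping $W$ small and less on fitting the training data.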

Formulation

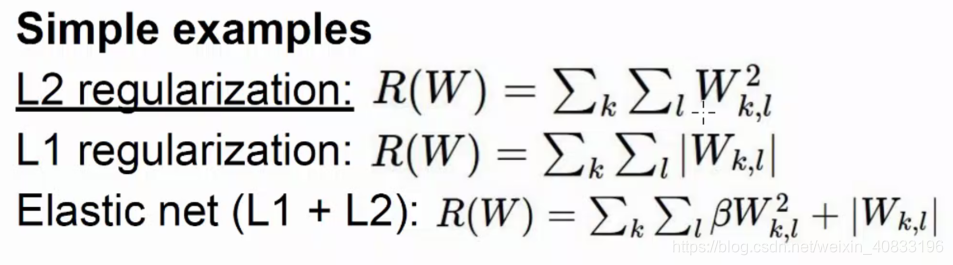

Intuitively, the regularization term prefers smaller weights W, which pushes the optimizer toward a simpler model.
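To see the "smaller W" preference numerically, here is a minimal sketch. It assumes a linear model with a mean-squared-error data loss, which stands in for whatever data loss the figure shows. The helper names `l1_penalty`, `l2_penalty`, `regularized_loss` and `ridge_solution` are hypothetical. With the L2 penalty the minimizer has a closed form (ridge regression), so we can watch the weight norm shrink as `lam` grows.

```python
import numpy as np

def l1_penalty(W):
    # R(W) = sum of absolute values of all weights
    return np.sum(np.abs(W))

def l2_penalty(W):
    # R(W) = sum of squared weights (squared L2 norm)
    return np.sum(W * W)

def regularized_loss(W, X, y, lam, penalty=l2_penalty):
    # Data loss: mean squared error of a linear model (assumed for illustration)
    data_loss = np.mean((X @ W - y) ** 2)
    return data_loss + lam * penalty(W)

def ridge_solution(X, y, lam):
    # Closed-form minimizer of mean((X W - y)^2) + lam * ||W||_2^2:
    # setting the gradient to zero gives (X^T X / n + lam * I) W = X^T y / n
    n, d = X.shape
    return np.linalg.solve(X.T @ X / n + lam * np.eye(d), X.T @ y / n)

# Synthetic data (hypothetical values, only for demonstration)
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 5))
true_W = np.array([3.0, -2.0, 0.5, 0.0, 1.0])
y = X @ true_W + 0.1 * rng.normal(size=50)

norms = []
for lam in [0.0, 0.1, 1.0, 10.0]:
    W = ridge_solution(X, y, lam)
    norms.append(np.linalg.norm(W))
    print(f"lambda={lam:5.1f}  ||W||_2={norms[-1]:.3f}  "
          f"loss={regularized_loss(W, X, y, lam):.3f}")
```

The printed norms decrease as `lam` increases. The stronger the penalty, the more the optimum trades training fit for smaller weights.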

Intuition

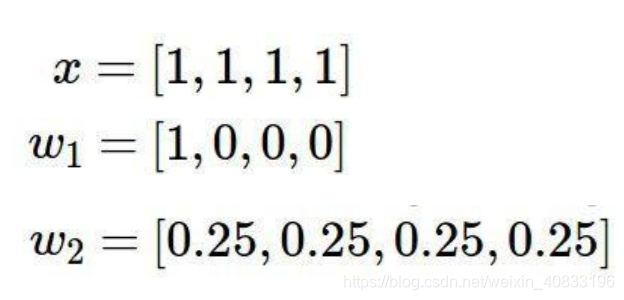

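Choosing the L1 norm tends to produce sparse, "spiky" weights (a few large entries, the rest exactly zero), while choosing the L2 norm tends to produce a flat vector with the weight spread evenly across all features.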
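A small sketch of this contrast follows. The first part reproduces the cs231n-style example, with input `x` and candidate weights `w1`, `w2` assumed here because the figure is not shown. Both weight vectors give the same score, yet the L2 penalty prefers the spread-out `w2`. The L1 penalty ties on this pair. Its sparsity shows up during optimization instead: the proximal step for L1 (soft-thresholding) sets small weights exactly to zero, while the one for squared L2 only rescales them. `prox_l1` and `prox_l2` are hypothetical helper names.

```python
import numpy as np

x = np.array([1.0, 1.0, 1.0, 1.0])
w1 = np.array([1.0, 0.0, 0.0, 0.0])      # "spiky": all weight on one feature
w2 = np.array([0.25, 0.25, 0.25, 0.25])  # "flat": weight spread evenly

# Same score for both, so the data loss alone cannot tell them apart
print(w1 @ x, w2 @ x)                          # 1.0 1.0
print(np.sum(np.abs(w1)), np.sum(np.abs(w2)))  # L1 penalty: 1.0 1.0 (tie)
print(np.sum(w1 ** 2), np.sum(w2 ** 2))        # L2 penalty: 1.0 0.25 (prefers w2)

def prox_l1(w, t):
    # Proximal step for t * ||w||_1: soft-thresholding.
    # Entries with |w_i| <= t become exactly zero.
    return np.sign(w) * np.maximum(np.abs(w) - t, 0.0)

def prox_l2(w, t):
    # Proximal step for t * ||w||_2^2: argmin_z 0.5*||z - w||^2 + t*||z||^2,
    # i.e. a uniform rescaling that never produces exact zeros.
    return w / (1.0 + 2.0 * t)

w = np.array([0.9, 0.05, -0.03, 0.4])
print(prox_l1(w, 0.1))  # small entries driven to zero -> sparse
print(prox_l2(w, 0.1))  # every entry shrunk, none zero -> dense
```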

Pictures from: cs231n

Source: CSDN

Author: George1998

Link: https://blog.csdn.net/weixin_40833196/article/details/104639596