KNN

Computing the dists matrix with no_loop:

See: no-loop matrix-multiplication code for KNN
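The no-loop version rests on the expansion ||a - b||^2 = ||a||^2 + ||b||^2 - 2 a·b. This turns the whole test-by-train distance matrix into one matrix product plus broadcasting. Below is a minimal sketch, assuming test and training data are stored row-wise as (M, D) and (N, D) arrays. The function name follows the cs231n assignment's `compute_distances_no_loops`, but this standalone version is illustrative and is not the author's code.

```python
import numpy as np

def compute_distances_no_loops(X_test, X_train):
    """Euclidean distance between every test row and every training row.

    Illustrative sketch; assumes X_test is (M, D) and X_train is (N, D).
    Uses ||a - b||^2 = ||a||^2 + ||b||^2 - 2 a.b, so no Python loops are needed.
    """
    test_sq = np.sum(X_test ** 2, axis=1, keepdims=True)  # shape (M, 1)
    train_sq = np.sum(X_train ** 2, axis=1)               # shape (N,)
    cross = X_test @ X_train.T                            # shape (M, N)
    sq_dists = test_sq + train_sq - 2 * cross             # broadcasts to (M, N)
    # Rounding can push tiny values slightly below zero; clip before sqrt.
    return np.sqrt(np.maximum(sq_dists, 0.0))

# Usage on small random data.
rng = np.random.default_rng(0)
X_test = rng.standard_normal((5, 3))
X_train = rng.standard_normal((7, 3))
dists = compute_distances_no_loops(X_test, X_train)
```

The trade-off is memory for speed. The (M, N) cross term is materialized in one BLAS call instead of M * N small Python-level subtractions.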

Loss functions

Usage of ndim, shape, dtype, and astype in NumPy
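A quick illustration of these four, using a small example array chosen here for demonstration:

```python
import numpy as np

# Example array chosen for illustration only.
a = np.array([[1, 2, 3], [4, 5, 6]])

print(a.ndim)    # 2: number of axes
print(a.shape)   # (2, 3): length along each axis
print(a.dtype)   # an integer dtype; the exact width is platform dependent

# astype returns a new array (a copy by default) with the requested dtype.
b = a.astype(np.float32)
print(b.dtype)   # float32
```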

Python NumPy functions: hstack(), vstack(), stack(), dstack(), vsplit(), concatenate()
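The functions listed above differ mainly in which axis they join or split along. The sketch below shows the resulting shapes for two small example 1-D arrays:

```python
import numpy as np

# Two small example arrays, chosen for illustration.
a = np.array([1, 2, 3])
b = np.array([4, 5, 6])

h = np.hstack((a, b))               # 1-D inputs are joined end to end: shape (6,)
v = np.vstack((a, b))               # each input becomes a row: shape (2, 3)
s0 = np.stack((a, b))               # new leading axis: shape (2, 3)
s1 = np.stack((a, b), axis=1)       # new trailing axis: shape (3, 2)
d = np.dstack((a, b))               # joined along a third axis: shape (1, 3, 2)
c = np.concatenate((v, v), axis=0)  # joins along an existing axis: shape (4, 3)
top, bottom = np.vsplit(c, 2)       # splits along axis 0 into two (2, 3) pieces
```

`stack` always creates a new axis, while `concatenate` (and `hstack`/`vstack` for 2-D inputs) reuses an existing one.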

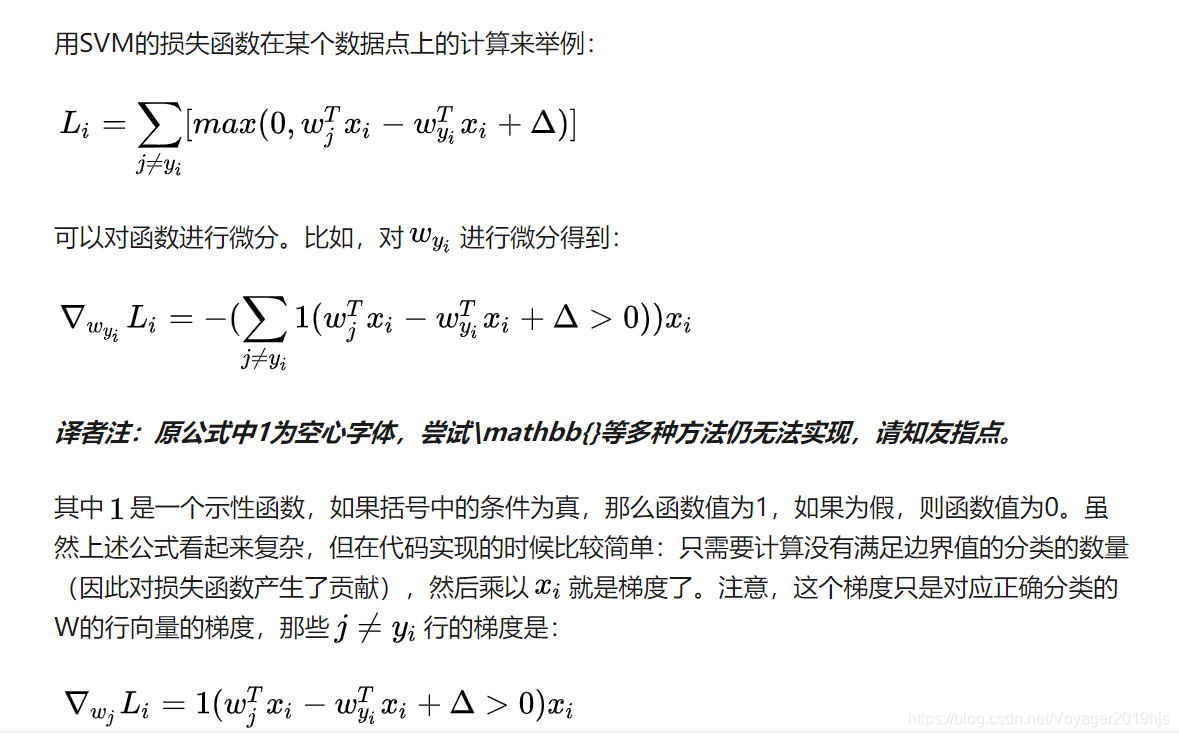

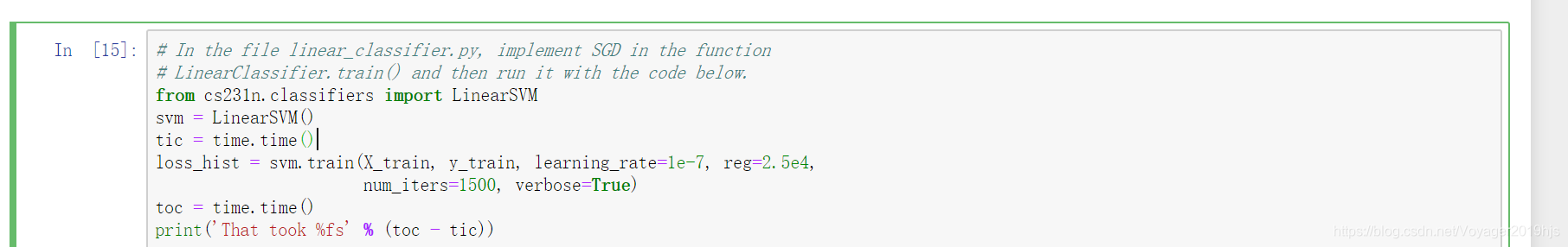

SVM

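    import numpy as np

    # Fragment of svm_loss_naive(W, X, y, reg): W is (D, C), X is (N, D), y holds labels
    num_train = X.shape[0]
    num_classes = W.shape[1]
    loss = 0.0
    dW = np.zeros(W.shape)  # gradient of the loss with respect to W
    for i in range(num_train):
        scores = X[i].dot(W)  # scores of the i-th training example for each of the 10 classes
        correct_score = scores[y[i]]  # score of the correct class
        for j in range(num_classes):
            if j == y[i]:
                continue
            margin = scores[j] - correct_score + 1  # note delta = 1
            if margin > 0:  # correct class not ahead by at least delta: add to the loss
                loss += margin
                dW[:, j] += X[i]  # obtained by differentiating the margin w.r.t. W[:, j]
                dW[:, y[i]] -= X[i]  # ... and w.r.t. W[:, y[i]]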
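The two `dW` lines come from differentiating the hinge loss. Below is the per-example form, written with $s = x_i W$ (the `scores` row in the loop) and $\Delta = 1$. This is the standard form from the cs231n notes, restated here rather than transcribed from the author's figures:

$$L_i = \sum_{j \ne y_i} \max\left(0,\; s_j - s_{y_i} + \Delta\right)$$

$$\nabla_{W_{:,j}} L_i = \mathbb{1}\left(s_j - s_{y_i} + \Delta > 0\right) x_i^{\top} \qquad (j \ne y_i)$$

$$\nabla_{W_{:,y_i}} L_i = -\Big(\sum_{j \ne y_i} \mathbb{1}\left(s_j - s_{y_i} + \Delta > 0\right)\Big)\, x_i^{\top}$$

Every violated margin therefore adds $x_i$ to column $j$ and subtracts $x_i$ from column $y_i$, which is what the two `dW` updates do.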

For how this part can be optimized, see the figures below from the official cs231n notes.

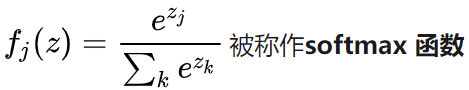

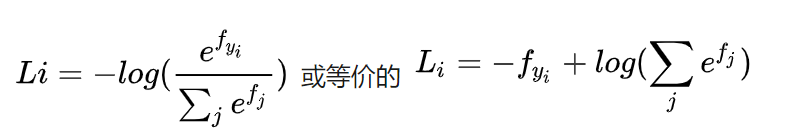
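The same loss and gradient can also be computed without explicit loops. Below is a minimal vectorized sketch. It assumes the same shapes as the loop (W is (D, C), X is (N, D), y holds integer labels), delta = 1, and the `0.5 * reg * np.sum(W * W)` regularizer used at the end of this post. The name `svm_loss_vectorized` follows the assignment's naming, but this version is illustrative and is not the author's code.

```python
import numpy as np

def svm_loss_vectorized(W, X, y, reg):
    """Multiclass hinge loss (delta = 1) and its gradient, no explicit loops.

    Illustrative sketch; assumes W is (D, C), X is (N, D), y is (N,) ints,
    and an L2 term of 0.5 * reg * sum(W * W).
    """
    num_train = X.shape[0]
    rows = np.arange(num_train)
    scores = X @ W                                     # (N, C)
    correct = scores[rows, y][:, None]                 # (N, 1) correct-class scores
    margins = np.maximum(0, scores - correct + 1)      # hinge for every class
    margins[rows, y] = 0                               # skip j == y[i]
    loss = margins.sum() / num_train + 0.5 * reg * np.sum(W * W)

    # Each positive margin adds +x_i to column j and -x_i to column y_i.
    mask = (margins > 0).astype(X.dtype)               # (N, C), zeros at y[i]
    mask[rows, y] = -mask.sum(axis=1)                  # minus the violation count
    dW = X.T @ mask / num_train + reg * W
    return loss, dW

# Usage on small random data.
rng = np.random.default_rng(0)
W = 0.01 * rng.standard_normal((4, 3))
X = rng.standard_normal((6, 4))
y = rng.integers(0, 3, size=6)
loss, dW = svm_loss_vectorized(W, X, y, reg=0.1)
```

The `mask` matrix encodes the gradient formula directly. Multiplying it by `X.T` sums the per-example contributions in one step.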

Softmax

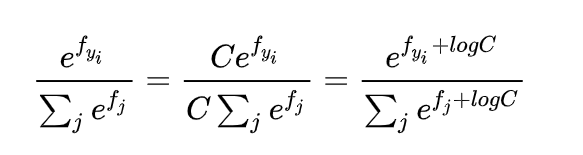

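The exponentials in the numerator (and denominator) are often very large, so computing them directly risks numeric overflow. Multiplying both the numerator and the denominator by a constant C leaves the fraction unchanged.

A common choice is log C = -max_j f_j, which shifts the scores so that the largest one becomes 0.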
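The effect of this shift can be seen on scores large enough to overflow `np.exp`. The sketch below compares a direct softmax with a shifted one. The scores 123, 456, 789 are example values in the style of the cs231n notes, and the helper names are hypothetical.

```python
import numpy as np

def softmax_naive(f):
    # Hypothetical helper: direct formula, overflows for large scores.
    e = np.exp(f)
    return e / np.sum(e)

def softmax_stable(f):
    # Hypothetical helper: multiplying by C = exp(-max(f)) is the same as
    # subtracting max(f) from every score, so the largest shifted score is 0.
    e = np.exp(f - np.max(f))
    return e / np.sum(e)

f = np.array([123.0, 456.0, 789.0])   # example scores
with np.errstate(over="ignore", invalid="ignore"):
    p_naive = softmax_naive(f)        # exp(789) overflows to inf; inf / inf gives nan
p_stable = softmax_stable(f)          # finite, sums to 1

g = np.array([1.0, 2.0, 3.0])         # small scores: both versions agree
```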

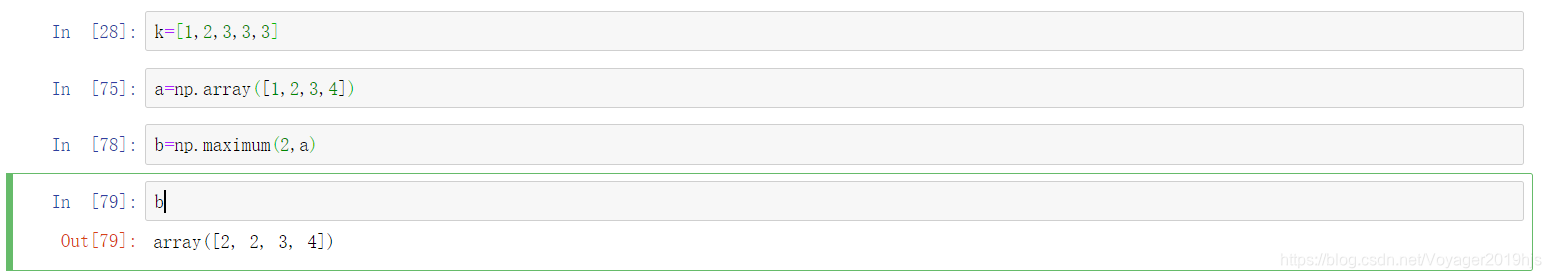

The code here:

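    import numpy as np

    # Fragment of softmax_loss_naive(W, X, y, reg): W is (D, C), X is (N, D), y holds labels
    num_train = X.shape[0]
    num_classes = W.shape[1]
    loss = 0.0
    dW = np.zeros_like(W)  # gradient of the loss with respect to W
    for i in range(num_train):
        scores = X[i].dot(W)
        # for numerical stability, subtract the maximum score
        scores -= np.max(scores)
        exp_scores = np.exp(scores)
        prob = exp_scores / np.sum(exp_scores)
        for j in range(num_classes):
            if j == y[i]:
                correct_class_prob = prob[j]
                correct_class_logprob = -np.log(correct_class_prob)
                loss += correct_class_logprob
                dW[:, j] += (correct_class_prob - 1) * X[i, :]
            # I left out this else at first and could not find the bug for a long time
            else:
                dW[:, j] += prob[j] * X[i, :]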
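The if/else above amounts to subtracting 1 from the probability of the correct class. That makes a loop-free version straightforward. Below is a minimal sketch, assuming the same shapes as the loop and the `0.5 * reg * np.sum(W * W)` regularizer used later in this post. The name `softmax_loss_vectorized` follows the assignment, but this version is illustrative and is not the author's code.

```python
import numpy as np

def softmax_loss_vectorized(W, X, y, reg):
    """Softmax (cross-entropy) loss and gradient without explicit loops.

    Illustrative sketch; assumes W is (D, C), X is (N, D), y is (N,) ints,
    and an L2 term of 0.5 * reg * sum(W * W).
    """
    num_train = X.shape[0]
    rows = np.arange(num_train)
    scores = X @ W
    scores -= scores.max(axis=1, keepdims=True)    # per-row shift for stability
    exp_scores = np.exp(scores)
    prob = exp_scores / exp_scores.sum(axis=1, keepdims=True)  # (N, C)
    loss = -np.log(prob[rows, y]).sum() / num_train
    loss += 0.5 * reg * np.sum(W * W)
    dscores = prob.copy()
    dscores[rows, y] -= 1                          # the "- 1" branch of the loop
    dW = X.T @ dscores / num_train + reg * W
    return loss, dW

# Usage on small random data.
rng = np.random.default_rng(0)
W = 0.01 * rng.standard_normal((4, 3))
X = rng.standard_normal((6, 4))
y = rng.integers(0, 3, size=6)
loss, dW = softmax_loss_vectorized(W, X, y, reg=0.1)
```

A quick sanity check: with all-zero weights every class gets probability 1/C, so the data loss is log C (about 2.3 for 10 classes).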

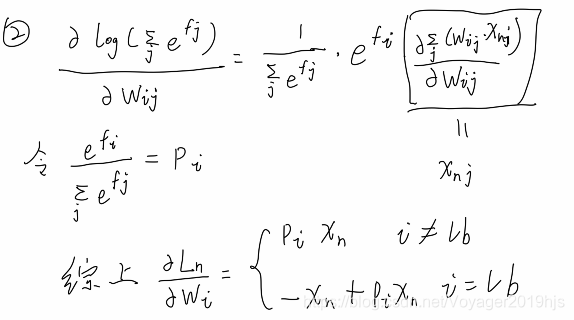

The code above computes the gradient of the softmax loss.

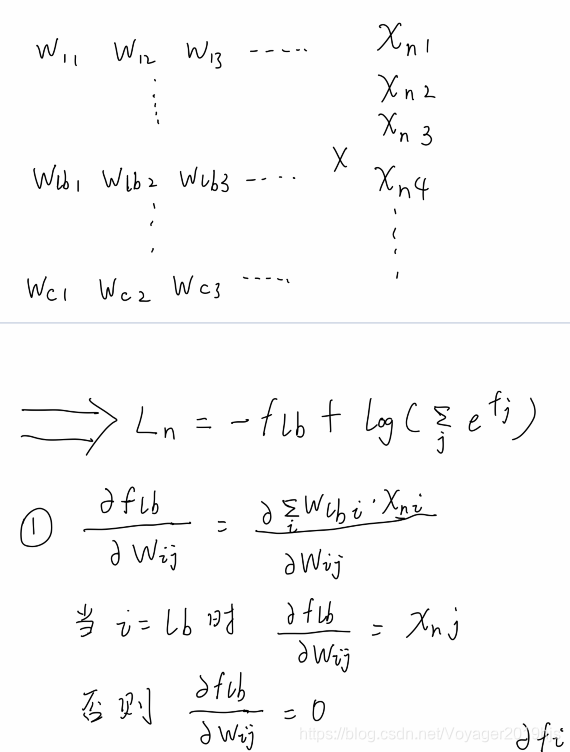

Below is the gradient derivation.
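Below is a written-out version of the derivation, in the notation of the loop: $s = x_i W$ is the `scores` row, $p$ is `prob`, and $\lambda$ is `reg`. This is the standard cross-entropy gradient, restated here rather than transcribed from the figure:

$$p_k = \frac{e^{s_k}}{\sum_m e^{s_m}}, \qquad L_i = -\log p_{y_i} = -s_{y_i} + \log \sum_m e^{s_m}$$

$$\frac{\partial L_i}{\partial s_j} = -\mathbb{1}(j = y_i) + \frac{e^{s_j}}{\sum_m e^{s_m}} = p_j - \mathbb{1}(j = y_i)$$

$$\frac{\partial L_i}{\partial W_{:,j}} = \frac{\partial L_i}{\partial s_j}\,\frac{\partial s_j}{\partial W_{:,j}} = \big(p_j - \mathbb{1}(j = y_i)\big)\, x_i^{\top}, \qquad s_j = x_i W_{:,j}$$

$$L = \frac{1}{N}\sum_{i=1}^{N} L_i + \frac{\lambda}{2}\sum W^2 \quad\Longrightarrow\quad \nabla_W L = \frac{1}{N}\sum_{i=1}^{N} \nabla_W L_i + \lambda W$$

The $j = y_i$ case gives the `(correct_class_prob - 1) * X[i, :]` branch, and every other class gives `prob[j] * X[i, :]`.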

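    # average over the training set, then add L2 regularization
    loss /= num_train
    loss += 0.5 * reg * np.sum(W * W)
    dW /= num_train
    dW += reg * W  # derivative of 0.5 * reg * np.sum(W * W)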

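dW is the derivative of the loss with respect to W. In the code, each dW update corresponds line by line to the loss update above it: both are divided by num_train, and the regularization term 0.5 * reg * np.sum(W * W) contributes reg * W to dW.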
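Because dW should be exactly the derivative of the loss, a common way to verify it is to compare it with a centered finite-difference estimate. The sketch below does this for a compact softmax loss. Both helper names (`softmax_loss`, `numerical_gradient`) and the step size `h = 1e-5` are assumptions made for illustration.

```python
import numpy as np

def softmax_loss(W, X, y, reg):
    # Hypothetical compact softmax loss used only as the function under test.
    scores = X @ W
    scores -= scores.max(axis=1, keepdims=True)
    prob = np.exp(scores)
    prob /= prob.sum(axis=1, keepdims=True)
    n = X.shape[0]
    loss = -np.log(prob[np.arange(n), y]).mean() + 0.5 * reg * np.sum(W * W)
    prob[np.arange(n), y] -= 1
    dW = X.T @ prob / n + reg * W   # reg * W is the derivative of 0.5 * reg * sum(W * W)
    return loss, dW

def numerical_gradient(f, W, h=1e-5):
    # Hypothetical helper: centered difference (f(W + h) - f(W - h)) / 2h per entry.
    grad = np.zeros_like(W)
    for idx in np.ndindex(W.shape):
        old = W[idx]
        W[idx] = old + h
        f_plus = f(W)
        W[idx] = old - h
        f_minus = f(W)
        W[idx] = old                # restore the entry
        grad[idx] = (f_plus - f_minus) / (2 * h)
    return grad

rng = np.random.default_rng(0)
W = 0.01 * rng.standard_normal((4, 3))
X = rng.standard_normal((6, 4))
y = rng.integers(0, 3, size=6)

_, dW = softmax_loss(W, X, y, reg=0.1)
num = numerical_gradient(lambda W_: softmax_loss(W_, X, y, 0.1)[0], W)
rel_error = np.max(np.abs(dW - num) / np.maximum(1e-8, np.abs(dW) + np.abs(num)))
```

A small `rel_error` means the analytic dW matches the loss it was derived from. A large one usually points to a mismatch like the missing `else` described above.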

Source: CSDN

Author: Shane-HuangCN

Link: https://blog.csdn.net/Voyager2019hjs/article/details/104286598