源码如下:

1 import jieba

2 import io

3 import re

4

5 #jieba.load_userdict("E:/xinxi2.txt")

6 patton=re.compile(r'..')

7

8 #添加字典

9 def add_dict():

10 f=open("E:/xinxi2.txt","r+",encoding="utf-8") #百度爬取的字典

11 for line in f:

12 jieba.suggest_freq(line.rstrip("\n"), True)

13 f.close()

14

15 #对句子进行分词

16 def cut():

17 number=0

18 f=open("E:/luntan.txt","r+",encoding="utf-8") #要处理的内容,所爬信息,CSDN论坛标题

19 for line in f:

20 line=seg_sentence(line.rstrip("\n"))

21 seg_list=jieba.cut(line)

22 for i in seg_list:

23 print(i) #打印词汇内容

24 m=patton.findall(i)

25 #print(len(m)) #打印字符长度

26 if len(m)!=0:

27 write(i.strip()+" ")

28 line=line.rstrip().lstrip()

29 print(len(line))#打印句子长度

30 if len(line)>1:

31 write("\n")

32 number+=1

33 print("已处理",number,"行")

34

35 #分词后写入

36 def write(contents):

37 f=open("E://luntan_cut2.txt","a+",encoding="utf-8") #要写入的文件

38 f.write(contents)

39 #print("写入成功!")

40 f.close()

41

42 #创建停用词

43 def stopwordslist(filepath):

44 stopwords = [line.strip() for line in open(filepath, 'r', encoding='utf-8').readlines()]

45 return stopwords

46

47 # 对句子进行去除停用词

48 def seg_sentence(sentence):

49 sentence_seged = jieba.cut(sentence.strip())

50 stopwords = stopwordslist('E://stop.txt') # 这里加载停用词的路径

51 outstr = ''

52 for word in sentence_seged:

53 if word not in stopwords:

54 if word != '\t':

55 outstr += word

56 #outstr += " "

57 return outstr

58

59 #循环去除、无用函数

60 def cut_all():

61 inputs = open('E://luntan_cut.txt', 'r', encoding='utf-8')

62 outputs = open('E//luntan_stop.txt', 'a')

63 for line in inputs:

64 line_seg = seg_sentence(line) # 这里的返回值是字符串

65 outputs.write(line_seg + '\n')

66 outputs.close()

67 inputs.close()

68

69 if __name__=="__main__":

70 add_dict()

71 cut()luntan.txt的来源,地址:https://www.cnblogs.com/zlc364624/p/12285055.html

其中停用词可自行百度下载,或者自己创建一个txt文件夹,自行添加词汇用换行符隔开。

百度爬取的字典在前几期博客中可以找到,地址:https://www.cnblogs.com/zlc364624/p/12289008.html

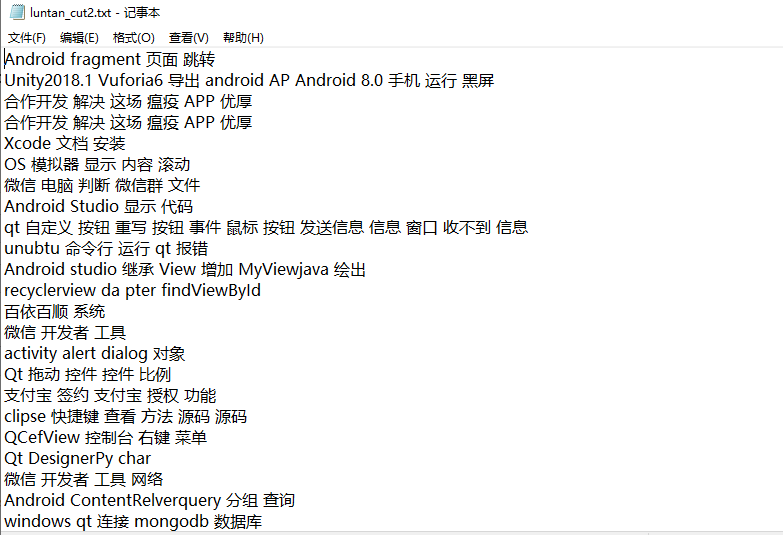

效果如下:

import jiebaimport ioimport re#jieba.load_userdict("E:/xinxi2.txt")patton=re.compile(r'..')#添加字典def add_dict(): f=open("E:/xinxi2.txt","r+",encoding="utf-8") #百度爬取的字典for line in f: jieba.suggest_freq(line.rstrip("\n"), True) f.close()#对句子进行分词def cut(): number=0f=open("E:/luntan.txt","r+",encoding="utf-8") #要处理的内容,所爬信息,CSDN论坛标题for line in f: line=seg_sentence(line.rstrip("\n")) seg_list=jieba.cut(line) for i in seg_list: print(i) #打印词汇内容m=patton.findall(i) #print(len(m)) #打印字符长度if len(m)!=0: write(i.strip()+" ") line=line.rstrip().lstrip() print(len(line))#打印句子长度if len(line)>1: write("\n") number+=1print("已处理",number,"行")#分词后写入def write(contents): f=open("E://luntan_cut2.txt","a+",encoding="utf-8") #要写入的文件f.write(contents) #print("写入成功!")f.close()#创建停用词def stopwordslist(filepath): stopwords = [line.strip() for line in open(filepath, 'r', encoding='utf-8').readlines()] return stopwords# 对句子进行去除停用词def seg_sentence(sentence): sentence_seged = jieba.cut(sentence.strip()) stopwords = stopwordslist('E://stop.txt') # 这里加载停用词的路径outstr = ''for word in sentence_seged: if word not in stopwords: if word != '\t': outstr += word #outstr += " "return outstr#循环去除、无用函数def cut_all(): inputs = open('E://luntan_cut.txt', 'r', encoding='utf-8') outputs = open('E//luntan_stop.txt', 'a') for line in inputs: line_seg = seg_sentence(line) # 这里的返回值是字符串outputs.write(line_seg + '\n') outputs.close() inputs.close()if __name__=="__main__": add_dict() cut()来源:https://www.cnblogs.com/zlc364624/p/12289643.html