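NLP: Natural Language Processing

Text Similarity Analysis

Text similarity analysis means picking out similar items from a large volume of data (articles, comments).

The steps are as follows:

1: Translate the comments into a form the machine can understand

- Chinese word segmentation: split sentences into words

Tool: jieba (install from a terminal with pip install jieba -i https://pypi.douban.com/simple/)

jieba supports three segmentation modes:

(1) Precise mode: tries to cut the sentence as accurately as possible; suitable for text analysis (the default mode)

(2) Full mode: scans out every word that can be formed from the sentence; very fast, but cannot resolve ambiguity

(3) Search engine mode: on top of precise mode, splits long words again to improve recall; suitable for search-engine tokenization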

import jieba

text = "我来到北京清华大学"
# cut_all=True selects full mode
seg_list = jieba.cut(text, cut_all=True)
# The printed label "全模式" means "full mode"
print("全模式:", "/ ".join(seg_list))

Output:

全模式: 我/ 来到/ 北京/ 清华/ 清华大学/ 华大/ 大学
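The overlapping words in the full-mode output (清华, 清华大学, 华大, 大学) appear because every dictionary word found at every position is emitted. The sketch below imitates that idea with a tiny hand-written word set. `TOY_DICT` and `full_mode_scan` are illustrative names that are not part of jieba, and jieba's real implementation (a prefix dictionary plus a word graph) is more involved.

```python
# Toy illustration of "full mode": emit every dictionary word found in the text.
# TOY_DICT is a hypothetical mini-dictionary, not jieba's real one.
TOY_DICT = {"我", "来到", "北京", "清华", "清华大学", "华大", "大学"}
MAX_WORD_LEN = max(len(w) for w in TOY_DICT)


def full_mode_scan(text, dictionary=TOY_DICT, max_len=MAX_WORD_LEN):
    """Return every substring of `text` that is a dictionary word, in scan order."""
    words = []
    for start in range(len(text)):
        # Try all candidate lengths at this start position, shortest first.
        for end in range(start + 1, min(start + max_len, len(text)) + 1):
            candidate = text[start:end]
            if candidate in dictionary:
                words.append(candidate)
    return words


print("/ ".join(full_mode_scan("我来到北京清华大学")))
```

With this toy dictionary the scan reproduces the seven words shown in the output above. Precise mode would instead have to choose one non-overlapping path through these candidates.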

- Keyword extraction with a custom dictionary: results are poor when the dictionary covers too few words.

import jieba
import jieba.analyse

# Load the custom dictionary
jieba.load_userdict('./mydict.txt')
text = "故宫的著名景点包括乾清宫、太和殿和午门等。其中乾清宫非常精美,午门是紫禁城的正门,午门居中向阳。"
seg_list = jieba.cut(text, cut_all=False)

# Extract the top 5 keywords
tags = jieba.analyse.extract_tags(text, topK=5)
print("关键词:")
print(" ".join(tags))

# Remove stop words and join the remaining tokens back together
stopwords = ['的', '包括', '等', '是']
final = ''
for seg in seg_list:
    if seg not in stopwords:
        final += seg
print(final)

Output:

关键词:

午门 乾清宫 著名景点 太和殿 向阳

故宫著名景点乾清宫、太和殿和午门。其中乾清宫非常精美,午门紫禁城正门,午门居中向阳。

使用TF-IDF提取关键词优点如下:

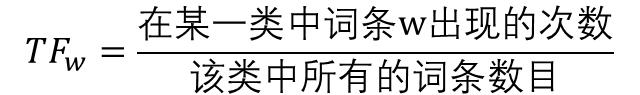

- 不考虑停用词(就是没什么意义的词),找出一句话中出现次数最多的单词,来代表这句话,这个就叫做词频(TF – Term Frequency),相应的权重值就会增高

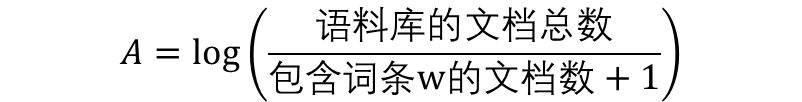

- 如果一个词在所有句子中都出现过,那么这个词就不能代表某句话,这个就叫做逆文本频率(IDF – Inverse Document Frequency)相应的权重值就会降低

- TF-IDF = TF * IDF

- TF公式:

- IDF公式:
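One widespread textbook form of the two formulas is given below as an assumption. Here $N$ is the total number of documents and $\mathrm{df}(t)$ is the number of documents containing the word $t$; both symbols are introduced only for this sketch. Libraries differ in the logarithm base, the smoothing term, and normalization: gensim's `TfidfModel`, for example, by default uses the raw count as TF, a base-2 logarithm without the $+1$, and then scales each vector to unit length.

```latex
\mathrm{TF}(t, d) = \frac{\text{number of times } t \text{ occurs in } d}{\text{total number of words in } d}
\qquad
\mathrm{IDF}(t) = \log \frac{N}{1 + \mathrm{df}(t)}
\qquad
\text{TF-IDF}(t, d) = \mathrm{TF}(t, d) \times \mathrm{IDF}(t)
```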

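2: Use an algorithm the machine can run to loop over the comments and compare each one with all the others for similarity (TF-IDF)

3: Pick out the similar comments

- Build a bag-of-words model: think of it as a bag holding every word; each word and punctuation mark is replaced by a number

- Use the bag-of-words model to build a corpus: represent every sentence with the bag of words, as a list of tuples that pair each word's number with how many times that word occurs

- Turn each comment into a word vector: take the counts to form a vector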
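The three steps above can be sketched in plain Python without any library. The comments are assumed to be segmented already, the tokens are made up, and `build_dictionary`, `doc2bow`, and `to_dense` are illustrative helper names. gensim's `Dictionary.doc2bow` plays the same role as `doc2bow` here but is a different implementation and may assign ids in a different order.

```python
from collections import Counter


def build_dictionary(docs):
    """Bag of words: give every distinct token an integer id, in first-seen order."""
    token2id = {}
    for doc in docs:
        for token in doc:
            token2id.setdefault(token, len(token2id))
    return token2id


def doc2bow(doc, token2id):
    """Corpus entry: sorted (token_id, count) tuples; unknown tokens are dropped."""
    counts = Counter(token2id[t] for t in doc if t in token2id)
    return sorted(counts.items())


def to_dense(bow, size):
    """Word vector: one slot per dictionary entry, holding the token count."""
    vec = [0] * size
    for token_id, count in bow:
        vec[token_id] = count
    return vec


# Hypothetical, already-segmented comments.
docs = [["房间", "很大", ",", "不错"], ["早餐", "不错", ",", "不错"]]
token2id = build_dictionary(docs)
corpus = [doc2bow(doc, token2id) for doc in docs]
vectors = [to_dense(bow, len(token2id)) for bow in corpus]

print(token2id)
print(corpus)
print(vectors)
```

The sparse tuple form stores only the words that occur, which is why it is preferred for large vocabularies; the dense vector is what most classifiers expect as input.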

Example: provide a method that, when the user enters a sentence, returns the 5 most similar sentences

import csv

import jieba
from gensim import corpora, models, similarities

limitLine = 10  # number of comments to read
count = 0
all_doc_list = []
with open("C:/Users/scq/Desktop/pycharmprodect/机器学习code/dataSet/ChnSentiCorp_htl_all.csv", 'r', encoding='UTF-8') as f:
    reader = csv.reader(f)
    for row in reader:
        if count == 0:
            # Skip the first row (the CSV header)
            count += 1
            continue
        if count > limitLine:
            break
        # Segment the comment text (second column) in precise mode
        comment = list(jieba.cut(row[1], cut_all=False))
        all_doc_list.append(comment)
        count += 1
# print(all_doc_list)

# ---- Build the corpus ----
# Build the bag of words: every distinct token gets an integer id
dictionary = corpora.Dictionary(all_doc_list)
print(dictionary.keys())
print(dictionary.token2id)
# Build the corpus: each document becomes a list of (token id, count) tuples
corpus = [dictionary.doc2bow(doc) for doc in all_doc_list]
print(corpus)
# Convert the test document the same way
doc_test = "商务大床房,房间很大,床有5M宽,整体感觉经济实惠不错!"
dic_test_list = [word for word in jieba.cut(doc_test)]
doc_test_vec = dictionary.doc2bow(dic_test_list)
print("doc_test_vec: ", doc_test_vec)

# ---- Similarity analysis ----
tfidf = models.TfidfModel(corpus)
# TF-IDF weights of every corpus document (evaluated lazily, so only the object's repr is printed)
print("tf_idf 值: ", tfidf[corpus])
# Build a similarity index over the TF-IDF vectors of the corpus documents
index = similarities.SparseMatrixSimilarity(tfidf[corpus], num_features=len(dictionary.keys()))
print("index: ", index)
# Convert the test document to TF-IDF and query the index: one similarity score per corpus document
sim = index[tfidf[doc_test_vec]]
print("sim:", sim)
# Sort (document index, score) pairs by descending score
sim_sorted = sorted(enumerate(sim), key=lambda item: -item[1])
print(sim_sorted)

Output:

[0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127, 128, 129, 130, 131, 132, 133, 134, 135, 136, 137, 138, 139, 140, 141, 142, 143, 144, 145, 146, 147, 148, 149, 150, 151, 152, 153, 154, 155, 156, 157, 158, 159, 160, 161, 162, 163, 164, 165, 166, 167, 168, 169, 170, 171, 172, 173, 174, 175, 176, 177, 178, 179, 180, 181, 182, 183, 184, 185, 186, 187, 188, 189, 190, 191, 192, 193, 194, 195, 196, 197, 198, 199, 200, 201, 202, 203, 204, 205, 206, 207, 208, 209, 210, 211, 212, 213, 214, 215, 216, 217, 218, 219, 220, 221, 222, 223, 224, 225, 226, 227, 228, 229, 230, 231, 232, 233, 234, 235, 236, 237, 238, 239, 240, 241, 242, 243, 244, 245, 246, 247, 248, 249, 250, 251, 252, 253, 254, 255, 256, 257, 258, 259, 260, 261, 262, 263, 264, 265, 266, 267, 268, 269, 270, 271, 272, 273, 274, 275, 276, 277, 278, 279, 280, 281, 282, 283, 284, 285, 286, 287, 288, 289, 290, 291, 292, 293, 294, 295, 296, 297, 298, 299, 300, 301, 302, 303, 304, 305, 306, 307, 308, 309, 310, 311, 312, 313, 314, 315, 316, 317, 318, 319, 320, 321, 322]

{'"': 0, ',': 1, '.': 2, '不': 3, '会': 4, '但是': 5, '公交': 6, '公路': 7, '别的': 8, '如果': 9, '对': 10, '川沙': 11, '建议': 12, '房间': 13, '指示': 14, '是': 15, '用': 16, '的话': 17, '蔡陆线': 18, '距离': 19, '路线': 20, '较为简单': 21, '较近': 22, '非常': 23, '麻烦': 24, '!': 25, '2M': 26, '不错': 27, '商务': 28, '大床': 29, '实惠': 30, '宽': 31, '床有': 32, '很大': 33, '感觉': 34, '房': 35, '整体': 36, '经济': 37, ',': 38, '。': 39, '一下': 40, '不加': 41, '也': 42, '了': 43, '人': 44, '去': 45, '多少': 46, '太': 47, '好': 48, '差': 49, '应该': 50, '很': 51, '无论': 52, '早餐': 53, '本身': 54, '的': 55, '这个': 56, '那边': 57, '酒店': 58, '重视': 59, '问题': 60, '食品': 61, '-_-': 62, '|': 63, '~': 64, '一大': 65, '上': 66, '不到': 67, '不大好': 68, '京味': 69, '介绍': 70, '优势': 71, '但': 72, '低价位': 73, '内': 74, '出发': 75, '划算': 76, '到': 77, '加上': 78, '北京': 79, '北海': 80, '十分钟': 81, '可以': 82, '同胞': 83, '呢': 84, '呵': 85, '因素': 86, '在': 87, '好足': 88, '好近': 89, '安静': 90, '宾馆': 91, '小': 92, '就': 93, '差不多': 94, '很多': 95, '很小': 96, '总之': 97, '所值': 98, '找': 99, '挺': 100, '推荐': 101, '故居': 102, '整洁': 103, '无超': 104, '是从': 105, '暖气': 106, '朋友': 107, '梅兰芳': 108, '步行': 109, '比较': 110, '消费': 111, '热心': 112, '特色小吃': 113, '环境': 114, '确实': 115, '等等': 116, '给': 117, '胡同': 118, '自助游': 119, '节约': 120, '街道': 121, '设施': 122, '跟': 123, '还好': 124, '还是': 125, '还有': 126, '附近': 127, ';': 128, '5': 129, 'CBD': 130, '中心': 131, '为什么': 132, '勉强': 133, '卫生间': 134, '周围': 135, '店铺': 136, '星': 137, '有点': 138, '没什么': 139, '没有': 140, '电吹风': 141, '知道': 142, '说': 143, '他': 144, '价格': 145, '印象': 146, '客人': 147, '希望': 148, '总的来说': 149, '我': 150, '留些': 151, '算': 152, '装修': 153, '赶快': 154, '还': 155, '这样': 156, '配': 157, '上次': 158, '些': 159, '价格比': 160, '免费': 161, '前台': 162, '升级': 163, '地毯': 164, '好些': 165, '感谢': 166, '房子': 167, '新': 168, '早': 169, '服务员': 170, '比': 171, '要': 172, '这次': 173, '中': 174, '值得': 175, '同等': 176, '档次': 177, '!': 178, '-': 179, '10': 180, '12': 181, '21': 182, '24': 183, '30': 184, '68': 185, '8': 186, '9': 187, '“': 188, '”': 189, '、': 190, '一个': 191, '一层': 192, '一楼': 193, '一段距离': 194, 
'一系列': 195, '一般': 196, '不如': 197, '与': 198, '丽晶': 199, '也许': 200, '人多': 201, '从': 202, '以后': 203, '会议': 204, '住': 205, '像': 206, '元': 207, '元多': 208, '入住': 209, '全在': 210, '公司': 211, '几家': 212, '几次': 213, '分昆区': 214, '包头': 215, '包百': 216, '包百等': 217, '可能': 218, '吃': 219, '合适': 220, '吧': 221, '吵': 222, '味道': 223, '品种': 224, '哈': 225, '商圈': 226, '商场': 227, '因为': 228, '团体': 229, '地处': 230, '基本': 231, '外有': 232, '大': 233, '大小': 234, '实在': 235, '小卖部': 236, '小时': 237, '就是': 238, '就算': 239, '尽量': 240, '广场': 241, '广式': 242, '开会': 243, '总体': 244, '惊人': 245, '房间内': 246, '打车': 247, '提供': 248, '提升': 249, '插': 250, '敲': 251, '日常用品': 252, '昆区': 253, '晚上': 254, '晚茶': 255, '最': 256, '有': 257, '有人': 258, '有时': 259, '服务态度': 260, '来': 261, '楼道': 262, '次之': 263, '正宗': 264, '正赶上': 265, '每人': 266, '水果刀': 267, '没关系': 268, '油漆味': 269, '淡淡的': 270, '点心': 271, '点有': 272, '王府井': 273, '理论': 274, '略': 275, '略少': 276, '百货': 277, '的确': 278, '相对来说': 279, '离': 280, '科丽珑': 281, '等': 282, '管理': 283, '繁华': 284, '线板': 285, '细心': 286, '网速': 287, '自助餐': 288, '菜': 289, '菜品': 290, '营业': 291, '西贝': 292, '西贝筱面': 293, '让': 294, '设备齐全': 295, '超市': 296, '车费': 297, '较': 298, '边上': 299, '过': 300, '过去': 301, '还会': 302, '这里': 303, '里略': 304, '量': 305, '银河': 306, '错门': 307, '青山区': 308, '饭馆': 309, ':': 310, '1': 311, '2': 312, '3': 313, '不是': 314, '交通': 315, '只是': 316, '和': 317, '多': 318, '方便': 319, '有些': 320, '装潢': 321, '饭店': 322}

[[(0, 2), (1, 3), (2, 3), (3, 1), (4, 1), (5, 1), (6, 1), (7, 1), (8, 1), (9, 1), (10, 1), (11, 1), (12, 1), (13, 1), (14, 1), (15, 1), (16, 1), (17, 1), (18, 1), (19, 1), (20, 1), (21, 1), (22, 1), (23, 1), (24, 1)], [(13, 1), (25, 1), (26, 1), (27, 1), (28, 1), (29, 1), (30, 1), (31, 1), (32, 1), (33, 1), (34, 1), (35, 1), (36, 1), (37, 1), (38, 3)], [(13, 1), (38, 2), (39, 3), (40, 1), (41, 1), (42, 1), (43, 1), (44, 1), (45, 1), (46, 1), (47, 1), (48, 1), (49, 1), (50, 1), (51, 1), (52, 1), (53, 1), (54, 1), (55, 1), (56, 1), (57, 1), (58, 1), (59, 1), (60, 1), (61, 1)], [(13, 1), (19, 1), (27, 2), (38, 14), (39, 5), (55, 3), (62, 1), (63, 2), (64, 3), (65, 1), (66, 1), (67, 1), (68, 1), (69, 1), (70, 1), (71, 1), (72, 2), (73, 1), (74, 1), (75, 1), (76, 1), (77, 1), (78, 1), (79, 1), (80, 1), (81, 1), (82, 1), (83, 1), (84, 1), (85, 1), (86, 1), (87, 2), (88, 1), (89, 1), (90, 1), (91, 3), (92, 4), (93, 3), (94, 1), (95, 2), (96, 1), (97, 1), (98, 1), (99, 1), (100, 1), (101, 1), (102, 1), (103, 1), (104, 1), (105, 1), (106, 1), (107, 1), (108, 1), (109, 1), (110, 1), (111, 1), (112, 1), (113, 1), (114, 1), (115, 1), (116, 1), (117, 1), (118, 2), (119, 1), (120, 1), (121, 1), (122, 1), (123, 1), (124, 1), (125, 1), (126, 1), (127, 1), (128, 1)], [(1, 2), (2, 1), (3, 1), (129, 1), (130, 1), (131, 1), (132, 1), (133, 1), (134, 1), (135, 1), (136, 1), (137, 1), (138, 1), (139, 1), (140, 1), (141, 1), (142, 1), (143, 1)], [(38, 3), (48, 1), (55, 4), (58, 1), (82, 1), (117, 1), (144, 1), (145, 1), (146, 1), (147, 1), (148, 1), (149, 1), (150, 1), (151, 1), (152, 1), (153, 1), (154, 1), (155, 1), (156, 2), (157, 1)], [(15, 1), (27, 1), (38, 3), (39, 4), (43, 1), (44, 1), (45, 1), (53, 1), (55, 4), (58, 1), (95, 1), (110, 1), (124, 1), (158, 1), (159, 1), (160, 1), (161, 1), (162, 1), (163, 1), (164, 1), (165, 1), (166, 1), (167, 1), (168, 1), (169, 1), (170, 1), (171, 1), (172, 1), (173, 1)], [(15, 1), (27, 1), (38, 1), (50, 1), (55, 1), (58, 1), (87, 1), (101, 1), 
(174, 1), (175, 1), (176, 1), (177, 1), (178, 1)], [(13, 1), (15, 5), (34, 3), (38, 25), (39, 22), (42, 2), (43, 2), (45, 1), (48, 3), (51, 4), (55, 9), (58, 10), (66, 1), (77, 1), (82, 1), (87, 3), (92, 1), (93, 1), (124, 1), (125, 1), (126, 1), (134, 1), (135, 1), (140, 1), (143, 1), (148, 1), (156, 1), (161, 2), (165, 1), (168, 2), (171, 1), (173, 1), (179, 1), (180, 1), (181, 1), (182, 1), (183, 2), (184, 1), (185, 1), (186, 1), (187, 1), (188, 1), (189, 1), (190, 4), (191, 1), (192, 1), (193, 1), (194, 1), (195, 1), (196, 1), (197, 1), (198, 1), (199, 2), (200, 1), (201, 1), (202, 2), (203, 2), (204, 1), (205, 3), (206, 1), (207, 2), (208, 2), (209, 1), (210, 1), (211, 1), (212, 2), (213, 2), (214, 1), (215, 2), (216, 2), (217, 1), (218, 1), (219, 3), (220, 1), (221, 5), (222, 1), (223, 1), (224, 1), (225, 1), (226, 2), (227, 1), (228, 1), (229, 1), (230, 1), (231, 1), (232, 1), (233, 2), (234, 1), (235, 1), (236, 1), (237, 1), (238, 1), (239, 1), (240, 1), (241, 2), (242, 1), (243, 1), (244, 1), (245, 1), (246, 1), (247, 3), (248, 1), (249, 1), (250, 1), (251, 1), (252, 1), (253, 2), (254, 2), (255, 1), (256, 1), (257, 7), (258, 1), (259, 1), (260, 1), (261, 1), (262, 1), (263, 1), (264, 1), (265, 1), (266, 1), (267, 1), (268, 1), (269, 1), (270, 1), (271, 1), (272, 1), (273, 1), (274, 1), (275, 1), (276, 1), (277, 1), (278, 1), (279, 2), (280, 1), (281, 1), (282, 2), (283, 1), (284, 1), (285, 1), (286, 1), (287, 1), (288, 1), (289, 1), (290, 1), (291, 1), (292, 2), (293, 1), (294, 1), (295, 1), (296, 2), (297, 1), (298, 1), (299, 1), (300, 1), (301, 1), (302, 1), (303, 2), (304, 1), (305, 1), (306, 2), (307, 1), (308, 4), (309, 1), (310, 2)], [(13, 1), (27, 1), (38, 4), (39, 6), (53, 1), (55, 1), (58, 1), (82, 1), (92, 1), (95, 1), (110, 3), (122, 1), (135, 1), (155, 2), (168, 1), (224, 1), (269, 1), (311, 1), (312, 1), (313, 1), (314, 1), (315, 1), (316, 2), (317, 1), (318, 1), (319, 1), (320, 1), (321, 1), (322, 1)]]

doc_test_vec: [(13, 1), (25, 1), (27, 1), (28, 1), (29, 1), (30, 1), (31, 1), (32, 1), (33, 1), (34, 1), (35, 1), (36, 1), (37, 1), (38, 3)]

tf_idf 值: <gensim.interfaces.TransformedCorpus object at 0x000001E2E7BC4408>

index: <gensim.similarities.docsim.SparseMatrixSimilarity object at 0x000001E2E7BC4448>

sim: [0.00265907 0.9563599 0.00805737 0.01925601 0. 0.00658716

0.0127648 0.01414677 0.03330713 0.01512228]

[(1, 0.9563599), (8, 0.033307128), (3, 0.019256009), (9, 0.01512228), (7, 0.014146771), (6, 0.012764804), (2, 0.008057371), (5, 0.006587164), (0, 0.002659067), (4, 0.0)]

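As the result shows, for the sentence "商务大床房,房间很大,床有5M宽,整体感觉经济实惠不错!", the indices with the highest scores identify the most similar comments: document 1 scores 0.956, while every other document scores below 0.04. The score is not exactly 1 because the token 5M is not in the dictionary (document 1 contains 2M instead), so doc2bow drops it.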
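What `TfidfModel` and `SparseMatrixSimilarity` compute can be approximated in a few lines of plain Python. The sketch below assumes raw counts as TF, a base-2 logarithm for IDF, unit-length vectors, and cosine similarity. It is intended to mirror gensim's default settings but is not its actual implementation; `idf_table`, `tfidf_vector`, `most_similar`, and the toy tokens are illustrative.

```python
import math
from collections import Counter


def idf_table(docs):
    """Base-2 inverse document frequency for every token seen in `docs`."""
    n_docs = len(docs)
    doc_freq = Counter(token for doc in docs for token in set(doc))
    return {token: math.log2(n_docs / df) for token, df in doc_freq.items()}


def tfidf_vector(doc, idf):
    """Map token -> count * idf, scaled to unit length; unknown tokens are ignored."""
    weights = {t: c * idf[t] for t, c in Counter(doc).items() if t in idf}
    norm = math.sqrt(sum(w * w for w in weights.values()))
    if norm == 0.0:
        return {}
    return {t: w / norm for t, w in weights.items()}


def most_similar(query, docs, k=5):
    """Return up to k (doc_index, cosine_similarity) pairs, best match first."""
    idf = idf_table(docs)
    doc_vecs = [tfidf_vector(doc, idf) for doc in docs]
    q_vec = tfidf_vector(query, idf)
    # Both vectors have unit length, so the dot product is the cosine similarity.
    scores = [sum(w * d_vec.get(t, 0.0) for t, w in q_vec.items()) for d_vec in doc_vecs]
    ranked = sorted(enumerate(scores), key=lambda item: -item[1])
    return ranked[:k]


# Hypothetical, already-segmented comments.
docs = [
    ["房间", "很大", "不错"],
    ["早餐", "很", "差"],
    ["房间", "小", "早餐", "不错"],
]
print(most_similar(["房间", "很大", "实惠"], docs, k=2))
```

Calling `most_similar(query, docs, k=5)` is one way to return the five closest sentences that the example set out to provide: the indices in the result can be used to look up the original comments.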

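Sentiment Classification

Sentiment classification means dividing text into two or more categories, such as positive or negative, according to the meaning and sentiment it expresses. It is a classification of the author's emotional leaning, opinion, or attitude.

Preparation needed: a labeled dataset

Once we have accumulated a certain amount of data, the simplest idea is this: whichever known sample a new sample most resembles, we assign it to that sample's class.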
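This "most similar known sample" idea is essentially k-nearest neighbours (KNN). A minimal sketch, assuming the comments have already been turned into count vectors; the vocabulary, vectors, labels, and the helper names `cosine` and `knn_predict` are all made up for illustration.

```python
import math
from collections import Counter


def cosine(a, b):
    """Cosine similarity between two equal-length count vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def knn_predict(query, samples, labels, k=3):
    """Majority label among the k known samples most similar to `query`."""
    ranked = sorted(range(len(samples)), key=lambda i: -cosine(query, samples[i]))
    votes = Counter(labels[i] for i in ranked[:k])
    return votes.most_common(1)[0][0]


# Hypothetical count vectors over the vocabulary ["不错", "干净", "差", "吵"].
samples = [[2, 1, 0, 0], [1, 1, 0, 0], [1, 0, 0, 0], [0, 0, 2, 1], [0, 0, 1, 1]]
labels = ["1", "1", "1", "0", "0"]  # "1" = positive, "0" = negative

print(knn_predict([1, 2, 0, 0], samples, labels, k=3))
print(knn_predict([0, 0, 1, 2], samples, labels, k=3))
```

KNN needs no training step, but every prediction compares the query with all stored samples, so it becomes slow as the dataset grows.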

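Methods: logistic regression, KNN

- Logistic regression: a classification algorithm

As long as we feed in data the computer can understand, it learns from that data automatically. Once the model is built, it identifies the class of any new data we give it.

So the key is to convert the data into a form the algorithm can read, "feed" it to the algorithm, and then wait for the final classification result.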
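To make the "feed data, get a model, classify new data" cycle concrete, here is a minimal sketch of logistic regression trained with batch gradient descent in plain Python. It is not how scikit-learn's `LogisticRegression` is implemented (that class adds regularization and uses other solvers); the vocabulary, vectors, labels, learning rate, and helper names are assumptions chosen for illustration.

```python
import math


def sigmoid(z):
    """Logistic function; maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X, y, lr=0.5, epochs=500):
    """Fit weights and bias with batch gradient descent on the log loss."""
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            error = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += error * xj
            grad_b += error
        w = [wj - lr * gj / len(X) for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / len(X)
    return w, b


def predict_proba(x, w, b):
    """Probability that x belongs to class 1 (positive)."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Sentences already turned into count vectors over the
# hypothetical vocabulary ["不错", "推荐", "差", "吵"].
X = [[1, 1, 0, 0], [2, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 1], [0, 0, 2, 0], [0, 0, 0, 1]]
y = [1, 1, 1, 0, 0, 0]  # 1 = positive, 0 = negative

# Feed the data to the algorithm to obtain a model.
w, b = train_logistic(X, y)

# Use the model on new vectors.
p_pos = predict_proba([1, 1, 0, 0], w, b)
p_neg = predict_proba([0, 0, 1, 1], w, b)
print(round(p_pos, 3), round(p_neg, 3))
```

Words that appear in positive samples end up with positive weights and words from negative samples with negative weights, so the predicted class is the one whose probability exceeds 0.5.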

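Example: sentiment classification of hotel reviews using NLP and logistic regression

Analysis steps:

- Vectorize the sentences (that is, translate them into a form the computer can understand)

- Feed the data to the algorithm to produce a model

- Use new values to predict the class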

import csv

import jieba
from gensim import corpora
from sklearn.linear_model import LogisticRegression

all_doc_list = []
label_list = []
with open("C:/Users/scq/Desktop/pycharmprodect/机器学习code/dataSet/ChnSentiCorp_htl_all.csv", 'r', encoding='UTF-8') as f:
    reader = csv.reader(f)
    next(reader, None)  # skip the header row so it is not treated as a sample
    for row in reader:
        label = row[0]
        comment = list(jieba.cut(row[1], cut_all=False))
        label_list.append(label)
        all_doc_list.append(comment)
# print(all_doc_list)

# ---- Build the corpus ----
# Build the bag of words
dictionary = corpora.Dictionary(all_doc_list)
# print(dictionary.keys())
# print(dictionary.token2id)
# Build the corpus
corpus = [dictionary.doc2bow(doc) for doc in all_doc_list]
# print(corpus)

# Vectorize the text: one count per dictionary entry
doc_vec = []
for bow in corpus:
    vec = [0 for x in range(len(dictionary.keys()))]
    for token_id, token_count in bow:
        vec[token_id] += token_count
    doc_vec.append(vec)
print(doc_vec)

# The labels in this dataset are binary, so no multi-class option is set
# (the multi_class parameter is deprecated in recent scikit-learn releases)
log_reg = LogisticRegression(solver='sag')
log_reg.fit(doc_vec, label_list)
print("w1", log_reg.coef_)
print("w0", log_reg.intercept_)

# Predict
test = "不错,在同等档次酒店中应该是值得推荐的!"
test_tokens = [word for word in jieba.cut(test)]
test_bow = dictionary.doc2bow(test_tokens)
test_vec = [0 for x in range(len(dictionary.keys()))]
for token_id, token_count in test_bow:
    test_vec[token_id] += token_count
pred_list = [test_vec]
# Class probabilities: if the first probability in the first row is the largest, the prediction is the first class (y=0)
y_proba = log_reg.predict_proba(pred_list)
# Predicted class label
y_hat = log_reg.predict(pred_list)
print(y_proba)
print(y_hat)

Source: CSDN

Author: 哦?

Link: https://blog.csdn.net/qq_44241861/article/details/104101569