1 Logistic Regression¶

1.1 The data

我们将建立一个逻辑回归模型来预测一个学生是否被大学录取。假设你是一个大学系的管理员,你想根据两次考试的结果来决定每个申请人的录取机会。你有以前的申请人的历史数据,你可以用它作为逻辑回归的训练集。对于每一个培训例子,你有两个考试的申请人的分数和录取决定。为了做到这一点,我们将建立一个分类模型,根据考试成绩估计入学概率。

拿到数据,首先看一下数据的样子

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import os

path = "C:/Users/Amber/Documents/唐宇迪-机器学习课程资料/机器学习算法配套案例实战/梯度下降/data/LogiReg_data.txt"

pdData = pd.read_csv(path, header=None, names=['Exam 1', 'Exam 2', 'Admitted'])

print(pdData.head())

Exam 1 Exam 2 Admitted

0 34.623660 78.024693 0

1 30.286711 43.894998 0

2 35.847409 72.902198 0

3 60.182599 86.308552 1

4 79.032736 75.344376 1

查看一下数据的维度

print(pdData.shape)

(100, 3)

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import os

path = "C:/Users/Amber/Documents/唐宇迪-机器学习课程资料/机器学习算法配套案例实战/梯度下降/data/LogiReg_data.txt"

pdData = pd.read_csv(path, header=None, names=['Exam 1', 'Exam 2', 'Admitted'])

#print(pdData.head())

#print(pdData.shape)

positive = pdData[pdData['Admitted'] == 1] # returns the subset of rows such Admitted = 1, i.e. the set of *positive* examples

negative = pdData[pdData['Admitted'] == 0] # returns the subset of rows such Admitted = 0, i.e. the set of *negative* examples

fig, ax = plt.subplots(figsize=(10,5)) #指定画图域的长高

ax.scatter(positive['Exam 1'], positive['Exam 2'], s=30, c='b', marker='o', label='Admitted')#正例/负例分别带标签,用不同的颜色画图

ax.scatter(negative['Exam 1'], negative['Exam 2'], s=30, c='r', marker='x', label='Not Admitted')

ax.legend()

ax.set_xlabel('Exam 1 Score')

ax.set_ylabel('Exam 2 Score')

根据散点图,可以看出决策边界,既可以将两类点分开。

1.2 The logistic regression¶

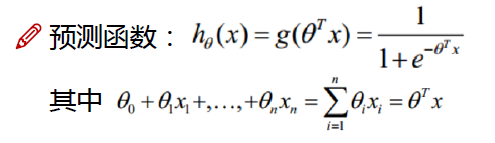

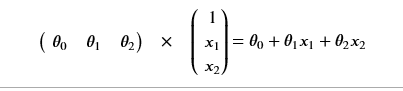

目标:建立分类器(求解出三个参数 θ0 /θ1/θ2 )

设定阈值,根据阈值判断录取结果

1.2.1 要完成的模块¶

-

sigmoid: 映射到概率的函数 -

model: 返回预测结果值 -

cost: 根据参数计算损失 -

gradient: 计算每个参数的梯度方向 -

descent: 进行参数更新 -

accuracy: 计算精度

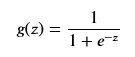

1.2.2 sigmoid 函数¶

def sigmoid(z):

return 1 / (1 + np.exp(-z))

nums = np.arange(-10, 10, step=1) #creates a vector containing 20 equally spaced values from -10 to 10

fig, ax = plt.subplots(figsize=(12,4))

ax.plot(nums, sigmoid(nums), 'r')

1.2.3 Sigmoid¶

- g:R→[0,1]

- g(0)=0.5

- g(−∞)=0

- g(+∞)=1

def model(X, theta): # 定义一个模型,输入为X数据,参数为theta,X于theta进行组合,

#np.dot 矩阵乘法,并且将结果值返回sigmoid函数中

return sigmoid(np.dot(X, theta.T))

model函数,对应到如下的预测函数,g(z)为sigmoid函数

做回归模型的时候,需要插入一列,让那一列的值都等于1,将数值运算转换为矩阵运算。

原来是x1/x2两列,现在最左边插入一列,全为1

pdData.insert(0, 'Ones', 1) # in a try / except structure so as not to return an error if the block si executed several times

# 列名为Ones,指定的数值为1,第0列

# set X (training data) and y (target variable)

orig_data = pdData.as_matrix() # convert the Pandas representation of the data to an array useful for further computations

cols = orig_data.shape[1]

X = orig_data[:,0:cols-1]

y = orig_data[:,cols-1:cols]

# convert to numpy arrays and initalize the parameter array theta

#X = np.matrix(X.values)

#y = np.matrix(data.iloc[:,3:4].values) #np.array(y.values)

theta = np.zeros([1, 3]) # 指定theta参数,虽不关心具体的值是多少,但需要占位,通常用0填充,1行3列,三个theta参数

# 观察5个样本,看设置是否正确

print(X[:5])

[[ 1. 34.62365962 78.02469282]

[ 1. 30.28671077 43.89499752]

[ 1. 35.84740877 72.90219803]

[ 1. 60.18259939 86.3085521 ]

[ 1. 79.03273605 75.34437644]]

print(y[:5])

[[0.]

[0.]

[0.]

[1.]

[1.]]

print(theta)

[[0. 0. 0.]]

print(X.shape,y.shape,theta.shape)

(100, 3) (100, 1) (1, 3)

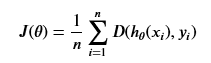

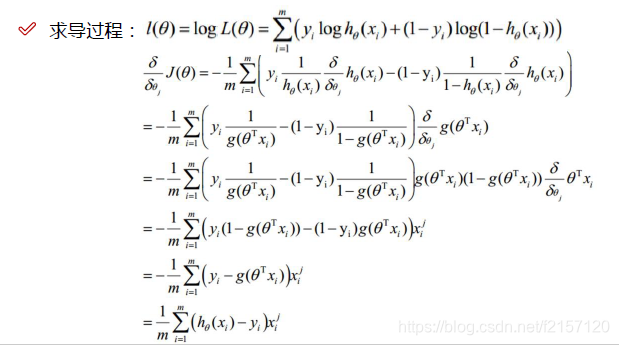

1.2.4 损失函数¶

将对数似然函数取负号

![]()

![]()

求平均损失:

def cost(X, y, theta):

left = np.multiply(-y, np.log(model(X, theta))) # left为损失函数的左半部分

right = np.multiply(1 - y, np.log(1 - model(X, theta))) # right为损失函数的右半部分

return np.sum(left - right) / (len(X)) # 累加和,最后除以样本数

# 验证公式可用,得出初始值

(cost(X, y, theta))

0.6931471805599453

1.2.5 计算梯度

def gradient(X, y, theta):

grad = np.zeros(theta.shape) # 定义梯度,其形状应与theta参数的形状/大小一致,并用0占位

error = (model(X, theta)- y).ravel() # yi为真实值,model(X,theta) 为预测值,二者差值为误差,h_theta(x)即为model(X,theta), .ravel()返回视图/值,修改时会改变原值

for j in range(len(theta.ravel())): #for each parmeter,先对theta_0求偏导,然后对theta_1偏导,依次遍历所有的参数。总长度为theta的尺度

term = np.multiply(error, X[:,j]) # 取第j列,一次对所有的样本进行计算

grad[0, j] = np.sum(term) / len(X) # 每次梯度都是对第j个参数,term 求和,除以X的长度/总样本的个数

return grad #算完theta_0,theta_1,... 所有的方向,返回

1.2.6 Gradient descent¶

比较3中不同梯度下降方法

STOP_ITER = 0 # 按照迭代次数进行优化,次数达到,则迭代停止

STOP_COST = 1 # 按照损失值的大小,进行优化,如果损失值达标,几乎不再变化,则迭代停止

STOP_GRAD = 2 # 如果相邻两次迭代,梯度大小几乎不变,则停止

def stopCriterion(type, value, threshold):

#设定三种不同的停止策略

if type == STOP_ITER: return value > threshold

elif type == STOP_COST: return abs(value[-1]-value[-2]) < threshold

elif type == STOP_GRAD: return np.linalg.norm(value) < threshold

洗牌

import numpy.random

# 做迭代更新时,首先应该对数据进行洗牌

def shuffleData(data):

np.random.shuffle(data) # 数据打乱

cols = data.shape[1]

X = data[:, 0:cols-1] # 打乱后,重新定义X,y

y = data[:, cols-1:]

return X, y

import time

def descent(data, theta, batchSize, stopType, thresh, alpha):

#梯度下降求解,batchSize=1,随机梯度下降,batchSize=N 总的样本数,则为总体批量下降,batchSize = 1~N之间的某一中间值,则为mini-batch梯度下降

# stopType:停止策略

# thresh:与停止策略所对应的阈值

# alpha:学习率

init_time = time.time()

i = 0 # 迭代次数,第0次迭代

k = 0 # batch,第0个batch

X, y = shuffleData(data) # 打乱

grad = np.zeros(theta.shape) # 计算的梯度

costs = [cost(X, y, theta)] # 损失值

while True:

grad = gradient(X[k:k+batchSize], y[k:k+batchSize], theta)

k += batchSize #取batch数量个数据

if k >= n:

k = 0

X, y = shuffleData(data) #重新洗牌

theta = theta - alpha*grad # 参数更新

costs.append(cost(X, y, theta)) # 计算新的损失

i += 1

if stopType == STOP_ITER: value = i

elif stopType == STOP_COST: value = costs

elif stopType == STOP_GRAD: value = grad

if stopCriterion(stopType, value, thresh): break # 如果达到停止条件,则退出,停止循环

return theta, i-1, costs, grad, time.time() - init_time

def runExpe(data, theta, batchSize, stopType, thresh, alpha):

#import pdb; pdb.set_trace();

theta, iter, costs, grad, dur = descent(data, theta, batchSize, stopType, thresh, alpha)

name = "Original" if (data[:,1]>2).sum() > 1 else "Scaled"

name += " data - learning rate: {} - ".format(alpha)

# 根据batchSize取选择梯度下降的方法

if batchSize==n: strDescType = "Gradient"

elif batchSize==1: strDescType = "Stochastic"

else: strDescType = "Mini-batch ({})".format(batchSize)

# 选择停止策略

name += strDescType + " descent - Stop: "

if stopType == STOP_ITER: strStop = "{} iterations".format(thresh)

elif stopType == STOP_COST: strStop = "costs change < {}".format(thresh)

else: strStop = "gradient norm < {}".format(thresh)

name += strStop

# 结果展示,画图

print ("***{}\nTheta: {} - Iter: {} - Last cost: {:03.2f} - Duration: {:03.2f}s".format(

name, theta, iter, costs[-1], dur))

fig, ax = plt.subplots(figsize=(12,4))

ax.plot(np.arange(len(costs)), costs, 'r')

ax.set_xlabel('Iterations')

ax.set_ylabel('Cost')

ax.set_title(name.upper() + ' - Error vs. Iteration')

return theta

1.2.7 不同的停止策略¶

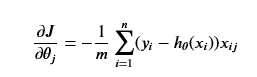

#选择的梯度下降方法是基于所有样本的

n=100

runExpe(orig_data, theta, n, STOP_ITER, thresh=5000, alpha=0.000001)

# n = 100, 为样本的数量

# thresh = 5000,即设置5000次迭代

# alpha = 0.000001 学习率

***Original data - learning rate: 1e-06 - Gradient descent - Stop: 5000 iterations

Theta: [[-0.00027127 0.00705232 0.00376711]] - Iter: 5000 - Last cost: 0.63 - Duration: 2.61s

此时的整体程序:

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import numpy.random

import time

path = "C:/Users/Amber/Documents/唐宇迪-机器学习课程资料/机器学习算法配套案例实战/梯度下降/data/LogiReg_data.txt"

pdData = pd.read_csv(path, header=None, names=['Exam 1', 'Exam 2', 'Admitted'])

#print(pdData.head())

#print(pdData.shape)

positive = pdData[pdData['Admitted'] == 1] # returns the subset of rows such Admitted = 1, i.e. the set of *positive* examples

negative = pdData[pdData['Admitted'] == 0] # returns the subset of rows such Admitted = 0, i.e. the set of *negative* examples

# fig, ax = plt.subplots(figsize=(10,5)) #指定画图域的长高

# ax.scatter(positive['Exam 1'], positive['Exam 2'], s=30, c='b', marker='o', label='Admitted')#正例/负例分别带标签,用不同的颜色画图

# ax.scatter(negative['Exam 1'], negative['Exam 2'], s=30, c='r', marker='x', label='Not Admitted')

# ax.legend()

# ax.set_xlabel('Exam 1 Score')

# ax.set_ylabel('Exam 2 Score')

def sigmoid(z):

return 1 / (1 + np.exp(-z))

# nums = np.arange(-10, 10, step=1) #creates a vector containing 20 equally spaced values from -10 to 10

# fig, ax = plt.subplots(figsize=(12,4))

# ax.plot(nums, sigmoid(nums), 'r')

def model(X, theta):

return sigmoid(np.dot(X, theta.T))

pdData.insert(0, 'Ones', 1) # in a try / except structure so as not to return an error if the block si executed several times

# set X (training data) and y (target variable)

orig_data = pdData.as_matrix() # convert the Pandas representation of the data to an array useful for further computations

cols = orig_data.shape[1]

X = orig_data[:,0:cols-1]

y = orig_data[:,cols-1:cols]

# convert to numpy arrays and initalize the parameter array theta

#X = np.matrix(X.values)

#y = np.matrix(data.iloc[:,3:4].values) #np.array(y.values)

theta = np.zeros([1, 3])

# print(X[:5])

# print(y[:5])

# print(theta)

# print(X.shape,y.shape,theta.shape)

def cost(X, y, theta):

left = np.multiply(-y, np.log(model(X, theta)))

right = np.multiply(1 - y, np.log(1 - model(X, theta)))

return np.sum(left - right) / (len(X))

# print(cost(X, y, theta))

def gradient(X, y, theta):

grad = np.zeros(theta.shape)

error = (model(X, theta)- y).ravel()

for j in range(len(theta.ravel())): #for each parmeter

term = np.multiply(error, X[:,j])

grad[0, j] = np.sum(term) / len(X)

return grad

STOP_ITER = 0

STOP_COST = 1

STOP_GRAD = 2

def stopCriterion(type, value, threshold):

#设定三种不同的停止策略

if type == STOP_ITER: return value > threshold

elif type == STOP_COST: return abs(value[-1]-value[-2]) < threshold

elif type == STOP_GRAD: return np.linalg.norm(value) < threshold

#洗牌

def shuffleData(data):

np.random.shuffle(data)

cols = data.shape[1]

X = data[:, 0:cols-1]

y = data[:, cols-1:]

return X, y

def descent(data, theta, batchSize, stopType, thresh, alpha):

#梯度下降求解

init_time = time.time()

i = 0 # 迭代次数

k = 0 # batch

X, y = shuffleData(data)

grad = np.zeros(theta.shape) # 计算的梯度

costs = [cost(X, y, theta)] # 损失值

while True:

grad = gradient(X[k:k+batchSize], y[k:k+batchSize], theta)

k += batchSize #取batch数量个数据

if k >= n:

k = 0

X, y = shuffleData(data) #重新洗牌

theta = theta - alpha*grad # 参数更新

costs.append(cost(X, y, theta)) # 计算新的损失

i += 1

if stopType == STOP_ITER: value = i

elif stopType == STOP_COST: value = costs

elif stopType == STOP_GRAD: value = grad

if stopCriterion(stopType, value, thresh): break

return theta, i-1, costs, grad, time.time() - init_time

def runExpe(data, theta, batchSize, stopType, thresh, alpha):

#import pdb; pdb.set_trace();

theta, iter, costs, grad, dur = descent(data, theta, batchSize, stopType, thresh, alpha)

name = "Original" if (data[:,1]>2).sum() > 1 else "Scaled"

name += " data - learning rate: {} - ".format(alpha)

if batchSize==n: strDescType = "Gradient"

elif batchSize==1: strDescType = "Stochastic"

else: strDescType = "Mini-batch ({})".format(batchSize)

name += strDescType + " descent - Stop: "

if stopType == STOP_ITER: strStop = "{} iterations".format(thresh)

elif stopType == STOP_COST: strStop = "costs change < {}".format(thresh)

else: strStop = "gradient norm < {}".format(thresh)

name += strStop

print ("***{}\nTheta: {} - Iter: {} - Last cost: {:03.2f} - Duration: {:03.2f}s".format(

name, theta, iter, costs[-1], dur))

fig, ax = plt.subplots(figsize=(12,4))

ax.plot(np.arange(len(costs)), costs, 'r')

ax.set_xlabel('Iterations')

ax.set_ylabel('Cost')

ax.set_title(name.upper() + ' - Error vs. Iteration')

return theta

#选择的梯度下降方法是基于整体的,5000次迭代

n=100

runExpe(orig_data, theta, n, STOP_ITER, thresh=5000, alpha=0.000001)

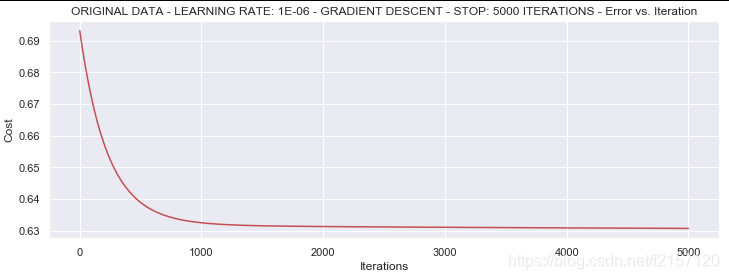

1.2.7.2 根据损失值停止¶

设定损失值的阈值 1E-6, 差不多需要110 000次迭代

# 不指定迭代次数,当两次迭代差值小于thresh时停止迭代

runExpe(orig_data, theta, n, STOP_COST, thresh=0.000001, alpha=0.001)

***Original data - learning rate: 0.001 - Gradient descent - Stop: costs change < 1e-06

Theta: [[-5.13364014 0.04771429 0.04072397]] - Iter: 109901 - Last cost: 0.38 - Duration: 63.58s

发现,新的算法得到的Cost值0.38,优于之前的0.63,迭代次数接近11万次,花了63秒

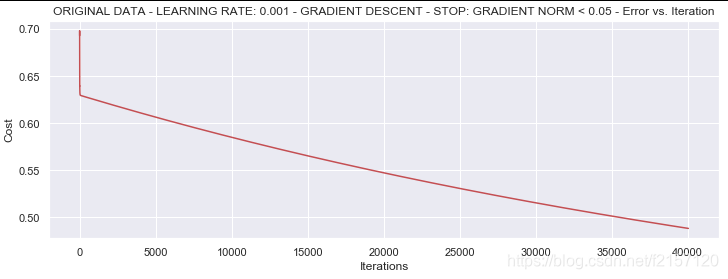

1.2.7.3 根据梯度变化停止¶

设定阈值 0.05,差不多需要40 000次迭代

runExpe(orig_data, theta, n, STOP_GRAD, thresh=0.05, alpha=0.001)

***Original data - learning rate: 0.001 - Gradient descent - Stop: gradient norm < 0.05

Theta: [[-2.37033409 0.02721692 0.01899456]] - Iter: 40045 - Last cost: 0.49 - Duration: 19.41s

整体程序

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import numpy.random

import time

path = "C:/Users/Amber/Documents/唐宇迪-机器学习课程资料/机器学习算法配套案例实战/梯度下降/data/LogiReg_data.txt"

pdData = pd.read_csv(path, header=None, names=['Exam 1', 'Exam 2', 'Admitted'])

#print(pdData.head())

#print(pdData.shape)

positive = pdData[pdData['Admitted'] == 1] # returns the subset of rows such Admitted = 1, i.e. the set of *positive* examples

negative = pdData[pdData['Admitted'] == 0] # returns the subset of rows such Admitted = 0, i.e. the set of *negative* examples

# fig, ax = plt.subplots(figsize=(10,5)) #指定画图域的长高

# ax.scatter(positive['Exam 1'], positive['Exam 2'], s=30, c='b', marker='o', label='Admitted')#正例/负例分别带标签,用不同的颜色画图

# ax.scatter(negative['Exam 1'], negative['Exam 2'], s=30, c='r', marker='x', label='Not Admitted')

# ax.legend()

# ax.set_xlabel('Exam 1 Score')

# ax.set_ylabel('Exam 2 Score')

def sigmoid(z):

return 1 / (1 + np.exp(-z))

# nums = np.arange(-10, 10, step=1) #creates a vector containing 20 equally spaced values from -10 to 10

# fig, ax = plt.subplots(figsize=(12,4))

# ax.plot(nums, sigmoid(nums), 'r')

def model(X, theta):

return sigmoid(np.dot(X, theta.T))

pdData.insert(0, 'Ones', 1) # in a try / except structure so as not to return an error if the block si executed several times

# set X (training data) and y (target variable)

orig_data = pdData.as_matrix() # convert the Pandas representation of the data to an array useful for further computations

cols = orig_data.shape[1]

X = orig_data[:,0:cols-1]

y = orig_data[:,cols-1:cols]

# convert to numpy arrays and initalize the parameter array theta

#X = np.matrix(X.values)

#y = np.matrix(data.iloc[:,3:4].values) #np.array(y.values)

theta = np.zeros([1, 3])

# print(X[:5])

# print(y[:5])

# print(theta)

# print(X.shape,y.shape,theta.shape)

def cost(X, y, theta):

left = np.multiply(-y, np.log(model(X, theta)))

right = np.multiply(1 - y, np.log(1 - model(X, theta)))

return np.sum(left - right) / (len(X))

# print(cost(X, y, theta))

def gradient(X, y, theta):

grad = np.zeros(theta.shape)

error = (model(X, theta)- y).ravel()

for j in range(len(theta.ravel())): #for each parmeter

term = np.multiply(error, X[:,j])

grad[0, j] = np.sum(term) / len(X)

return grad

STOP_ITER = 0

STOP_COST = 1

STOP_GRAD = 2

def stopCriterion(type, value, threshold):

#设定三种不同的停止策略

if type == STOP_ITER: return value > threshold

elif type == STOP_COST: return abs(value[-1]-value[-2]) < threshold

elif type == STOP_GRAD: return np.linalg.norm(value) < threshold

#洗牌

def shuffleData(data):

np.random.shuffle(data)

cols = data.shape[1]

X = data[:, 0:cols-1]

y = data[:, cols-1:]

return X, y

def descent(data, theta, batchSize, stopType, thresh, alpha):

#梯度下降求解

init_time = time.time()

i = 0 # 迭代次数

k = 0 # batch

X, y = shuffleData(data)

grad = np.zeros(theta.shape) # 计算的梯度

costs = [cost(X, y, theta)] # 损失值

while True:

grad = gradient(X[k:k+batchSize], y[k:k+batchSize], theta)

k += batchSize #取batch数量个数据

if k >= n:

k = 0

X, y = shuffleData(data) #重新洗牌

theta = theta - alpha*grad # 参数更新

costs.append(cost(X, y, theta)) # 计算新的损失

i += 1

if stopType == STOP_ITER: value = i

elif stopType == STOP_COST: value = costs

elif stopType == STOP_GRAD: value = grad

if stopCriterion(stopType, value, thresh): break

return theta, i-1, costs, grad, time.time() - init_time

def runExpe(data, theta, batchSize, stopType, thresh, alpha):

#import pdb; pdb.set_trace();

theta, iter, costs, grad, dur = descent(data, theta, batchSize, stopType, thresh, alpha)

name = "Original" if (data[:,1]>2).sum() > 1 else "Scaled"

name += " data - learning rate: {} - ".format(alpha)

if batchSize==n: strDescType = "Gradient"

elif batchSize==1: strDescType = "Stochastic"

else: strDescType = "Mini-batch ({})".format(batchSize)

name += strDescType + " descent - Stop: "

if stopType == STOP_ITER: strStop = "{} iterations".format(thresh)

elif stopType == STOP_COST: strStop = "costs change < {}".format(thresh)

else: strStop = "gradient norm < {}".format(thresh)

name += strStop

print ("***{}\nTheta: {} - Iter: {} - Last cost: {:03.2f} - Duration: {:03.2f}s".format(

name, theta, iter, costs[-1], dur))

fig, ax = plt.subplots(figsize=(12,4))

ax.plot(np.arange(len(costs)), costs, 'r')

ax.set_xlabel('Iterations')

ax.set_ylabel('Cost')

ax.set_title(name.upper() + ' - Error vs. Iteration')

return theta

#选择的梯度下降方法是基于所有样本的

# n=100

# runExpe(orig_data, theta, n, STOP_ITER, thresh=5000, alpha=0.000001)

# 设置基于cost差值小于thresh时停止迭代

# runExpe(orig_data, theta, n, STOP_COST, thresh=0.000001, alpha=0.001)

# 设置基于梯度差停止迭代

runExpe(orig_data, theta, n, STOP_GRAD, thresh=0.05, alpha=0.001)

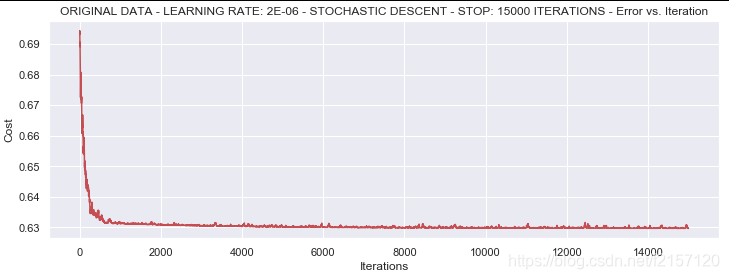

1.2.8 对比不同的梯度下降方法¶

runExpe(orig_data, theta, 1, STOP_ITER, thresh=5000, alpha=0.001)

***Original data - learning rate: 0.001 - Stochastic descent - Stop: 5000 iterations

Theta: [[-0.385463 0.0246911 0.00267637]] - Iter: 5000 - Last cost: 0.65 - Duration: 0.97s

有点爆炸。。。很不稳定,再来试试把学习率调小一些

runExpe(orig_data, theta, 1, STOP_ITER, thresh=15000, alpha=0.000002)

***Original data - learning rate: 2e-06 - Stochastic descent - Stop: 15000 iterations

Theta: [[-0.00202317 0.0098878 0.00083114]] - Iter: 15000 - Last cost: 0.63 - Duration: 2.71s

速度快,但稳定性差,需要很小的学习率

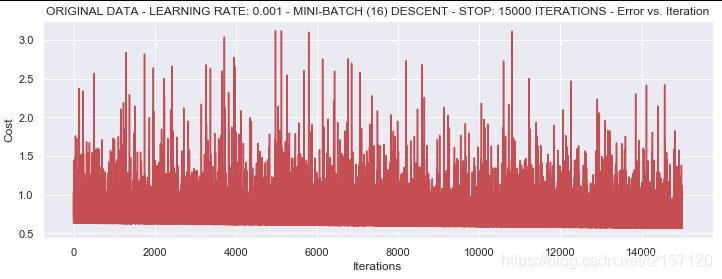

runExpe(orig_data, theta, 16, STOP_ITER, thresh=15000, alpha=0.001)

***Original data - learning rate: 0.001 - Mini-batch (16) descent - Stop: 15000 iterations

Theta: [[-1.03285883 0.03317548 0.02643331]] - Iter: 15000 - Last cost: 0.93 - Duration: 3.27s

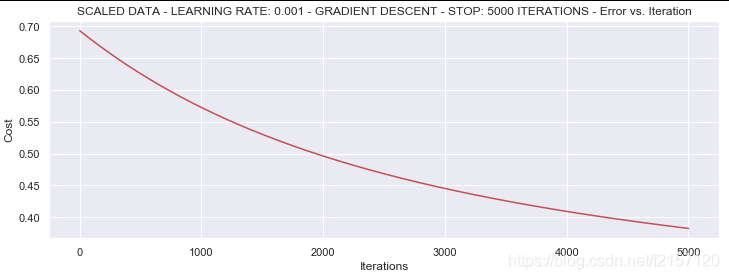

浮动仍然比较大,我们来尝试下对数据进行标准化 将数据按其属性(按列进行)减去其均值,然后除以其方差。最后得到的结果是,对每个属性/每列来说所有数据都聚集在0附近,方差值为1

from sklearn import preprocessing as pp

scaled_data = orig_data.copy()

scaled_data[:, 1:3] = pp.scale(orig_data[:, 1:3])

runExpe(scaled_data, theta, n, STOP_ITER, thresh=5000, alpha=0.001)

***Scaled data - learning rate: 0.001 - Gradient descent - Stop: 5000 iterations

Theta: [[0.3080807 0.86494967 0.77367651]] - Iter: 5000 - Last cost: 0.38 - Duration: 3.90s

它好多了!原始数据,只能达到达到0.61,而我们得到了0.38个在这里! 所以对数据做预处理是非常重要的

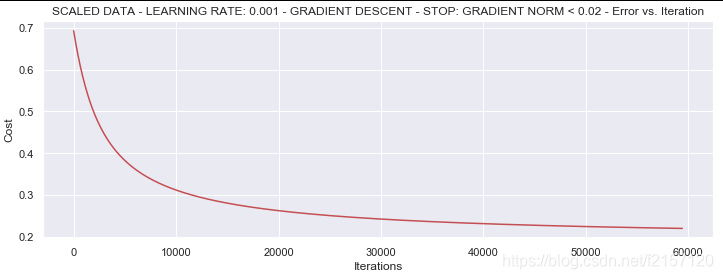

runExpe(scaled_data, theta, n, STOP_GRAD, thresh=0.02, alpha=0.001)

***Scaled data - learning rate: 0.001 - Gradient descent - Stop: gradient norm < 0.02

Theta: [[1.0707921 2.63030842 2.41079787]] - Iter: 59422 - Last cost: 0.22 - Duration: 36.86s

更多的迭代次数会使得损失下降的更多

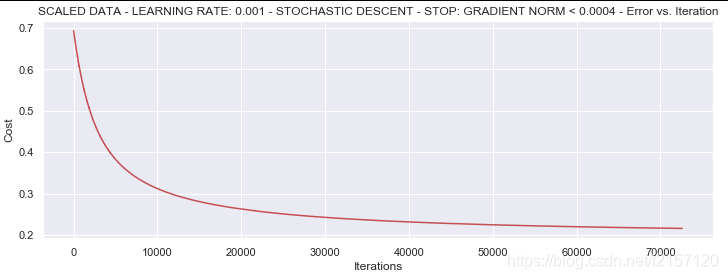

theta = runExpe(scaled_data, theta, 1, STOP_GRAD, thresh=0.002/5, alpha=0.001)

***Scaled data - learning rate: 0.001 - Stochastic descent - Stop: gradient norm < 0.0004

Theta: [[1.14905211 2.79259999 2.56688733]] - Iter: 72622 - Last cost: 0.22 - Duration: 18.33s

随机梯度下降更快,但是我们需要迭代的次数也需要更多,所以还是用mini-batch的比较合适!!!

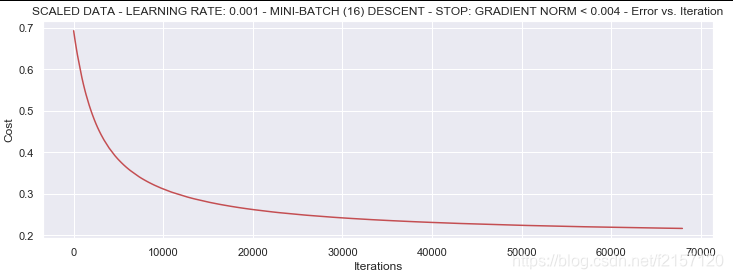

runExpe(scaled_data, theta, 16, STOP_GRAD, thresh=0.002*2, alpha=0.001)

***Scaled data - learning rate: 0.001 - Mini-batch (16) descent - Stop: gradient norm < 0.004

Theta: [[1.12425257 2.73721986 2.51657001]] - Iter: 67934 - Last cost: 0.22 - Duration: 23.23s

mini-batch优于sgd,但仍然不稳定

遇到问题,应该先从数据层面入手,最后才是修改模型

拿到数据时,应先对数据进行预处理,尝试整体/sgd/mini-batch等不同的方案,哪个方案好用哪个

1.3 精度

#设定阈值

def predict(X, theta):

return [1 if x >= 0.5 else 0 for x in model(X, theta)]

scaled_X = scaled_data[:, :3]

y = scaled_data[:, 3]

predictions = predict(scaled_X, theta)

correct = [1 if ((a == 1 and b == 1) or (a == 0 and b == 0)) else 0 for (a, b) in zip(predictions, y)]

accuracy = (sum(map(int, correct)) % len(correct))

print ('accuracy = {0}%'.format(accuracy))

accuracy = 60%

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import numpy.random

import time

path = "C:/Users/Amber/Documents/唐宇迪-机器学习课程资料/机器学习算法配套案例实战/梯度下降/data/LogiReg_data.txt"

pdData = pd.read_csv(path, header=None, names=['Exam 1', 'Exam 2', 'Admitted'])

#print(pdData.head())

#print(pdData.shape)

positive = pdData[pdData['Admitted'] == 1] # returns the subset of rows such Admitted = 1, i.e. the set of *positive* examples

negative = pdData[pdData['Admitted'] == 0] # returns the subset of rows such Admitted = 0, i.e. the set of *negative* examples

# fig, ax = plt.subplots(figsize=(10,5)) #指定画图域的长高

# ax.scatter(positive['Exam 1'], positive['Exam 2'], s=30, c='b', marker='o', label='Admitted')#正例/负例分别带标签,用不同的颜色画图

# ax.scatter(negative['Exam 1'], negative['Exam 2'], s=30, c='r', marker='x', label='Not Admitted')

# ax.legend()

# ax.set_xlabel('Exam 1 Score')

# ax.set_ylabel('Exam 2 Score')

def sigmoid(z):

return 1 / (1 + np.exp(-z))

# nums = np.arange(-10, 10, step=1) #creates a vector containing 20 equally spaced values from -10 to 10

# fig, ax = plt.subplots(figsize=(12,4))

# ax.plot(nums, sigmoid(nums), 'r')

def model(X, theta):

return sigmoid(np.dot(X, theta.T))

pdData.insert(0, 'Ones', 1) # in a try / except structure so as not to return an error if the block si executed several times

# set X (training data) and y (target variable)

orig_data = pdData.as_matrix() # convert the Pandas representation of the data to an array useful for further computations

cols = orig_data.shape[1]

X = orig_data[:,0:cols-1]

y = orig_data[:,cols-1:cols]

# convert to numpy arrays and initalize the parameter array theta

#X = np.matrix(X.values)

#y = np.matrix(data.iloc[:,3:4].values) #np.array(y.values)

theta = np.zeros([1, 3])

# print(X[:5])

# print(y[:5])

# print(theta)

# print(X.shape,y.shape,theta.shape)

def cost(X, y, theta):

left = np.multiply(-y, np.log(model(X, theta)))

right = np.multiply(1 - y, np.log(1 - model(X, theta)))

return np.sum(left - right) / (len(X))

# print(cost(X, y, theta))

def gradient(X, y, theta):

grad = np.zeros(theta.shape)

error = (model(X, theta)- y).ravel()

for j in range(len(theta.ravel())): #for each parmeter

term = np.multiply(error, X[:,j])

grad[0, j] = np.sum(term) / len(X)

return grad

STOP_ITER = 0

STOP_COST = 1

STOP_GRAD = 2

def stopCriterion(type, value, threshold):

#设定三种不同的停止策略

if type == STOP_ITER: return value > threshold

elif type == STOP_COST: return abs(value[-1]-value[-2]) < threshold

elif type == STOP_GRAD: return np.linalg.norm(value) < threshold

#洗牌

def shuffleData(data):

np.random.shuffle(data)

cols = data.shape[1]

X = data[:, 0:cols-1]

y = data[:, cols-1:]

return X, y

def descent(data, theta, batchSize, stopType, thresh, alpha):

#梯度下降求解

init_time = time.time()

i = 0 # 迭代次数

k = 0 # batch

X, y = shuffleData(data)

grad = np.zeros(theta.shape) # 计算的梯度

costs = [cost(X, y, theta)] # 损失值

while True:

grad = gradient(X[k:k+batchSize], y[k:k+batchSize], theta)

k += batchSize #取batch数量个数据

if k >= n:

k = 0

X, y = shuffleData(data) #重新洗牌

theta = theta - alpha*grad # 参数更新

costs.append(cost(X, y, theta)) # 计算新的损失

i += 1

if stopType == STOP_ITER: value = i

elif stopType == STOP_COST: value = costs

elif stopType == STOP_GRAD: value = grad

if stopCriterion(stopType, value, thresh): break

return theta, i-1, costs, grad, time.time() - init_time

def runExpe(data, theta, batchSize, stopType, thresh, alpha):

#import pdb; pdb.set_trace();

theta, iter, costs, grad, dur = descent(data, theta, batchSize, stopType, thresh, alpha)

name = "Original" if (data[:,1]>2).sum() > 1 else "Scaled"

name += " data - learning rate: {} - ".format(alpha)

if batchSize==n: strDescType = "Gradient"

elif batchSize==1: strDescType = "Stochastic"

else: strDescType = "Mini-batch ({})".format(batchSize)

name += strDescType + " descent - Stop: "

if stopType == STOP_ITER: strStop = "{} iterations".format(thresh)

elif stopType == STOP_COST: strStop = "costs change < {}".format(thresh)

else: strStop = "gradient norm < {}".format(thresh)

name += strStop

print ("***{}\nTheta: {} - Iter: {} - Last cost: {:03.2f} - Duration: {:03.2f}s".format(

name, theta, iter, costs[-1], dur))

fig, ax = plt.subplots(figsize=(12,4))

ax.plot(np.arange(len(costs)), costs, 'r')

ax.set_xlabel('Iterations')

ax.set_ylabel('Cost')

ax.set_title(name.upper() + ' - Error vs. Iteration')

return theta

#选择的梯度下降方法是基于所有样本的

# n=100

# runExpe(orig_data, theta, n, STOP_ITER, thresh=5000, alpha=0.000001)

# 设置两次迭代差值小于thresh时停止迭代

# runExpe(orig_data, theta, n, STOP_COST, thresh=0.000001, alpha=0.001)

# runExpe(orig_data, theta, n, STOP_GRAD, thresh=0.05, alpha=0.001)

# runExpe(orig_data, theta, 1, STOP_ITER, thresh=5000, alpha=0.001)

# runExpe(orig_data, theta, 1, STOP_ITER, thresh=15000, alpha=0.000002)

# runExpe(orig_data, theta, 16, STOP_ITER, thresh=15000, alpha=0.001)

from sklearn import preprocessing as pp

scaled_data = orig_data.copy()

scaled_data[:, 1:3] = pp.scale(orig_data[:, 1:3])

# runExpe(scaled_data, theta, n, STOP_ITER, thresh=5000, alpha=0.001)

# runExpe(scaled_data, theta, n, STOP_GRAD, thresh=0.02, alpha=0.001)

# theta = runExpe(scaled_data, theta, 1, STOP_GRAD, thresh=0.002/5, alpha=0.001)

runExpe(scaled_data, theta, 16, STOP_GRAD, thresh=0.002*2, alpha=0.001)

#设定阈值

def predict(X, theta):

return [1 if x >= 0.5 else 0 for x in model(X, theta)]

scaled_X = scaled_data[:, :3]

y = scaled_data[:, 3]

predictions = predict(scaled_X, theta)

correct = [1 if ((a == 1 and b == 1) or (a == 0 and b == 0)) else 0 for (a, b) in zip(predictions, y)]

accuracy = (sum(map(int, correct)) % len(correct))

print ('accuracy = {0}%'.format(accuracy))

LogiReg_data.txt

34.62365962451697,78.0246928153624,0

30.28671076822607,43.89499752400101,0

35.84740876993872,72.90219802708364,0

60.18259938620976,86.30855209546826,1

79.0327360507101,75.3443764369103,1

45.08327747668339,56.3163717815305,0

61.10666453684766,96.51142588489624,1

75.02474556738889,46.55401354116538,1

76.09878670226257,87.42056971926803,1

84.43281996120035,43.53339331072109,1

95.86155507093572,38.22527805795094,0

75.01365838958247,30.60326323428011,0

82.30705337399482,76.48196330235604,1

69.36458875970939,97.71869196188608,1

39.53833914367223,76.03681085115882,0

53.9710521485623,89.20735013750205,1

69.07014406283025,52.74046973016765,1

67.94685547711617,46.67857410673128,0

70.66150955499435,92.92713789364831,1

76.97878372747498,47.57596364975532,1

67.37202754570876,42.83843832029179,0

89.67677575072079,65.79936592745237,1

50.534788289883,48.85581152764205,0

34.21206097786789,44.20952859866288,0

77.9240914545704,68.9723599933059,1

62.27101367004632,69.95445795447587,1

80.1901807509566,44.82162893218353,1

93.114388797442,38.80067033713209,0

61.83020602312595,50.25610789244621,0

38.78580379679423,64.99568095539578,0

61.379289447425,72.80788731317097,1

85.40451939411645,57.05198397627122,1

52.10797973193984,63.12762376881715,0

52.04540476831827,69.43286012045222,1

40.23689373545111,71.16774802184875,0

54.63510555424817,52.21388588061123,0

33.91550010906887,98.86943574220611,0

64.17698887494485,80.90806058670817,1

74.78925295941542,41.57341522824434,0

34.1836400264419,75.2377203360134,0

83.90239366249155,56.30804621605327,1

51.54772026906181,46.85629026349976,0

94.44336776917852,65.56892160559052,1

82.36875375713919,40.61825515970618,0

51.04775177128865,45.82270145776001,0

62.22267576120188,52.06099194836679,0

77.19303492601364,70.45820000180959,1

97.77159928000232,86.7278223300282,1

62.07306379667647,96.76882412413983,1

91.56497449807442,88.69629254546599,1

79.94481794066932,74.16311935043758,1

99.2725269292572,60.99903099844988,1

90.54671411399852,43.39060180650027,1

34.52451385320009,60.39634245837173,0

50.2864961189907,49.80453881323059,0

49.58667721632031,59.80895099453265,0

97.64563396007767,68.86157272420604,1

32.57720016809309,95.59854761387875,0

74.24869136721598,69.82457122657193,1

71.79646205863379,78.45356224515052,1

75.3956114656803,85.75993667331619,1

35.28611281526193,47.02051394723416,0

56.25381749711624,39.26147251058019,0

30.05882244669796,49.59297386723685,0

44.66826172480893,66.45008614558913,0

66.56089447242954,41.09209807936973,0

40.45755098375164,97.53518548909936,1

49.07256321908844,51.88321182073966,0

80.27957401466998,92.11606081344084,1

66.74671856944039,60.99139402740988,1

32.72283304060323,43.30717306430063,0

64.0393204150601,78.03168802018232,1

72.34649422579923,96.22759296761404,1

60.45788573918959,73.09499809758037,1

58.84095621726802,75.85844831279042,1

99.82785779692128,72.36925193383885,1

47.26426910848174,88.47586499559782,1

50.45815980285988,75.80985952982456,1

60.45555629271532,42.50840943572217,0

82.22666157785568,42.71987853716458,0

88.9138964166533,69.80378889835472,1

94.83450672430196,45.69430680250754,1

67.31925746917527,66.58935317747915,1

57.23870631569862,59.51428198012956,1

80.36675600171273,90.96014789746954,1

68.46852178591112,85.59430710452014,1

42.0754545384731,78.84478600148043,0

75.47770200533905,90.42453899753964,1

78.63542434898018,96.64742716885644,1

52.34800398794107,60.76950525602592,0

94.09433112516793,77.15910509073893,1

90.44855097096364,87.50879176484702,1

55.48216114069585,35.57070347228866,0

74.49269241843041,84.84513684930135,1

89.84580670720979,45.35828361091658,1

83.48916274498238,48.38028579728175,1

42.2617008099817,87.10385094025457,1

99.31500880510394,68.77540947206617,1

55.34001756003703,64.9319380069486,1

74.77589300092767,89.52981289513276,1

来源:CSDN

作者:史努B

链接:https://blog.csdn.net/f2157120/article/details/104130432