As a replacement for SCSI and SATA, the storage industry has developed a new protocol called Non-Volatile Memory Express, usually shortened to NVMe. NVMe replaces SCSI both for individual drives and for storage networking fabrics. It was specifically designed to support modern, low-latency SSDs.

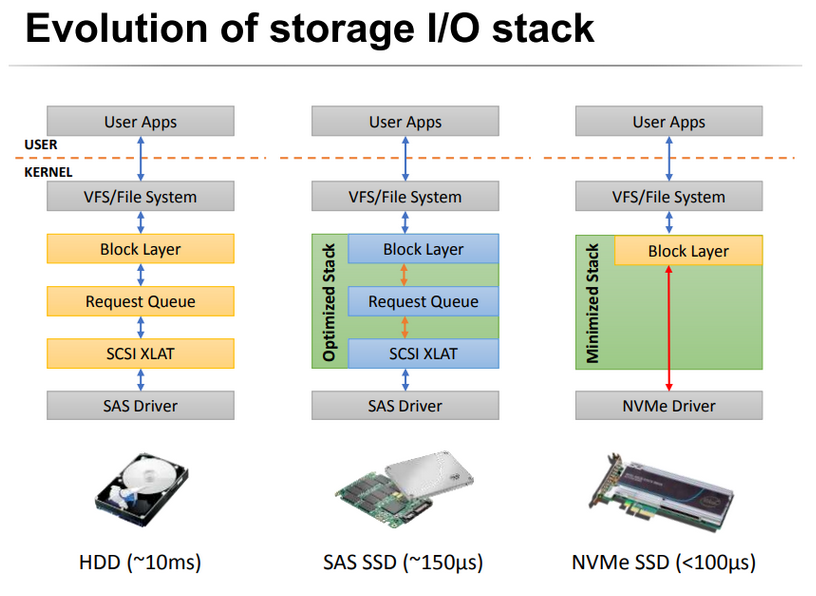

NVMe aims to streamline the I/O software stack. Compared with legacy protocols like SAS and SATA, it delivers significantly higher I/O performance and lower latency by placing storage physically closer to the processor and making the protocol more efficient, which shortens the I/O path. NVMe devices sit directly on the PCIe bus, which offers higher bandwidth and lower latency than SAS/SATA storage controllers that must themselves attach to the PCIe interface.

The simplified I/O stack diagram shows how NVMe shortens the I/O path compared to SCSI (it depicts NVMe within a server, not the implementation of NVMe over Fabrics).

In addition to today's existing storage networking technologies, there is also NVMe over Fabrics (NVMe-oF). This specification makes it possible to use NVMe beyond a local PCIe bus, over fabric transports that include Fibre Channel, RDMA (over Ethernet or InfiniBand) and TCP.

One of the ways NVMe reduces storage latency is by increasing the level of parallelism in disk I/O. Because the SAS and SATA protocols were designed for mechanical spinning disks, a single I/O queue was enough to manage read/write requests. With the deployment of SSDs, this single-queue mechanism has become a bottleneck. NVMe supports thousands of queues (up to 65,535), each with a much greater queue depth (up to 65,535 requests). By way of comparison, SAS and SATA each have a single queue, with depths of 256 and 32 requests respectively.
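To put those queue figures side by side, here is a small back-of-the-envelope sketch. It simply multiplies queue count by queue depth to get the theoretical ceiling on outstanding requests per device. The constant and function names are made up for illustration, the numbers are the ones quoted in this post, and real devices and drivers usually configure far fewer queues than the protocol maximum.

```python
# Protocol limits as quoted in the text above (illustrative names, not from any library).
NVME_QUEUES = 65_535
NVME_QUEUE_DEPTH = 65_535
SAS_QUEUE_DEPTH = 256    # single queue
SATA_QUEUE_DEPTH = 32    # single queue


def max_outstanding(queues: int, depth: int) -> int:
    """Theoretical ceiling on in-flight requests: queue count times queue depth."""
    return queues * depth


nvme_total = max_outstanding(NVME_QUEUES, NVME_QUEUE_DEPTH)
sas_total = max_outstanding(1, SAS_QUEUE_DEPTH)
sata_total = max_outstanding(1, SATA_QUEUE_DEPTH)

print(f"NVMe: {nvme_total:,} outstanding requests")
print(f"SAS:  {sas_total:,} outstanding requests")
print(f"SATA: {sata_total:,} outstanding requests")
```

The point is less the absolute number than the shape: with many independent queues, each CPU core can submit I/O on its own queue instead of contending for a single shared one.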

Another industry first from NetApp.

NetApp has had a long history of using NAND media as an acceleration solution. PAM cards (Performance Acceleration Module) based on PCIe flash storage were introduced as early as 2008 to provide read caching (now the technology is called Flash Cache).

Way back in 2015, NetApp introduced the All-Flash FAS or AFF product line using SSDs for the back-end disk. But now with the NetApp AFF A800, the IT industry has the first all-flash platform that uses NVMe technology end-to-end.

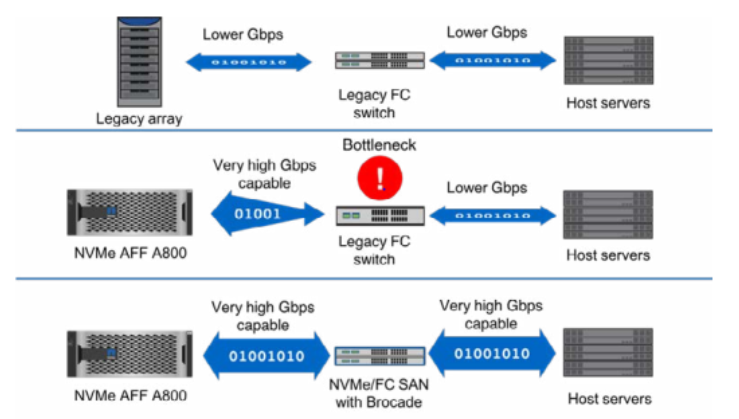

An NVMe-powered SAN scale-out cluster supports up to 12 nodes (6 HA pairs) with 1,440 drives and nearly 160PB of effective capacity. NAS scale-out clusters support up to 24 nodes (12 HA pairs). At the front end of the AFF A800, NetApp supports FC-NVMe using 32Gb (Gen 6) Fibre Channel. This allows the company to claim that AFF A800 is the first end-to-end NVMe enabled storage array in the market. The platform also supports 100Gb Ethernet.

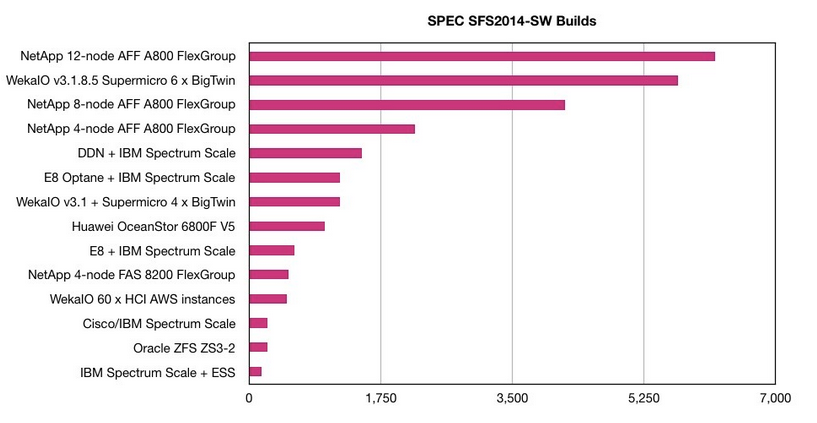

In terms of performance, a single node-pair can achieve over 1 million IOPS and 25GB/s throughput at 200µs latency (that’s microseconds, not milliseconds!). The NetApp A800 also recently took the top spot on the SPEC SFS 2014 software build benchmark.
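As a rough sanity check on what those numbers imply, Little's Law (average in-flight requests = throughput × latency) gives an estimate of how much concurrency a host would need to sustain them. This is only a sketch: it assumes steady state and that the 1 million IOPS and 200µs figures apply to the same workload, which the published numbers do not necessarily guarantee. The function name is hypothetical.

```python
def required_concurrency(iops: int, latency_us: int) -> float:
    """Little's Law: average number of in-flight I/Os = IOPS * latency (in seconds)."""
    return iops * latency_us / 1_000_000


# Figures quoted above: 1 million IOPS at 200 microseconds.
in_flight = required_concurrency(iops=1_000_000, latency_us=200)
print(f"Average I/Os in flight: {in_flight:.0f}")
```

Under those assumptions, the workload needs on the order of a couple of hundred I/Os in flight at once, which is well beyond what a single 32-deep SATA queue could hold.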

NetApp is working on host-side connectivity using NVMe-oF, with technology from the acquisition of Plexistor. This is now being marketed as Memory Accelerated Data, or MAX Data for short. MAX Data uses host-based storage-class memory (SCM) and the Plexistor software to create an extremely low-latency local file system, capable of delivering single-digit microsecond latencies. The local file system acts as a write-back cache, offloading data via snapshots to an AFF appliance over 100Gb/s RDMA.

The beauty of being a NetApp customer is that you can migrate to ever faster and lower-latency hardware, all without changing your data management platform (ONTAP). How about them apples!

For more on how NetApp is leveraging NVMe to transform the enterprise data storage landscape, download their whitepaper – NVMe Modern SAN Primer.

Source: CSDN

Author: yiyeguzhou100

Link: https://blog.csdn.net/yiyeguzhou100/article/details/104111627