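Missing value analysis and handling

- Analyze why the missing values occur

- Fill the missing values with an appropriate method

Outlier analysis and handling

- Adjust the distribution of the training data according to the distribution of the test set

- Find outliers with a suitable method

- Handle the outliers

Deep cleaning

- Analyze the data distribution of each communityName, city, region, and plate, and clean the data accordingly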
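The post does not spell out the first outlier item, adjusting the training data to the test set's distribution. One simple reading is to keep only the training rows whose feature value falls inside the range observed in the test set. This is a sketch of that assumption, not the author's method. The helper name `align_to_test_range` and the toy data are hypothetical.

```python
import pandas as pd

def align_to_test_range(train: pd.DataFrame, test: pd.DataFrame, col: str) -> pd.DataFrame:
    """Hypothetical helper: keep training rows whose `col` lies within the test set's [min, max]."""
    low, high = test[col].min(), test[col].max()
    # Series.between is inclusive on both ends by default
    return train[train[col].between(low, high)]

# Toy data (hypothetical): the test set only covers areas from 20 to 150
train = pd.DataFrame({'area': [10, 35, 80, 149, 300]})
test = pd.DataFrame({'area': [20, 60, 150]})
aligned = align_to_test_range(train, test, 'area')
print(aligned['area'].tolist())  # [35, 80, 149]
```

In practice, quantiles of the test set (for example the 1st and 99th percentiles) may be a more robust bound than the raw min and max.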

References:

一文带你探索性数据分析(EDA) ("Exploratory Data Analysis (EDA) in One Article")

Main approach

Although this step is called missing value handling, it also covers some of the most basic data processing.


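Missing value handling

Missing values can broadly be handled in three ways: deletion, imputation, and mapping to a higher dimension (treating missingness as a category of its own). Please consult other resources for the details.

From Task 1, the columns with explicit missing values are pv and uv. However, the analysis of each feature's nunique distribution shows that rentType contains the value "--", which is also a kind of missing value. Values such as "未知方式" (unknown method) in rentType and "暂无数据" (no data) in houseToward are essentially missing values as well. They can be treated as a special category of their own, with no need to modify or fill them.

Convert the "--" values in rentType to the "未知方式" category;

Fill the missing pv/uv values with the column mean;

buildYear contains "暂无信息" (no information); fill those entries with the mode.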
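The three strategies can be compared side by side on a tiny frame. This is a minimal sketch; the toy values are made up. The third strategy is shown here as "missing as its own category plus an indicator flag", which is one common way to read "mapping to a higher dimension". That reading is an assumption, not necessarily the author's exact intent.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({
    'pv': [120.0, np.nan, 300.0, 180.0],
    'houseToward': ['南', None, '南北', '南'],
})

# 1. Deletion: drop every row that contains a missing value
dropped = df.dropna()

# 2. Imputation: numeric gaps get the mean, categorical gaps get the mode
filled = df.copy()
filled['pv'] = filled['pv'].fillna(filled['pv'].mean())
filled['houseToward'] = filled['houseToward'].fillna(filled['houseToward'].mode()[0])

# 3. Missingness as information: a separate category plus an indicator column
as_category = df.copy()
as_category['houseToward'] = as_category['houseToward'].fillna('missing')
as_category['pv_missing'] = as_category['pv'].isna().astype(int)

print(len(dropped))                          # 3
print(filled['pv'].tolist())                 # [120.0, 200.0, 300.0, 180.0]
print(as_category['houseToward'].tolist())   # ['南', 'missing', '南北', '南']
```

Deletion loses the whole row, so it is usually reserved for rare gaps. Imputation keeps the row but hides the fact that a value was missing. The indicator approach keeps that fact available to the model.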
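Placeholder strings such as "--" do not show up in `isnull()`, so they have to be searched for explicitly. Below is a sketch that counts them per non-numeric column. The `PLACEHOLDERS` list is an assumption built from the values named above and should be extended after looking at each column's `value_counts()`.

```python
import pandas as pd

# Placeholder tokens named in the text; extend this list as needed (assumption)
PLACEHOLDERS = ['--', '未知方式', '暂无数据', '暂无信息']

def count_placeholders(df: pd.DataFrame) -> dict:
    """Count placeholder tokens in every non-numeric column (real NaNs are not counted)."""
    text_cols = df.select_dtypes(exclude='number').columns
    return {col: int(df[col].isin(PLACEHOLDERS).sum()) for col in text_cols}

# Toy rows (hypothetical) mimicking the columns discussed above
df = pd.DataFrame({
    'rentType': ['整租', '--', '未知方式', '合租'],
    'houseToward': ['南', '暂无数据', '南北', '南'],
    'buildYear': ['1994', '暂无信息', '2005', '2010'],
    'area': [50.0, 80.0, 35.0, 60.0],
})
counts = count_placeholders(df)
print(counts)  # {'rentType': 2, 'houseToward': 1, 'buildYear': 1}
```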

Converting object-type data

Here we simply encode these columns with LabelEncoder; please consult other resources for details on encoding methods.
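LabelEncoder maps each distinct value to an integer in sorted order of the values it was fitted on. preprocessingData creates a new encoder on every call. If it is applied to the training set and the test set separately, the same category can therefore receive different codes. The sketch below shows the problem on made-up rows and a possible fix: fit once on the union of both sets.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

train = pd.DataFrame({'rentType': ['整租', '合租', '整租']})
test = pd.DataFrame({'rentType': ['整租', '未知方式']})

# Fitting separately: '整租' is encoded as 1 in train but 0 in test
train_codes = LabelEncoder().fit_transform(train['rentType'])
test_codes = LabelEncoder().fit_transform(test['rentType'])
print(int(train_codes[0]), int(test_codes[0]))  # 1 0

# Fitting once on both sets keeps the mapping consistent
le = LabelEncoder().fit(pd.concat([train['rentType'], test['rentType']]))
print(le.classes_.tolist())  # ['合租', '整租', '未知方式']
print(int(le.transform(train['rentType'])[0]), int(le.transform(test['rentType'])[0]))  # 1 1
```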

Handling time fields

Because buildYear contains "暂无信息", it has to be converted to int explicitly;

Split tradeTime into month and day.
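Splitting the string by "/" works as long as every tradeTime value has the form year/month/day, which is what `split('/')[1]` and `split('/')[2]` imply. An alternative sketch, assuming that same format, parses the column once with `pd.to_datetime`. It raises an error on malformed dates instead of silently taking the wrong piece. The sample dates are made up.

```python
import pandas as pd

df = pd.DataFrame({'tradeTime': ['2018/11/28', '2018/1/5', '2018/12/31']})

# Assumed format: year/month/day (non-zero-padded months and days are accepted)
parsed = pd.to_datetime(df['tradeTime'], format='%Y/%m/%d')
df['month'] = parsed.dt.month
df['day'] = parsed.dt.day
print(df[['month', 'day']].values.tolist())  # [[11, 28], [1, 5], [12, 31]]
```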

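Dropping irrelevant fields

ID is a unique identifier and carries no information for modeling, so drop it;

city has only one value, SH, so drop it as well;

tradeTime has already been split into month and day, so drop the original field.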
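The two reasons given above for dropping ID and city can be checked mechanically. A column with a single distinct value, like city, carries no information. A column with a different value in every row looks like an ID. The helper below is a hypothetical sketch. A continuous feature can also be unique in every row of a small sample, so the second list is a candidate list to confirm by hand.

```python
import pandas as pd

def find_constant_and_unique(df: pd.DataFrame):
    """Hypothetical helper: columns with one distinct value, and columns unique in every row."""
    constant = [c for c in df.columns if df[c].nunique(dropna=False) == 1]
    all_unique = [c for c in df.columns if df[c].nunique(dropna=False) == len(df)]
    return constant, all_unique

# Toy rows (hypothetical values)
df = pd.DataFrame({
    'ID': [100301, 100302, 100303, 100304],
    'city': ['SH', 'SH', 'SH', 'SH'],
    'area': [50.0, 80.0, 50.0, 62.5],
})
constant, all_unique = find_constant_and_unique(df)
print(constant, all_unique)  # ['city'] ['ID']
```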

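1. Missing value handling

Handle the missing values of the features and, at the same time, drop some useless features (a subjective, experience-based judgment):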

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

def preprocessingData(data):
    # Fill missing values: treat "--" in rentType as the "未知方式" (unknown method) category
    data.loc[data['rentType'] == '--', 'rentType'] = '未知方式'

    # Convert object-type columns to integer codes
    columns = ['rentType', 'communityName', 'houseType', 'houseFloor',
               'houseToward', 'houseDecoration', 'region', 'plate']
    for feature in columns:
        data[feature] = LabelEncoder().fit_transform(data[feature])

    # Convert buildYear to int: replace the "暂无信息" placeholder with the mode of the known years
    build_year_mode = data.loc[data['buildYear'] != '暂无信息', 'buildYear'].mode()[0]
    data.loc[data['buildYear'] == '暂无信息', 'buildYear'] = build_year_mode
    data['buildYear'] = data['buildYear'].astype('int')

    # Fill missing pv and uv values with the column mean, then cast to int
    data['pv'] = data['pv'].fillna(data['pv'].mean())
    data['uv'] = data['uv'].fillna(data['uv'].mean())
    data['pv'] = data['pv'].astype('int')
    data['uv'] = data['uv'].astype('int')

    # Split the trade time (assumed format: year/month/day) into month and day
    def month(x):
        return int(x.split('/')[1])

    def day(x):
        return int(x.split('/')[2])

    data['month'] = data['tradeTime'].apply(month)
    data['day'] = data['tradeTime'].apply(day)

    # Drop uninformative features to reduce noise
    data.drop(['city', 'tradeTime', 'ID'], axis=1, inplace=True)

    return data
```

2. Outlier handling

First, use an isolation forest to detect and remove outliers in tradeMoney:

```python
from sklearn.ensemble import IsolationForest

# Clean data: isolation-forest anomaly detection on tradeMoney
def IF_drop(train):
    # contamination=0.01: roughly 1% of the rows are labeled as outliers
    IForest = IsolationForest(contamination=0.01)
    values = train["tradeMoney"].values.reshape(-1, 1)
    IForest.fit(values)
    y_pred = IForest.predict(values)  # -1 = outlier, 1 = inlier
    drop_index = train.loc[y_pred == -1].index
    print(drop_index)
    train.drop(drop_index, inplace=True)
    return train

data_train = IF_drop(data_train)
```
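To see what `contamination=0.01` does, the same model can be run on synthetic one-dimensional "rents": 990 normal values plus 10 injected extremes. This sketch uses made-up data and adds a fixed `random_state` for reproducibility. The model labels roughly 1% of the rows as outliers, and the far-out values are the easiest to isolate.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

rng = np.random.default_rng(0)
# Synthetic data: 990 "normal" rents around 5000 plus 10 extreme ones
normal = rng.normal(5000, 1000, size=990)
extreme = 50000 + 5000 * np.arange(10)
values = np.concatenate([normal, extreme]).reshape(-1, 1)

iforest = IsolationForest(contamination=0.01, random_state=0)
labels = iforest.fit_predict(values)  # -1 = outlier, 1 = inlier

flagged = np.where(labels == -1)[0]
caught = int(np.isin(np.arange(990, 1000), flagged).sum())
print(len(flagged), caught)  # about 10 flagged, nearly all of them the injected extremes
```

Note that contamination fixes the share of rows that will be removed whether or not that many real outliers exist, so it is worth printing the flagged rows (as IF_drop does) before dropping them.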

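```python
def dropData(train):
    # Drop some outliers using fixed thresholds
    train = train[train['area'] <= 200]
    train = train[(train['tradeMoney'] <= 16000) & (train['tradeMoney'] >= 700)]
    train = train[train['totalFloor'] != 0]
    # Return an independent copy so that later in-place edits don't raise SettingWithCopyWarning
    return train.copy()

# Outlier handling for the dataset
data_train = dropData(data_train)
```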

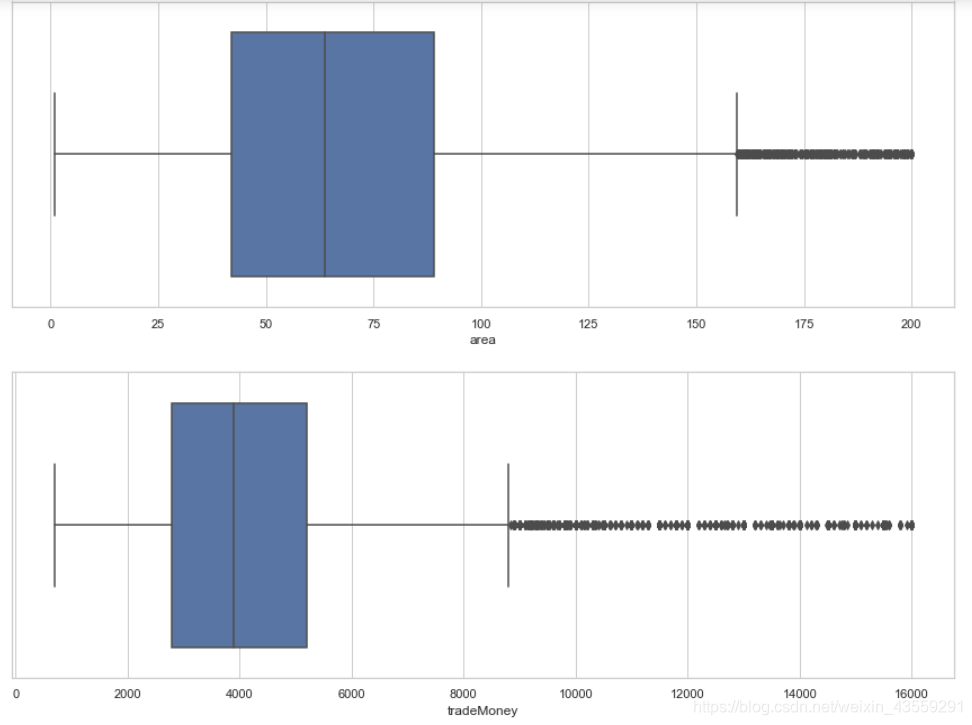

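```python
import matplotlib.pyplot as plt
import seaborn as sns

# After handling the outliers, look at the area and rent distributions again
sns.set(style="whitegrid")

plt.figure(figsize=(15, 5))
sns.boxplot(x=data_train['area'])
plt.show()

plt.figure(figsize=(15, 5))
sns.boxplot(x=data_train['tradeMoney'])
plt.show()
```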
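By default, seaborn draws boxplot whiskers out to 1.5 × IQR beyond the quartiles, and points past them are drawn individually. To turn what the boxplot shows into numbers, here is a sketch that computes the same Tukey fences. `iqr_fences` is a hypothetical helper name, and the series is toy data.

```python
import pandas as pd

def iqr_fences(s: pd.Series, k: float = 1.5):
    """Return the Tukey fences (Q1 - k*IQR, Q3 + k*IQR) of a numeric series."""
    q1, q3 = s.quantile(0.25), s.quantile(0.75)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr

s = pd.Series([10, 20, 30, 40, 50, 60, 70, 80, 90, 500])
low, high = iqr_fences(s)
print(low, high)                            # -35.0 145.0
print(s[(s < low) | (s > high)].tolist())   # [500]
```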

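3. Deep cleaning

Use common sense to spot anomalous values in certain features and delete those rows from the training data. For example, a single-occupant rental with more than one bathroom makes no sense. A listing with a very large area but a very low rent is not plausible either. Other common-sense checks on the features can be applied in the same way.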
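The "very large area, very low rent" check can be written as a rent-per-square-metre rule. In this sketch, the helper name and the cut-off `min_price_per_sqm=10` are hypothetical values to tune against the data; they are not figures from the original post.

```python
import pandas as pd

def flag_implausible_rent(df: pd.DataFrame, min_price_per_sqm: float = 10.0) -> pd.Series:
    """Hypothetical rule: True where rent per square metre is below the cut-off."""
    price_per_sqm = df['tradeMoney'] / df['area']
    return price_per_sqm < min_price_per_sqm

# Toy rows: 100, about 72 and about 4.8 yuan per square metre
df = pd.DataFrame({'area': [30.0, 90.0, 250.0], 'tradeMoney': [3000.0, 6500.0, 1200.0]})
mask = flag_implausible_rent(df)
print(mask.tolist())  # [False, False, True]
cleaned = df[~mask]
```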

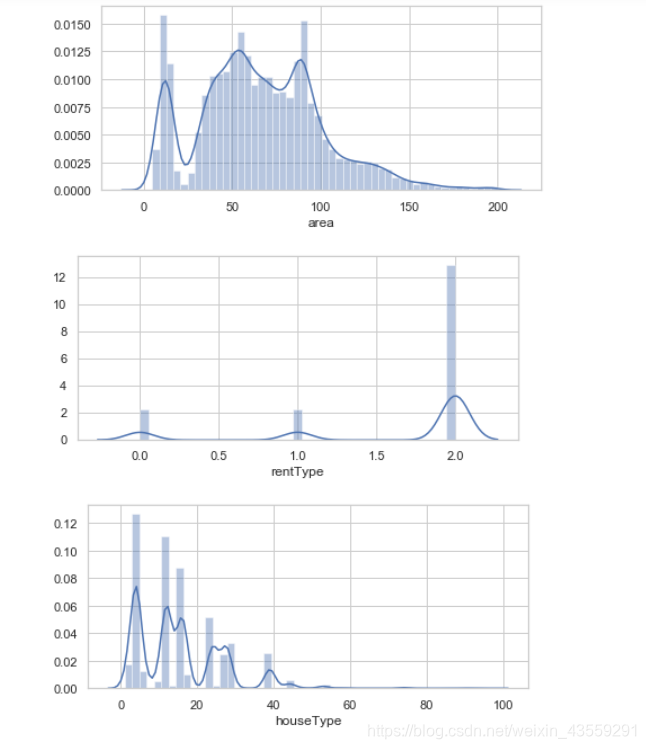

First, look at the distributions of the continuous numerical features:

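```python
numerical_col = [col for col in data_train.columns
                 if data_train[col].dtypes != 'object' and col not in ['tradeMoney']]

for col_1 in numerical_col:
    plt.figure(figsize=(7, 3))
    # sns.distplot is deprecated; histplot with kde=True and stat='density' draws the same kind of plot
    sns.histplot(data_train[col_1], kde=True, stat='density')
    plt.show()
```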
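The cleaning rules that follow use different thresholds for each region. To choose such cut-offs from numbers as well as plots, one option is to summarize area and tradeMoney quantiles per region. This is a sketch: it assumes region still holds the raw string codes, and the toy rows are made up.

```python
import pandas as pd

def region_quantiles(df: pd.DataFrame, cols=('area', 'tradeMoney'), qs=(0.01, 0.5, 0.99)) -> pd.DataFrame:
    """Per-region quantiles of the given columns; the index is (region, quantile)."""
    return df.groupby('region')[list(cols)].quantile(list(qs))

df = pd.DataFrame({
    'region': ['RG00001'] * 4 + ['RG00002'] * 4,
    'area': [20, 40, 60, 80, 30, 50, 70, 90],
    'tradeMoney': [1000, 2000, 3000, 4000, 1500, 2500, 3500, 4500],
})
summary = region_quantiles(df, qs=(0.5,))
print(summary)  # medians: RG00001 area 50.0 / rent 2500.0, RG00002 area 60.0 / rent 3000.0
```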

Then, based on these distributions combined with subjective judgment, perform the deep cleaning:

```python
def cleanData(data):
    # Note: these rules compare region and rentType with their raw string values
    # ('RG00001', '合租', ...), so this function must run before preprocessingData
    # label-encodes those columns.
    data.drop(data[(data['region']=='RG00001') & (data['tradeMoney']<1000)&(data['area']>50)].index,inplace=True)
    data.drop(data[(data['region']=='RG00001') & (data['tradeMoney']>25000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00001') & (data['area']>250)&(data['tradeMoney']<20000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00001') & (data['area']>400)&(data['tradeMoney']>50000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00001') & (data['area']>100)&(data['tradeMoney']<2000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00002') & (data['area']<100)&(data['tradeMoney']>60000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00003') & (data['area']<300)&(data['tradeMoney']>30000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00003') & (data['tradeMoney']<500)&(data['area']<50)].index,inplace=True)
    data.drop(data[(data['region']=='RG00003') & (data['tradeMoney']<1500)&(data['area']>100)].index,inplace=True)
    data.drop(data[(data['region']=='RG00003') & (data['tradeMoney']<2000)&(data['area']>300)].index,inplace=True)
    data.drop(data[(data['region']=='RG00003') & (data['tradeMoney']>5000)&(data['area']<20)].index,inplace=True)
    data.drop(data[(data['region']=='RG00003') & (data['area']>600)&(data['tradeMoney']>40000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00004') & (data['tradeMoney']<1000)&(data['area']>80)].index,inplace=True)
    data.drop(data[(data['region']=='RG00006') & (data['tradeMoney']<200)].index,inplace=True)
    data.drop(data[(data['region']=='RG00005') & (data['tradeMoney']<2000)&(data['area']>180)].index,inplace=True)
    data.drop(data[(data['region']=='RG00005') & (data['tradeMoney']>50000)&(data['area']<200)].index,inplace=True)
    data.drop(data[(data['region']=='RG00006') & (data['area']>200)&(data['tradeMoney']<2000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00007') & (data['area']>100)&(data['tradeMoney']<2500)].index,inplace=True)
    data.drop(data[(data['region']=='RG00010') & (data['area']>200)&(data['tradeMoney']>25000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00010') & (data['area']>400)&(data['tradeMoney']<15000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00010') & (data['tradeMoney']<3000)&(data['area']>200)].index,inplace=True)
    data.drop(data[(data['region']=='RG00010') & (data['tradeMoney']>7000)&(data['area']<75)].index,inplace=True)
    data.drop(data[(data['region']=='RG00010') & (data['tradeMoney']>12500)&(data['area']<100)].index,inplace=True)
    data.drop(data[(data['region']=='RG00004') & (data['area']>400)&(data['tradeMoney']>20000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00008') & (data['tradeMoney']<2000)&(data['area']>80)].index,inplace=True)
    data.drop(data[(data['region']=='RG00009') & (data['tradeMoney']>40000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00009') & (data['area']>300)].index,inplace=True)
    data.drop(data[(data['region']=='RG00009') & (data['area']>100)&(data['tradeMoney']<2000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00011') & (data['tradeMoney']<10000)&(data['area']>390)].index,inplace=True)
    data.drop(data[(data['region']=='RG00012') & (data['area']>120)&(data['tradeMoney']<5000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00013') & (data['area']<100)&(data['tradeMoney']>40000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00013') & (data['area']>400)&(data['tradeMoney']>50000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00013') & (data['area']>80)&(data['tradeMoney']<2000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00014') & (data['area']>300)&(data['tradeMoney']>40000)].index,inplace=True)
    data.drop(data[(data['region']=='RG00014') & (data['tradeMoney']<1300)&(data['area']>80)].index,inplace=True)
    data.drop(data[(data['region']=='RG00014') & (data['tradeMoney']<8000)&(data['area']>200)].index,inplace=True)
    data.drop(data[(data['region']=='RG00014') & (data['tradeMoney']<1000)&(data['area']>20)].index,inplace=True)
    data.drop(data[(data['region']=='RG00014') & (data['tradeMoney']>25000)&(data['area']>200)].index,inplace=True)
    data.drop(data[(data['region']=='RG00014') & (data['tradeMoney']<20000)&(data['area']>250)].index,inplace=True)
    data.drop(data[(data['region']=='RG00005') & (data['tradeMoney']>30000)&(data['area']<100)].index,inplace=True)
    data.drop(data[(data['region']=='RG00005') & (data['tradeMoney']<50000)&(data['area']>600)].index,inplace=True)
    data.drop(data[(data['region']=='RG00005') & (data['tradeMoney']>50000)&(data['area']>350)].index,inplace=True)
    data.drop(data[(data['region']=='RG00006') & (data['tradeMoney']>4000)&(data['area']<100)].index,inplace=True)
    data.drop(data[(data['region']=='RG00006') & (data['tradeMoney']<600)&(data['area']>100)].index,inplace=True)
    data.drop(data[(data['region']=='RG00006') & (data['area']>165)].index,inplace=True)
    data.drop(data[(data['region']=='RG00012') & (data['tradeMoney']<800)&(data['area']<30)].index,inplace=True)
    data.drop(data[(data['region']=='RG00007') & (data['tradeMoney']<1100)&(data['area']>50)].index,inplace=True)
    data.drop(data[(data['region']=='RG00004') & (data['tradeMoney']>8000)&(data['area']<80)].index,inplace=True)
    # Relabel listings that are too large for a shared rental (合租) as whole-unit rentals (整租)
    data.loc[(data['region']=='RG00002')&(data['area']>50)&(data['rentType']=='合租'),'rentType']='整租'
    data.loc[(data['region']=='RG00014')&(data['rentType']=='合租')&(data['area']>60),'rentType']='整租'
    data.drop(data[(data['region']=='RG00008')&(data['tradeMoney']>15000)&(data['area']<110)].index,inplace=True)
    data.drop(data[(data['region']=='RG00008')&(data['tradeMoney']>20000)&(data['area']>110)].index,inplace=True)
    data.drop(data[(data['region']=='RG00008')&(data['tradeMoney']<1500)&(data['area']<50)].index,inplace=True)
    data.drop(data[(data['region']=='RG00008')&(data['rentType']=='合租')&(data['area']>50)].index,inplace=True)
    # Drop region RG00015 entirely
    data.drop(data[(data['region']=='RG00015')].index,inplace=True)
    data.reset_index(drop=True, inplace=True)
    return data

data_train = cleanData(data_train)
```
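cleanData hand-tunes roughly fifty region-specific thresholds. A more compact alternative works per region too, but it is a different, data-driven technique rather than the author's method. It flags rows whose rent per square metre falls outside the Tukey fences computed within the same region. The outline also mentions plate and communityName, and the same function accepts those as `group_col`. The helper name and toy rows are hypothetical.

```python
import pandas as pd

def flag_groupwise_iqr(df: pd.DataFrame, group_col: str = 'region', k: float = 1.5) -> pd.Series:
    """Hypothetical helper: True where tradeMoney/area lies outside its group's Tukey fences."""
    unit_price = df['tradeMoney'] / df['area']
    grouped = unit_price.groupby(df[group_col])
    # transform broadcasts each group's quartile back to that group's rows
    q1 = grouped.transform(lambda s: s.quantile(0.25))
    q3 = grouped.transform(lambda s: s.quantile(0.75))
    iqr = q3 - q1
    return (unit_price < q1 - k * iqr) | (unit_price > q3 + k * iqr)

# Toy rows: every listing is 10 m², so the unit price is tradeMoney / 10
df = pd.DataFrame({
    'region': ['A'] * 6 + ['B'] * 6,
    'area': [10.0] * 12,
    'tradeMoney': [500, 520, 550, 580, 600, 50, 800, 820, 850, 880, 900, 3000],
})
mask = flag_groupwise_iqr(df)
print(mask.tolist())  # only the 50 in region A and the 3000 in region B are True
```

A rent of 850 would be normal in region B but an outlier in region A. Per-group fences capture this difference without writing a separate rule for every region.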

Source: CSDN

Author: weixin_43559291

Link: https://blog.csdn.net/weixin_43559291/article/details/103886173