之前就对GAN这项技术很感兴趣,可是后面一直没有找到时间研究一下,今天找来了一个很不错的例子学习实践了一下,简单来记录一下自己的实践,具体的代码如下:

#!usr/bin/env python

#encoding:utf-8

from __future__ import division

'''

__Author__:沂水寒城

功能: 基于GAN的手写数字生成实践

'''

import os

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

import matplotlib.gridspec as gridspec

from tensorflow.examples.tutorials.mnist import input_data

#设置基本的参数信息

mb_size = 32

X_dim = 784

z_dim = 64

h_dim = 128

lr = 1e-3

m = 5

lam = 1e-3

gamma = 0.5

k_curr = 0

if not os.path.exists('result/'):

os.makedirs('result/')

mnist = input_data.read_data_sets('../../MNIST_data', one_hot=True)

def numberPloter(samples):

'''

数字图像绘制

'''

figure = plt.figure(figsize=(8, 8))

gs = gridspec.GridSpec(4, 4)

gs.update(wspace=0.05, hspace=0.05)

for i, sample in enumerate(samples):

ax = plt.subplot(gs[i])

plt.axis('off')

ax.set_xticklabels([])

ax.set_yticklabels([])

ax.set_aspect('equal')

plt.imshow(sample.reshape(28, 28), cmap='Greys_r')

return figure

def xavier_init(size):

'''

初始化

'''

in_dim = size[0]

xavier_stddev = 1. / tf.sqrt(in_dim / 2.)

return tf.random_normal(shape=size, stddev=xavier_stddev)

X = tf.placeholder(tf.float32, shape=[None, X_dim])

z = tf.placeholder(tf.float32, shape=[None, z_dim])

k = tf.placeholder(tf.float32)

D_W1 = tf.Variable(xavier_init([X_dim, h_dim]))

D_b1 = tf.Variable(tf.zeros(shape=[h_dim]))

D_W2 = tf.Variable(xavier_init([h_dim, X_dim]))

D_b2 = tf.Variable(tf.zeros(shape=[X_dim]))

G_W1 = tf.Variable(xavier_init([z_dim, h_dim]))

G_b1 = tf.Variable(tf.zeros(shape=[h_dim]))

G_W2 = tf.Variable(xavier_init([h_dim, X_dim]))

G_b2 = tf.Variable(tf.zeros(shape=[X_dim]))

theta_G = [G_W1, G_W2, G_b1, G_b2]

theta_D = [D_W1, D_W2, D_b1, D_b2]

def sample_z(m, n):

'''

随机数

'''

return np.random.uniform(-1., 1., size=[m, n])

def G(z):

'''

定义两个网络

'''

G_h1 = tf.nn.relu(tf.matmul(z, G_W1) + G_b1)

G_log_prob = tf.matmul(G_h1, G_W2) + G_b2

G_prob = tf.nn.sigmoid(G_log_prob)

return G_prob

def D(X):

'''

定义两个网络

'''

D_h1 = tf.nn.relu(tf.matmul(X, D_W1) + D_b1)

X_recon = tf.matmul(D_h1, D_W2) + D_b2

return tf.reduce_mean(tf.reduce_sum((X - X_recon)**2, 1))

# 计算损失

G_sample = G(z)

D_real = D(X)

D_fake = D(G_sample)

D_loss = D_real - k*D_fake

G_loss = D_fake

D_solver=(tf.train.AdamOptimizer(learning_rate=lr).minimize(D_loss, var_list=theta_D))

G_solver=(tf.train.AdamOptimizer(learning_rate=lr).minimize(G_loss, var_list=theta_G))

sess = tf.Session()

sess.run(tf.global_variables_initializer())

# 迭代计算一百万次,每1000次绘制一张图片

num = 0

for it in range(1000000):

X_mb, _ = mnist.train.next_batch(mb_size)

_, D_real_curr = sess.run([D_solver, D_real],feed_dict={X: X_mb, z: sample_z(mb_size, z_dim), k: k_curr})

_, D_fake_curr = sess.run([G_solver, D_fake],feed_dict={X: X_mb, z: sample_z(mb_size, z_dim)})

k_curr = k_curr + lam * (gamma*D_real_curr - D_fake_curr)

if it % 1000 == 0:

measure = D_real_curr + np.abs(gamma*D_real_curr - D_fake_curr)

print('Iter-{}; Convergence measure: {:.4}'.format(it, measure))

samples = sess.run(G_sample, feed_dict={z: sample_z(16, z_dim)})

fig = plot(samples)

plt.savefig('result/{}.png'.format(str(num).zfill(3)), bbox_inches='tight')

num += 1

plt.close(fig)

这是一个很简单实用的例子,基于GAN来生成手写数字,关于各部分的代码作用,我在具体的代码里面已经加入了相应的注释,下面我们来简单看一下输出的结果:

。。。。。。。。。。。。。。。。。。

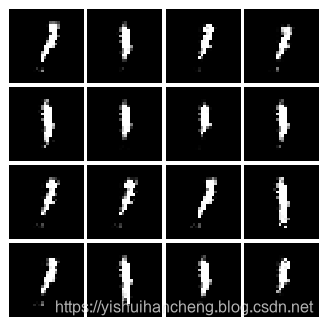

上面是展示了1000张图片的前100张,和后面将近100张左右的结果缩略图,这里给出来第一张和最后一张:

第一张:

最后一张:

之后找时间继续学习,欢迎交流!

来源:CSDN

作者:Together_CZ

链接:https://blog.csdn.net/Together_CZ/article/details/104009660