(Single-threaded) Neihan Duanzi joke crawler using regular expressions

The code is as follows:

```python
from urllib.request import Request, urlopen
import re
import time


class Spider(object):
    def __init__(self):
        self.__start_page = int(input("Enter the first page to crawl: "))
        self.__end_page = int(input("Enter the last page to crawl: "))

        # Pretend to be a browser by sending a browser User-Agent
        self.__header = {
            "User-Agent": "Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/70.0.3514.0 Safari/537.36"}

        # Running counter used to number the jokes across all pages
        self.num = 1

    def __load_page(self, start_page, end_page):
        """
        Download every page from start_page to end_page (inclusive).
        """
        print("Crawling....")
        for page in range(start_page, end_page + 1):
            # The first page has a different URL, so it is handled separately
            if page == 1:
                url = "https://www.neihanba.com/dz/index.html"
            else:
                # URL of the page to crawl
                url = "https://www.neihanba.com/dz/list_" + str(page) + ".html"

            # Send the request; the with-block closes the connection afterwards
            request = Request(url, headers=self.__header)
            with urlopen(request) as response:
                # Read the page's HTML source as a string (the site is GBK-encoded)
                html = response.read().decode("gbk")

            # Collect every matching block; re.findall returns a list.
            # re.S lets .*? also match jokes that span several lines.
            content_list = re.findall(r'<div class="f18 mb20">.*?</div>', html, re.S)

            # Call __deal_info() to strip the surrounding markup
            self.__deal_info(content_list, page)

    def __deal_info(self, content_list, page):
        """
        Strip the extra markup from each joke.
        """
        # Accumulates this page's text, starting with a page header
        content = " =============================Page %d=========================\n" % page

        for info in content_list:
            # Do not add spaces around |: they would become part of the pattern
            info = re.sub('(<div class="f18 mb20">)|(</div>)', "", info)

            content = content + " %d. " % self.num + info + "\n"
            self.num += 1

        # Call __write_page() to write the data
        self.__write_page(content)

    def __write_page(self, content):
        """
        Append this page's jokes to the output file.
        """
        with open("内涵段子.txt", "a", encoding="utf-8") as f:
            f.write(content)

    def run(self):
        """
        Drive the crawler and report the elapsed time.
        """
        start_time = time.time()

        # Start crawling
        self.__load_page(self.__start_page, self.__end_page)
        print("Crawl finished...")
        end_time = time.time()
        print('Elapsed: %.2f s.' % (end_time - start_time))


if __name__ == '__main__':
    spider = Spider()
    spider.run()
```
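The URL selection and the regex parsing can be checked offline, without hitting the site. Below is a minimal sketch, assuming the page markup wraps each joke in `<div class="f18 mb20">...</div>` exactly as the regex in the crawler expects. `page_url`, `extract_jokes` and the sample HTML are hypothetical names and data that only mirror the logic of `__load_page` and `__deal_info`. The sketch swaps the findall-then-`re.sub` pair for a single capturing group, which makes `re.findall` return just the inner text.

```python
import re

# Hypothetical helpers mirroring Spider's URL and parsing logic,
# written as pure functions so they can be tested without network access.

BASE = "https://www.neihanba.com/dz/"
JOKE_RE = re.compile(r'<div class="f18 mb20">(.*?)</div>', re.S)


def page_url(page):
    """Page 1 lives at index.html; later pages at list_<n>.html."""
    if page == 1:
        return BASE + "index.html"
    return BASE + "list_%d.html" % page


def extract_jokes(html):
    """Return the text inside each <div class="f18 mb20">...</div> block.

    With one capturing group, findall() returns only the group's text,
    so no separate re.sub() pass is needed to strip the tags.
    """
    return JOKE_RE.findall(html)


# Made-up sample markup, assumed to resemble the real page structure
sample = (
    '<div class="title">ignore me</div>'
    '<div class="f18 mb20">First joke</div>'
    '<div class="f18 mb20">Second\njoke</div>'
)

print(page_url(1))            # https://www.neihanba.com/dz/index.html
print(page_url(3))            # https://www.neihanba.com/dz/list_3.html
print(extract_jokes(sample))  # ['First joke', 'Second\njoke']
```

Keeping the parsing in a pure function like this makes it easy to adjust the pattern if the site's markup changes.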

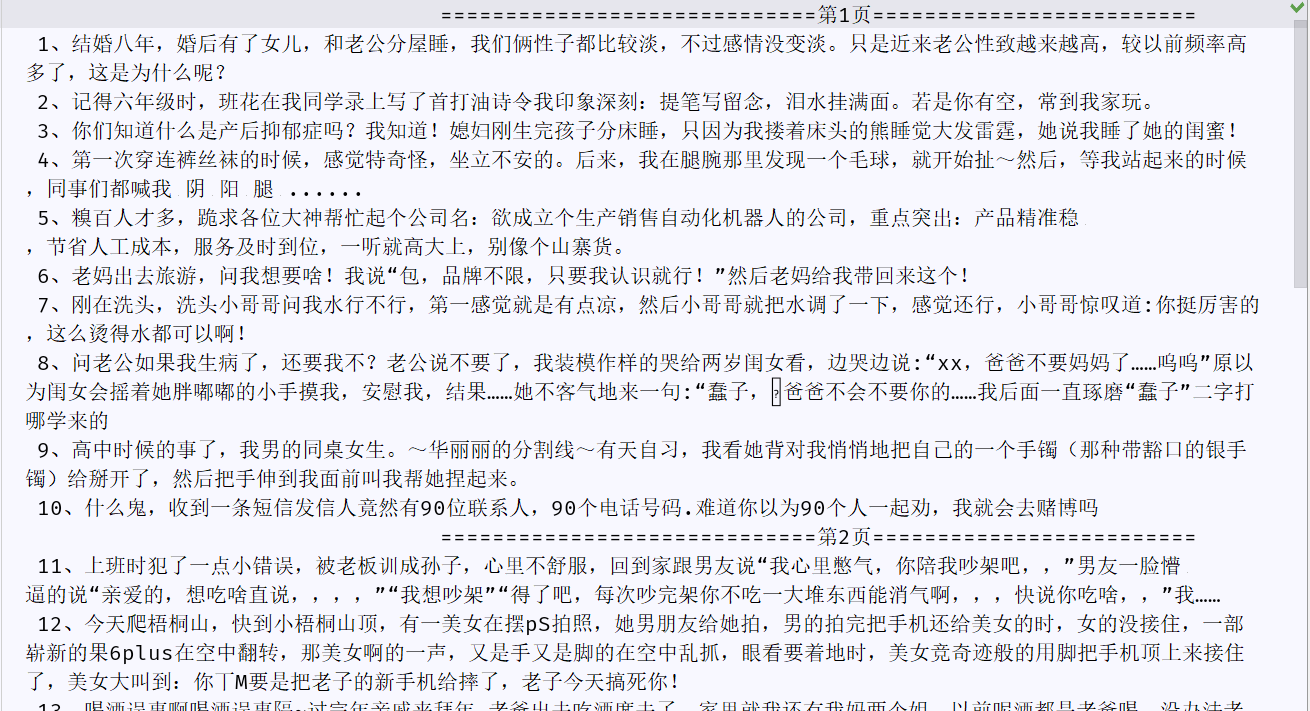

结果预览如下:
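The output file is built page by page, with one joke counter shared across all pages, so numbering does not restart on each page. A minimal sketch of that formatting step follows; `format_page` is a hypothetical pure-function version of `__deal_info` that returns the next number instead of storing it in `self.num`, and the joke strings are made up.

```python
def format_page(jokes, page, start_num):
    """Build one page's block of text the way Spider.__deal_info does.

    Returns the text plus the next joke number, so numbering continues
    across pages (the crawler keeps this counter in self.num).
    """
    lines = [" =============================Page %d=========================" % page]
    num = start_num
    for joke in jokes:
        lines.append(" %d. %s" % (num, joke))
        num += 1
    return "\n".join(lines) + "\n", num


text1, next_num = format_page(["First joke", "Second joke"], 1, 1)
text2, next_num = format_page(["Third joke"], 2, next_num)
print(text1 + text2, end="")
```

The second page starts at 3 because the first call hands back the next free number.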

If you share my interests, feel free to add me as a friend so we can exchange ideas!

Source: https://www.cnblogs.com/ywk-1994/p/9581130.html