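from keras.models import Sequential
from keras.layers import *  # Dense, Dropout, Flatten, Conv2D, MaxPooling2D, ...
from keras.utils import np_utils
from keras import backend as K
import numpy as np
from keras.datasets import mnist

# The models below take channels-first input (1, 28, 28), so set the image format here
# instead of relying only on the "image_data_format" entry in keras.json
K.set_image_data_format('channels_first')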

# Load the MNIST dataset
(x_train, y_train), (x_test, y_test) = mnist.load_data()

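# Preprocess the data into the format Keras expects
num_pixels = x_train.shape[1] * x_train.shape[2]  # 28 * 28 = 784
n_channels = 1  # number of image channels (grayscale)

def preprocess(matrix):
    # (N, 28, 28) -> (N, 1, 28, 28) in channels-first order, scaled to [0, 1]
    return matrix.reshape(matrix.shape[0], n_channels, matrix.shape[1], matrix.shape[2]).astype('float32') / 255

x_train, x_test = preprocess(x_train), preprocess(x_test)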
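Because `preprocess` puts the channel axis first, every model below declares `input_shape=(1, 28, 28)`; under Keras' default channels_last setting the same data would be laid out as (N, 28, 28, 1). The NumPy sketch below only illustrates the two layouts on random pixels standing in for MNIST; `preprocess_channels_last` is a hypothetical helper added for comparison, not part of the original script.

```python
import numpy as np

n_channels = 1

def preprocess(matrix):
    # (N, H, W) uint8 -> (N, 1, H, W) float32 in [0, 1]  (channels_first)
    return matrix.reshape(matrix.shape[0], n_channels, matrix.shape[1], matrix.shape[2]).astype('float32') / 255

def preprocess_channels_last(matrix):
    # Hypothetical comparison helper: (N, H, W) -> (N, H, W, 1)  (channels_last)
    return matrix.reshape(matrix.shape[0], matrix.shape[1], matrix.shape[2], n_channels).astype('float32') / 255

# Synthetic stand-in for MNIST: 3 random 28x28 grayscale images
rng = np.random.default_rng(0)
fake_images = rng.integers(0, 256, size=(3, 28, 28), dtype=np.uint8)

first = preprocess(fake_images)
last = preprocess_channels_last(fake_images)
print(first.shape, last.shape)  # (3, 1, 28, 28) (3, 28, 28, 1)

# Both layouts hold the same pixels; only the axis order differs
assert np.array_equal(first.transpose(0, 2, 3, 1), last)
```

A model built for one layout rejects arrays in the other, which is the kind of input_shape error described in the notes at the end.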

# Process the labels:
# np_utils.to_categorical() converts integer class labels to one-hot vectors
y_train = np_utils.to_categorical(y_train)
y_test = np_utils.to_categorical(y_test)
num_classes = y_train.shape[1]  # 10 for MNIST
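`to_categorical` turns each integer label into a row containing a single 1, and when no class count is given it uses the largest label plus one, which is why `num_classes` comes out as 10 for MNIST. A minimal NumPy equivalent, assuming that behavior (`one_hot` is a hypothetical name), looks like this:

```python
import numpy as np

def one_hot(labels, num_classes=None):
    # Integer labels -> one-hot rows, similar in spirit to np_utils.to_categorical
    labels = np.asarray(labels, dtype=int)
    if num_classes is None:
        num_classes = labels.max() + 1  # infer the class count from the largest label
    return np.eye(num_classes, dtype='float32')[labels]

y = np.array([5, 0, 4, 1, 9])
encoded = one_hot(y)
print(encoded.shape)           # (5, 10)
print(encoded.argmax(axis=1))  # [5 0 4 1 9]
```

`argmax` along each row recovers the original label, which is also how a predicted probability vector is turned back into a digit.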

# Build a Sequential model as the baseline
def baseline_model():
    model = Sequential()
    model.add(Flatten(input_shape=(1, 28, 28)))  # flatten each input image into a 784-element vector
    model.add(Dense(num_pixels, kernel_initializer='normal', activation='relu'))  # hidden layer with ReLU activation
    model.add(Dense(num_classes, kernel_initializer='normal', activation='softmax'))  # output layer: softmax over the classes
    # Compile: categorical cross-entropy as the loss, classification accuracy as the main metric
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model
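The baseline is a plain multilayer perceptron: flatten, one ReLU hidden layer with as many units as pixels, and a softmax output. The sketch below reproduces its forward pass in NumPy with random weights rather than trained ones (the 0.05 standard deviation assumes Keras 2's default for the 'normal' initializer) and counts its parameters; the helper names are hypothetical.

```python
import numpy as np

num_pixels, num_classes = 28 * 28, 10

def dense_params(n_in, n_out):
    # weight matrix (n_in x n_out) plus one bias per output unit
    return n_in * n_out + n_out

def relu(z):
    return np.maximum(z, 0.0)

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
# Random weights stand in for trained ones (assumed 'normal' init: mean 0, std 0.05)
W1 = rng.normal(0.0, 0.05, size=(num_pixels, num_pixels))
b1 = np.zeros(num_pixels)
W2 = rng.normal(0.0, 0.05, size=(num_pixels, num_classes))
b2 = np.zeros(num_classes)

x = rng.random((4, 1, 28, 28))             # a batch of 4 channels-first images
h = relu(x.reshape(len(x), -1) @ W1 + b1)  # Flatten + Dense(784, relu)
probs = softmax(h @ W2 + b2)               # Dense(10, softmax)

total = dense_params(num_pixels, num_pixels) + dense_params(num_pixels, num_classes)
print(probs.shape, total)  # (4, 10) 623290
```

Of the roughly 623k parameters, 615,440 sit in the 784x784 hidden layer.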

# To show the improvement, build a convolutional neural network (more complex than the baseline)
def convolution_small():
    model = Sequential()
    # 2D convolution: 32 filters with a 5x5 window slide over the image ('valid' padding),
    # producing 32 feature maps of 24x24
    model.add(Conv2D(32, (5, 5), padding='valid', input_shape=(1, 28, 28), activation='relu'))
    # Max pooling: keep the maximum of each 2x2 window, halving each spatial dimension
    model.add(MaxPooling2D(pool_size=(2, 2)))
    # Dropout: randomly set 20% of the units to 0 during training to reduce overfitting
    model.add(Dropout(0.2))
    model.add(Flatten())
    model.add(Dense(128, activation='relu'))
    model.add(Dense(num_classes, activation='softmax'))
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model
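The three layer types that distinguish `convolution_small` from the baseline can be sketched in NumPy as follows. This is a simplified illustration under stated assumptions: one input channel and one 5x5 filter (the real layer learns 32), stride 1, and inverted dropout, which rescales the surviving units during training so nothing needs to change at test time. The helper names are hypothetical.

```python
import numpy as np

def conv2d_valid(image, kernel):
    # Single-channel 'valid' cross-correlation, stride 1 (no kernel flip, as in Keras conv layers)
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool_2x2(fmap):
    # Keep the maximum of each non-overlapping 2x2 window (an odd last row/column is dropped)
    h, w = fmap.shape[0] // 2 * 2, fmap.shape[1] // 2 * 2
    f = fmap[:h, :w]
    return f.reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

def dropout(activations, rate, rng):
    # Training-time inverted dropout: zero each unit with probability `rate`
    # and scale the survivors by 1 / (1 - rate); at inference the layer does nothing
    keep = rng.random(activations.shape) >= rate
    return activations * keep / (1.0 - rate)

rng = np.random.default_rng(0)
image = rng.random((28, 28))
kernel = rng.normal(size=(5, 5))
fmap = np.maximum(conv2d_valid(image, kernel), 0.0)  # conv + ReLU: 28x28 -> 24x24
pooled = max_pool_2x2(fmap)                          # 24x24 -> 12x12
dropped = dropout(pooled, 0.2, rng)
print(fmap.shape, pooled.shape)  # (24, 24) (12, 12)
```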

# To show the power of neural networks, build a more complex network with two convolution + pooling stages
def convolution_large():
    model = Sequential()
    model.add(Conv2D(30, (5, 5), padding='valid', input_shape=(1, 28, 28), activation='relu'))  # 30 maps of 24x24
    model.add(MaxPooling2D(pool_size=(2, 2)))  # -> 12x12
    model.add(Conv2D(15, (3, 3), activation='relu'))  # 15 maps of 10x10
    model.add(MaxPooling2D(pool_size=(2, 2)))  # -> 5x5
    model.add(Dropout(0.2))
    model.add(Flatten())
    model.add(Dense(128, activation='relu'))
    model.add(Dense(50, activation='relu'))
    model.add(Dense(num_classes, activation='softmax'))
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
    return model
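The feature-map sizes follow from simple arithmetic: a 'valid' convolution with a kxk kernel shrinks each side by k-1, and 2x2 pooling halves it. The plain-Python sketch below (helper names are my own) applies this to both CNNs and also counts trainable parameters; pooling, dropout and flatten layers have none.

```python
def conv_out(size, k):
    # 'valid' convolution with stride 1
    return size - k + 1

def pool_out(size, p=2):
    # non-overlapping p x p pooling, remainder dropped
    return size // p

def conv_params(in_ch, out_ch, k):
    # one k x k x in_ch kernel plus one bias per filter
    return out_ch * (in_ch * k * k) + out_ch

def dense_params(n_in, n_out):
    return n_in * n_out + n_out

# convolution_small: Conv(32, 5x5) -> Pool -> Flatten -> Dense(128) -> Dense(10)
s = pool_out(conv_out(28, 5))        # 28 -> 24 -> 12
small_flat = 32 * s * s              # 4608
small = conv_params(1, 32, 5) + dense_params(small_flat, 128) + dense_params(128, 10)

# convolution_large: Conv(30, 5x5) -> Pool -> Conv(15, 3x3) -> Pool -> Dense(128) -> Dense(50) -> Dense(10)
s1 = pool_out(conv_out(28, 5))       # 28 -> 24 -> 12
s2 = pool_out(conv_out(s1, 3))       # 12 -> 10 -> 5
large_flat = 15 * s2 * s2            # 375
large = (conv_params(1, 30, 5) + conv_params(30, 15, 3)
         + dense_params(large_flat, 128) + dense_params(128, 50) + dense_params(50, 10))

baseline = dense_params(784, 784) + dense_params(784, 10)
print(baseline, small, large)  # 623290 592074 59933
```

By this count the large network has roughly a tenth of the parameters of the small one, because its second convolution + pooling stage shrinks the flattened vector from 4608 to 375 values before the 128-unit dense layer.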

# Train and test the models
np.random.seed(101)
models = [('baseline', baseline_model()), ('small', convolution_small()), ('large', convolution_large())]
for name, model in models:
    print('With name:', name)
    # Train: epochs = passes over the training set, batch_size = samples per gradient update
    model.fit(x_train, y_train, validation_data=(x_test, y_test), epochs=10, batch_size=100, verbose=2)
    # Evaluate on the test set; scores = [loss, accuracy]
    scores = model.evaluate(x_test, y_test, verbose=0)
    # Note: the label reads "Baseline Error" for every model
    print('Baseline Error:%.2f%%' % (100 - scores[1] * 100))
    print()
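With `batch_size=100`, each epoch over the 60,000 training images is 600 gradient updates, so `epochs=10` means 6,000 updates per model. `model.fit` shuffles the training data every epoch by default; the loop below is a rough sketch of that batching schedule only (no model is trained, and `iterate_minibatches` is a hypothetical helper).

```python
import numpy as np

def iterate_minibatches(n_samples, batch_size, rng):
    # One epoch: shuffle the sample indices, then yield consecutive slices of batch_size
    order = rng.permutation(n_samples)
    for start in range(0, n_samples, batch_size):
        yield order[start:start + batch_size]

rng = np.random.default_rng(101)
epochs, batch_size, n_train = 10, 100, 60000
updates = 0
for epoch in range(epochs):
    for _ in iterate_minibatches(n_train, batch_size, rng):
        updates += 1  # model.fit would perform one gradient step on this batch
print(updates // epochs, updates)  # 600 6000
```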

With name: baseline

Train on 60000 samples, validate on 10000 samples

Epoch 1/10 - 15s - loss: 0.2275 - accuracy: 0.9348 - val_loss: 0.1177 - val_accuracy: 0.9647

Epoch 2/10 - 15s - loss: 0.0884 - accuracy: 0.9738 - val_loss: 0.0770 - val_accuracy: 0.9751

Epoch 3/10 - 16s - loss: 0.0549 - accuracy: 0.9837 - val_loss: 0.0776 - val_accuracy: 0.9758

Epoch 4/10 - 15s - loss: 0.0362 - accuracy: 0.9891 - val_loss: 0.0643 - val_accuracy: 0.9786

Epoch 5/10 - 15s - loss: 0.0269 - accuracy: 0.9922 - val_loss: 0.0586 - val_accuracy: 0.9822

Epoch 6/10 - 15s - loss: 0.0195 - accuracy: 0.9941 - val_loss: 0.0764 - val_accuracy: 0.9766

Epoch 7/10 - 15s - loss: 0.0147 - accuracy: 0.9960 - val_loss: 0.0598 - val_accuracy: 0.9816

Epoch 8/10 - 15s - loss: 0.0101 - accuracy: 0.9974 - val_loss: 0.0608 - val_accuracy: 0.9824

Epoch 9/10 - 15s - loss: 0.0106 - accuracy: 0.9968 - val_loss: 0.0659 - val_accuracy: 0.9813

Epoch 10/10 - 15s - loss: 0.0108 - accuracy: 0.9967 - val_loss: 0.0606 - val_accuracy: 0.9836

Baseline Error:1.64%

With name: small

Train on 60000 samples, validate on 10000 samples

Epoch 1/10 - 76s - loss: 0.1826 - accuracy: 0.9468 - val_loss: 0.0601 - val_accuracy: 0.9813

Epoch 2/10 - 76s - loss: 0.0617 - accuracy: 0.9807 - val_loss: 0.0422 - val_accuracy: 0.9858

Epoch 3/10 - 76s - loss: 0.0428 - accuracy: 0.9864 - val_loss: 0.0459 - val_accuracy: 0.9858

Epoch 4/10 - 77s - loss: 0.0344 - accuracy: 0.9889 - val_loss: 0.0411 - val_accuracy: 0.9873

Epoch 5/10 - 76s - loss: 0.0273 - accuracy: 0.9911 - val_loss: 0.0302 - val_accuracy: 0.9903

Epoch 6/10 - 76s - loss: 0.0204 - accuracy: 0.9937 - val_loss: 0.0334 - val_accuracy: 0.9891

Epoch 7/10 - 75s - loss: 0.0179 - accuracy: 0.9938 - val_loss: 0.0344 - val_accuracy: 0.9898

Epoch 8/10 - 76s - loss: 0.0159 - accuracy: 0.9947 - val_loss: 0.0343 - val_accuracy: 0.9886

Epoch 9/10 - 76s - loss: 0.0117 - accuracy: 0.9961 - val_loss: 0.0372 - val_accuracy: 0.9882

Epoch 10/10 - 75s - loss: 0.0100 - accuracy: 0.9968 - val_loss: 0.0350 - val_accuracy: 0.9892

Baseline Error:1.08%

With name: large

Train on 60000 samples, validate on 10000 samples

Epoch 1/10 - 75s - loss: 0.2747 - accuracy: 0.9141 - val_loss: 0.0682 - val_accuracy: 0.9771

Epoch 2/10 - 78s - loss: 0.0784 - accuracy: 0.9758 - val_loss: 0.0439 - val_accuracy: 0.9844

Epoch 3/10 - 77s - loss: 0.0592 - accuracy: 0.9815 - val_loss: 0.0377 - val_accuracy: 0.9880

Epoch 4/10 - 79s - loss: 0.0479 - accuracy: 0.9847 - val_loss: 0.0298 - val_accuracy: 0.9910

Epoch 5/10 - 76s - loss: 0.0410 - accuracy: 0.9862 - val_loss: 0.0264 - val_accuracy: 0.9914

Epoch 6/10 - 75s - loss: 0.0379 - accuracy: 0.9875 - val_loss: 0.0271 - val_accuracy: 0.9912

Epoch 7/10 - 76s - loss: 0.0304 - accuracy: 0.9906 - val_loss: 0.0278 - val_accuracy: 0.9913

Epoch 8/10 - 75s - loss: 0.0288 - accuracy: 0.9906 - val_loss: 0.0242 - val_accuracy: 0.9921

Epoch 9/10 - 76s - loss: 0.0263 - accuracy: 0.9914 - val_loss: 0.0260 - val_accuracy: 0.9924

Epoch 10/10 - 78s - loss: 0.0252 - accuracy: 0.9919 - val_loss: 0.0251 - val_accuracy: 0.9927

Baseline Error:0.73%
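Because `validation_data` is the test set, the printed error is simply 100% minus the last epoch's val_accuracy. The sketch below recomputes it from the val_accuracy values copied from the logs above and also finds the epoch with the highest val_accuracy. In this run the small CNN peaked at epoch 5 (0.9903) and ended slightly lower, while the other two models were still improving at epoch 10.

```python
# val_accuracy per epoch, copied from the training logs above
val_acc = {
    'baseline': [0.9647, 0.9751, 0.9758, 0.9786, 0.9822, 0.9766, 0.9816, 0.9824, 0.9813, 0.9836],
    'small':    [0.9813, 0.9858, 0.9858, 0.9873, 0.9903, 0.9891, 0.9898, 0.9886, 0.9882, 0.9892],
    'large':    [0.9771, 0.9844, 0.9880, 0.9910, 0.9914, 0.9912, 0.9913, 0.9921, 0.9924, 0.9927],
}

summary = {}
for name, accs in val_acc.items():
    final_error = '%.2f%%' % (100 - accs[-1] * 100)  # same formula as the script's print
    best_epoch = max(range(len(accs)), key=lambda i: accs[i]) + 1  # epochs are 1-based
    summary[name] = (final_error, best_epoch)
    print(name, final_error, 'best epoch:', best_epoch)
```

The recomputed errors (1.64%, 1.08%, 0.73%) match the "Baseline Error" lines printed by the script.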

Notes:

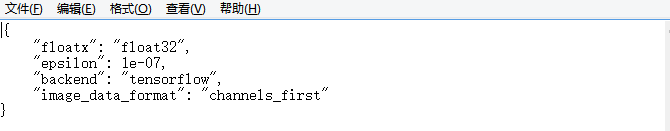

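The book uses the Theano backend, but I ran into an input error with input_shape that I could not fix after a long time, so I switched back to the TensorFlow backend. I then changed "image_data_format": "channels_last" to "image_data_format": "channels_first" in the Keras configuration file (keras.json) on drive C (see the screenshot), so that it matches the channels-first input_shape used in the program, and the problem was solved.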
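Keras reads keras.json when it is imported, typically from `~/.keras/keras.json` (on Windows, `C:\Users\<user>\.keras\keras.json`), so Python has to be restarted after the file is edited. The sketch below does the same edit programmatically; `set_keras_json_image_format` is a hypothetical helper, the starting contents are assumed to be the usual Keras 2 defaults, and the demo writes to a temporary file rather than the real config. Alternatively, `keras.backend.set_image_data_format('channels_first')` changes the setting for the current process only, without touching the file.

```python
import json
import os
import tempfile

def set_keras_json_image_format(config_path, fmt='channels_first'):
    # Hypothetical helper: rewrite the "image_data_format" entry of a keras.json file
    with open(config_path, 'r', encoding='utf-8') as f:
        config = json.load(f)
    config['image_data_format'] = fmt
    with open(config_path, 'w', encoding='utf-8') as f:
        json.dump(config, f, indent=4)
    return config

# Assumed default contents; the demo works on a temporary copy, not the real ~/.keras/keras.json
default_config = {"floatx": "float32", "epsilon": 1e-07,
                  "backend": "tensorflow", "image_data_format": "channels_last"}
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'keras.json')
    with open(path, 'w', encoding='utf-8') as f:
        json.dump(default_config, f)
    updated = set_keras_json_image_format(path)
    with open(path, encoding='utf-8') as f:
        reloaded = json.load(f)  # read back what was written to disk
print(reloaded['image_data_format'])  # channels_first
```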

Source: CSDN

Author: Monica_Zzz

Link: https://blog.csdn.net/weixin_44819497/article/details/103688282