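The article "Development and Implementation of a Credit Standard Scorecard Model" implements the standard scorecard modelling workflow in R, and it is very good. It made me wonder whether the same development process could be reproduced in Python. After some tinkering, I got it working in Python with similar code and packages. Because the Python and R functions differ, and because the samples are drawn differently, the results here differ somewhat from those in that article. This is expected and normal. The rest of this post walks through the modelling workflow and the code.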

    ##### Packages used in the code #####
    import math

    import numpy as np
    import pandas as pd
    import matplotlib.pyplot as plt
    from scipy import stats  # scipy.stats.stats is a deprecated private path
    from sklearn.utils import shuffle
    from sklearn.feature_selection import RFE, f_regression
    from sklearn.linear_model import LogisticRegression

2. Dataset preparation

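The data set is the German credit data from the UCI Machine Learning Repository. It ships with the R package "klaR" as GermanCredit, so I loaded it in R, exported it to CSV, and then read the CSV into Python.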

    ############## R #################
    library(klaR)
    data(GermanCredit, package="klaR")
    write.csv(GermanCredit, "/filePath/GermanCredit.csv")

    >>> df_raw = pd.read_csv('/filePath/GermanCredit.csv')
    >>> df_raw.dtypes
    Unnamed: 0                  int64
    status                     object
    duration                    int64
    credit_history             object
    purpose                    object
    amount                      int64
    savings                    object
    employment_duration        object
    installment_rate            int64
    personal_status_sex        object
    other_debtors              object
    present_residence           int64
    property                   object
    age                         int64
    other_installment_plans    object
    housing                    object
    number_credits              int64
    job                        object
    people_liable               int64
    telephone                  object
    foreign_worker             object
    credit_risk                object

    # Split the sample into a training set and a test set
    def split_data(data, ratio=0.7, seed=None):
        if seed is not None:  # "if seed:" would wrongly ignore seed=0
            shuffle_data = shuffle(data, random_state=seed)
        else:
            shuffle_data = shuffle(data, random_state=np.random.randint(10000))
        n_train = int(ratio * len(shuffle_data))
        train = shuffle_data.iloc[:n_train]
        test = shuffle_data.iloc[n_train:]
        return train, test

    # Fixing the seed keeps the split identical across runs
    df_train, df_test = split_data(df_raw, ratio=0.7, seed=666)

    # Encode defaulting ("bad") samples as 1 and normal ("good") samples as 0
    credit_risk = [0 if x == 'good' else 1 for x in df_train['credit_risk']]
    # credit_risk = np.where(df_train['credit_risk'] == 'good', 0, 1)
    # Work on a copy to avoid assigning into a slice of the shuffled frame;
    # df_train itself keeps the original string labels
    data = df_train.copy()
    data['credit_risk'] = credit_risk

3. Quantitative indicator screening

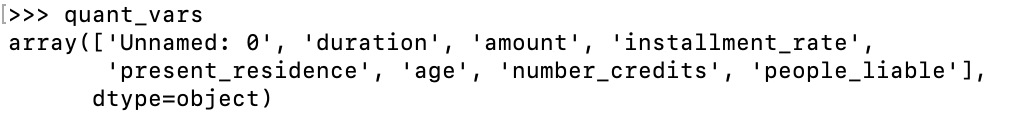

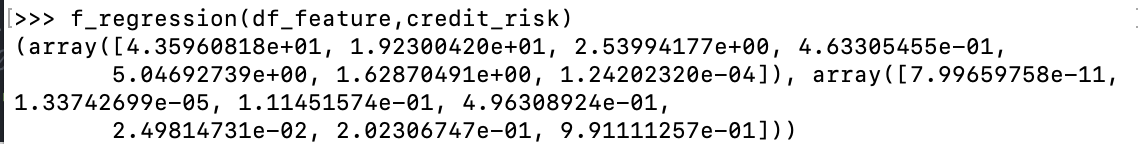
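As a small illustration of p-value based screening with `f_regression`, here is a minimal sketch on synthetic data. The column names `signal` and `noise`, the sample size, and the random data are all made up for this example and are not taken from the German credit set. Note that `f_regression` runs one univariate F-test per column, so it is a simpler stand-in rather than a true stepwise regression.

```python
import numpy as np
import pandas as pd
from sklearn.feature_selection import f_regression

# Synthetic data (hypothetical): 'signal' drives the target, 'noise' does not.
rng = np.random.default_rng(0)
n = 500
X = pd.DataFrame({
    "signal": rng.normal(size=n),
    "noise": rng.normal(size=n),
})
# Binary target that depends on 'signal' only.
y = (X["signal"] + 0.5 * rng.normal(size=n) > 0).astype(int)

# f_regression returns two arrays: F statistics and p-values, one per column.
f_stat, p_values = f_regression(X, y)
screen = pd.DataFrame({"F": f_stat, "p_value": p_values}, index=X.columns)

# Keep the columns whose p-value is at most 0.1, the threshold used in this post.
selected = screen.index[screen["p_value"] <= 0.1].tolist()
print(screen)
print(selected)
```

Reading the p-values into a labelled frame like this makes it easier to see which variable each value belongs to than inspecting the raw tuple that `f_regression` returns.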

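    # Get the quantitative (integer-typed) indicators
    quant_index = np.where(data.dtypes == 'int64')
    quant_vars = np.array(data.columns)[quant_index]
    quant_vars = np.delete(quant_vars, 0)  # drop the leading 'Unnamed: 0' column
    df_feature = pd.DataFrame(data, columns=['duration', 'amount', 'installment_rate',
                                             'present_residence', 'age',
                                             'number_credits', 'people_liable'])
    # Returns (F statistics, p-values), one value per column, from univariate F-tests
    f_regression(df_feature, credit_risk)
    # Variables kept after the stepwise regression: those with p-value <= 0.1
    quant_model_vars = ["duration", "amount", "age", "installment_rate"]

4. Qualitative indicator screening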

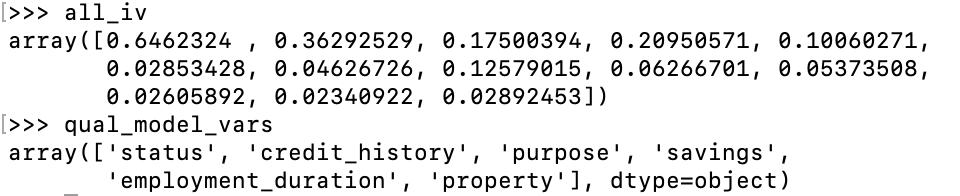

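    def woe(bad, good):
        # WOE per category: log of (share of bad samples / share of good samples)
        return np.log((bad / bad.sum()) / (good / good.sum()))

    # factor_vars is not defined in the original listing; it is assumed here
    # to be the object-typed (categorical) columns of data
    factor_vars = np.array(data.columns)[np.where(data.dtypes == 'object')]

    all_iv = np.empty(len(factor_vars))
    woe_dict = dict()  # stored for later use
    i = 0
    for var in factor_vars:
        # Per category: 'sum' is the number of bad samples, 'count' the total
        data_group = data.groupby(var)['credit_risk'].agg(['sum', 'count'])
        bad = data_group['sum']
        good = data_group['count'] - bad
        woe_dict[var] = woe(bad, good)
        iv = ((bad / bad.sum() - good / good.sum()) * woe(bad, good)).sum()
        all_iv[i] = iv
        i = i + 1
    # Keep the qualitative variables whose IV is above 0.1
    high_index = np.where(all_iv > 0.1)
    qual_model_vars = factor_vars[high_index]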
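To make the WOE and IV arithmetic concrete, the sketch below works through a tiny hand-made table. The ten rows and the two `housing` categories are invented for illustration only. It follows the same convention as the code above, WOE = ln(bad share / good share), so a positive WOE marks a category with a higher than average share of bad samples.

```python
import numpy as np
import pandas as pd

# Toy data (hypothetical): 6 'own' rows with 1 bad, 4 'rent' rows with 3 bad.
toy = pd.DataFrame({
    "housing": ["own"] * 6 + ["rent"] * 4,
    "credit_risk": [0, 0, 0, 0, 0, 1, 0, 1, 1, 1],
})

# Per category: number of bad samples and total number of samples.
counts = toy.groupby("housing")["credit_risk"].agg(["sum", "count"])
bad = counts["sum"]
good = counts["count"] - bad

# Share of all bad (resp. good) samples that falls into each category.
bad_share = bad / bad.sum()
good_share = good / good.sum()

# WOE per category, and IV as the share-difference-weighted sum of WOE.
woe_values = np.log(bad_share / good_share)
iv = ((bad_share - good_share) * woe_values).sum()

print(woe_values)
print(iv)
```

Here `own` holds 1 of the 4 bad samples and 5 of the 6 good ones, so its WOE is ln(0.25 / 0.8333) = ln(0.3), which is negative. `rent` gets ln(0.75 / 0.1667) = ln(4.5), which is positive. A category with zero bad or zero good samples would produce an infinite WOE, so sparse categories may need to be merged before applying the formula.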

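This completes the dataset preparation and the indicator screening. The next step is to build the logistic regression model, which is covered in the second part of this article.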

Source: Credit Scorecard Model in Practice with Python (Part 1)