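Environment information

Fully distributed cluster (Part 1): basic cluster environment and zookeeper-3.4.10 installation and deployment

Hadoop cluster installation and configuration process

A Hadoop cluster must be deployed before installing Hive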

HBase cluster installation and deployment

Download hbase-1.2.6.1-bin.tar.gz, upload it to the server with an FTP tool, and extract it:

[root@node222 ~]# ls /home/hadoop/hbase-1.2.6.1-bin.tar.gz

/home/hadoop/hbase-1.2.6.1-bin.tar.gz

[root@node222 ~]# gtar -zxf /home/hadoop/hbase-1.2.6.1-bin.tar.gz -C /usr/local/

[root@node222 ~]# ls /usr/local/hbase-1.2.6.1/

bin CHANGES.txt conf docs hbase-webapps LEGAL lib LICENSE.txt NOTICE.txt README.txt

Configure HBase

1. Configure hbase-env.sh (/usr/local/hbase-1.2.6.1/conf/hbase-env.sh)

# Uncomment the relevant variables (remove the leading "#") and set JAVA_HOME to match the server's environment

export JAVA_HOME=/usr/local/jdk1.8.0_66

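# Disable HBase's built-in ZooKeeper; the separately installed ZooKeeper ensemble is used instead

export HBASE_MANAGES_ZK=false

2. Create the HBase temporary directory

[root@node222 ~]# mkdir -p /usr/local/hbase-1.2.6.1/hbaseData

3. Configure hbase-site.xml, using Hadoop's core-site.xml and ZooKeeper's zoo.cfg as references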

<configuration>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://ns1/user/hbase/hbase_db</value>
    <!-- HBase data directory on HDFS. Use the same HDFS nameservice as fs.defaultFS in core-site.xml (here ns1), not a single NameNode host:port -->
  </property>
  <property>
    <name>hbase.tmp.dir</name>
    <value>/usr/local/hbase-1.2.6.1/hbaseData</value>
    <!-- local temporary directory created in step 2 -->
  </property>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
    <!-- run in fully distributed mode -->
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>node222:2181,node224:2181,node225:2181</value>
    <!-- host:port of each node in the ZooKeeper ensemble -->
  </property>
  <property>
    <name>hbase.zookeeper.property.dataDir</name>
    <value>/usr/local/zookeeper-3.4.10/zkdata</value>
    <!-- storage location for ZooKeeper data and logs; take it from dataDir in zoo.cfg -->
  </property>
</configuration>
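Because hbase.rootdir points at the nameservice ns1 rather than at a single host, HBase can only resolve it when the HA settings from hdfs-site.xml are visible to it. The Python sketch below is a hypothetical pre-flight check, not part of HBase: it parses the two XML files and reports whether the authority of hbase.rootdir is listed in dfs.nameservices. It assumes your hdfs-site.xml declares the nameservice in dfs.nameservices, which the article does not show.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse


def load_props(xml_text):
    """Parse a Hadoop/HBase <configuration> document into a {name: value} dict.
    XML comments inside <property> are dropped by ElementTree's default parser."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        if name:
            props[name.strip()] = (prop.findtext("value") or "").strip()
    return props


def check_rootdir_nameservice(hbase_site_xml, hdfs_site_xml):
    """Return (ok, authority). ok is True when the authority part of
    hbase.rootdir (e.g. "ns1" in hdfs://ns1/...) is one of the names
    listed in dfs.nameservices in hdfs-site.xml."""
    rootdir = load_props(hbase_site_xml)["hbase.rootdir"]
    authority = urlparse(rootdir).netloc
    listed = load_props(hdfs_site_xml).get("dfs.nameservices", "")
    nameservices = {ns.strip() for ns in listed.split(",") if ns.strip()}
    return authority in nameservices, authority
```

For example, pass it the text of /usr/local/hbase-1.2.6.1/conf/hbase-site.xml and of the hdfs-site.xml copied into the same directory. A False result corresponds to the `UnknownHostException: ns1` failure described next.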

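After completing the configuration above, because this is a cluster deployment with HDFS HA, copy the Hadoop cluster's core-site.xml and hdfs-site.xml into the HBase conf directory (/usr/local/hbase-1.2.6.1/conf) so that HBase can resolve the HDFS nameservice correctly. Otherwise, after startup the master comes up normally but the HRegionServers do not, and the following error is logged: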

2018-10-16 11:40:46,252 ERROR [main] regionserver.HRegionServerCommandLine: Region server exiting
java.lang.RuntimeException: Failed construction of Regionserver: class org.apache.hadoop.hbase.regionserver.HRegionServer
	at org.apache.hadoop.hbase.regionserver.HRegionServer.constructRegionServer(HRegionServer.java:2682)
	at org.apache.hadoop.hbase.regionserver.HRegionServerCommandLine.start(HRegionServerCommandLine.java:64)
	at org.apache.hadoop.hbase.regionserver.HRegionServerCommandLine.run(HRegionServerCommandLine.java:87)
	at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)
	at org.apache.hadoop.hbase.util.ServerCommandLine.doMain(ServerCommandLine.java:126)
	at org.apache.hadoop.hbase.regionserver.HRegionServer.main(HRegionServer.java:2697)
Caused by: java.lang.reflect.InvocationTargetException
	at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)
	at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)
	at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)
	at java.lang.reflect.Constructor.newInstance(Constructor.java:422)
	at org.apache.hadoop.hbase.regionserver.HRegionServer.constructRegionServer(HRegionServer.java:2680)
	... 5 more
Caused by: java.lang.IllegalArgumentException: java.net.UnknownHostException: ns1
	at org.apache.hadoop.security.SecurityUtil.buildTokenService(SecurityUtil.java:373)
	at org.apache.hadoop.hdfs.NameNodeProxies.createNonHAProxy(NameNodeProxies.java:258)
	at org.apache.hadoop.hdfs.NameNodeProxies.createProxy(NameNodeProxies.java:153)
	at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:602)
	at org.apache.hadoop.hdfs.DFSClient.<init>(DFSClient.java:547)
	at org.apache.hadoop.hdfs.DistributedFileSystem.initialize(DistributedFileSystem.java:139)
	at org.apache.hadoop.fs.FileSystem.createFileSystem(FileSystem.java:2591)
	at org.apache.hadoop.fs.FileSystem.access$200(FileSystem.java:89)
	at org.apache.hadoop.fs.FileSystem$Cache.getInternal(FileSystem.java:2625)
	at org.apache.hadoop.fs.FileSystem$Cache.get(FileSystem.java:2607)
	at org.apache.hadoop.fs.FileSystem.get(FileSystem.java:368)
	at org.apache.hadoop.fs.Path.getFileSystem(Path.java:296)
	at org.apache.hadoop.hbase.util.FSUtils.getRootDir(FSUtils.java:1003)
	at org.apache.hadoop.hbase.regionserver.HRegionServer.initializeFileSystem(HRegionServer.java:609)
	at org.apache.hadoop.hbase.regionserver.HRegionServer.<init>(HRegionServer.java:564)
	... 10 more
Caused by: java.net.UnknownHostException: ns1
	... 25 more

Configure regionservers: list the hostnames of the nodes that will run HRegionServer

# In this deployment, node224 and node225 act as HRegionServer nodes

[root@node222 ~]# vi /usr/local/hbase-1.2.6.1/conf/regionservers

node224

node225

Configure the backup master node

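There are two ways to configure a backup master. The first is to skip the backup-masters file during configuration and, once the cluster is set up, start a backup master directly on a node with local-master-backup.sh:

# No backup-masters file is needed; start the backup master directly on the node. The number after "start" becomes the last digit of its web UI port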

[hadoop@node225 ~]$ /usr/local/hbase-1.2.6.1/bin/local-master-backup.sh start 1

starting master, logging to /usr/local/hbase-1.2.6.1/logs/hbase-hadoop-1-master-node225.out

[hadoop@node225 ~]$ jps

2883 JournalNode

2980 NodeManager

2792 DataNode

88175 HMaster

88239 Jps

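87966 HRegionServer

To manage multiple masters in one place, this deployment instead adds a backup-masters file under $HBASE_HOME/conf (the file does not exist by default) that lists the servers to act as backup masters: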

[root@node222 ~]# vi /usr/local/hbase-1.2.6.1/conf/backup-masters

node225

After the configuration above is complete, copy the HBase directory to the other two nodes:

[root@node222 ~]# scp -r /usr/local/hbase-1.2.6.1 root@node224:/usr/local

[root@node222 ~]# scp -r /usr/local/hbase-1.2.6.1 root@node225:/usr/local

Configure the environment variables (on all three nodes):

vi /etc/profile

# Add the following lines

export HBASE_HOME=/usr/local/hbase-1.2.6.1

export PATH=$HBASE_HOME/bin:$PATH

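# Apply the changes

source /etc/profile

Change the owner of the HBase directory to the hadoop user, because passwordless SSH for the Hadoop cluster is configured for that user (on all three nodes):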

[root@node222 ~]# chown -R hadoop:hadoop /usr/local/hbase-1.2.6.1

[root@node224 ~]# chown -R hadoop:hadoop /usr/local/hbase-1.2.6.1

[root@node225 ~]# chown -R hadoop:hadoop /usr/local/hbase-1.2.6.1

Start HBase on the master node, then check the HBase processes on each node:

[hadoop@node222 ~]$ /usr/local/hbase-1.2.6.1/bin/start-hbase.sh

starting master, logging to /usr/local/hbase-1.2.6.1/logs/hbase-hadoop-master-node222.out

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

node224: starting regionserver, logging to /usr/local/hbase-1.2.6.1/bin/../logs/hbase-hadoop-regionserver-node224.out

node225: starting regionserver, logging to /usr/local/hbase-1.2.6.1/bin/../logs/hbase-hadoop-regionserver-node225.out

node224: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

node224: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

node225: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

node225: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

node225: starting master, logging to /usr/local/hbase-1.2.6.1/bin/../logs/hbase-hadoop-master-node225.out

node225: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

node225: Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

[hadoop@node222 ~]$ jps

4037 NameNode

4597 NodeManager

4327 JournalNode

129974 HMaster

4936 DFSZKFailoverController

4140 DataNode

4495 ResourceManager

130206 Jps

[hadoop@node224 ~]$ jps

3555 JournalNode

3683 NodeManager

3907 DFSZKFailoverController

116853 HRegionServer

3464 DataNode

45724 ResourceManager

3391 NameNode

117006 Jps

[hadoop@node225 ~]$ jps

88449 HRegionServer

88787 Jps

2883 JournalNode

2980 NodeManager

88516 HMaster

2792 DataNode

Check the current cluster status with hbase shell. The expected result is 1 active master, 1 backup master and 2 region servers:

[hadoop@node222 ~]$ /usr/local/hbase-1.2.6.1/bin/hbase shell

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hbase-1.2.6.1/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

HBase Shell; enter 'help<RETURN>' for list of supported commands.

Type "exit<RETURN>" to leave the HBase Shell

Version 1.2.6.1, rUnknown, Sun Jun 3 23:19:26 CDT 2018

hbase(main):001:0> status

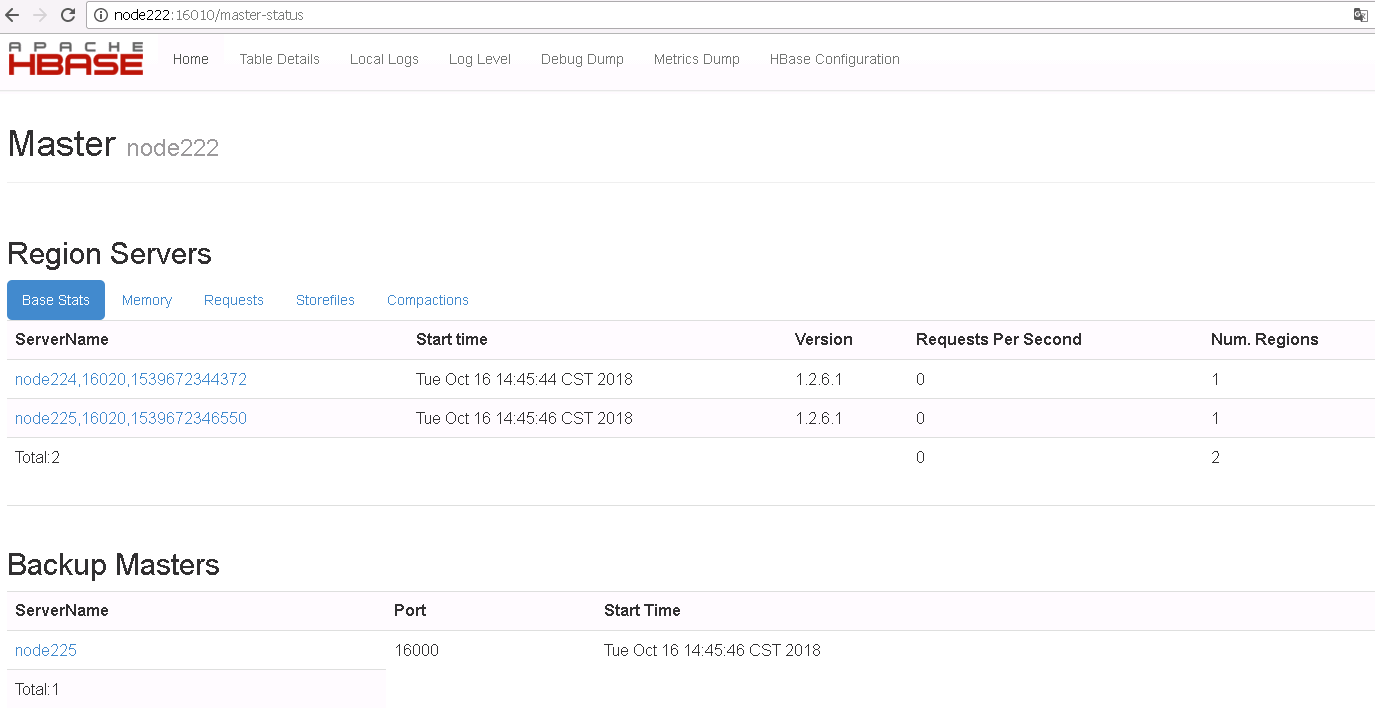

1 active master, 1 backup masters, 2 servers, 0 dead, 1.0000 average load

The cluster status can also be checked in the web UI.
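The jps listings and the status summary above can also be checked from a script when the cluster is restarted often. The Python sketch below is a hypothetical helper: the EXPECTED table encodes this article's layout (node222 active master, node224 region server, node225 region server plus backup master), and the regular expression assumes the summary line has the same shape as the HBase 1.2.6.1 output shown above.

```python
import re

# Daemons each node should run in this article's layout.
EXPECTED = {
    "node222": {"HMaster"},
    "node224": {"HRegionServer"},
    "node225": {"HMaster", "HRegionServer"},
}

# Matches e.g. "1 active master, 1 backup masters, 2 servers, 0 dead, 1.0000 average load".
STATUS_RE = re.compile(
    r"(\d+) active masters?, (\d+) backup masters?, "
    r"(\d+) servers?, (\d+) dead, ([\d.]+) average load"
)


def jps_names(jps_output):
    """Collect process names from `jps` output lines such as '88516 HMaster'."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) == 2 and parts[0].isdigit():
            names.add(parts[1])
    return names


def missing_daemons(host, jps_output):
    """Return the expected HBase daemons that are absent on `host`, sorted."""
    return sorted(EXPECTED[host] - jps_names(jps_output))


def parse_status(line):
    """Parse the one-line summary printed by `status` in hbase shell."""
    m = STATUS_RE.search(line)
    if m is None:
        raise ValueError("not an HBase status summary: %r" % line)
    active, backup, servers, dead = (int(g) for g in m.groups()[:4])
    return {"active": active, "backup": backup, "servers": servers,
            "dead": dead, "load": float(m.group(5))}
```

Feeding it the node225 listing above gives no missing daemons, and the summary line parses to 1 active master, 1 backup master, 2 servers and 0 dead.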

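Problems you may run into when configuring the cluster

Many guides online set hbase.rootdir in hbase-site.xml to the hostname of the master (NameNode) node. That normally works, but after HDFS fails over between the active and standby NameNodes, HBase starts with HRegionServer running normally while HMaster fails to start, logging the error below. The fix is to set hbase.rootdir to the HDFS nameservice, as in the configuration described in this article.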

activeMasterManager] master.HMaster: Failed to become active master
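This failure appears when hbase.rootdir names one NameNode (for example hdfs://node222:port/...) instead of the nameservice: after a failover that host may be the standby, and HMaster fails to become active. A hypothetical Python check for that mistake is sketched below. It reads the standard HDFS HA keys dfs.nameservices, dfs.ha.namenodes.<nameservice> and dfs.namenode.rpc-address.<nameservice>.<id> from hdfs-site.xml; the NameNode IDs nn1/nn2 and port 9000 used in the example are assumptions, since the article does not show its hdfs-site.xml.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse


def load_props(xml_text):
    """Parse a Hadoop <configuration> document into a {name: value} dict."""
    root = ET.fromstring(xml_text)
    return {
        p.findtext("name").strip(): (p.findtext("value") or "").strip()
        for p in root.findall("property")
        if p.findtext("name")
    }


def split_list(value):
    """Split a comma-separated Hadoop list value, dropping empty entries."""
    return [item.strip() for item in value.split(",") if item.strip()]


def pinned_namenode(rootdir, hdfs_site_xml):
    """Return the HA NameNode ID (e.g. "nn1") whose RPC address hbase.rootdir
    points at directly, or None if rootdir does not name a single NameNode."""
    url = urlparse(rootdir)
    props = load_props(hdfs_site_xml)
    for ns in split_list(props.get("dfs.nameservices", "")):
        for nn in split_list(props.get("dfs.ha.namenodes." + ns, "")):
            addr = props.get("dfs.namenode.rpc-address.%s.%s" % (ns, nn), "")
            host, _, port = addr.partition(":")
            # A rootdir without an explicit port is matched on hostname alone.
            if url.hostname == host.lower() and (url.port is None or str(url.port) == port):
                return nn
    return None
```

A non-None result means hbase.rootdir is tied to one NameNode and should be changed to the nameservice form (hdfs://ns1/...).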

SLF4J: Class path contains multiple SLF4J bindings.

This is the multiple-SLF4J-bindings problem. Here HBase's copy of the binding jar is disabled and Hadoop's is kept:

[hadoop@node222 ~]$ /usr/local/hbase-1.2.6.1/bin/hbase shell

SLF4J: Class path contains multiple SLF4J bindings.

SLF4J: Found binding in [jar:file:/usr/local/hbase-1.2.6.1/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: Found binding in [jar:file:/usr/local/hadoop-2.6.5/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar!/org/slf4j/impl/StaticLoggerBinder.class]

SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.

SLF4J: Actual binding is of type [org.slf4j.impl.Log4jLoggerFactory]

HBase Shell; enter 'help<RETURN>' for list of supported commands.

Type "exit<RETURN>" to leave the HBase Shell

Version 1.2.6.1, rUnknown, Sun Jun 3 23:19:26 CDT 2018

hbase(main):001:0> exit

# Disable HBase's own copy of the binding jar

[hadoop@node222 ~]$ mv /usr/local/hbase-1.2.6.1/lib/slf4j-log4j12-1.7.5.jar /usr/local/hbase-1.2.6.1/lib/slf4j-log4j12-1.7.5.jar.bak

[hadoop@node222 ~]$ /usr/local/hbase-1.2.6.1/bin/hbase shell

HBase Shell; enter 'help<RETURN>' for list of supported commands.

Type "exit<RETURN>" to leave the HBase Shell

Version 1.2.6.1, rUnknown, Sun Jun 3 23:19:26 CDT 2018
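The same fix can be scripted if it has to be repeated on every node. The Python sketch below is a hypothetical helper, not an HBase tool: it looks for slf4j-log4j12-*.jar in both lib directories and, only when Hadoop ships one, renames HBase's copy to *.jar.bak just like the mv command above. It assumes the duplicate is the log4j12 binding named in the warning; other SLF4J bindings are not checked.

```python
import os

BINDING_PREFIX = "slf4j-log4j12-"


def find_bindings(lib_dir):
    """List the SLF4J log4j12 binding jars directly inside lib_dir, sorted."""
    return sorted(
        name for name in os.listdir(lib_dir)
        if name.startswith(BINDING_PREFIX) and name.endswith(".jar")
    )


def disable_duplicate_binding(hbase_lib, hadoop_lib):
    """If Hadoop's lib already has a binding, rename every binding jar in
    HBase's lib to <name>.bak and return the new paths; otherwise change nothing."""
    if not find_bindings(hadoop_lib):
        return []  # Hadoop provides no binding, so keep HBase's copy.
    renamed = []
    for name in find_bindings(hbase_lib):
        src = os.path.join(hbase_lib, name)
        dst = src + ".bak"
        os.rename(src, dst)
        renamed.append(dst)
    return renamed
```

On this cluster the call would be `disable_duplicate_binding("/usr/local/hbase-1.2.6.1/lib", "/usr/local/hadoop-2.6.5/share/hadoop/common/lib")`, run as the hadoop user. Renamed jars end in `.bak`, so a second run finds nothing to do.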

Handling the following warnings printed when HBase starts

starting master, logging to /usr/local/hbase-1.2.6.1/logs/hbase-root-master-node222.out

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option PermSize=128m; support was removed in 8.0

Java HotSpot(TM) 64-Bit Server VM warning: ignoring option MaxPermSize=128m; support was removed in 8.0

starting regionserver, logging to /usr/local/hbase-1.2.6.1/logs/hbase-root-1-regionserver-node222.out

Edit hbase-env.sh and comment out the following two export lines:

[root@node222 ~]# vi /usr/local/hbase-1.2.6.1/conf/hbase-env.sh

# Configure PermSize. Only needed in JDK7. You can safely remove it for JDK8+

#export HBASE_MASTER_OPTS="$HBASE_MASTER_OPTS -XX:PermSize=128m -XX:MaxPermSize=128m"

#export HBASE_REGIONSERVER_OPTS="$HBASE_REGIONSERVER_OPTS -XX:PermSize=128m -XX:MaxPermSize=128m"

[root@node222 ~]# start-hbase.sh

starting master, logging to /usr/local/hbase-1.2.6.1/logs/hbase-root-master-node222.out

starting regionserver, logging to /usr/local/hbase-1.2.6.1/logs/hbase-root-1-regionserver-node222.out
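Because the cluster runs on JDK 8 (JAVA_HOME=/usr/local/jdk1.8.0_66), the PermSize options are ignored anyway, so commenting them out is safe. A minimal Python sketch of the same edit is shown below, assuming the options appear on uncommented `export` lines as in the stock hbase-env.sh; the function name is hypothetical. It is idempotent, so running it twice changes nothing.

```python
import re

# Matches both -XX:PermSize= and -XX:MaxPermSize=.
PERM_OPT = re.compile(r"-XX:(Max)?PermSize=")


def comment_out_permsize(env_text):
    """Prefix '#' to every uncommented export line that passes a PermSize or
    MaxPermSize JVM option; all other lines are returned unchanged."""
    out = []
    for line in env_text.splitlines(keepends=True):
        if line.lstrip().startswith("export ") and PERM_OPT.search(line):
            out.append("#" + line)
        else:
            out.append(line)
    return "".join(out)
```

Read conf/hbase-env.sh, pass its text through the function and write the result back; since the HBase directory was copied to all three nodes, apply it to each node's copy.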

Source: oschina

Link: https://my.oschina.net/u/3967174/blog/2246987