Highly Efficient Salient Object Detection with 100K Parameters

Abstract

Salient object detection models often demand considerable computation to make a precise prediction for each pixel, which makes them hard to deploy on low-power devices.

In this paper, we aim to relieve the contradiction between computation cost and model performance by improving the network efficiency to a higher degree.

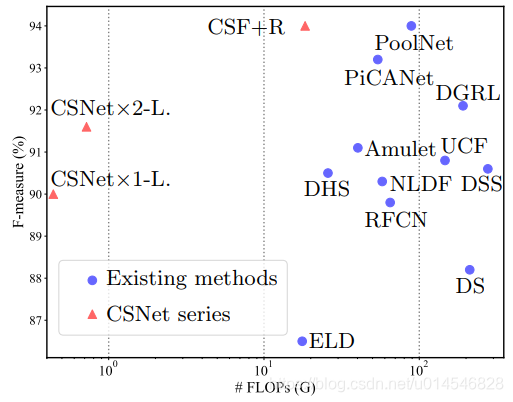

We propose a flexible convolutional module, namely generalized OctConv (gOctConv), to efficiently utilize both in-stage and cross-stages multi-scale features, while reducing the representation redundancy through a novel dynamic weight decay scheme. The dynamic weight decay scheme stably boosts the sparsity of parameters during training and supports a learnable number of channels for each scale in gOctConv, allowing an 80% reduction in parameters with a negligible performance drop. Utilizing gOctConv, we build an extremely light-weighted model, namely CSNet, which achieves performance comparable to large models with about 0.2% of their parameters (100k) on popular salient object detection benchmarks.

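Sentence 1, research area and problem: salient object detection models often need substantial computation to predict every pixel precisely, which makes them hard to deploy on low-power devices.

Sentence 2, what the work does: the paper aims to ease the tension between computation cost and model performance by improving network efficiency.

Sentences 3 to 5, the specific method:

1. A flexible convolutional module, gOctConv, efficiently uses in-stage and cross-stages multi-scale features, while a novel dynamic weight decay scheme reduces representation redundancy.

2. The dynamic weight decay scheme stably increases parameter sparsity during training and makes the number of channels for each scale in gOctConv learnable. This reduces representation redundancy and cuts parameters by 80% with a negligible performance drop.

3. Built on gOctConv, the extremely lightweight CSNet matches the performance of large models on salient object detection benchmarks with about 0.2% of their parameters (100k).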

Introduction

Salient object detection (SOD) is an important computer vision task with various applications in image retrieval [17,5], visual tracking [23], photographic composition [15], image quality assessment [69], and weakly supervised semantic segmentation [25]. While convolutional neural network (CNN) based SOD methods have made significant progress, most of them focus on improving state-of-the-art (SOTA) performance by utilizing fine details and global semantics [64,80,83,76,11], attention [3,2], and edge cues [12,68,85,61]. Despite their strong performance, these models are usually resource-hungry and hardly applicable on low-power devices with limited storage and computational capability. How to build an extremely light-weighted SOD model with SOTA performance is an important but less explored area.

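This paragraph introduces the research area (applications and the basic deep learning approach) and the problem the paper addresses (deep learning based models usually have many parameters).

Salient object detection (SOD) is an important computer vision task with broad applications in image retrieval [17,5], visual tracking [23], photographic composition [15], image quality assessment [69], and weakly supervised semantic segmentation [25]. CNN-based SOD methods have made significant progress, but most of them focus on pushing state-of-the-art (SOTA) performance by exploiting fine details and global semantics [64,80,83,76,11], attention [3,2], and edge cues [12,68,85,61]. Despite their strong performance, these models need a lot of resources and are hard to deploy on low-power devices with limited storage and compute. Building an extremely lightweight SOD model with SOTA performance is an important but under-explored problem.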

The SOD task requires generating accurate prediction scores for every image pixel. It therefore needs both large-scale, high-level feature representations to locate the salient objects correctly and fine, detailed low-level representations for precise boundary refinement [12,67,24]. There are two major challenges in building an extremely light-weighted SOD model. Firstly, serious redundancy can appear when the low-frequency nature of high-level features meets the high output resolution of saliency maps. Secondly, SOTA SOD models [44,72,12,84,46,10] usually rely on ImageNet pre-trained backbone architectures [19,13] to extract features, and these backbones are themselves resource-hungry.

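This paragraph introduces the problems that must be solved to build an extremely lightweight SOD model.

The SOD task has to produce an accurate prediction score for every image pixel, so it needs both large-scale high-level features to locate salient objects and fine low-level features to refine boundaries precisely. Building an extremely lightweight SOD model faces two main challenges.

First, serious redundancy appears when the low-frequency nature of high-level features meets the high resolution of the saliency output.

Second, SOTA SOD models usually rely on ImageNet pre-trained backbones to extract features, and those backbones are resource-hungry in themselves.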

Very recently, the spatial redundancy issue of low-frequency features has also been noticed by Chen et al. [4] in the context of image and video classification. As a replacement for vanilla convolution, they design an OctConv operation that processes feature maps which vary spatially more slowly at a lower spatial resolution, reducing computational cost. However, directly using OctConv [4] to reduce redundancy in the SOD task still faces two major challenges. 1) Utilizing only two scales, i.e., low and high resolutions as in OctConv, is not sufficient to fully reduce redundancy in the SOD task, which needs much stronger multi-scale representation ability than classification tasks. 2) The number of channels for each scale in OctConv is manually selected, which requires considerable effort to re-adjust for a saliency model, since the SOD task requires less category information.

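Related work on the problem the paper addresses:

Chen et al. recently noticed the spatial redundancy of low-frequency features in image and video classification and designed the OctConv operation, which processes slowly varying feature maps at a lower spatial resolution to reduce computation. Using OctConv [4] directly to reduce redundancy in the SOD task still faces two major challenges:

1. The two scales used in OctConv, low and high resolution, are not enough to fully reduce redundancy in SOD, because SOD needs much stronger multi-scale representation ability than classification.

2. The number of channels for each scale in OctConv is chosen by hand. Since SOD needs less category information, re-adjusting it for a saliency model takes considerable effort.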

[4] Drop an octave: Reducing spatial redundancy in convolutional neural networks with octave convolution. (ICCV 2019)

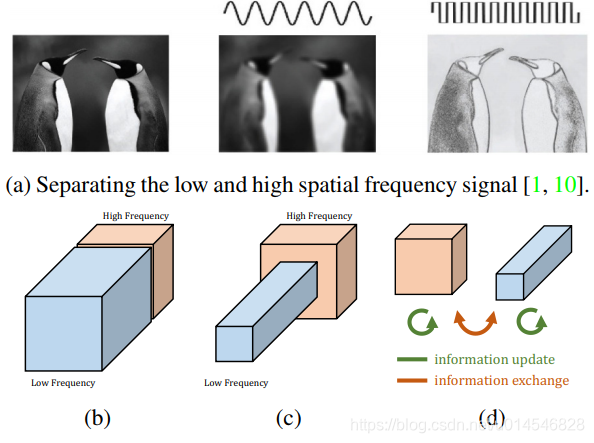

- OctConv motivation:

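(a) The spatial-frequency model of vision suggests that a natural image can be decomposed into a low spatial-frequency part and a high spatial-frequency part. (b) The output maps of a convolution layer can likewise be decomposed and grouped by spatial frequency. (c) The proposed multi-frequency feature representation stores the smoothly varying low-frequency maps in a low-resolution tensor to reduce spatial redundancy. (d) The proposed octave convolution operates directly on this representation: it updates the information within each group and also enables information exchange between groups.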

In this paper, we propose a generalized OctConv (gOctConv) for building an extremely light-weighted SOD model, by extending OctConv in the following aspects: 1) The flexibility to take inputs from an arbitrary number of scales, from both in-stage and cross-stages features, allows a much larger range of multi-scale representations. 2) We propose a dynamic weight decay scheme to support a learnable number of channels for each scale, allowing an 80% reduction in parameters with a negligible performance drop.

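What the paper does about the problem and the shortcomings of prior work:

The paper proposes a generalized OctConv (gOctConv) for building an extremely lightweight SOD model, extending OctConv in the following ways:

1. It can take inputs from an arbitrary number of scales, from both in-stage and cross-stages features, which allows a much larger range of multi-scale representations.

2. It introduces a dynamic weight decay scheme that makes the number of channels for each scale learnable, cutting parameters by 80% with a negligible effect on performance.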

Benefiting from the flexibility and efficiency of gOctConv, we propose a highly light-weighted model, namely CSNet, that fully explores both in-stage and Cross-Stages multi-scale features. As a bonus to the extremely low number of parameters, our CSNet could be directly trained from scratch without ImageNet pre-training, avoiding the unnecessary feature representations for distinguishing between various categories in the recognition task.

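With the basic convolution module designed, it still has to be applied to saliency detection by building a network for the task:

Drawing on the flexibility and efficiency of gOctConv, the paper proposes the highly lightweight CSNet, which fully explores both in-stage and cross-stages multi-scale features. Because its parameter count is so low, CSNet can be trained directly from scratch without ImageNet pre-training, which avoids the feature representations that recognition tasks need for telling categories apart but that SOD does not.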

In summary, we make two major contributions in this paper:

– We propose a flexible convolutional module, namely gOctConv, to efficiently utilize both in-stage and cross-stages multi-scale features for SOD task, while reducing the representation redundancy by a novel dynamic weight decay scheme.

– Utilizing gOctConv, we build an extremely light-weighted SOD model, namely CSNet, which achieves comparable performance with ∼ 0.2% parameters (100k) of SOTA large models on popular SOD benchmarks.

The paper's contributions: gOctConv, the dynamic weight decay scheme, and CSNet.

Light-weighted Network with Generalized OctConv

Overview of Generalized OctConv

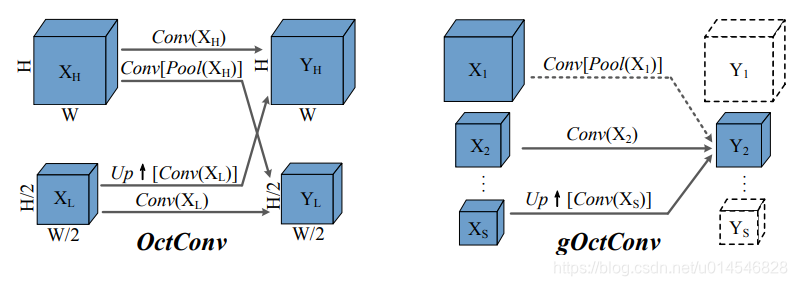

Originally designed as a replacement for the traditional convolution unit, the vanilla OctConv [4] shown in Fig. 2 (a) conducts the convolution operation across high and low scales within a stage. However, only two scales within a stage cannot introduce enough multi-scale information for the SOD task. The channels for each scale in vanilla OctConv are also set manually, which requires considerable effort to re-adjust for a saliency model, since the SOD task requires less category information. Therefore, we propose a generalized OctConv (gOctConv) that allows an arbitrary number of input resolutions from both in-stage and cross-stages conv features, with a learnable number of channels, as shown in Fig. 2 (b). As a generalized version of vanilla OctConv, gOctConv improves on it for the SOD task in the following aspects: 1) Arbitrary numbers of input and output scales are available to support a larger range of multi-scale representations. 2) In addition to in-stage features, gOctConv can also process cross-stages features with arbitrary scales from the feature extractor. 3) gOctConv supports learnable channels for each scale through our proposed dynamic weight decay assisted pruning scheme. 4) Cross-scale feature interaction can be turned off, which allows flexible control over complexity. The flexible gOctConv admits many instances under different design requirements. We introduce the different instances of gOctConv in detail in the following light-weighted model design.

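The vanilla OctConv [4] on the left of Fig. 2 was designed as a replacement for the traditional convolution unit and convolves across high and low scales within a stage. Two scales within a stage, however, do not supply enough multi-scale information for the SOD task [the problem]. The channels for each scale in vanilla OctConv are also set by hand, and since SOD needs less category information, re-adjusting them for a saliency model takes considerable effort [why the problem arises]. The paper therefore proposes a generalized OctConv (gOctConv) that accepts an arbitrary number of input resolutions from both in-stage and cross-stages conv features, as shown in Fig. 2 (b). gOctConv improves on vanilla OctConv for SOD in the following ways:

1. Arbitrary numbers of input and output scales support a larger range of multi-scale representations.

2. In addition to in-stage features, gOctConv can process cross-stages features at arbitrary scales from the feature extractor.

3. gOctConv supports learnable channels for each scale through the proposed dynamic weight decay assisted pruning scheme.

4. Cross-scale feature interaction can be turned off, which allows flexible control over complexity.

The next two subsections cover two of the three contributions:

1. gOctConvs: Light-weighted Model Composed of gOctConvs

2. Dynamic weight decay scheme: Learnable Channels for gOctConv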

Light-weighted Model Composed of gOctConvs

- Overview.

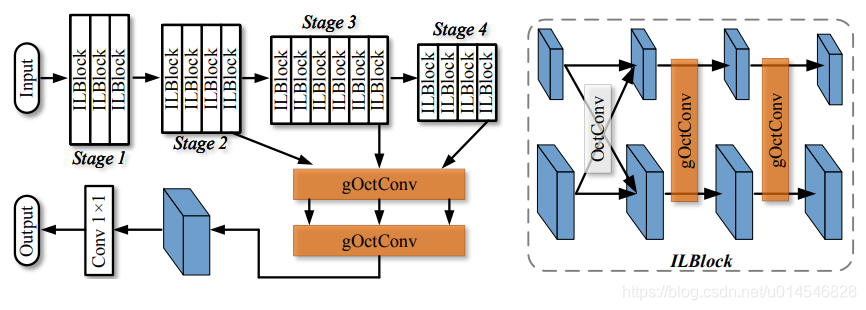

As shown in Fig. 3, our proposed light-weighted network, consisting of a feature extractor and a cross-stages fusion part, synchronously processes features with multiple scales. The feature extractor is stacked with our proposed in-layer multi-scale block, namely ILBlocks, and is split into 4 stages according to the resolution of feature maps, where each stage has 3, 4, 6, and 4 ILBlocks, respectively. The cross-stages fusion part, composed of gOctConvs, processes features from stages of the feature extractor to get a high-resolution output.

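As shown in Fig. 3, the proposed lightweight network consists of a feature extractor and a cross-stages fusion part and processes features at multiple scales simultaneously. The feature extractor is a stack of in-layer multi-scale blocks (ILBlocks) and is split into 4 stages according to the resolution of the feature maps, with 3, 4, 6, and 4 ILBlocks per stage. The cross-stages fusion part, composed of gOctConvs, processes features from the stages of the feature extractor to produce a high-resolution output.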

- In-layer Multi-scale Block.

ILBlock enhances the multi-scale representation of features within a stage. gOctConvs are utilized to introduce multiple scales within ILBlock. Vanilla OctConv requires about 60% of the FLOPs [4] to achieve performance similar to standard convolution, which is not enough for our objective of designing a highly light-weighted model. Interacting features across different scales in every layer is unnecessary, so removing that interaction saves computational cost. Therefore, we apply an instance of gOctConv in which each input channel corresponds to an output channel with the same resolution, by eliminating the cross-scale operations. A depthwise operation within each scale is utilized to further save computational cost. This instance of gOctConv only requires about 1/channel FLOPs compared with vanilla OctConv. ILBlock is composed of a vanilla OctConv and two 3 × 3 gOctConvs as shown in Fig. 3. The vanilla OctConv interacts features across two scales, and the gOctConvs extract features within each scale. Multi-scale features within a block are thus processed separately and interacted alternately. Each convolution is followed by BatchNorm [30] and PReLU [18]. Initially, we roughly double the channels of ILBlocks as the resolution decreases, except for the last two stages, which have the same channel number. Unless otherwise stated, the channels for different scales in ILBlocks are set evenly. Learnable channels of OctConvs are then obtained through the scheme described in Sec. 3.3.

Fig. 3. Illustration of our salient object detection pipeline, which uses gOctConv to extract both in-stage and cross-stages multi-scale features in a highly efficient way.

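This paragraph introduces the In-layer Multi-scale Block.

ILBlock enhances the multi-scale representation of features within a stage, and gOctConvs are what introduce the multiple scales inside it. Vanilla OctConv needs about 60% of the FLOPs [4] to match standard convolution, which is not enough for a highly lightweight model. Interacting features across scales in every layer is unnecessary, so the paper removes the cross-scale operations: each input channel maps to an output channel at the same resolution. A depthwise operation within each scale saves further computation. Compared with vanilla OctConv, this gOctConv instance needs only about 1/channel of the FLOPs. ILBlock consists of one vanilla OctConv and two 3 × 3 gOctConvs, as shown in Fig. 3. The vanilla OctConv interacts features across the two scales, while the gOctConvs extract features within each scale, so multi-scale features inside a block are processed separately and interacted alternately. Each convolution is followed by BatchNorm [30] and PReLU [18]. Initially the channels of ILBlocks roughly double as the resolution decreases, except that the last two stages have the same channel number. Unless otherwise stated, the channels for different scales in ILBlocks are set evenly.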
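To make the "about 1/channel FLOPs" claim concrete, here is a rough multiply-accumulate (MAC) count, offered as a sketch rather than the paper's own accounting. It assumes a two-scale layout where the low scale runs at half the height and width, equal input and output channels per scale, and the four-path cost structure of vanilla OctConv (high to high, high to low, low to high, low to low). The function names and the 64-channel, 32 × 32 setting are hypothetical.

```python
def vanilla_octconv_macs(h, w, k, c_high, c_low):
    """MACs of a two-scale OctConv with four dense paths (assumed layout)."""
    full = h * w                      # positions at high resolution
    quarter = (h // 2) * (w // 2)     # positions at low resolution
    high_to_high = full * c_high * c_high
    high_to_low = quarter * c_high * c_low   # pooled first, convolved at low res
    low_to_high = quarter * c_low * c_high   # convolved at low res, then upsampled
    low_to_low = quarter * c_low * c_low
    return k * k * (high_to_high + high_to_low + low_to_high + low_to_low)


def depthwise_goctconv_macs(h, w, k, c_high, c_low):
    """MACs when cross-scale paths are removed and each scale is depthwise."""
    full = h * w
    quarter = (h // 2) * (w // 2)
    return k * k * (full * c_high + quarter * c_low)


# Hypothetical setting: 64 channels split evenly across two scales, 3x3 kernel.
dense = vanilla_octconv_macs(32, 32, 3, 32, 32)
light = depthwise_goctconv_macs(32, 32, 3, 32, 32)
ratio = light / dense
print(dense, light, ratio)
```

Under these assumptions the ratio comes out at roughly 1/45, the same order of magnitude as 1/64 for a 64-channel layer. The exact figure depends on how channels are split between scales.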

- Cross-stages Fusion.

To retain a high output resolution, common methods keep a high feature resolution at the high levels of the feature extractor and construct complex multi-level aggregation modules, which inevitably increases computational redundancy. While the value of multi-level aggregation is widely recognized [16,43], how to achieve it efficiently and concisely remains challenging. Instead, we simply use gOctConvs to fuse multi-scale features from the stages of the feature extractor and generate the high-resolution output. As a trade-off between efficiency and performance, features from the last three stages are used. A 1 × 1 gOctConv takes features with different scales from the last conv of each stage as input and conducts a cross-stages convolution to output features with different scales. To extract multi-scale features at a granular level, each scale of features is processed by a group of parallel convolutions with different dilation rates. The features are then sent to another 1 × 1 gOctConv to generate features at the highest resolution. Finally, a standard 1 × 1 conv outputs the predicted saliency map. Learnable channels of the gOctConvs in this part are obtained in the same way.

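This paragraph explains how cross-stages multi-level feature aggregation is implemented.

To keep a high output resolution, common methods retain high-resolution features at the high levels of the feature extractor and build complex multi-level aggregation modules, which inevitably adds computational redundancy. Achieving multi-level aggregation efficiently and concisely remains a challenge. Instead, the paper uses gOctConvs to fuse multi-scale features from the stages of the feature extractor and generate the high-resolution output. As a trade-off between efficiency and performance, features from the last three stages are used. A 1 × 1 gOctConv takes the features at different scales from the last conv of each stage as input and performs a cross-stages convolution that outputs features at different scales. To extract multi-scale features at a granular level, each scale is processed by a group of parallel convolutions with different dilation rates. The features then go to another 1 × 1 gOctConv, which generates features at the highest resolution. Finally, a standard 1 × 1 conv outputs the predicted saliency map.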

Learnable Channels for gOctConv

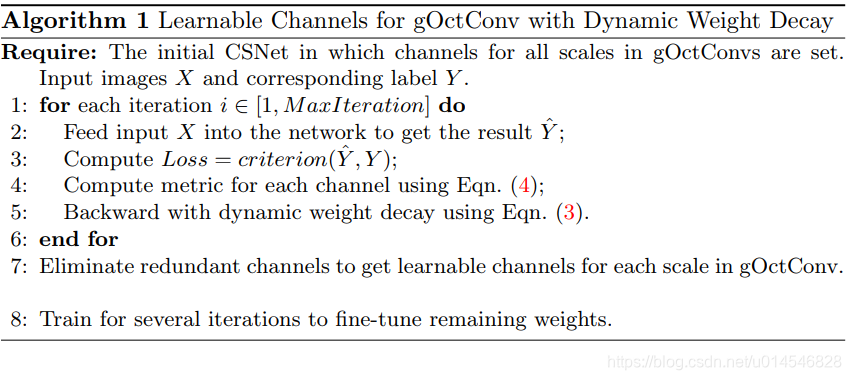

We propose to get learnable channels for each scale in gOctConv by utilizing our proposed dynamic weight decay assisted pruning during training. Dynamic weight decay maintains a stable weights distribution among channels while introducing sparsity, helping pruning algorithms to eliminate redundant channels with negligible performance drop.

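The proposed dynamic weight decay assisted pruning is used during training to obtain learnable channels for each scale in gOctConv. Dynamic weight decay keeps the weight distribution among channels stable while introducing sparsity, which helps pruning algorithms remove redundant channels with a negligible performance drop.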

- Dynamic Weight Decay.

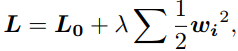

The commonly used regularization trick weight decay [33,77] endows CNNs with better generalization performance. Mehta et al. [53] show that weight decay introduces sparsity into CNNs, which helps to prune unimportant weights. Training with weight decay drives the unimportant weights in a CNN to values close to zero. Thus, weight decay has been widely used in pruning algorithms to introduce sparsity [38,50,48,22,47,21]. The common implementation of weight decay adds L2 regularization to the loss function, which can be written as follows:

$$L = L_0 + \lambda \sum_i \frac{1}{2} \lVert w_i \rVert_2^2$$

where L0 is the loss for the specific task, wi is the weight of ith layer, and λ is the weight for weight decay. During back propagation, the weight wi is updated as

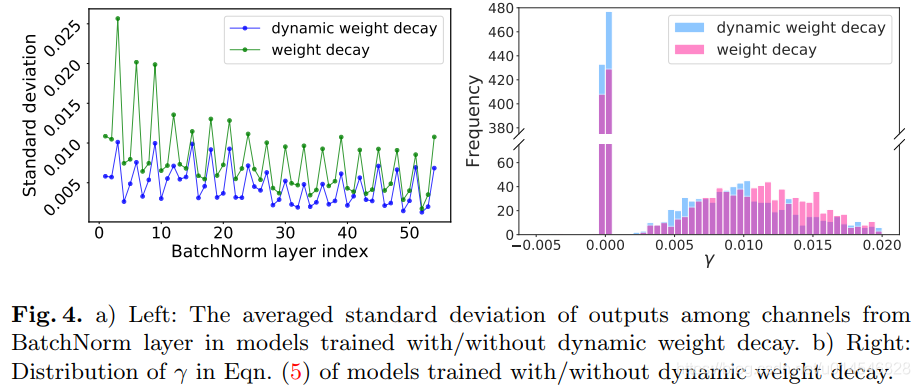

where ∇fi (wi) is the gradient to be updated, and λwi is the decay term, which is only associated with the weight itself. Applying a large decay term enhances sparsity, but it also inevitably enlarges the diversity of weights among channels. Fig. 4 (a) shows that diverse weights cause an unstable distribution of outputs among channels. Ruan et al. [8] reveal that channels with diverse outputs are more likely to contain noise, leading to biased representations for subsequent filters. Attention mechanisms have been widely used to re-calibrate the diverse outputs, at the price of extra blocks and computational cost [29,8]. We propose to relieve diverse outputs among channels with no extra cost during inference. We argue that the diverse outputs are mainly caused by decay terms suppressing weights indiscriminately. Therefore, we propose to adjust the weight decay based on specific features of certain channels. Specifically, during back propagation, decay terms are dynamically changed according to the features of certain channels. The weight update of the proposed dynamic weight decay is written as

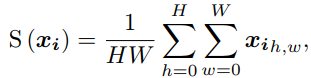

where λd is the weight of dynamic weight decay, xi denotes the features calculated by wi, and S (xi) is the metric of the feature, which can have multiple definitions depending on the task. In this paper, our goal is to stabilize the weight distribution among channels according to features. Thus, we simply use the global average pooling (GAP) [42] as the metric for a certain channel:

$$S(x_i) = \frac{1}{HW} \sum_{h=0}^{H} \sum_{w=0}^{W} x_i^{h,w}$$

where H and W are the height and width of the feature map xi. The dynamic weight decay with the GAP metric ensures that the weights producing large value features are suppressed, giving a compact and stable weights and outputs distribution as revealed in Fig. 4. Also, the metric can be defined as other forms to suit certain tasks as we will study in our future work. Please refer to Sec. 4.3 for a more detailed interpretation of dynamic weight decay.

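The preceding passage covers the usual implementation of weight decay, which adds L2 regularization to the loss function, and the resulting update during back propagation. Weight decay is a common regularization trick that gives CNNs better generalization. It also introduces sparsity into CNNs, which helps prune unimportant weights: training with weight decay drives unimportant weights toward zero. For this reason weight decay is widely used in pruning algorithms to introduce sparsity.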
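As a quick check on how the decay term arises, the following derivation assumes the L2 penalty carries the usual factor of 1/2, identifies ∇fi (wi) with the gradient of the task loss L0, and absorbs the step size into the gradient and λ, as the update in the text appears to do. Differentiating the regularized loss with respect to wi gives

$$\frac{\partial L}{\partial w_i} = \frac{\partial L_0}{\partial w_i} + \lambda \frac{\partial}{\partial w_i}\left(\frac{1}{2} \lVert w_i \rVert_2^2\right) = \nabla f_i(w_i) + \lambda w_i$$

so a gradient step subtracts both terms:

$$w_i \leftarrow w_i - \nabla f_i(w_i) - \lambda w_i$$

The decay term λwi depends only on the weight itself. Dynamic weight decay changes exactly this property.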

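The paper proposes to relieve diverse outputs among channels at no extra cost during inference. It attributes the diverse outputs mainly to decay terms suppressing all weights indiscriminately, and therefore adjusts the weight decay according to the features of each channel. Concretely, during back propagation the decay term changes dynamically with the features of the channel. Compared with the standard update, the dynamic weight decay update has an extra factor S (xi). S (xi) is a metric of the feature and can be defined differently depending on the task. The goal here is to stabilize the weight distribution among channels according to their features, so the paper uses global average pooling (GAP) [42] as the per-channel metric, as in the last formula above. With the GAP metric, dynamic weight decay suppresses the weights that produce large-valued features, which gives the compact and stable distributions of weights and outputs shown in Fig. 4.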
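The difference between the two updates can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the authors' implementation: it assumes the dynamic update has the form wi ← wi − ∇fi (wi) − λd S (xi) wi, as the text describes, treats each channel as a single scalar weight, and uses hypothetical function names and toy values.

```python
def gap(feature_map):
    """Global average pooling S(x_i): mean of an H x W map given as a list of rows."""
    height = len(feature_map)
    width = len(feature_map[0])
    return sum(sum(row) for row in feature_map) / (height * width)


def weight_decay_step(weights, grads, lam):
    """Standard decay: w_i <- w_i - grad_i - lam * w_i, same rate for every channel."""
    return [w - g - lam * w for w, g in zip(weights, grads)]


def dynamic_weight_decay_step(weights, grads, features, lam_d):
    """Dynamic decay (assumed form): w_i <- w_i - grad_i - lam_d * S(x_i) * w_i."""
    return [
        w - g - lam_d * gap(x) * w
        for w, g, x in zip(weights, grads, features)
    ]


# Two hypothetical channels with equal weights; channel 0 produces larger features.
weights = [1.0, 1.0]
grads = [0.0, 0.0]  # task gradients zeroed to isolate the decay term
features = [
    [[4.0, 4.0], [4.0, 4.0]],  # S(x_0) = 4.0
    [[0.5, 0.5], [0.5, 0.5]],  # S(x_1) = 0.5
]

static = weight_decay_step(weights, grads, lam=0.1)
dynamic = dynamic_weight_decay_step(weights, grads, features, lam_d=0.1)
print(static)
print(dynamic)
```

With standard decay both weights shrink by the same amount. With the dynamic term, the channel that produces larger features is decayed more strongly, which is the mechanism the text credits for a more compact output distribution.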

- Learnable channels with model compression.

Now, we incorporate dynamic weight decay with pruning algorithms to remove redundant weights, so as to get learnable channels for each scale in gOctConvs. In this paper, we follow [48] and use the weight of the BatchNorm layer as the indicator of channel importance. The BatchNorm operation [30] is written as follows:

$$y = \frac{x - E(x)}{\sqrt{Var(x) + \epsilon}} \gamma + \beta$$

where x and y are input and output features, E(x) and Var(x) are the mean and variance, respectively, and ε is a small factor to avoid zero variance. γ and β are learned factors. We apply the dynamic weight decay to γ during training. Fig. 4 (b) reveals that there is a clear gap between important and redundant weights, and unimportant weights are suppressed to nearly zero (wi < 1e−20). Thus, we can easily remove channels whose γ is less than a small threshold. In this way, the learnable channels for the features at each resolution in gOctConv are obtained. The algorithm for getting the learnable channels of gOctConvs is illustrated in Alg. 1.

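The paper combines dynamic weight decay with a pruning algorithm to remove redundant weights and thereby obtain learnable channels for each scale in gOctConvs. Following [48], it uses the weight of the BatchNorm layer as the indicator of channel importance, as in the last formula above.

γ and β are learned factors. Dynamic weight decay is applied to γ during training. Fig. 4 (b) shows a clear gap between important and redundant weights, with unimportant weights suppressed to nearly zero (wi < 1e−20). Channels whose γ falls below a small threshold can therefore be removed, which yields the learnable channels for the features at each resolution in gOctConv. Alg. 1 gives the procedure for obtaining the learnable channels of gOctConvs.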
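The channel selection step can be sketched as a threshold on the BatchNorm γ values. This is a minimal illustration, not Alg. 1 itself: the function names, the scale labels, the toy γ values, and the use of the absolute value are assumptions, and the threshold of 1e−20 is borrowed from the magnitude quoted in the text rather than a documented setting.

```python
def keep_indices(gammas, threshold):
    """Indices of channels whose BatchNorm scale is not negligibly small."""
    return [i for i, g in enumerate(gammas) if abs(g) >= threshold]


def learned_channels(gammas_by_scale, threshold=1e-20):
    """Per-scale list of surviving channels after thresholding gamma."""
    return {
        scale: keep_indices(gammas, threshold)
        for scale, gammas in gammas_by_scale.items()
    }


# Hypothetical gamma values after training with dynamic weight decay.
gammas_by_scale = {
    "high": [0.8, 1e-25, 0.3, 0.0],
    "low": [1e-30, 0.5],
}

kept = learned_channels(gammas_by_scale)
channel_counts = {scale: len(indices) for scale, indices in kept.items()}
print(kept)
print(channel_counts)
```

The surviving count per scale is what the text calls the learnable number of channels: it is an outcome of training plus pruning rather than a hand-set hyperparameter.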

Source: oschina

Link: https://my.oschina.net/u/4360424/blog/4939442